正在加载图片...

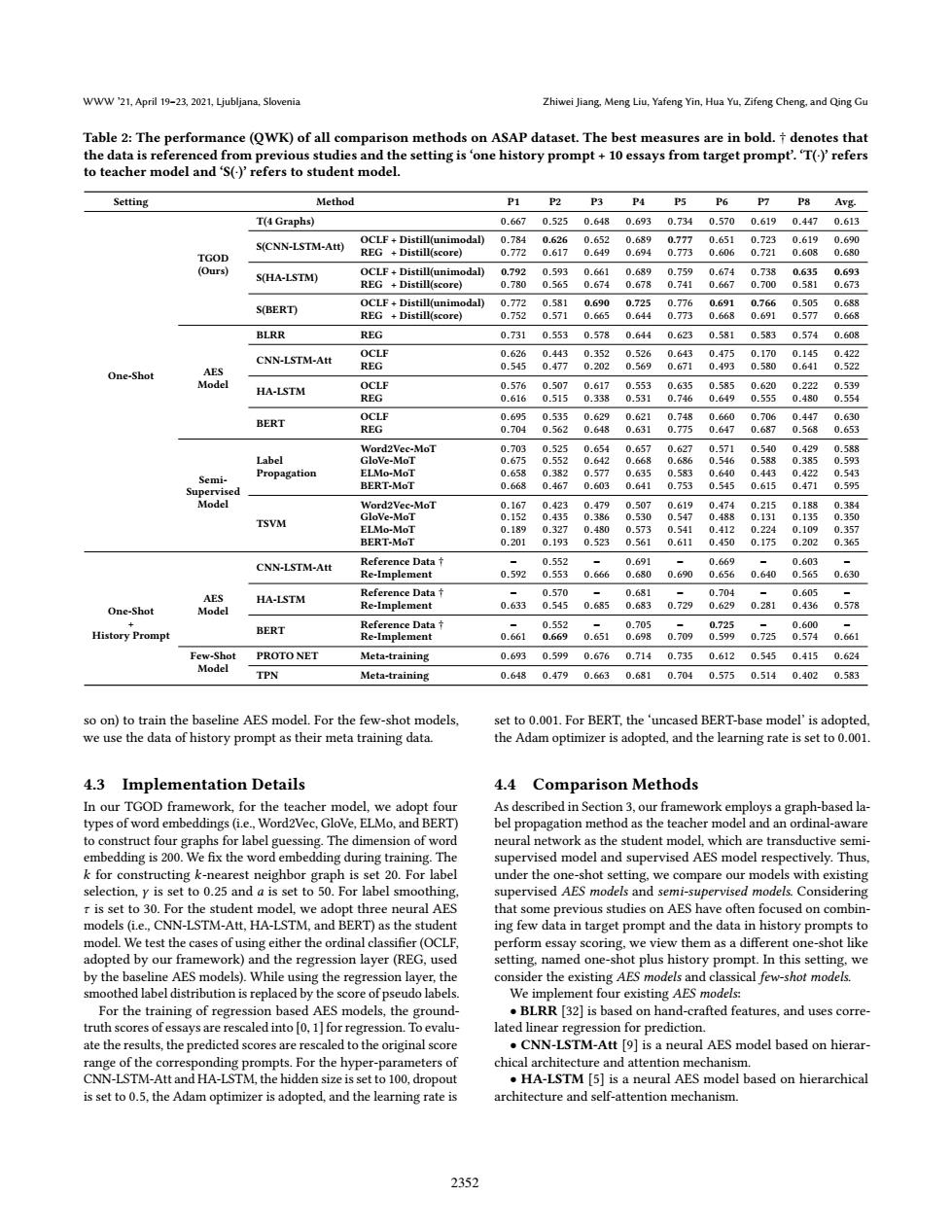

WWW '21,April 19-23,2021,Ljubljana,Slovenia Zhiwei Jiang,Meng Liu,Yafeng Yin,Hua Yu,Zifeng Cheng,and Qing Gu Table 2:The performance (QWK)of all comparison methods on ASAP dataset.The best measures are in bold.denotes that the data is referenced from previous studies and the setting is'one history prompt+10 essays from target prompt'.T()'refers to teacher model and'S()'refers to student model. Setting Method P1 P2 P3 P4 P5 P6 P7 Avg. T(4 Graphs) 0.6670.5250.6480.6930.7340.5700.6190.4470.613 OCLF+Distill(unimodal) 0.784 0.626 0.6520.689 0.7770.651 0.723 0.619 0.690 S(CNN-LSTM-Att) TGOD REG +Distill(score) 0.7720.6170.6490.6940.7730.6060.7210.6080.680 (Ours) 0CLF+Distill(unimoda0.7920.5930.6610.6890.759 0.6740.7380.635 0.693 S(HA-LSTM) REG Distill(score) 0.7800.565 0.6740.6780.741 0.6670.700 0.581 0.673 0CLF+Distill(unimodal)0.7720.5810.6900.7250.7760.691 S(BERT) 0.7660.5050.688 REG Distill(score) 0.7520.571 0.6650.644 0.773 0.6680.691 0.577 0.668 BLRR REG 0.7310.5530.5780.6440.6230.5810.5830.5740.608 OCLF 0.626 0.4430.352 0.5260.6430.475 0.1700.145 0.422 CNN-LSTM-Att REG 0.5450.477 0.2020.5690.6710.4930.580 0.641 0.522 One-Shot AES Model OCLE 0.576 05070617.0553 0.6350585 0.6200222 0.539 HA-LSTM REG 0.616 0.515 0.338 0.5310.746 0.649 0.555 0.480 0.554 OCLF 0.6950.5350.629 0.6210.7480.6600.706 0447 0.630 BERT REG 0.704 0.562 0.648 0.631 0.775 0.647 0.687 0.568 0.653 Word2Vec-MoT 0.703 0.525 0654 0.657 0.627 0571 0540 0429 0588 Label GloVe-MoT 0.675 0.552 0.642 0.668 0.686 0.346 0.588 0.385 0.593 Propagation ELMo-MoT 0658 0.382 0577 0.635 Semi- 0.583 0.640 0.443 0.422 0.543 Supervised BERT-MoT 0.668 0.467 0.603 0.641 0.753 0.545 0.615 0.471 0.595 Model Word2Vec-MoT 0.167 0423 0.479 0.507 0619 0474 0215 0188 0384 GloVe-MoT 0.152 0.435 0.530 0.547 0.488 0.131 0.135 0.350 TSVM ELMo-MoT 0.189 0327 0.480 0573 0.5410.412 0.224 0.109 0357 BERT-MoT 0.201 0.193 0.523 0.561 0.611 0.450 0.175 0.202 0.365 Reference Data 0552 0.691 0669 0603 CNN-LSTM-Att Re-Implement 0.592 0.553 0.666 0.680 0.6900.656 0.6400.565 0.630 Reference Data 0.570 0.681 0.704 0605 AES HA-LSTM One-Shot Re-Implement 0.633 0.545 0.6850.6830.7290.6290.2810.436 0.578 Model Reference Data 0.552 0.705 0.725 0.600 History Prompt BERT Re-Implement 0.6610.669 0.6510.6980.7090.5990.7250.5740.661 Few-Shot PROTO NET Meta-training 0.6930.599 0.6760.7140.7350.6120.5450.4150.624 Model TPN Meta-training 0.648 0.479 0.6630.6810.704 0.575 0.514 0.402 0.583 so on)to train the baseline AES model.For the few-shot models set to 0.001.For BERT,the 'uncased BERT-base model'is adopted, we use the data of history prompt as their meta training data. the Adam optimizer is adopted,and the learning rate is set to 0.001 4.3 Implementation Details 4.4 Comparison Methods In our TGOD framework,for the teacher model,we adopt four As described in Section 3.our framework employs a graph-based la- types of word embeddings(i.e.,Word2Vec,GloVe,ELMo,and BERT) bel propagation method as the teacher model and an ordinal-aware to construct four graphs for label guessing.The dimension of word neural network as the student model,which are transductive semi- embedding is 200.We fix the word embedding during training.The supervised model and supervised AES model respectively.Thus. k for constructing k-nearest neighbor graph is set 20.For label under the one-shot setting,we compare our models with existing selection,y is set to 0.25 and a is set to 50.For label smoothing. supervised AES models and semi-supervised models.Considering r is set to 30.For the student model,we adopt three neural AES that some previous studies on AES have often focused on combin- models (i.e.,CNN-LSTM-Att,HA-LSTM,and BERT)as the student ing few data in target prompt and the data in history prompts to model.We test the cases of using either the ordinal classifier(OCLF, perform essay scoring,we view them as a different one-shot like adopted by our framework)and the regression layer(REG,used setting.named one-shot plus history prompt.In this setting,we by the baseline AES models).While using the regression layer,the consider the existing AES models and classical few-shot models. smoothed label distribution is replaced by the score of pseudo labels. We implement four existing AES models: For the training of regression based AES models,the ground- BLRR [32]is based on hand-crafted features,and uses corre- truth scores of essays are rescaled into [0,1]for regression.To evalu- lated linear regression for prediction. ate the results,the predicted scores are rescaled to the original score CNN-LSTM-Att [9]is a neural AES model based on hierar- range of the corresponding prompts.For the hyper-parameters of chical architecture and attention mechanism. CNN-LSTM-Att and HA-LSTM,the hidden size is set to 100,dropout .HA-LSTM [5]is a neural AES model based on hierarchical is set to 0.5,the Adam optimizer is adopted,and the learning rate is architecture and self-attention mechanism. 2352WWW ’21, April 19–23, 2021, Ljubljana, Slovenia Zhiwei Jiang, Meng Liu, Yafeng Yin, Hua Yu, Zifeng Cheng, and Qing Gu Table 2: The performance (QWK) of all comparison methods on ASAP dataset. The best measures are in bold. † denotes that the data is referenced from previous studies and the setting is ‘one history prompt + 10 essays from target prompt’. ‘T(·)’ refers to teacher model and ‘S(·)’ refers to student model. Setting Method P1 P2 P3 P4 P5 P6 P7 P8 Avg. One-Shot TGOD (Ours) T(4 Graphs) 0.667 0.525 0.648 0.693 0.734 0.570 0.619 0.447 0.613 S(CNN-LSTM-Att) OCLF + Distill(unimodal) 0.784 0.626 0.652 0.689 0.777 0.651 0.723 0.619 0.690 REG + Distill(score) 0.772 0.617 0.649 0.694 0.773 0.606 0.721 0.608 0.680 S(HA-LSTM) OCLF + Distill(unimodal) 0.792 0.593 0.661 0.689 0.759 0.674 0.738 0.635 0.693 REG + Distill(score) 0.780 0.565 0.674 0.678 0.741 0.667 0.700 0.581 0.673 S(BERT) OCLF + Distill(unimodal) 0.772 0.581 0.690 0.725 0.776 0.691 0.766 0.505 0.688 REG + Distill(score) 0.752 0.571 0.665 0.644 0.773 0.668 0.691 0.577 0.668 AES Model BLRR REG 0.731 0.553 0.578 0.644 0.623 0.581 0.583 0.574 0.608 CNN-LSTM-Att OCLF 0.626 0.443 0.352 0.526 0.643 0.475 0.170 0.145 0.422 REG 0.545 0.477 0.202 0.569 0.671 0.493 0.580 0.641 0.522 HA-LSTM OCLF 0.576 0.507 0.617 0.553 0.635 0.585 0.620 0.222 0.539 REG 0.616 0.515 0.338 0.531 0.746 0.649 0.555 0.480 0.554 BERT OCLF 0.695 0.535 0.629 0.621 0.748 0.660 0.706 0.447 0.630 REG 0.704 0.562 0.648 0.631 0.775 0.647 0.687 0.568 0.653 SemiSupervised Model Label Propagation Word2Vec-MoT 0.703 0.525 0.654 0.657 0.627 0.571 0.540 0.429 0.588 GloVe-MoT 0.675 0.552 0.642 0.668 0.686 0.546 0.588 0.385 0.593 ELMo-MoT 0.658 0.382 0.577 0.635 0.583 0.640 0.443 0.422 0.543 BERT-MoT 0.668 0.467 0.603 0.641 0.753 0.545 0.615 0.471 0.595 TSVM Word2Vec-MoT 0.167 0.423 0.479 0.507 0.619 0.474 0.215 0.188 0.384 GloVe-MoT 0.152 0.435 0.386 0.530 0.547 0.488 0.131 0.135 0.350 ELMo-MoT 0.189 0.327 0.480 0.573 0.541 0.412 0.224 0.109 0.357 BERT-MoT 0.201 0.193 0.523 0.561 0.611 0.450 0.175 0.202 0.365 One-Shot + History Prompt AES Model CNN-LSTM-Att Reference Data † − 0.552 − 0.691 − 0.669 − 0.603 − Re-Implement 0.592 0.553 0.666 0.680 0.690 0.656 0.640 0.565 0.630 HA-LSTM Reference Data † − 0.570 − 0.681 − 0.704 − 0.605 − Re-Implement 0.633 0.545 0.685 0.683 0.729 0.629 0.281 0.436 0.578 BERT Reference Data † − 0.552 − 0.705 − 0.725 − 0.600 − Re-Implement 0.661 0.669 0.651 0.698 0.709 0.599 0.725 0.574 0.661 Few-Shot Model PROTO NET Meta-training 0.693 0.599 0.676 0.714 0.735 0.612 0.545 0.415 0.624 TPN Meta-training 0.648 0.479 0.663 0.681 0.704 0.575 0.514 0.402 0.583 so on) to train the baseline AES model. For the few-shot models, we use the data of history prompt as their meta training data. 4.3 Implementation Details In our TGOD framework, for the teacher model, we adopt four types of word embeddings (i.e., Word2Vec, GloVe, ELMo, and BERT) to construct four graphs for label guessing. The dimension of word embedding is 200. We fix the word embedding during training. The k for constructing k-nearest neighbor graph is set 20. For label selection, γ is set to 0.25 and a is set to 50. For label smoothing, τ is set to 30. For the student model, we adopt three neural AES models (i.e., CNN-LSTM-Att, HA-LSTM, and BERT) as the student model. We test the cases of using either the ordinal classifier (OCLF, adopted by our framework) and the regression layer (REG, used by the baseline AES models). While using the regression layer, the smoothed label distribution is replaced by the score of pseudo labels. For the training of regression based AES models, the groundtruth scores of essays are rescaled into [0, 1] for regression. To evaluate the results, the predicted scores are rescaled to the original score range of the corresponding prompts. For the hyper-parameters of CNN-LSTM-Att and HA-LSTM, the hidden size is set to 100, dropout is set to 0.5, the Adam optimizer is adopted, and the learning rate is set to 0.001. For BERT, the ‘uncased BERT-base model’ is adopted, the Adam optimizer is adopted, and the learning rate is set to 0.001. 4.4 Comparison Methods As described in Section 3, our framework employs a graph-based label propagation method as the teacher model and an ordinal-aware neural network as the student model, which are transductive semisupervised model and supervised AES model respectively. Thus, under the one-shot setting, we compare our models with existing supervised AES models and semi-supervised models. Considering that some previous studies on AES have often focused on combining few data in target prompt and the data in history prompts to perform essay scoring, we view them as a different one-shot like setting, named one-shot plus history prompt. In this setting, we consider the existing AES models and classical few-shot models. We implement four existing AES models: • BLRR [32] is based on hand-crafted features, and uses correlated linear regression for prediction. • CNN-LSTM-Att [9] is a neural AES model based on hierarchical architecture and attention mechanism. • HA-LSTM [5] is a neural AES model based on hierarchical architecture and self-attention mechanism. 2352