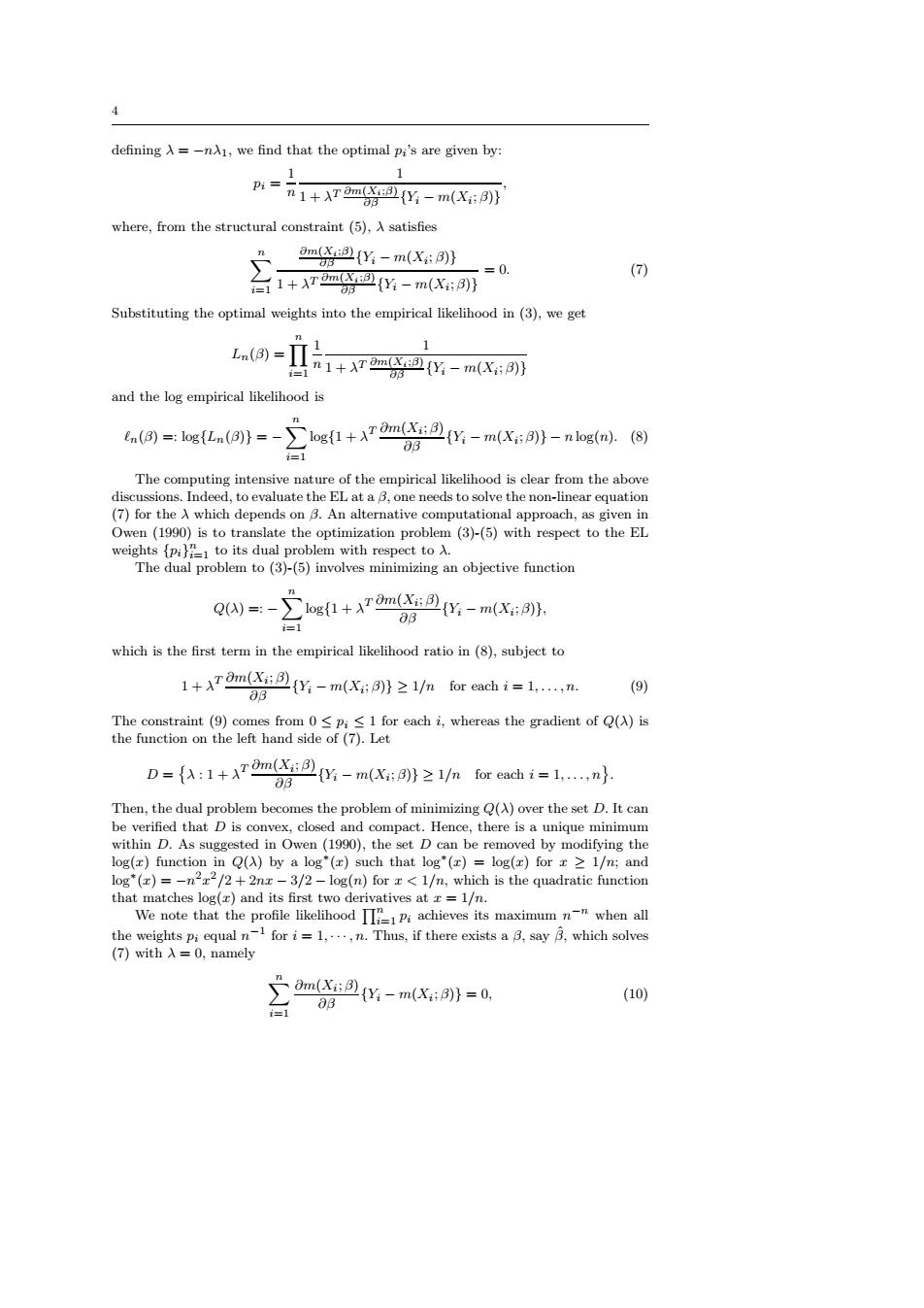

defining $\lambda = -n\lambda_1$, we find that the optimal $p_i$'s are given by:

$$p_i = \frac{1}{n}\,\frac{1}{1 + \lambda^T \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\}},$$

where, from the structural constraint (5), $\lambda$ satisfies

$$\sum_{i=1}^{n} \frac{\frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\}}{1 + \lambda^T \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\}} = 0. \tag{7}$$
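To make the role of $\lambda$ concrete, here is a minimal sketch of solving equation (7) numerically and recovering the weights $p_i$. It rests on several assumptions that are not in the text: $\beta$ is scalar, the model is the toy choice $m(x;\beta) = \beta x$ (so $\partial m/\partial\beta = x$), the data are made up, and the root is found by plain bisection, which is not necessarily the solver the authors have in mind. The names `el_lambda` and `el_weights` are hypothetical.

```python
def el_lambda(g, tol=1e-12, max_iter=200):
    """Solve sum_i g_i / (1 + lam * g_i) = 0 for a scalar lam by bisection.

    g holds the scalar terms g_i = dm/dbeta(X_i) * (Y_i - m(X_i; beta)).
    """
    g_min, g_max = min(g), max(g)
    if not (g_min < 0.0 < g_max):
        raise ValueError("the g_i must take both signs for a solution to exist")
    # On the open interval (lo, hi) every denominator 1 + lam * g_i is positive,
    # and the left-hand side of (7) is strictly decreasing in lam.
    lo, hi = -1.0 / g_max, -1.0 / g_min
    for _ in range(max_iter):
        lam = 0.5 * (lo + hi)
        f = sum(gi / (1.0 + lam * gi) for gi in g)
        if f > 0.0:
            lo = lam   # root lies to the right
        else:
            hi = lam   # root lies to the left (or at lam)
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def el_weights(g, lam):
    """Optimal weights p_i = (1/n) / (1 + lam * g_i)."""
    n = len(g)
    return [1.0 / (n * (1.0 + lam * gi)) for gi in g]


# Toy data and a trial value of beta (both purely illustrative).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [1.1, 1.9, 3.2, 3.9, 5.1]
beta = 1.0

# Assumed model m(x; beta) = beta * x, so g_i = x_i * (y_i - beta * x_i).
g = [xi * (yi - beta * xi) for xi, yi in zip(x, y)]

lam = el_lambda(g)
p = el_weights(g, lam)
print("lambda:", lam)
print("weights:", p)
```

At the solution the weights sum to one and satisfy $\sum_i p_i g_i = 0$, which is a convenient sanity check. For a vector-valued $\beta$ the same equation becomes a system in $\lambda$, and bisection would have to be replaced by a multivariate root finder.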
Substituting the optimal weights into the empirical likelihood in (3), we get

$$L_n(\beta) = \prod_{i=1}^{n} \frac{1}{n}\,\frac{1}{1 + \lambda^T \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\}}$$

and the log empirical likelihood is

$$\ell_n(\beta) =: \log\{L_n(\beta)\} = -\sum_{i=1}^{n} \log\Big[1 + \lambda^T \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\}\Big] - n\log(n). \tag{8}$$

The computationally intensive nature of the empirical likelihood is clear from the above discussion. Indeed, to evaluate the EL at a given $\beta$, one needs to solve the non-linear equation (7) for $\lambda$, which depends on $\beta$. An alternative computational approach, given in Owen (1990), is to translate the optimization problem (3)-(5) with respect to the EL weights $\{p_i\}_{i=1}^{n}$ into its dual problem with respect to $\lambda$. The dual problem to (3)-(5) involves minimizing the objective function

$$Q(\lambda) =: -\sum_{i=1}^{n} \log\Big[1 + \lambda^T \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\}\Big],$$

which is the first term in the log empirical likelihood in (8), subject to

$$1 + \lambda^T \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\} \ge 1/n \quad \text{for each } i = 1, \ldots, n. \tag{9}$$

The constraint (9) comes from $0 \le p_i \le 1$ for each $i$, whereas the gradient of $Q(\lambda)$ is, up to sign, the function on the left-hand side of (7). Let

$$D = \Big\{\lambda : 1 + \lambda^T \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\} \ge 1/n \ \text{ for each } i = 1, \ldots, n\Big\}.$$

Then the dual problem becomes that of minimizing $Q(\lambda)$ over the set $D$. It can be verified that $D$ is convex, closed and compact. Hence, there is a unique minimum within $D$. As suggested in Owen (1990), the constraint set $D$ can be removed by replacing the $\log(x)$ function in $Q(\lambda)$ with a function $\log_*(x)$ such that

$$\log_*(x) = \begin{cases} \log(x), & x \ge 1/n, \\ -n^2x^2/2 + 2nx - 3/2 - \log(n), & x < 1/n, \end{cases}$$

where the second branch is the quadratic function that matches $\log(x)$ and its first two derivatives at $x = 1/n$.

We note that the profile likelihood $\prod_{i=1}^{n} p_i$ achieves its maximum $n^{-n}$ when all the weights $p_i$ equal $n^{-1}$ for $i = 1, \ldots, n$. Thus, if there exists a $\beta$, say $\hat\beta$, which solves (7) with $\lambda = 0$, namely

$$\sum_{i=1}^{n} \frac{\partial m(X_i;\beta)}{\partial \beta}\{Y_i - m(X_i;\beta)\} = 0, \tag{10}$$