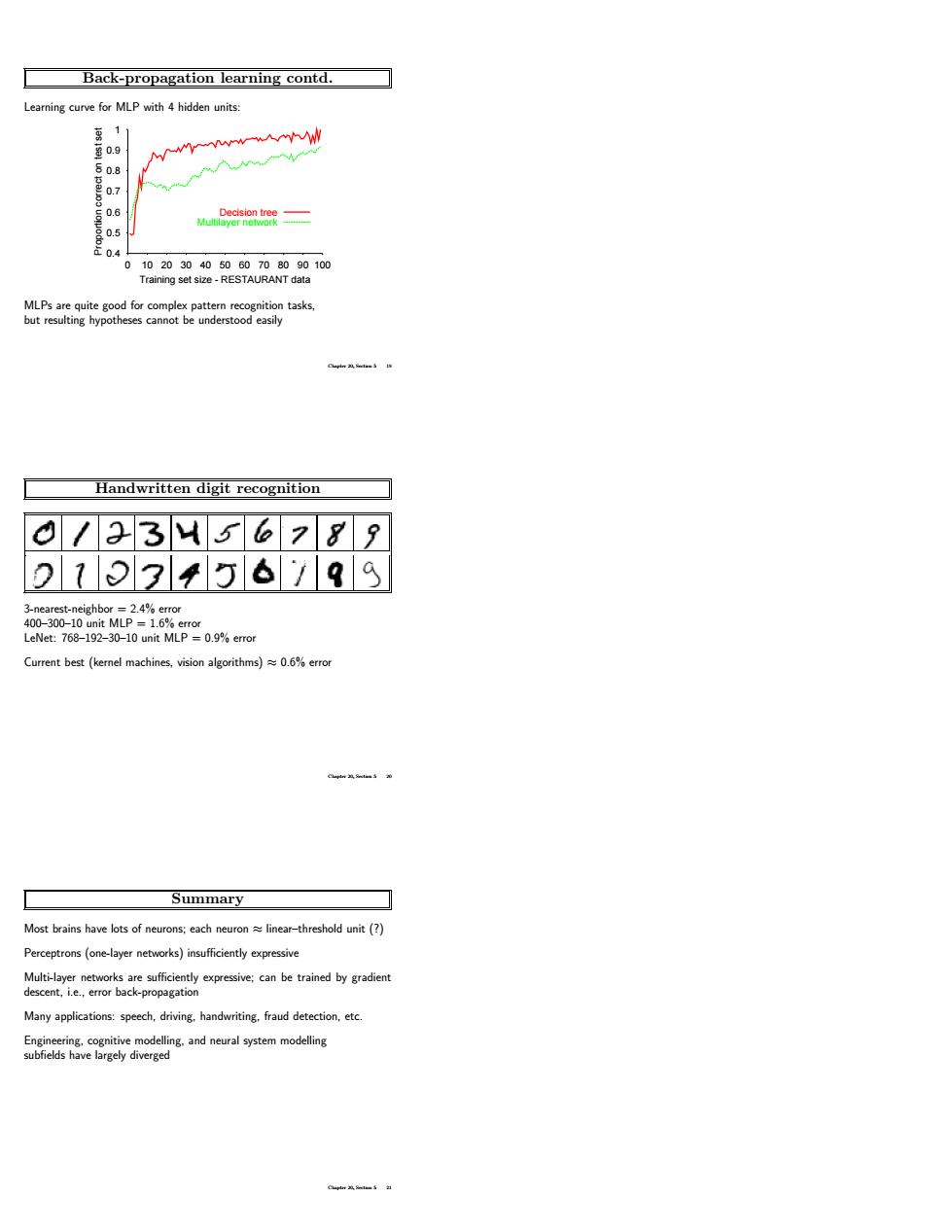

Back-propagation learning contd.

Learning curve for MLP with 4 hidden units:

[Figure: proportion correct on test set (0.4 to 1) versus training set size (0 to 100) on the RESTAURANT data, comparing a decision tree with a multilayer network]

MLPs are quite good for complex pattern recognition tasks, but resulting hypotheses cannot be understood easily

Chapter 20, Section 5

Handwritten digit recognition

3-nearest-neighbor = 2.4% error

400–300–10 unit MLP = 1.6% error

LeNet: 768–192–30–10 unit MLP = 0.9% error

Current best (kernel machines, vision algorithms) ≈ 0.6% error

Summary

Most brains have lots of neurons; each neuron ≈ linear–threshold unit (?)

Perceptrons (one-layer networks) insufficiently expressive

Multi-layer networks are sufficiently expressive; can be trained by gradient descent, i.e., error back-propagation

Many applications: speech, driving, handwriting, fraud detection, etc.

Engineering, cognitive modelling, and neural system modelling subfields have largely diverged
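
The claim that multi-layer networks can be trained by gradient descent via error back-propagation can be illustrated with a minimal sketch. It assumes sigmoid units, a squared-error loss, and batch updates, none of which the slides specify. The network has 4 hidden units to match the learning-curve slide, but the RESTAURANT data is not reproduced here, so XOR (a function a single perceptron cannot represent) stands in as a toy dataset. All function names, the learning rate, and the epoch count are hypothetical choices for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_mlp(n_in, n_hidden, rng):
    """Random weights in [-1, 1]; the last weight of each unit is its bias."""
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    return w_hidden, w_out


def forward(w_hidden, w_out, x):
    """Return (hidden activations, output) for one input vector."""
    xb = list(x) + [1.0]  # append constant bias input
    h = [sigmoid(sum(w * v for w, v in zip(ws, xb))) for ws in w_hidden]
    y = sigmoid(sum(w * v for w, v in zip(w_out, h + [1.0])))
    return h, y


def mean_squared_error(w_hidden, w_out, data):
    return sum((t - forward(w_hidden, w_out, x)[1]) ** 2
               for x, t in data) / len(data)


def train_epoch(w_hidden, w_out, data, lr):
    """One batch gradient-descent step; gradients come from back-propagation."""
    g_hidden = [[0.0] * len(ws) for ws in w_hidden]
    g_out = [0.0] * len(w_out)
    for x, t in data:
        xb = list(x) + [1.0]
        h, y = forward(w_hidden, w_out, x)
        # Output error term: derivative of 0.5 * (y - t)^2 w.r.t. the output's net input.
        delta_out = (y - t) * y * (1.0 - y)
        for j, v in enumerate(h + [1.0]):
            g_out[j] += delta_out * v
        # Propagate the error back through each hidden unit.
        for j, hj in enumerate(h):
            delta_h = delta_out * w_out[j] * hj * (1.0 - hj)
            for i, v in enumerate(xb):
                g_hidden[j][i] += delta_h * v
    # Apply the accumulated gradients after the full pass over the data.
    for j in range(len(w_out)):
        w_out[j] -= lr * g_out[j]
    for j, ws in enumerate(w_hidden):
        for i in range(len(ws)):
            ws[i] -= lr * g_hidden[j][i]


# Toy stand-in dataset (an assumption; not the RESTAURANT data from the slides).
XOR = [((0, 0), 0.0), ((0, 1), 1.0), ((1, 0), 1.0), ((1, 1), 0.0)]

rng = random.Random(0)
w_hidden, w_out = init_mlp(n_in=2, n_hidden=4, rng=rng)

loss_before = mean_squared_error(w_hidden, w_out, XOR)
for _ in range(5000):
    train_epoch(w_hidden, w_out, XOR, lr=0.5)
loss_after = mean_squared_error(w_hidden, w_out, XOR)

print("MSE before training:", loss_before)
print("MSE after training:", loss_after)
```

The learned weights are just lists of numbers, which reflects the slide's caveat: the resulting hypothesis may fit the data, but it is not easy to read off what the network has learned.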