正在加载图片...

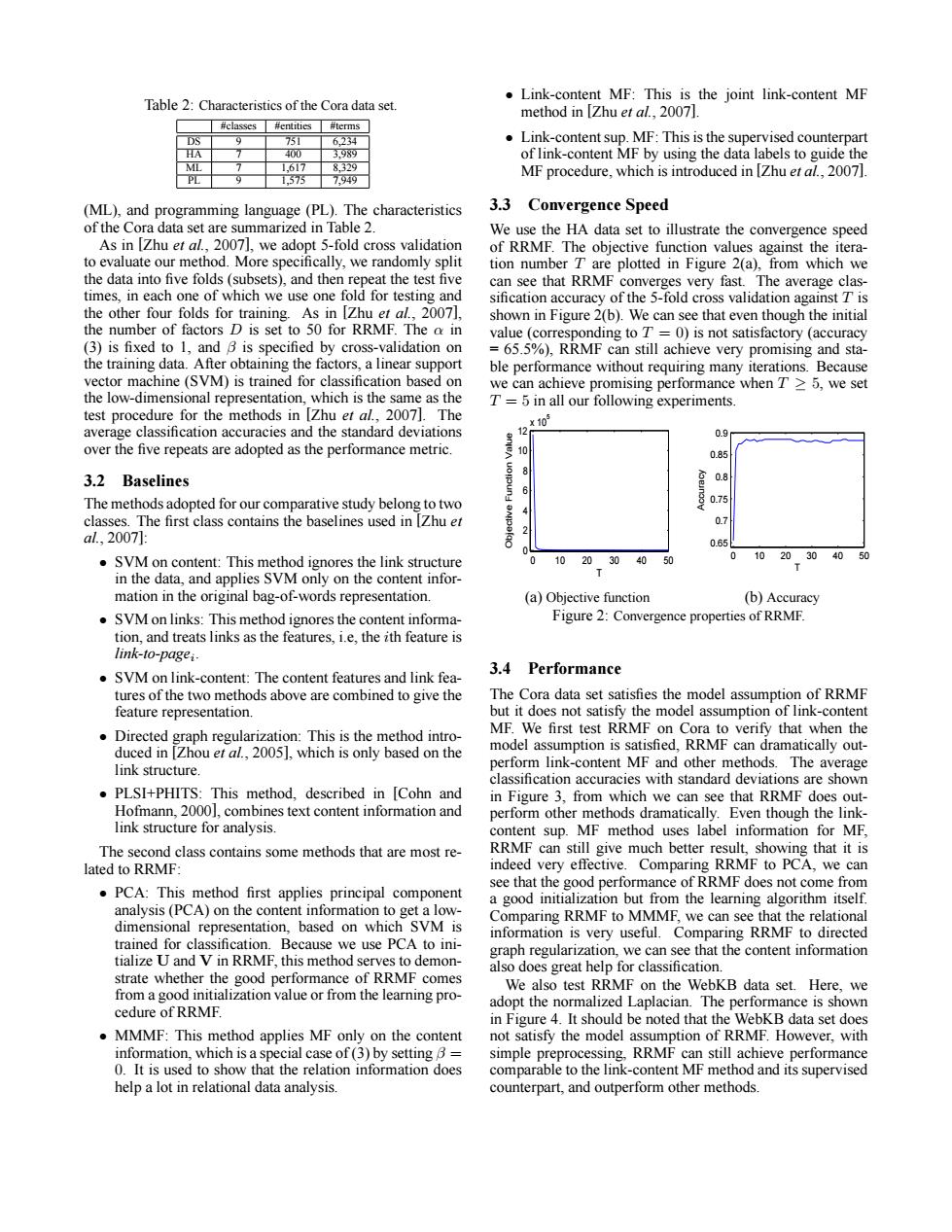

Link-content MF:This is the joint link-content MF Table 2:Characteristics of the Cora data set. method in IZhu et al.,2007]. #classes #entities #terms 751 6,234 Link-content sup.MF:This is the supervised counterpart 400 3.989 of link-content MF by using the data labels to guide the ML 1,617 8,329 MF procedure,which is introduced in [Zhu et al.,2007]. 1575 7,949 (ML),and programming language(PL).The characteristics 3.3 Convergence Speed of the Cora data set are summarized in Table 2. We use the HA data set to illustrate the convergence speed As in [Zhu et al.,2007],we adopt 5-fold cross validation of RRMF.The objective function values against the itera- to evaluate our method.More specifically,we randomly split tion number T are plotted in Figure 2(a).from which we the data into five folds(subsets),and then repeat the test five can see that RRMF converges very fast.The average clas- times,in each one of which we use one fold for testing and sification accuracy of the 5-fold cross validation against T is the other four folds for training.As in Zhu et al..2007. shown in Figure 2(b).We can see that even though the initial the number of factors D is set to 50 for RRMF.The a in value (corresponding to T =0)is not satisfactory (accuracy (3)is fixed to 1,and B is specified by cross-validation on =65.5%),RRMF can still achieve very promising and sta- the training data.After obtaining the factors,a linear support ble performance without requiring many iterations.Because vector machine(SVM)is trained for classification based on we can achieve promising performance when T>5,we set the low-dimensional representation,which is the same as the T=5 in all our following experiments. test procedure for the methods in Zhu et al..2007.The x103 average classification accuracies and the standard deviations 12 0.9 over the five repeats are adopted as the performance metric. 10 0.85 8 3.2 Baselines 0.8 6 The methods adopted for our comparative study belong to two classes.The first class contains the baselines used in IZhu et al.,2007: 8 0.65 SVM on content:This method ignores the link structure 0 10 2030 0 50 01020304050 in the data,and applies SVM only on the content infor- mation in the original bag-of-words representation (a)Objective function (b)Accuracy SVM on links:This method ignores the content informa- Figure 2:Convergence properties of RRMF. tion,and treats links as the features,i.e,the ith feature is link-to-pagei- 3.4 Performance .SVM on link-content:The content features and link fea- tures of the two methods above are combined to give the The Cora data set satisfies the model assumption of RRMF feature representation. but it does not satisfy the model assumption of link-content Directed graph regularization:This is the method intro- MF.We first test RRMF on Cora to verify that when the duced in Zhou et al.,2005,which is only based on the model assumption is satisfied.RRMF can dramatically out- link structure. perform link-content MF and other methods.The average classification accuracies with standard deviations are shown .PLSI+PHITS:This method,described in [Cohn and in Figure 3,from which we can see that RRMF does out- Hofmann,2000],combines text content information and perform other methods dramatically.Even though the link- link structure for analysis. content sup.MF method uses label information for MF, The second class contains some methods that are most re- RRMF can still give much better result,showing that it is lated to RRMF: indeed very effective.Comparing RRMF to PCA,we can see that the good performance of RRMF does not come from PCA:This method first applies principal component a good initialization but from the learning algorithm itself. analysis(PCA)on the content information to get a low- Comparing RRMF to MMMF,we can see that the relational dimensional representation,based on which SVM is information is very useful.Comparing RRMF to directed trained for classification.Because we use PCA to ini- graph regularization,we can see that the content information tialize U and V in RRMF,this method serves to demon- also does great help for classification. strate whether the good performance of RRMF comes from a good initialization value or from the learning pro- We also test RRMF on the WebKB data set.Here,we adopt the normalized Laplacian.The performance is shown cedure of RRMF. in Figure 4.It should be noted that the WebKB data set does MMMF:This method applies MF only on the content not satisfy the model assumption of RRMF.However,with information,which is a special case of(3)by setting B= simple preprocessing,RRMF can still achieve performance 0.It is used to show that the relation information does comparable to the link-content MF method and its supervised help a lot in relational data analysis. counterpart,and outperform other methods.Table 2: Characteristics of the Cora data set. #classes #entities #terms DS 9 751 6,234 HA 7 400 3,989 ML 7 1,617 8,329 PL 9 1,575 7,949 (ML), and programming language (PL). The characteristics of the Cora data set are summarized in Table 2. As in [Zhu et al., 2007], we adopt 5-fold cross validation to evaluate our method. More specifically, we randomly split the data into five folds (subsets), and then repeat the test five times, in each one of which we use one fold for testing and the other four folds for training. As in [Zhu et al., 2007], the number of factors D is set to 50 for RRMF. The α in (3) is fixed to 1, and β is specified by cross-validation on the training data. After obtaining the factors, a linear support vector machine (SVM) is trained for classification based on the low-dimensional representation, which is the same as the test procedure for the methods in [Zhu et al., 2007]. The average classification accuracies and the standard deviations over the five repeats are adopted as the performance metric. 3.2 Baselines The methods adopted for our comparative study belong to two classes. The first class contains the baselines used in [Zhu et al., 2007]: • SVM on content: This method ignores the link structure in the data, and applies SVM only on the content information in the original bag-of-words representation. • SVM on links: This method ignores the content information, and treats links as the features, i.e, the ith feature is link-to-pagei . • SVM on link-content: The content features and link features of the two methods above are combined to give the feature representation. • Directed graph regularization: This is the method introduced in [Zhou et al., 2005], which is only based on the link structure. • PLSI+PHITS: This method, described in [Cohn and Hofmann, 2000], combines text content information and link structure for analysis. The second class contains some methods that are most related to RRMF: • PCA: This method first applies principal component analysis (PCA) on the content information to get a lowdimensional representation, based on which SVM is trained for classification. Because we use PCA to initialize U and V in RRMF, this method serves to demonstrate whether the good performance of RRMF comes from a good initialization value or from the learning procedure of RRMF. • MMMF: This method applies MF only on the content information, which is a special case of (3) by setting β = 0. It is used to show that the relation information does help a lot in relational data analysis. • Link-content MF: This is the joint link-content MF method in [Zhu et al., 2007]. • Link-content sup. MF: This is the supervised counterpart of link-content MF by using the data labels to guide the MF procedure, which is introduced in [Zhu et al., 2007]. 3.3 Convergence Speed We use the HA data set to illustrate the convergence speed of RRMF. The objective function values against the iteration number T are plotted in Figure 2(a), from which we can see that RRMF converges very fast. The average classification accuracy of the 5-fold cross validation against T is shown in Figure 2(b). We can see that even though the initial value (corresponding to T = 0) is not satisfactory (accuracy = 65.5%), RRMF can still achieve very promising and stable performance without requiring many iterations. Because we can achieve promising performance when T ≥ 5, we set T = 5 in all our following experiments. 0 10 20 30 40 50 0 2 4 6 8 10 12 x 105 T Objective Function Value 0 10 20 30 40 50 0.65 0.7 0.75 0.8 0.85 0.9 T Accuracy (a) Objective function (b) Accuracy Figure 2: Convergence properties of RRMF. 3.4 Performance The Cora data set satisfies the model assumption of RRMF but it does not satisfy the model assumption of link-content MF. We first test RRMF on Cora to verify that when the model assumption is satisfied, RRMF can dramatically outperform link-content MF and other methods. The average classification accuracies with standard deviations are shown in Figure 3, from which we can see that RRMF does outperform other methods dramatically. Even though the linkcontent sup. MF method uses label information for MF, RRMF can still give much better result, showing that it is indeed very effective. Comparing RRMF to PCA, we can see that the good performance of RRMF does not come from a good initialization but from the learning algorithm itself. Comparing RRMF to MMMF, we can see that the relational information is very useful. Comparing RRMF to directed graph regularization, we can see that the content information also does great help for classification. We also test RRMF on the WebKB data set. Here, we adopt the normalized Laplacian. The performance is shown in Figure 4. It should be noted that the WebKB data set does not satisfy the model assumption of RRMF. However, with simple preprocessing, RRMF can still achieve performance comparable to the link-content MF method and its supervised counterpart, and outperform other methods