
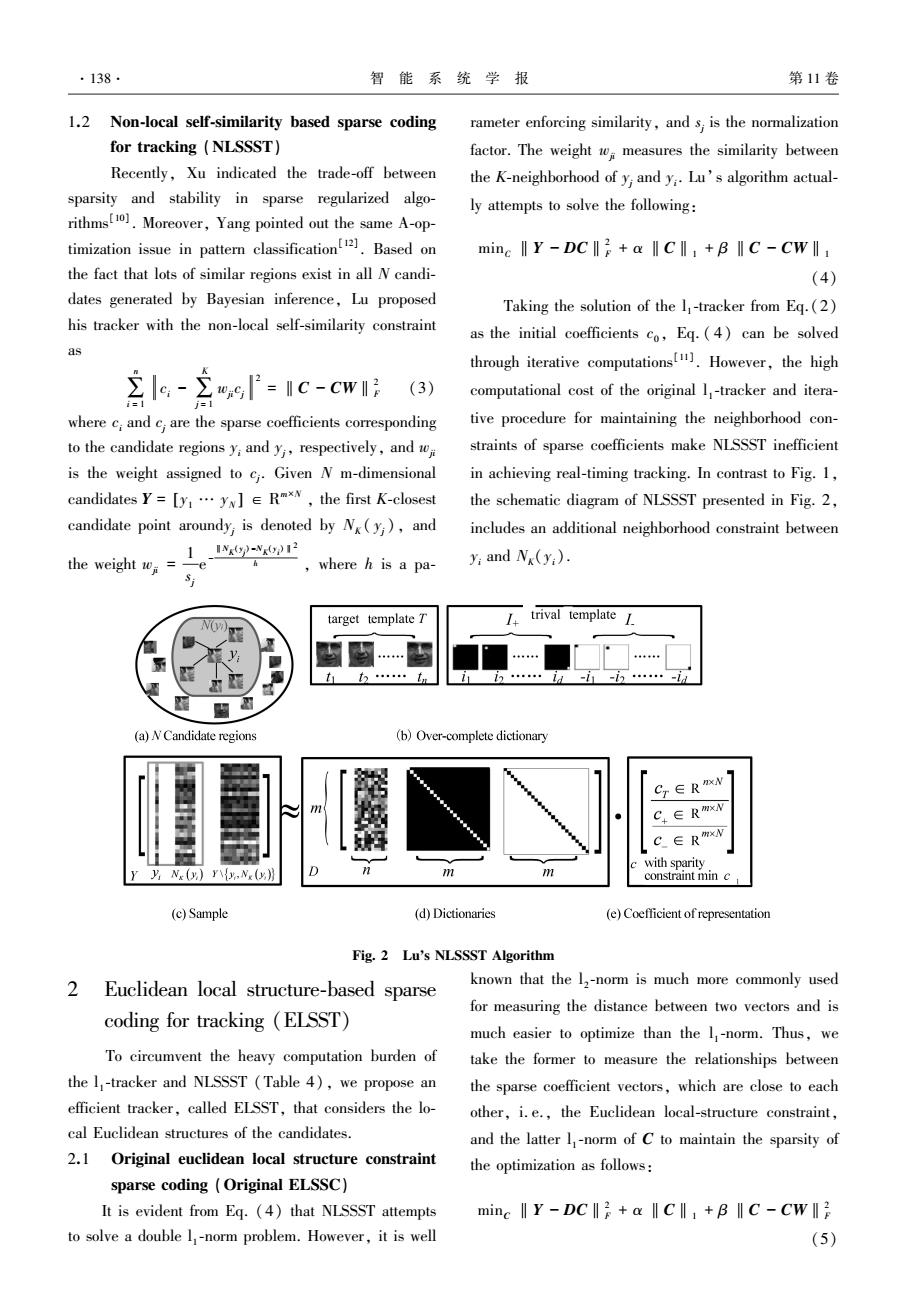

·138· 智能系统学报 (CAAI Transactions on Intelligent Systems), Vol. 11
1.2 Non-local self-similarity based sparse coding for tracking (NLSSST)

Recently, Xu indicated the trade-off between sparsity and stability in sparse regularized algorithms [10]. Moreover, Yang pointed out the same $\ell_1$-optimization issue in pattern classification [12]. Based on the fact that many similar regions exist among the $N$ candidates generated by Bayesian inference, Lu proposed a tracker with the non-local self-similarity constraint

$$\sum_{i=1}^{N} \Big\| c_i - \sum_{j=1}^{K} w_{ji} c_j \Big\|_2^2 = \| C - CW \|_F^2 \tag{3}$$

where $c_i$ and $c_j$ are the sparse coefficients corresponding to the candidate regions $y_i$ and $y_j$, respectively, and $w_{ji}$ is the weight assigned to $c_j$.
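The equality in Eq. (3) between the column-wise sum and the Frobenius norm can be checked numerically. The snippet below is a minimal sketch, assuming that the entry in row j and column i of W stores the weight w_ji and that each column of W has exactly K nonzero entries. The sizes and the random weights are hypothetical placeholders, not the similarity weights used by the tracker.

```python
import numpy as np

rng = np.random.default_rng(0)
n_atoms, N, K = 6, 5, 2  # hypothetical sizes: dictionary atoms, candidates, neighbours

# Coefficient matrix C: one column of sparse-coding coefficients per candidate.
C = rng.standard_normal((n_atoms, N))

# W[j, i] = w_ji. Column i holds K nonzero weights on neighbours j != i
# (random placeholders, normalised so that each column sums to one).
W = np.zeros((N, N))
for i in range(N):
    others = [j for j in range(N) if j != i]
    neighbours = rng.choice(others, size=K, replace=False)
    w = rng.random(K)
    W[neighbours, i] = w / w.sum()

# Left-hand side of Eq. (3): sum over candidates of the squared l2 residual.
lhs = sum(np.sum((C[:, i] - C @ W[:, i]) ** 2) for i in range(N))

# Right-hand side of Eq. (3): squared Frobenius norm of C - CW.
rhs = np.linalg.norm(C - C @ W, "fro") ** 2

identity_holds = bool(np.isclose(lhs, rhs))
```

Column i of the product CW is the weighted sum of the neighbouring coefficient vectors, which is why the per-candidate residuals collapse into a single matrix norm.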
Given $N$ $m$-dimensional candidates $Y = [y_1, \dots, y_N] \in \mathbb{R}^{m \times N}$, the $K$ candidates closest to $y_j$ are denoted by $N_K(y_j)$, and the weight is

$$w_{ji} = \frac{1}{s_j} \exp\!\left( -\frac{\| N_K(y_j) - N_K(y_i) \|_2^2}{h} \right),$$

where $h$ is a parameter enforcing similarity and $s_j$ is the normalization factor. The weight $w_{ji}$ measures the similarity between the $K$-neighborhoods of $y_j$ and $y_i$. Lu's algorithm actually attempts to solve the following:

$$\min_C \; \| Y - DC \|_F^2 + \alpha \| C \|_1 + \beta \| C - CW \|_1 \tag{4}$$

Taking the solution of the $\ell_1$-tracker from Eq. (2) as the initial coefficients $c_0$, Eq. (4) can be solved through iterative computations [11]. However, the high computational cost of the original $\ell_1$-tracker and the iterative procedure for maintaining the neighborhood constraints of the sparse coefficients make NLSSST too slow for real-time tracking. In contrast to Fig. 1, the schematic diagram of NLSSST in Fig. 2 includes an additional neighborhood constraint between $y_i$ and $N_K(y_i)$.

[Figure: target template T, trivial template t, and coefficient matrices with sparsity constraint. Panels: (a) N candidate regions; (b) Over-complete dictionary; (c) Sample; (d) Dictionaries; (e) Coefficient of representation]

Fig. 2 Lu's NLSSST algorithm

2 Euclidean local structure-based sparse coding for tracking (ELSST)

To circumvent the heavy computational burden of the $\ell_1$-tracker and NLSSST (Table 4), we propose an efficient tracker, called ELSST, that considers the local Euclidean structures of the candidates.

2.1 Original Euclidean local structure constraint sparse coding (Original ELSSC)

It is evident from Eq. (4) that NLSSST attempts to solve a problem with two $\ell_1$-norm terms. However, it is well known that the $\ell_2$-norm is much more commonly used for measuring the distance between two vectors and is much easier to optimize than the $\ell_1$-norm. Thus, we use the $\ell_2$-norm to measure the relationships between sparse coefficient vectors that are close to each other (the Euclidean local-structure constraint) and keep the $\ell_1$-norm of $C$ to maintain the sparsity of the solution:

$$\min_C \; \| Y - DC \|_F^2 + \alpha \| C \|_1 + \beta \| C - CW \|_F^2 \tag{5}$$
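The difference between Eq. (4) and Eq. (5) lies only in the norm applied to C - CW. The sketch below evaluates both objectives and the gradient of the differentiable part of Eq. (5), which gives one concrete reading of why the Frobenius term is easier to optimize. It assumes that the $\ell_1$-norm of a matrix means the sum of absolute values of its entries. The function names, matrix sizes, and random data are hypothetical, and this is not the solver used in the paper.

```python
import numpy as np

def nlssst_objective(Y, D, C, W, alpha, beta):
    """Eq. (4): reconstruction error + l1 sparsity + l1 neighbourhood term."""
    R = C - C @ W
    return (np.linalg.norm(Y - D @ C, "fro") ** 2
            + alpha * np.abs(C).sum()
            + beta * np.abs(R).sum())

def elssc_objective(Y, D, C, W, alpha, beta):
    """Eq. (5): same as Eq. (4) but with a squared Frobenius neighbourhood term."""
    R = C - C @ W
    return (np.linalg.norm(Y - D @ C, "fro") ** 2
            + alpha * np.abs(C).sum()
            + beta * np.linalg.norm(R, "fro") ** 2)

def elssc_smooth_grad(Y, D, C, W, beta):
    """Gradient w.r.t. C of ||Y - DC||_F^2 + beta * ||C - CW||_F^2."""
    M = np.eye(W.shape[0]) - W  # C - CW = C @ M
    return 2.0 * D.T @ (D @ C - Y) + 2.0 * beta * C @ M @ M.T

# Hypothetical toy problem: m-dimensional samples, p atoms, N candidates.
rng = np.random.default_rng(0)
m, p, N = 8, 5, 4
D = rng.standard_normal((m, p))
C = rng.standard_normal((p, N))
Y = rng.standard_normal((m, N))
W = rng.random((N, N))
np.fill_diagonal(W, 0.0)            # no self-weight
W /= W.sum(axis=0, keepdims=True)   # each column sums to one (assumption)

value_eq4 = nlssst_objective(Y, D, C, W, alpha=0.1, beta=0.5)
value_eq5 = elssc_objective(Y, D, C, W, alpha=0.1, beta=0.5)
grad = elssc_smooth_grad(Y, D, C, W, beta=0.5)
```

Because the last term of Eq. (5) has a closed-form gradient, only the $\ell_1$ penalty on C remains non-smooth, whereas Eq. (4) has two non-smooth terms.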