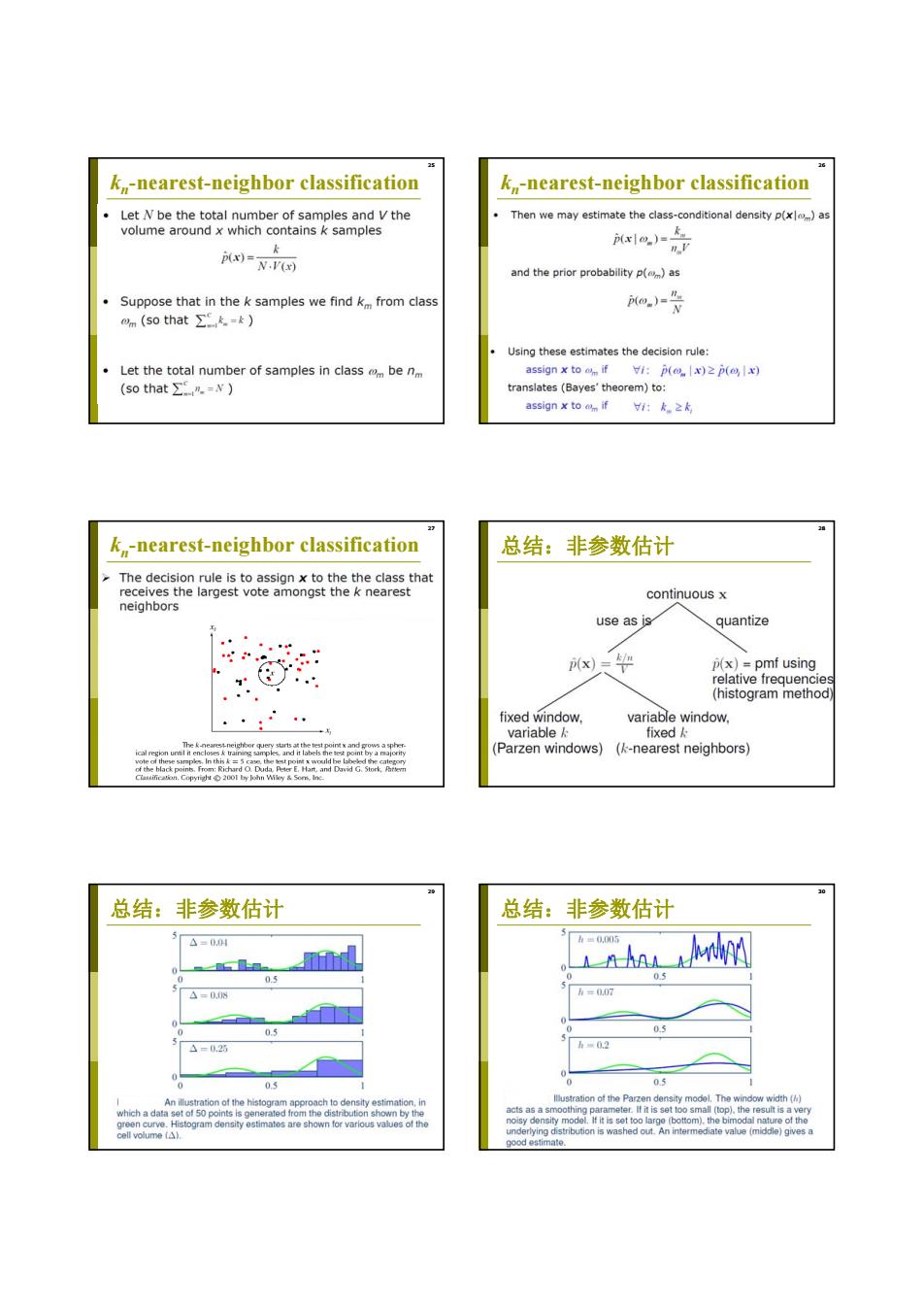

k-nearest-neighbor classification

Let $N$ be the total number of samples and $V$ the volume around $\mathbf{x}$ that contains $k$ samples, so that

$$p(\mathbf{x}) = \frac{k}{NV}.$$

Suppose that among these $k$ samples we find $k_m$ from class $\omega_m$ (so that $\sum_m k_m = k$), and let the total number of samples in class $\omega_m$ be $n_m$ (so that $\sum_m n_m = N$). Then we may estimate the class-conditional density $p(\mathbf{x} \mid \omega_m)$ as

$$p(\mathbf{x} \mid \omega_m) = \frac{k_m}{n_m V}$$

and the prior probability $P(\omega_m)$ as

$$P(\omega_m) = \frac{n_m}{N}.$$

Using these estimates, the decision rule

$$\text{assign } \mathbf{x} \text{ to } \omega_m \text{ if } P(\omega_m \mid \mathbf{x}) \ge P(\omega_i \mid \mathbf{x}) \text{ for all } i$$

translates (by Bayes' theorem) to

$$\text{assign } \mathbf{x} \text{ to } \omega_m \text{ if } k_m \ge k_i \text{ for all } i,$$

because the volume and the sample counts cancel in the posterior:

$$P(\omega_m \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid \omega_m)\,P(\omega_m)}{p(\mathbf{x})} = \frac{k_m}{n_m V} \cdot \frac{n_m}{N} \cdot \frac{NV}{k} = \frac{k_m}{k}.$$

The decision rule is therefore to assign $\mathbf{x}$ to the class that receives the largest vote among its $k$ nearest neighbors.

Summary: nonparametric estimation

For a continuous $\mathbf{x}$ there are two options:

- Quantize $\mathbf{x}$: estimate $\hat{p}(\mathbf{x})$ as a pmf using relative frequencies (histogram method).
- Use $\mathbf{x}$ as is:
  - fixed window, variable $k$: Parzen windows;
  - variable window, fixed $k$: $k$-nearest neighbors.

Figure: An illustration of the histogram approach to density estimation, in which a data set of 50 points is generated from the distribution shown by the green curve. Histogram density estimates are shown for various values of the cell volume $\Delta$ (panels: $\Delta = 0.04$, $0.08$, $0.25$).

Figure: Illustration of the Parzen density model. The window width $h$ acts as a smoothing parameter: if it is set too small (top panel, $h = 0.005$) the estimate is noisy, if it is set too large (bottom panel) the structure of the underlying distribution is washed out, and an intermediate value (middle panel, $h = 0.07$) works best.
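The nonparametric estimators summarized above (histogram, Parzen windows, $k$-nearest neighbors) and the $k$-NN classification rule can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's own code, and it rests on several assumptions:

- The function names `histogram_density`, `parzen_density`, `knn_density`, `knn_posteriors` and `knn_classify` are hypothetical.
- The density estimators are one-dimensional.
- The Parzen window is assumed to be a box (hypercube) kernel of width $h$. A Gaussian kernel is a common alternative.
- The classifier uses Euclidean distance.

`knn_posteriors` deliberately computes $p(\mathbf{x} \mid \omega_m)$, $P(\omega_m)$ and $p(\mathbf{x})$ separately, so the cancellation to $k_m / k$ is visible numerically.

```python
import math
from collections import Counter

# Hypothetical helper names; 1-D density estimates only.

def histogram_density(x, data, delta, origin=0.0):
    """Histogram method: relative frequency of x's bin divided by the bin width delta."""
    j = math.floor((x - origin) / delta)  # index of the bin containing x
    count = sum(1 for d in data if math.floor((d - origin) / delta) == j)
    return count / (len(data) * delta)


def parzen_density(x, data, h):
    """Parzen window (fixed window, variable k), assuming a box kernel of width h centred on x."""
    k = sum(1 for d in data if abs(d - x) <= h / 2)
    return k / (len(data) * h)


def knn_density(x, data, k):
    """k-NN estimate (fixed k, variable window): p(x) = k / (N V).

    V is the length 2r of the interval [x - r, x + r] reaching the k-th neighbour.
    Raises ZeroDivisionError if the k-th neighbour coincides with x.
    """
    r = sorted(abs(d - x) for d in data)[k - 1]  # distance to the k-th neighbour
    return k / (len(data) * 2 * r)


def knn_posteriors(x, samples, k):
    """Return P(w_m | x) for every class, built from the k-NN density estimates.

    samples: list of (point, label) pairs; points are tuples of numbers.
    """
    N, dim = len(samples), len(x)
    ranked = sorted(samples, key=lambda s: math.dist(x, s[0]))  # Euclidean distance
    r = math.dist(x, ranked[k - 1][0])  # radius reaching the k-th neighbour
    V = math.pi ** (dim / 2) * r ** dim / math.gamma(dim / 2 + 1)  # volume of a dim-ball
    k_m = Counter(label for _, label in ranked[:k])  # votes among the k neighbours
    n_m = Counter(label for _, label in samples)     # class sizes in the training set
    p_x = k / (N * V)                                # p(x) = k / (N V)
    post = {}
    for m in n_m:
        likelihood = k_m[m] / (n_m[m] * V)  # p(x | w_m) = k_m / (n_m V)
        prior = n_m[m] / N                  # P(w_m) = n_m / N
        post[m] = likelihood * prior / p_x  # equals k_m / k up to rounding
    return post


def knn_classify(x, samples, k):
    """Assign x to the class with the largest posterior, i.e. the most votes."""
    post = knn_posteriors(x, samples, k)
    return max(post, key=post.get)


if __name__ == "__main__":
    samples = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
               ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
    print(knn_posteriors((0.2, 0.2), samples, k=5))  # approximately a: 0.6, b: 0.4
    print(knn_classify((0.2, 0.2), samples, k=5))    # a
```

Ties in `knn_classify` are broken by whichever class `max` sees first. A practical implementation would pick an explicit tie-breaking policy, for example an odd $k$ for two classes.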