
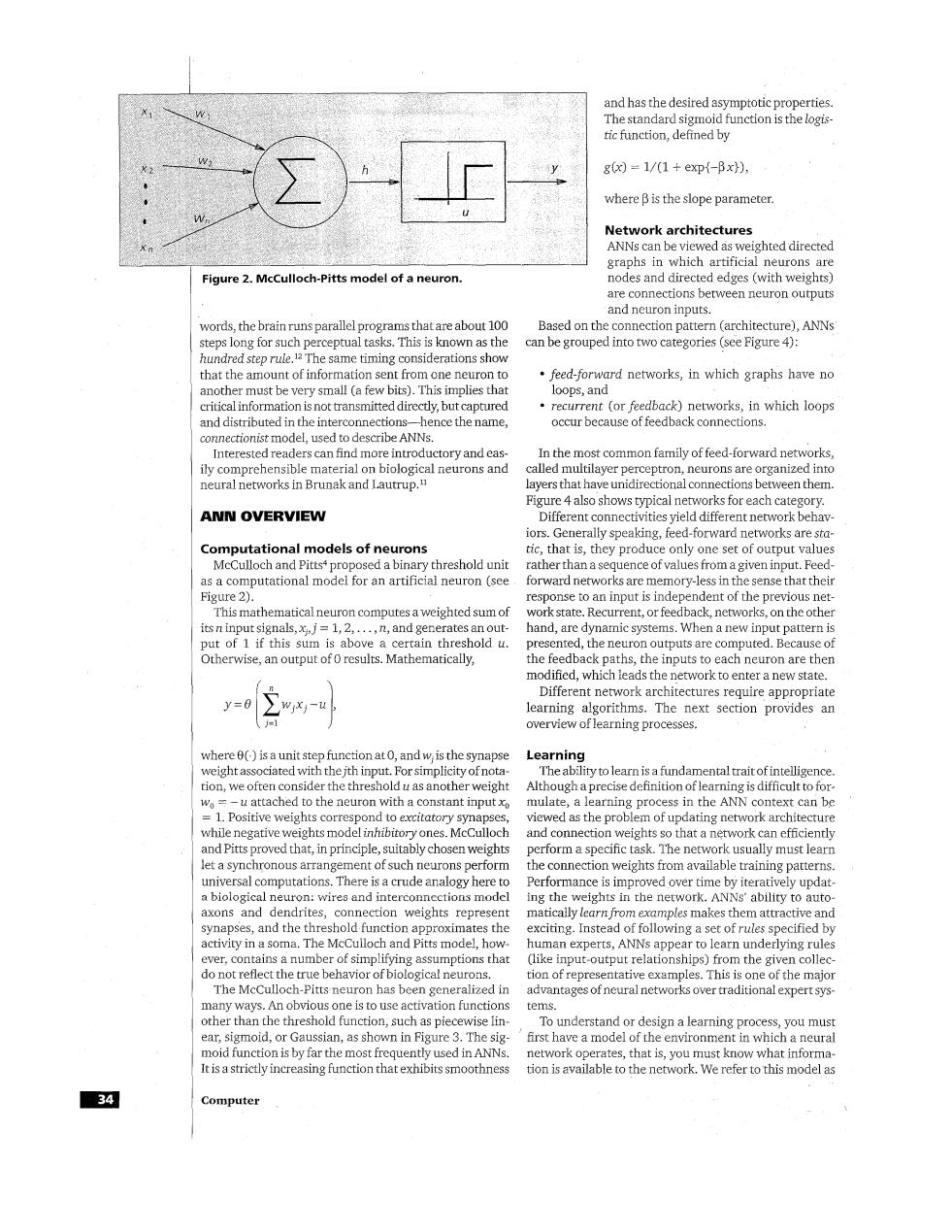

34 Computer

Figure 2. McCulloch-Pitts model of a neuron.

words, the brain runs parallel programs that are about 100 steps long for such perceptual tasks. This is known as the hundred-step rule.¹² The same timing considerations show that the amount of information sent from one neuron to another must be very small (a few bits). This implies that critical information is not transmitted directly but is captured and distributed in the interconnections; hence the name connectionist model, used to describe ANNs.
Interested readers can find more introductory and easily comprehensible material on biological neurons and neural networks in Brunak and Lautrup.¹¹

ANN OVERVIEW

Computational models of neurons

McCulloch and Pitts⁴ proposed a binary threshold unit as a computational model for an artificial neuron (see Figure 2). This mathematical neuron computes a weighted sum of its $n$ input signals, $x_j$, $j = 1, 2, \ldots, n$, and generates an output of 1 if this sum is above a certain threshold $u$. Otherwise, an output of 0 results. Mathematically,

$$y = \theta\left(\sum_{j=1}^{n} w_j x_j - u\right),$$

where $\theta(\cdot)$ is a unit step function at 0, and $w_j$ is the synapse weight associated with the $j$th input. For simplicity of notation, we often consider the threshold $u$ as another weight $w_0 = -u$ attached to the neuron with a constant input $x_0 = 1$, so that $y = \theta\left(\sum_{j=0}^{n} w_j x_j\right)$. Positive weights correspond to excitatory synapses, while negative weights model inhibitory ones. McCulloch and Pitts proved that, in principle, suitably chosen weights let a synchronous arrangement of such neurons perform universal computations. There is a crude analogy here to a biological neuron: wires and interconnections model axons and dendrites, connection weights represent synapses, and the threshold function approximates the activity in a soma. The McCulloch and Pitts model, however, contains a number of simplifying assumptions that do not reflect the true behavior of biological neurons.

The McCulloch-Pitts neuron has been generalized in many ways. An obvious one is to use activation functions other than the threshold function, such as piecewise linear, sigmoid, or Gaussian, as shown in Figure 3. The sigmoid function is by far the most frequently used in ANNs. It is a strictly increasing function that exhibits smoothness and has the desired asymptotic properties. The standard sigmoid function is the logistic function, defined by

$$g(x) = \frac{1}{1 + \exp(-\beta x)},$$

where $\beta$ is the slope parameter.
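The threshold unit, its bias form, and its generalization to other activation functions can be sketched in a few lines of Python. This is a minimal illustration, not code from the article. The function names, the hand-picked weights and thresholds for the AND, OR, and NOT gates, and the exact piecewise-linear and Gaussian shapes are all assumptions, since Figure 3 shows these shapes only qualitatively. The logistic function follows the definition g(x) = 1/(1 + exp(-βx)), with `beta` playing the role of β.

```python
import math
from typing import Callable, Sequence

# Minimal sketch of the McCulloch-Pitts threshold unit and its generalization.
# All names and specific constants below are illustrative assumptions.


def unit_step(v: float) -> float:
    """Unit step at 0: output 1 if v is above 0, else 0."""
    return 1.0 if v > 0 else 0.0


def mcculloch_pitts(x: Sequence[float], w: Sequence[float], u: float) -> float:
    """y = theta(sum_j w_j * x_j - u) for inputs x_1..x_n and threshold u."""
    return unit_step(sum(wj * xj for wj, xj in zip(w, x)) - u)


def mcculloch_pitts_bias(x: Sequence[float], w: Sequence[float], u: float) -> float:
    """Same unit with the threshold treated as weight w_0 = -u on constant input x_0 = 1."""
    w_aug = [-u, *w]
    x_aug = [1.0, *x]
    return unit_step(sum(wj * xj for wj, xj in zip(w_aug, x_aug)))


# Alternative activation functions (cf. Figure 3). The piecewise-linear and
# Gaussian parameterizations are assumed; the logistic form follows the text.
def piecewise_linear(v: float) -> float:
    """Linear on [0, 1], saturating at 0 below and at 1 above."""
    return min(1.0, max(0.0, v))


def logistic(v: float, beta: float = 1.0) -> float:
    """g(v) = 1 / (1 + exp(-beta * v)), arranged so exp() never overflows."""
    z = beta * v
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def gaussian(v: float, sigma: float = 1.0) -> float:
    """Bell-shaped response centered at 0 with width sigma."""
    return math.exp(-(v * v) / (2.0 * sigma * sigma))


def neuron(x: Sequence[float], w: Sequence[float], u: float,
           g: Callable[[float], float] = unit_step) -> float:
    """Generalized neuron: same weighted sum minus threshold, pluggable activation g."""
    return g(sum(wj * xj for wj, xj in zip(w, x)) - u)


# Hand-chosen weights and thresholds turn a single unit into a logic gate.
def and_gate(a: float, b: float) -> float:
    return mcculloch_pitts([a, b], [1.0, 1.0], u=1.5)


def or_gate(a: float, b: float) -> float:
    return mcculloch_pitts([a, b], [1.0, 1.0], u=0.5)


def not_gate(a: float) -> float:
    # A single negative (inhibitory) weight with a negative threshold.
    return mcculloch_pitts([a], [-1.0], u=-0.5)
```

For example, `and_gate(1, 1)` returns `1.0` and `and_gate(1, 0)` returns `0.0`. Passing `g=logistic` to `neuron` keeps the same weighted sum but replaces the hard 0/1 output with a smooth value in (0, 1).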
Network architectures

ANNs can be viewed as weighted directed graphs in which artificial neurons are nodes and directed edges (with weights) are connections between neuron outputs and neuron inputs. Based on the connection pattern (architecture), ANNs can be grouped into two categories (see Figure 4):

* feed-forward networks, in which graphs have no loops, and
* recurrent (or feedback) networks, in which loops occur because of feedback connections.

In the most common family of feed-forward networks, called multilayer perceptrons, neurons are organized into layers that have unidirectional connections between them. Figure 4 also shows typical networks for each category.

Different connectivities yield different network behaviors. Generally speaking, feed-forward networks are static; that is, they produce only one set of output values rather than a sequence of values from a given input. Feed-forward networks are memory-less in the sense that their response to an input is independent of the previous network state. Recurrent, or feedback, networks, on the other hand, are dynamic systems. When a new input pattern is presented, the neuron outputs are computed. Because of the feedback paths, the inputs to each neuron are then modified, which leads the network to enter a new state.

Different network architectures require appropriate learning algorithms. The next section provides an overview of learning processes.

Learning

The ability to learn is a fundamental trait of intelligence. Although a precise definition of learning is difficult to formulate, a learning process in the ANN context can be viewed as the problem of updating network architecture and connection weights so that a network can efficiently perform a specific task. The network usually must learn the connection weights from available training patterns. Performance is improved over time by iteratively updating the weights in the network.
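The Python sketch below shows what "iteratively updating the weights" from training patterns can look like. It trains a single threshold unit, with the threshold folded in as w₀ = -u on a constant input x₀ = 1, using the perceptron error-correction rule wⱼ ← wⱼ + η(d - y)xⱼ. The choice of that rule, the learning rate `eta` (η), the zero initialization, the epoch limit, and the AND training set are assumptions for illustration; the passage itself does not prescribe a learning algorithm.

```python
from typing import List, Sequence, Tuple

# Minimal sketch of iterative weight learning from training patterns, using the
# perceptron error-correction rule as an assumed example (the text names no rule).
# The threshold is folded in as weight w[0] = -u on a constant input x0 = 1.

Pattern = Tuple[Sequence[float], int]  # (input vector x, desired output d)


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Threshold unit: 1 if w0 + sum_j w_j x_j is above 0, else 0."""
    v = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
    return 1 if v > 0 else 0


def errors(w: Sequence[float], data: Sequence[Pattern]) -> int:
    """Number of training patterns the current weights get wrong."""
    return sum(1 for x, d in data if predict(w, x) != d)


def train_perceptron(data: Sequence[Pattern], eta: float = 0.1,
                     max_epochs: int = 100) -> Tuple[List[float], List[int]]:
    """Sweep the patterns repeatedly, nudging the weights after each mistake.

    Returns the final weights and the error count before training and after
    each epoch, so the (not necessarily monotone) improvement is visible.
    """
    n = len(data[0][0])
    w = [0.0] * (n + 1)                         # w[0] is the bias weight -u
    history = [errors(w, data)]
    for _ in range(max_epochs):
        for x, d in data:
            delta = eta * (d - predict(w, x))   # zero when the output is correct
            if delta != 0:
                w[0] += delta                   # constant input x0 = 1
                for j, xj in enumerate(x, start=1):
                    w[j] += delta * xj
        history.append(errors(w, data))
        if history[-1] == 0:                    # every pattern classified correctly
            break
    return w, history


AND_DATA: List[Pattern] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
```

Calling `train_perceptron(AND_DATA, eta=1.0)` reaches zero training errors after a few epochs. The returned history shows the error count falling over time, though not necessarily at every epoch.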
ANNs' ability to automatically learn from examples makes them attractive and exciting. Instead of following a set of rules specified by human experts, ANNs appear to learn underlying rules (like input-output relationships) from the given collection of representative examples. This is one of the major advantages of neural networks over traditional expert systems.

To understand or design a learning process, you must first have a model of the environment in which a neural network operates; that is, you must know what information is available to the network. We refer to this model as