正在加载图片...

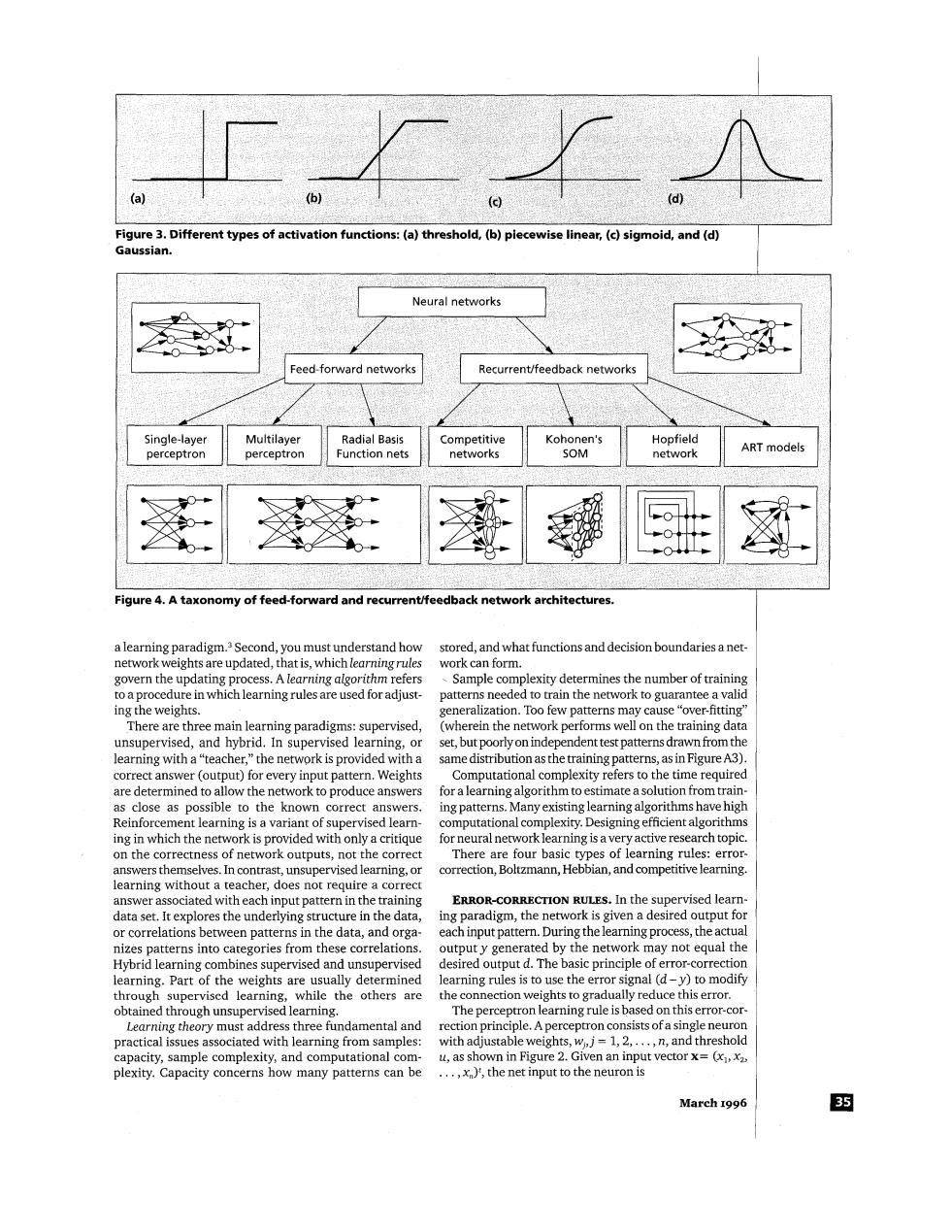

(a (b) d Figure 3.Different types of activation functions:(a)threshold,(b)piecewise linear,(c)sigmoid,and(d) Gaussian. Neural networks Feed-forward networks Recurrent/feedback networks 1+2 Single-layer Multilayer Radial Basis Competitive Kohonen's Hopfield SOM ART models perceptron perceptron Function nets networks network Figure 4.A taxonomy of feed-forward and recurrent/feedback network architectures. a learning paradigm.Second,you must understand how stored,and what functions and decision boundaries a net- network weights are updated,that is,which learning rules work can form. govern the updating process.A learning algorithm refers -Sample complexity determines the number of training to a procedure in which learning rules are used for adjust- patterns needed to train the network to guarantee a valid ing the weights. generalization.Too few patterns may cause "over-fitting' There are three main learning paradigms:supervised, (wherein the network performs well on the training data unsupervised,and hybrid.In supervised learning,or set,but poorly on independent test patterns drawn from the learning with a"teacher,"the network is provided with a same distribution as the training patterns,as in Figure A3). correct answer (output)for every input pattern.Weights Computational complexity refers to the time required are determined to allow the network to produce answers for a learning algorithm to estimate a solution from train- as close as possible to the known correct answers ing patterns.Many existing learning algorithms have high Reinforcement learning is a variant of supervised learn- computational complexity.Designing efficient algorithms ing in which the network is provided with only a critique for neural network learning is a very active research topic. on the correctness of network outputs,not the correct There are four basic types of learning rules:error- answers themselves.In contrast,unsupervised learning,or correction,Boltzmann,Hebbian,and competitive learning learning without a teacher,does not require a correct answer associated with each input pattern in the training ERROR-CORRECTION RULES.In the supervised learn- data set.It explores the underlying structure in the data ing paradigm,the network is given a desired output for or correlations between patterns in the data,and orga each input pattern.During the learning process,the actual nizes patterns into categories from these correlations. outputy generated by the network may not equal the Hybrid learning combines supervised and unsupervised desired output d.The basic principle of error-correction learning.Part of the weights are usually determined learning rules is to use the error signal(d-y)to modify through supervised learning,while the others are the connection weights to gradually reduce this error. obtained through unsupervised learning. The perceptron learning rule is based on this error-cor- Learning theory must address three fundamental and rection principle.A perceptron consists of a single neuron practical issues associated with learning from samples: with adjustable weights,w,j=1,2,...,n,and threshold capacity,sample complexity,and computational com- u,as shown in Figure 2.Given an input vector x=(x,x2, plexity.Capacity concerns how many patterns can be ...,x),the net input to the neuron is March 1996 35(a) Jr- (4 (C) 4 (4 Figure 3. Different types of activation functions: (a) threshold, (b) piecewise linear, (c) sigmoid, and (d) Gaussian. Figure 4. A taxonomy of feed-forward and recurrentlfeedback network architectures. a learning ~aradigm.~ Second, you must understand how network weights are updated, that is, which learning rules govern the updating process. A learning algorithm refers to a procedure in which learning rules are used for adjusting the weights. There are three main learning paradigms: supervised, unsupervised, and hybrid. In supervised learning, or learning with a “teacher,” the network is provided with a correct answer (output) for every input pattern. Weights are determined to allow the network to produce answers as close as possible to the known correct answers. Reinforcement learning is a variant of supervised learning in which the network is provided with only a critique on the correctness of network outputs, not the correct answers themselves. In contrast, unsupervised learning, or learning without a teacher, does not require a correct answer associated with each input pattern in the training data set. It explores the underlying structure in the data, or correlations between patterns in the data, and organizes patterns into categories from these correlations. Hybrid learning combines supervised and unsupervised learning. Part of the weights are usually determined through supervised learning, while the others are obtained through unsupervised learning. Learning theory must address three fundamental and practical issues associated with learning from samples: capacity, sample complexity, and computational complexity. Capacity concerns how many patterns can be stored, and what functions and decision boundaries a network can form. , Sample complexity determines the number of training patterns needed to train the network to guarantee a valid generalization. Too few patterns may cause “over-fitting” (wherein the network performs well on the training data set, but poorly on independent test patterns drawn from the same distribution as the training patterns, as in Figure A3). Computational complexity refers to the time required for a learning algorithm to estimate a solution from training patterns. Many existing learning algorithms have high computational complexity. Designing efficient algorithms for neural network learning is avery active research topic. There are four basic types of learning rules: errorcorrection, Boltzmann, Hebbian, and competitive learning. ERROR-CORRECTION RULES. In the supervised learning paradigm, the network is given a desired output for each input pattern. During the learning process, the actual output y generated by the network may not equal the desired output d. The basic principle of error-correction learning rules is to use the error signal (d -y) to modify the connection weights to gradually reduce this error. The perceptron learning rule is based on this error-correction principle. A perceptron consists of a single neuron with adjustable weights, w,, j = 1,2, . . . , n, and threshold U, as shown in Figure 2. Given an input vector x= (xl, x,, . . . , xJt, the net input to the neuron is March 1996