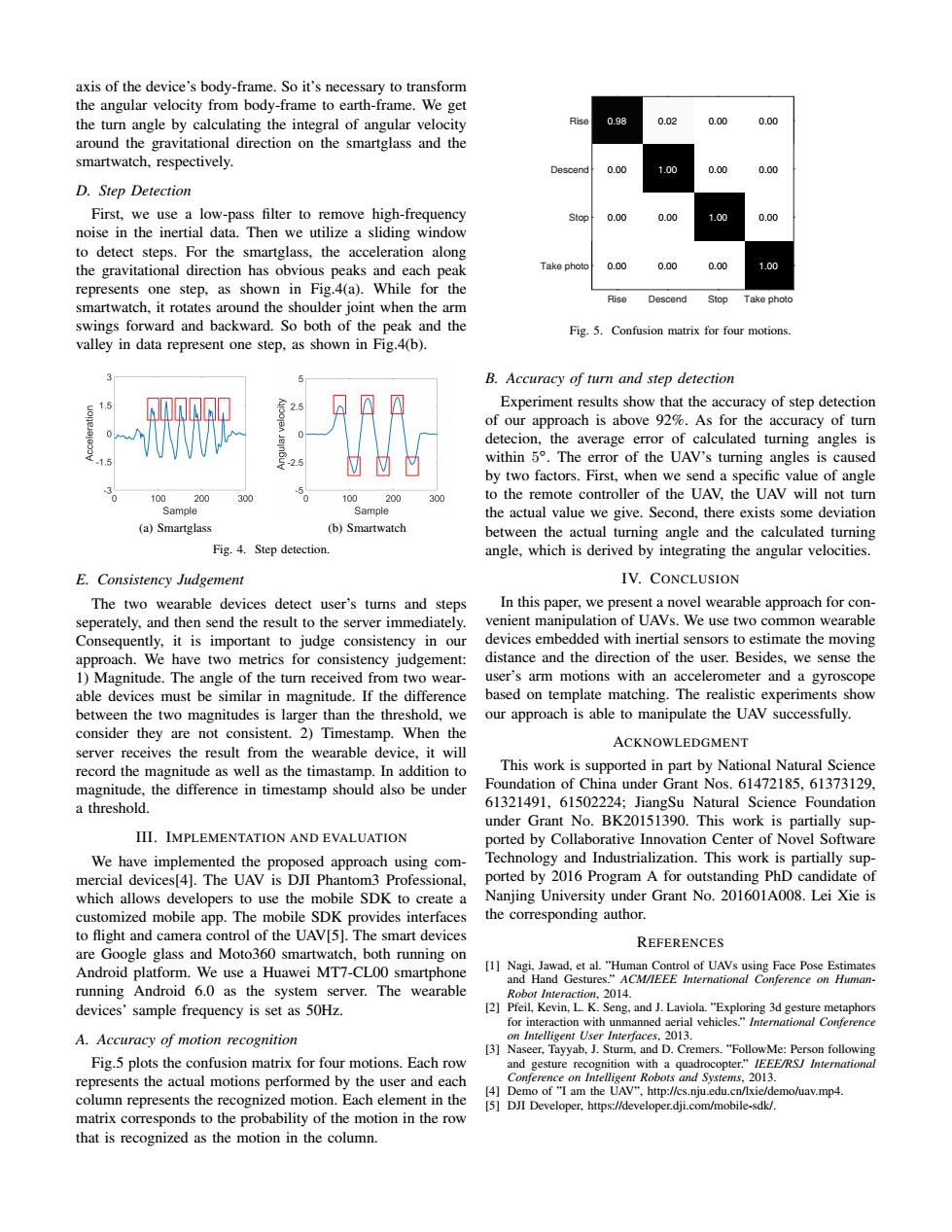

axis of the device's body-frame. It is therefore necessary to transform the angular velocity from the body frame to the earth frame. We obtain the turn angle by integrating the angular velocity around the gravitational direction on the smartglass and the smartwatch, respectively.

D. Step Detection

First, we use a low-pass filter to remove high-frequency noise from the inertial data. Then we use a sliding window to detect steps. For the smartglass, the acceleration along the gravitational direction has obvious peaks, and each peak represents one step, as shown in Fig. 4(a). The smartwatch, in contrast, rotates around the shoulder joint as the arm swings forward and backward.
So both the peaks and the valleys in the data represent one step, as shown in Fig. 4(b).

Fig. 4. Step detection: (a) Smartglass, (b) Smartwatch.

E. Consistency Judgement

The two wearable devices detect the user's turns and steps separately and send the results to the server immediately. Consequently, it is important to judge the consistency of these results in our approach. We use two metrics for the consistency judgement:

1) Magnitude. The turn angles received from the two wearable devices must be similar in magnitude. If the difference between the two magnitudes is larger than a threshold, we consider them inconsistent.
2) Timestamp. When the server receives a result from a wearable device, it records the magnitude as well as the timestamp. In addition to the magnitude, the difference between the timestamps must also be under a threshold.

III. IMPLEMENTATION AND EVALUATION

We have implemented the proposed approach using commercial devices [4]. The UAV is a DJI Phantom3 Professional, which allows developers to use the mobile SDK to create a customized mobile app. The mobile SDK provides interfaces for flight and camera control of the UAV [5]. The smart devices are a Google Glass and a Moto360 smartwatch, both running on the Android platform. We use a Huawei MT7-CL00 smartphone running Android 6.0 as the system server. The sampling frequency of the wearable devices is set to 50 Hz.

A. Accuracy of motion recognition

Fig. 5 plots the confusion matrix for the four motions. Each row represents the actual motion performed by the user and each column represents the recognized motion. Each element of the matrix is the probability that the motion in its row is recognized as the motion in its column.

| Actual / Recognized | Rise | Descend | Stop | Take photo |
|---|---|---|---|---|
| Rise | 0.98 | 0.02 | 0.00 | 0.00 |
| Descend | 0.00 | 1.00 | 0.00 | 0.00 |
| Stop | 0.00 | 0.00 | 1.00 | 0.00 |
| Take photo | 0.00 | 0.00 | 0.00 | 1.00 |

Fig. 5. Confusion matrix for four motions.

B. Accuracy of turn and step detection

Experimental results show that the step detection accuracy of our approach is above 92%.
As for the accuracy of turn detection, the average error of the calculated turning angles is within 5°. The error of the UAV's turning angles is caused by two factors. First, when we send a specific angle value to the remote controller of the UAV, the UAV does not turn by exactly the value we give. Second, there is some deviation between the actual turning angle and the calculated turning angle, which is derived by integrating the angular velocities.

IV. CONCLUSION

In this paper, we present a novel wearable approach for convenient manipulation of UAVs. We use two common wearable devices embedded with inertial sensors to estimate the moving distance and the direction of the user. In addition, we sense the user's arm motions with an accelerometer and a gyroscope based on template matching. Realistic experiments show that our approach is able to manipulate the UAV successfully.

ACKNOWLEDGMENT

This work is supported in part by the National Natural Science Foundation of China under Grant Nos. 61472185, 61373129, 61321491, 61502224, and by the JiangSu Natural Science Foundation under Grant No. BK20151390. This work is partially supported by the Collaborative Innovation Center of Novel Software Technology and Industrialization. This work is partially supported by the 2016 Program A for outstanding PhD candidate of Nanjing University under Grant No. 201601A008. Lei Xie is the corresponding author.

REFERENCES

[1] Nagi, Jawad, et al. "Human Control of UAVs using Face Pose Estimates and Hand Gestures." ACM/IEEE International Conference on Human-Robot Interaction, 2014.
[2] Pfeil, Kevin, L. K. Seng, and J. Laviola. "Exploring 3d gesture metaphors for interaction with unmanned aerial vehicles." International Conference on Intelligent User Interfaces, 2013.
[3] Naseer, Tayyab, J. Sturm, and D. Cremers. "FollowMe: Person following and gesture recognition with a quadrocopter." IEEE/RSJ International Conference on Intelligent Robots and Systems, 2013.
[4] Demo of "I am the UAV", http://cs.nju.edu.cn/lxie/demo/uav.mp4
[5] DJI Developer, https://developer.dji.com/mobile-sdk/
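
Supplementary sketch. The consistency judgement of subsection E can be illustrated with a few lines of Python. This is only a minimal sketch under stated assumptions, not the implementation used in the paper: the `TurnReport` type, the `is_consistent` function, and both threshold values are hypothetical, since the paper does not state the thresholds it uses.

```python
from dataclasses import dataclass


@dataclass
class TurnReport:
    """One turn reported by a wearable device, as recorded by the server."""
    device: str         # e.g. "smartglass" or "smartwatch"
    angle_deg: float    # turn angle in degrees
    timestamp_s: float  # time recorded by the server, in seconds


# Hypothetical thresholds; the paper does not give concrete values.
MAX_ANGLE_DIFF_DEG = 15.0
MAX_TIME_DIFF_S = 1.0


def is_consistent(a: TurnReport, b: TurnReport,
                  max_angle_diff: float = MAX_ANGLE_DIFF_DEG,
                  max_time_diff: float = MAX_TIME_DIFF_S) -> bool:
    """Return True if two turn reports agree in magnitude and in time."""
    # Metric 1: the two turn magnitudes must differ by no more than a threshold.
    magnitude_ok = abs(abs(a.angle_deg) - abs(b.angle_deg)) <= max_angle_diff
    # Metric 2: the two timestamps must also be close to each other.
    time_ok = abs(a.timestamp_s - b.timestamp_s) <= max_time_diff
    return magnitude_ok and time_ok


glass = TurnReport("smartglass", 88.0, 10.00)
watch = TurnReport("smartwatch", 93.0, 10.20)
print(is_consistent(glass, watch))
```

In this sketch a turn would be accepted only when both checks pass. Following the text literally, only magnitudes are compared, so a real system would probably also need to check that the two turn directions agree.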