
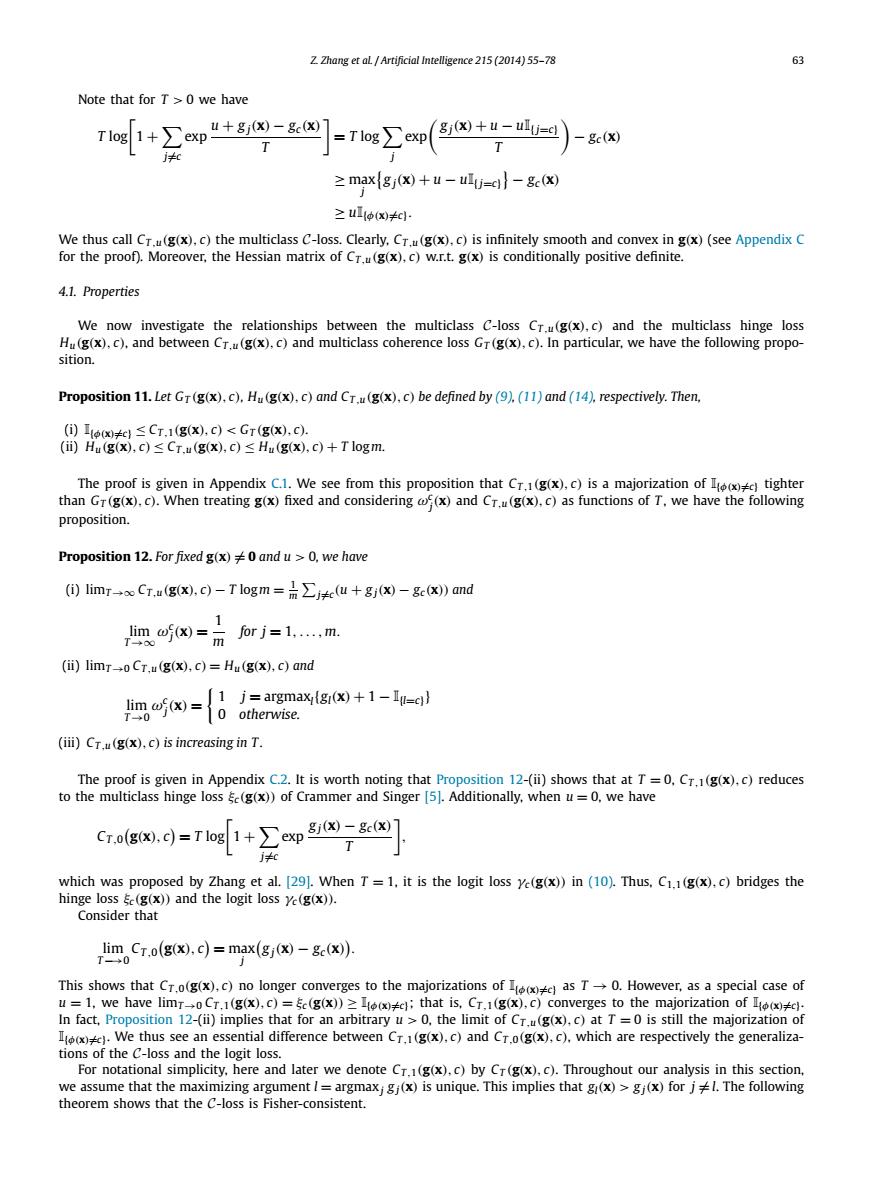

Z. Zhang et al. / Artificial Intelligence 215 (2014) 55–78
Note that for $T > 0$ we have

$$
T \log\Big[1 + \sum_{j \neq c} \exp\Big(\frac{u + g_j(x) - g_c(x)}{T}\Big)\Big]
= T \log \sum_{j} \exp\Big(\frac{g_j(x) + u - u I_{\{j=c\}}}{T}\Big) - g_c(x)
\geq \max_{j} \big\{ g_j(x) + u - u I_{\{j=c\}} \big\} - g_c(x)
\geq u I_{\{\phi(x) \neq c\}}.
$$

We thus call $C_{T,u}(g(x), c)$ the multiclass C-loss. Clearly, $C_{T,u}(g(x), c)$ is infinitely smooth and convex in $g(x)$ (see Appendix C for the proof). Moreover, the Hessian matrix of $C_{T,u}(g(x), c)$ w.r.t. $g(x)$ is conditionally positive definite.

4.1. Properties

We now investigate the relationships between the multiclass C-loss $C_{T,u}(g(x), c)$ and the multiclass hinge loss $H_u(g(x), c)$, and between $C_{T,u}(g(x), c)$ and the multiclass coherence loss $G_T(g(x), c)$. In particular, we have the following proposition.

Proposition 11. Let $G_T(g(x), c)$, $H_u(g(x), c)$ and $C_{T,u}(g(x), c)$ be defined by (9), (11) and (14), respectively. Then,

(i) $I_{\{\phi(x) \neq c\}} \leq C_{T,1}(g(x), c) < G_T(g(x), c)$.

(ii) $H_u(g(x), c) \leq C_{T,u}(g(x), c) \leq H_u(g(x), c) + T \log m$.

The proof is given in Appendix C.1. We see from this proposition that $C_{T,1}(g(x), c)$ is a majorization of $I_{\{\phi(x) \neq c\}}$ tighter than $G_T(g(x), c)$. When treating $g(x)$ as fixed and considering $\omega^c_j(x)$ and $C_{T,u}(g(x), c)$ as functions of $T$, we have the following proposition.

Proposition 12. For fixed $g(x) \neq 0$ and $u > 0$, we have

(i) $\lim_{T \to \infty} \big[ C_{T,u}(g(x), c) - T \log m \big] = \frac{1}{m} \sum_{j \neq c} \big( u + g_j(x) - g_c(x) \big)$ and $\lim_{T \to \infty} \omega^c_j(x) = \frac{1}{m}$ for $j = 1, \ldots, m$.

(ii) $\lim_{T \to 0} C_{T,u}(g(x), c) = H_u(g(x), c)$ and

$$
\lim_{T \to 0} \omega^c_j(x) =
\begin{cases}
1 & j = \operatorname{argmax}_l \{ g_l(x) + 1 - I_{\{l=c\}} \}, \\
0 & \text{otherwise.}
\end{cases}
$$

(iii) $C_{T,u}(g(x), c)$ is increasing in $T$.

The proof is given in Appendix C.2. It is worth noting that Proposition 12-(ii) shows that as $T \to 0$, $C_{T,1}(g(x), c)$ reduces to the multiclass hinge loss $\xi_c(g(x))$ of Crammer and Singer [5]. Additionally, when $u = 0$, we have

$$
C_{T,0}(g(x), c) = T \log\Big[1 + \sum_{j \neq c} \exp\Big(\frac{g_j(x) - g_c(x)}{T}\Big)\Big],
$$

which was proposed by Zhang et al. [29]. When $T = 1$, it is the logit loss $\gamma_c(g(x))$ in (10). Thus, $C_{1,1}(g(x), c)$ bridges the hinge loss $\xi_c(g(x))$ and the logit loss $\gamma_c(g(x))$.

Consider that

$$
\lim_{T \to 0} C_{T,0}(g(x), c) = \max_{j} \big\{ g_j(x) - g_c(x) \big\}.
$$

This shows that $C_{T,0}(g(x), c)$ no longer converges to a majorization of $I_{\{\phi(x) \neq c\}}$ as $T \to 0$. However, in the special case $u = 1$, we have $\lim_{T \to 0} C_{T,1}(g(x), c) = \xi_c(g(x)) \geq I_{\{\phi(x) \neq c\}}$; that is, $C_{T,1}(g(x), c)$ converges to a majorization of $I_{\{\phi(x) \neq c\}}$. In fact, Proposition 12-(ii) implies that for an arbitrary $u > 0$, the limit of $C_{T,u}(g(x), c)$ at $T = 0$ is still a majorization of $I_{\{\phi(x) \neq c\}}$. We thus see an essential difference between $C_{T,1}(g(x), c)$ and $C_{T,0}(g(x), c)$, which are respectively the generalizations of the C-loss and the logit loss.

For notational simplicity, here and later we denote $C_{T,1}(g(x), c)$ by $C_T(g(x), c)$. Throughout our analysis in this section, we assume that the maximizing argument $l = \operatorname{argmax}_j g_j(x)$ is unique. This implies that $g_l(x) > g_j(x)$ for $j \neq l$. The following theorem shows that the C-loss is Fisher-consistent.
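Before turning to consistency, the bounds in Proposition 11-(ii) and the limits in Proposition 12 can be sanity-checked numerically. The following is a minimal sketch, not the authors' code. It assumes that $H_u(g(x), c)$ has the form $\max_j \{g_j(x) + u - u I_{\{j=c\}}\} - g_c(x)$, as suggested by the inequality chain at the start of this page, since definition (11) is not reproduced here. The function names `c_loss` and `hinge_loss` and the score vector `g` are illustrative choices.

```python
import math


def shifted_margins(g, c, u):
    """Return a_j = g_j + u - u*I{j=c} - g_c for every class j (a_c = 0)."""
    return [(gj - g[c]) + (0.0 if j == c else u) for j, gj in enumerate(g)]


def c_loss(g, c, T, u):
    """C_{T,u} = T * log(1 + sum_{j != c} exp((u + g_j - g_c) / T)).

    Evaluated as a log-sum-exp with the largest margin factored out,
    which avoids overflow when T is small.
    """
    a = shifted_margins(g, c, u)
    top = max(a)
    return top + T * math.log(sum(math.exp((aj - top) / T) for aj in a))


def hinge_loss(g, c, u):
    """Assumed form of H_u: max_j {g_j + u - u*I{j=c}} - g_c."""
    return max(shifted_margins(g, c, u))


# Illustrative scores for m = 4 classes, true class c = 0, margin u = 1.
g = [0.3, -1.2, 0.8, 0.1]
c, u = 0, 1.0
m = len(g)
h = hinge_loss(g, c, u)

temps = (0.01, 0.1, 1.0, 10.0)
values = [c_loss(g, c, T, u) for T in temps]

# Limit of C_{T,u} - T*log(m) as T grows, per Proposition 12-(i).
large_T = 1e4
shifted = c_loss(g, c, large_T, u) - large_T * math.log(m)
limit_i = sum(a for j, a in enumerate(shifted_margins(g, c, u)) if j != c) / m

for T, v in zip(temps, values):
    print(f"T={T:<5} C={v:.6f}  H={h:.6f}  H+T*log(m)={h + T * math.log(m):.6f}")
print(f"C - T*log(m) at T={large_T:g}: {shifted:.6f}  (predicted limit {limit_i:.6f})")
```

For each temperature the printed value of $C_{T,u}$ should lie between $H_u$ and $H_u + T \log m$, grow with $T$, and approach $H_u$ as $T$ shrinks.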