正在加载图片...

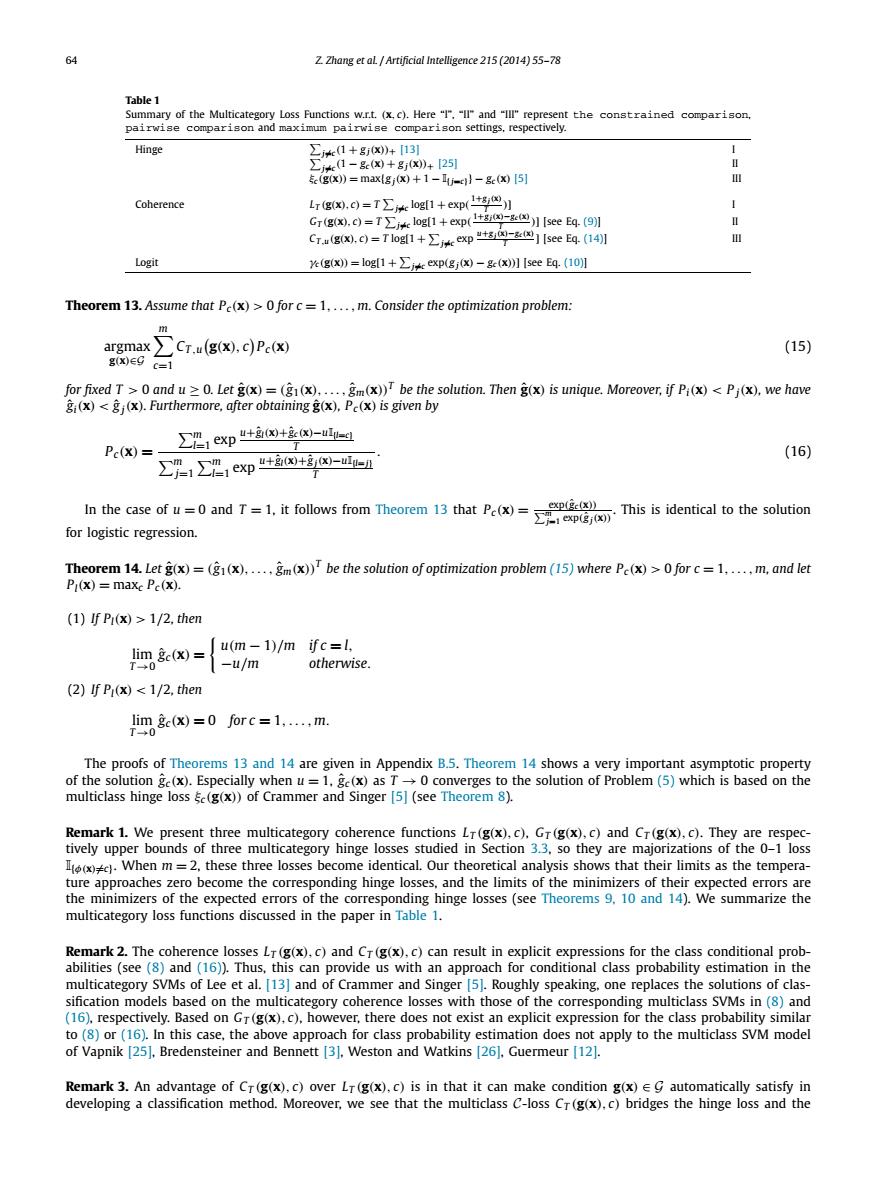

Z Zhang et aL Artificial Intelligence 215(2014)55-78 Table 1 Summary of the Multicategory Loss Functions w.r.t.(x,c).Here "I","II"and "Ill"represent the constrained comparison, pairwise comparison and maximum pairwise comparison settings.respectively. Hinge ∑j*(1+j)+13引 ∑j*e(1-8x+gx)+I251 (g(x))maxlgj(x)+1-Ij-c))-ge(x)[5] 分 Coherence rgW.c)=T∑*elog[1+exp(中巴】 Grg0.c0=T∑logl1+exp(+8r-3四】seE4(91 Cug.c0=T1og1+∑kexp-四1IseE4141 Logit Ye(g(x))=log[1+j exp(gj(x)-ge(x))]Isee Eq.(10)] Theorem 13.Assume that Pe(x)>0 for c=1....,m.Consider the optimization problem: m argmax Cr.u(g(x).c)Pc(x) (15) g(X)EG c=1 for fixed T>0 and u =0.Letg(x)=(g1(x).....gm(x))be the solution.Then g(x)is unique.Moreover,if Pi(x)<Pj(x),we have gi(x)<gj(x).Furthermore,after obtainingg(x),Pc(x)is given by Pc(X)=- ∑1exp"+8W+多6-ue型 -1∑1exp+W+W- (16) the caseofad1.it folws rom Therem that PThis is identical to the soution for logistic regression. Theorem 14.Letg(x)=((x).....gm(x))be the solution of optimization problem(15)where Pc(x)>0 for c=1....,m,and let Pi(x)=maxc Pc(x). (1)If Pi(x)>1/2.then u(m-1)/m ifc=I, m8(x= -u/m otherwise. (2)If P(x)<1/2,then lim ge(x)=0 forc=1,...,m. T0 The proofs of Theorems 13 and 14 are given in Appendix B.5.Theorem 14 shows a very important asymptotic property of the solution ge(x).Especially when u =1.ge(x)as T0 converges to the solution of Problem(5)which is based on the multiclass hinge loss Ec(g(x))of Crammer and Singer [5](see Theorem 8). Remark 1.We present three multicategory coherence functions Lr(g(x),c).Gr(g(x).c)and Cr(g(x),c).They are respec- tively upper bounds of three multicategory hinge losses studied in Section 3.3.so they are majorizations of the 0-1 loss ).When m=2.these three losses become identical.Our theoretical analysis shows that their limits as the tempera- ture approaches zero become the corresponding hinge losses,and the limits of the minimizers of their expected errors are the minimizers of the expected errors of the corresponding hinge losses(see Theorems 9.10 and 14).We summarize the multicategory loss functions discussed in the paper in Table 1. Remark 2.The coherence losses Lr(g(x).c)and Cr(g(x),c)can result in explicit expressions for the class conditional prob- abilities(see(8)and(16)).Thus,this can provide us with an approach for conditional class probability estimation in the multicategory SVMs of Lee et al.[13]and of Crammer and Singer [5].Roughly speaking,one replaces the solutions of clas- sification models based on the multicategory coherence losses with those of the corresponding multiclass SVMs in(8)and (16).respectively.Based on Gr(g(x).c),however,there does not exist an explicit expression for the class probability similar to(8)or(16).In this case,the above approach for class probability estimation does not apply to the multiclass SVM model of Vapnik [25],Bredensteiner and Bennett [3].Weston and Watkins [26].Guermeur [12]. Remark 3.An advantage of Cr(g(x),c)over Lr(g(x).c)is in that it can make condition g(x)EG automatically satisfy in developing a classification method.Moreover,we see that the multiclass C-loss Cr(g(x).c)bridges the hinge loss and the64 Z. Zhang et al. / Artificial Intelligence 215 (2014) 55–78 Table 1 Summary of the Multicategory Loss Functions w.r.t. (x, c). Here “I”, “II” and “III” represent the constrained comparison, pairwise comparison and maximum pairwise comparison settings, respectively. Hinge j=c (1 + g j(x))+ [13] I j=c (1 − gc (x) + g j(x))+ [25] II ξc (g(x)) = max{g j(x) + 1 − I{j=c}} − gc (x) [5] III Coherence LT (g(x), c) = T j=c log[1 + exp( 1+g j(x) T )] I GT (g(x), c) = T j=c log[1 + exp( 1+g j(x)−gc (x) T )] [see Eq. (9)] II CT ,u (g(x), c) = T log[1 + j=c exp u+g j(x)−gc (x) T ] [see Eq. (14)] III Logit γc (g(x)) = log[1 + j=c exp(g j(x) − gc (x))] [see Eq. (10)] Theorem 13. Assume that Pc(x) > 0 for c = 1,...,m. Consider the optimization problem: argmax g(x)∈G m c=1 CT ,u g(x), c Pc (x) (15) for fixed T > 0 and u ≥ 0. Let gˆ(x) = (gˆ 1(x),..., gˆm(x))T be the solution. Then gˆ(x) is unique. Moreover, if Pi(x) < P j(x), we have gˆi(x) < gˆ j(x). Furthermore, after obtaining gˆ(x), Pc (x) is given by Pc (x) = m l=1 exp u+gˆl(x)+gˆ c (x)−uI{l=c} T m j=1 m l=1 exp u+gˆl(x)+gˆ j(x)−uI{l= j} T . (16) In the case of u = 0 and T = 1, it follows from Theorem 13 that Pc (x) = exp(gˆ c (x)) m j=1 exp(gˆ j(x)) . This is identical to the solution for logistic regression. Theorem 14. Let gˆ(x) = (gˆ 1(x),..., gˆm(x))T be the solution of optimization problem (15) where Pc (x) > 0 for c = 1,...,m, and let Pl(x) = maxc Pc (x). (1) If Pl(x) > 1/2, then lim T→0 gˆ c (x) = u(m − 1)/m if c = l, −u/m otherwise. (2) If Pl(x) < 1/2, then lim T→0 gˆ c (x) = 0 for c = 1,...,m. The proofs of Theorems 13 and 14 are given in Appendix B.5. Theorem 14 shows a very important asymptotic property of the solution gˆ c (x). Especially when u = 1, gˆ c (x) as T → 0 converges to the solution of Problem (5) which is based on the multiclass hinge loss ξc (g(x)) of Crammer and Singer [5] (see Theorem 8). Remark 1. We present three multicategory coherence functions LT (g(x), c), GT (g(x), c) and CT (g(x), c). They are respectively upper bounds of three multicategory hinge losses studied in Section 3.3, so they are majorizations of the 0–1 loss I{φ(x)=c}. When m = 2, these three losses become identical. Our theoretical analysis shows that their limits as the temperature approaches zero become the corresponding hinge losses, and the limits of the minimizers of their expected errors are the minimizers of the expected errors of the corresponding hinge losses (see Theorems 9, 10 and 14). We summarize the multicategory loss functions discussed in the paper in Table 1. Remark 2. The coherence losses LT (g(x), c) and CT (g(x), c) can result in explicit expressions for the class conditional probabilities (see (8) and (16)). Thus, this can provide us with an approach for conditional class probability estimation in the multicategory SVMs of Lee et al. [13] and of Crammer and Singer [5]. Roughly speaking, one replaces the solutions of classification models based on the multicategory coherence losses with those of the corresponding multiclass SVMs in (8) and (16), respectively. Based on GT (g(x), c), however, there does not exist an explicit expression for the class probability similar to (8) or (16). In this case, the above approach for class probability estimation does not apply to the multiclass SVM model of Vapnik [25], Bredensteiner and Bennett [3], Weston and Watkins [26], Guermeur [12]. Remark 3. An advantage of CT (g(x), c) over LT (g(x), c) is in that it can make condition g(x) ∈ G automatically satisfy in developing a classification method. Moreover, we see that the multiclass C-loss CT (g(x), c) bridges the hinge loss and the�