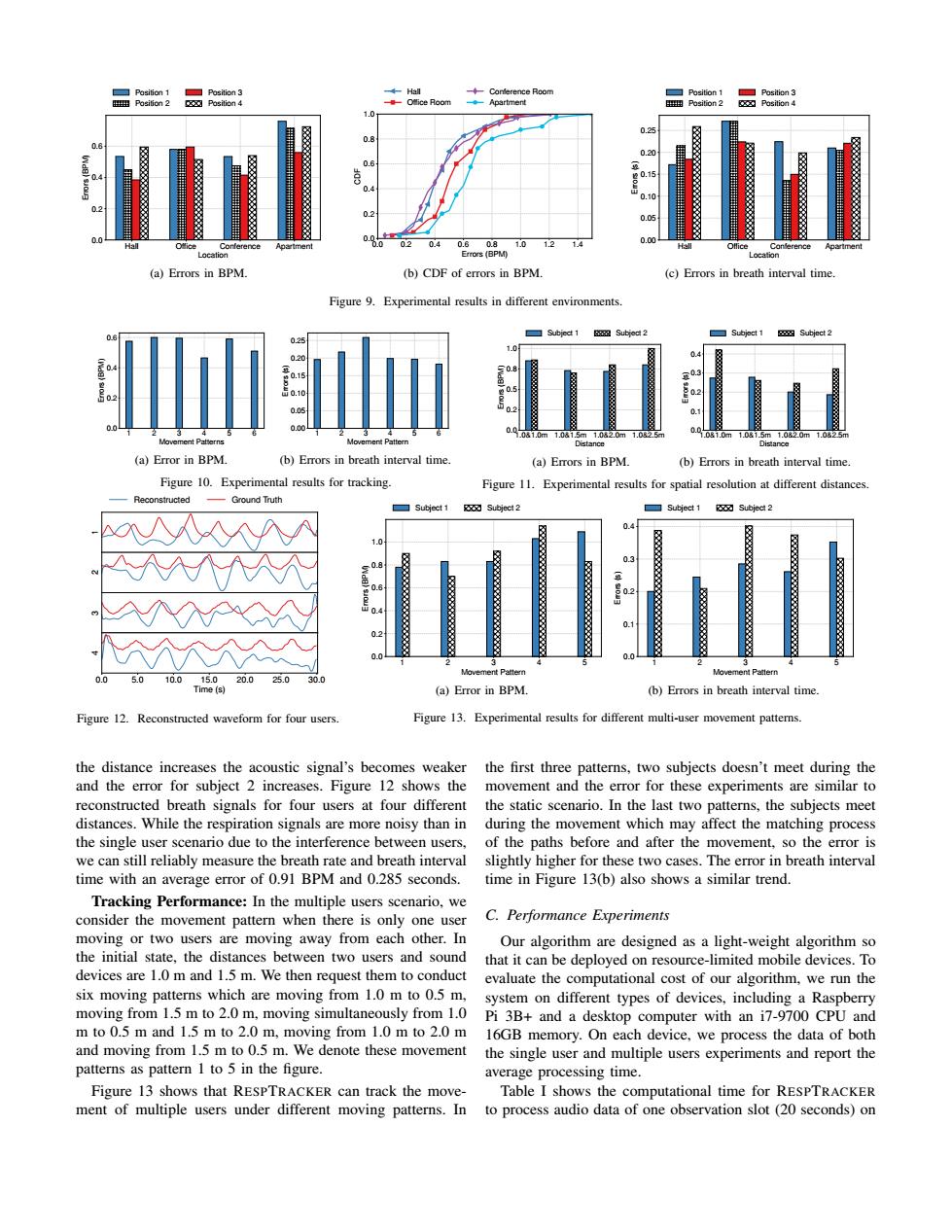

Figure 9. Experimental results in different environments. (a) Errors in BPM. (b) CDF of errors in BPM. (c) Errors in breath interval time. Panels (a) and (c) plot the error at Positions 1 to 4 for each location (Hall, Office, Conference, Apartment); panel (b) plots the CDF against the error in BPM for each location.

Figure 10. Experimental results for tracking. (a) Error in BPM. (b) Errors in breath interval time. Both panels plot the error against movement patterns 1 to 6.
Figure 11. Experimental results for spatial resolution at different distances. (a) Errors in BPM. (b) Errors in breath interval time. Both panels plot the error for Subject 1 and Subject 2 at distances of 1.0 & 1.0 m, 1.0 & 1.5 m, 1.0 & 2.0 m, and 1.0 & 2.5 m.

Figure 12. Reconstructed waveform for four users. Reconstructed and ground-truth signals for users 1 to 4, plotted against time (0 to 30 s).

Figure 13. Experimental results for different multi-user movement patterns. (a) Error in BPM. (b) Errors in breath interval time. Both panels plot the error for Subject 1 and Subject 2 against movement patterns 1 to 5.

the distance increases, the acoustic signal becomes weaker and the error for subject 2 increases. Figure 12 shows the reconstructed breath signals for four users at four different distances. While the respiration signals are noisier than in the single-user scenario due to interference between users, we can still reliably measure the breath rate and the breath interval time, with average errors of 0.91 BPM and 0.285 seconds, respectively.

Tracking Performance: In the multiple-user scenario, we consider movement patterns in which either only one user moves or two users move away from each other. In the initial state, the distances between the two users and the sound devices are 1.0 m and 1.5 m, respectively. We then ask them to perform five movement patterns: moving from 1.0 m to 0.5 m; moving from 1.5 m to 2.0 m; moving simultaneously from 1.0 m to 0.5 m and from 1.5 m to 2.0 m; moving from 1.0 m to 2.0 m; and moving from 1.5 m to 0.5 m. We denote these movement patterns as patterns 1 to 5 in the figure. Figure 13 shows that RESPTRACKER can track the movement of multiple users under different movement patterns.
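Movement patterns 1 to 5 described above can be written down as start and end distances for each subject. The following is only an illustrative sketch, not part of RESPTRACKER: it assumes that a subject who is not mentioned in a pattern stays at the initial distance, and that two subjects "meet" when the ordering of their distances to the device flips during the movement. The names `Pattern`, `PATTERNS`, and `ranges_cross` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pattern:
    """Start and end distance (in metres) of each subject from the sound device."""
    s1_start: float
    s1_end: float
    s2_start: float
    s2_end: float


# Distances taken from the text. Assumption: a subject that is not
# mentioned in a pattern stays at the initial distance (1.0 m or 1.5 m).
PATTERNS = {
    1: Pattern(1.0, 0.5, 1.5, 1.5),
    2: Pattern(1.0, 1.0, 1.5, 2.0),
    3: Pattern(1.0, 0.5, 1.5, 2.0),
    4: Pattern(1.0, 2.0, 1.5, 1.5),
    5: Pattern(1.0, 1.0, 1.5, 0.5),
}


def ranges_cross(p: Pattern) -> bool:
    """Return True if the subjects' distance ordering flips during the movement."""
    before = p.s1_start - p.s2_start
    after = p.s1_end - p.s2_end
    # A sign change means the two subjects were at the same distance at some point.
    return before * after < 0


# Patterns in which the two subjects pass through the same distance.
crossing = [k for k, p in PATTERNS.items() if ranges_cross(p)]
print(crossing)
```

Under these assumptions, only the last two patterns involve the subjects passing through the same distance from the device.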
In the first three patterns, the two subjects do not meet during the movement, and the errors for these experiments are similar to those in the static scenario. In the last two patterns, the subjects meet during the movement, which may affect the matching of the paths before and after the movement, so the error is slightly higher for these two cases. The error in breath interval time in Figure 13(b) shows a similar trend.

C. Performance Experiments

Our algorithm is designed to be lightweight so that it can be deployed on resource-limited mobile devices. To evaluate its computational cost, we run the system on different types of devices, including a Raspberry Pi 3B+ and a desktop computer with an i7-9700 CPU and 16 GB of memory. On each device, we process the data from both the single-user and multiple-user experiments and report the average processing time. Table I shows the computational time for RESPTRACKER to process the audio data of one observation slot (20 seconds) on