
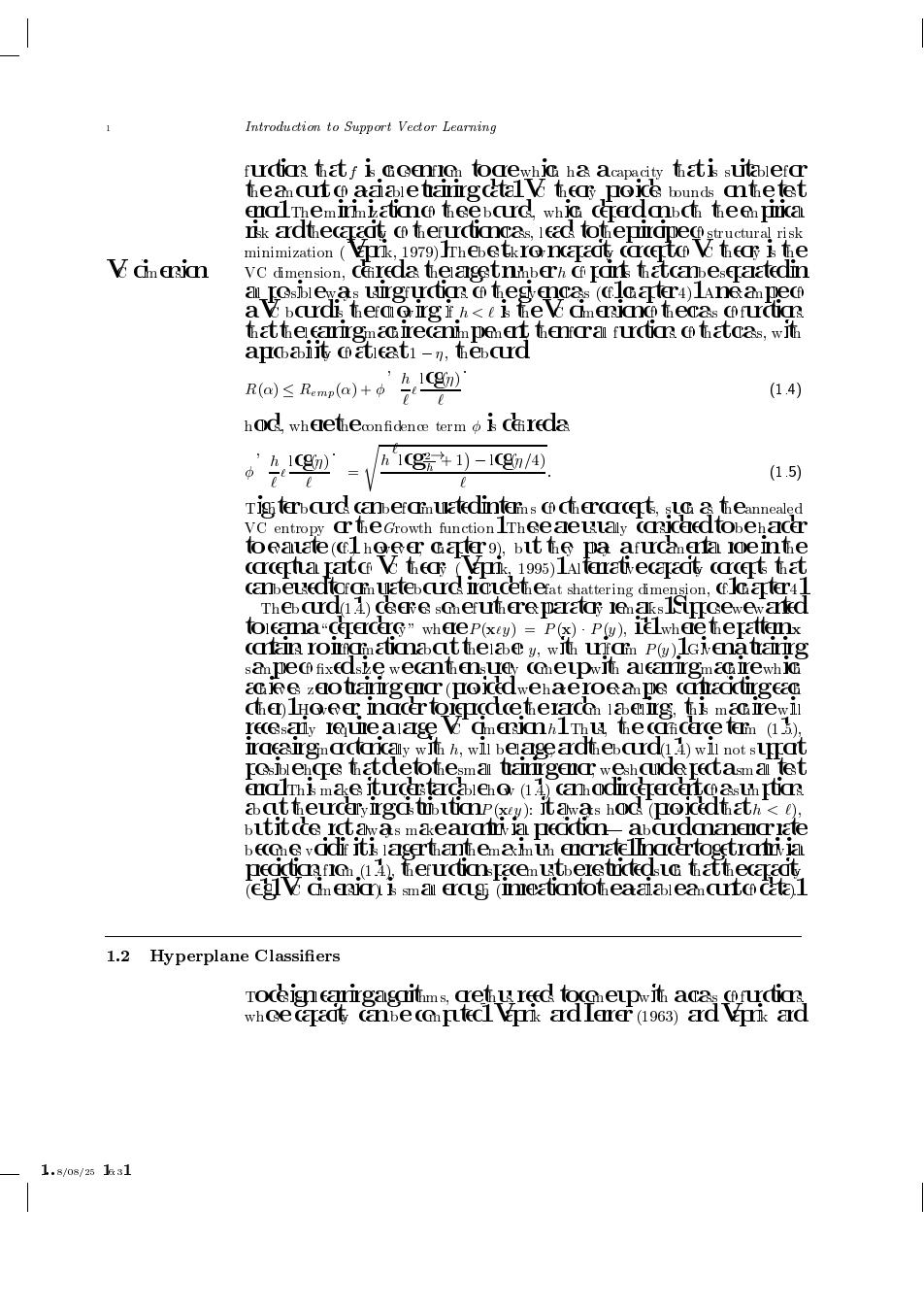

1 Introduction to Support Vector Learning

functions that $f$ is chosen from to one which has a capacity that is suitable for the amount of available training data. VC theory provides bounds on the test error. The minimization of these bounds, which depend on both the empirical risk and the capacity of the function class, leads to the principle of structural risk minimization (Vapnik, 1979). The best-known capacity concept of VC theory is the VC dimension, defined as the largest number $h$ of points that can be separated in all possible ways using functions of the given class (cf. chapter 4). An example of a VC bound is the following:

if $h < \ell$ is the VC dimension of the class of functions that the learning machine can implement, then for all functions of that class, with a probability of at least $1 - \eta$, the bound

$$R(\alpha) \le R_{\mathrm{emp}}(\alpha) + \phi\!\left(\frac{h}{\ell}, \frac{\log(\eta)}{\ell}\right) \tag{1.4}$$

holds, where the confidence term $\phi$ is defined as

$$\phi\!\left(\frac{h}{\ell}, \frac{\log(\eta)}{\ell}\right) = \sqrt{\frac{h\left(\log\frac{2\ell}{h} + 1\right) - \log(\eta/4)}{\ell}}. \tag{1.5}$$

Tighter bounds can be formulated in terms of other concepts, such as the annealed VC entropy or the Growth function. These are usually considered to be harder to evaluate (cf., however, chapter 9), but they play a fundamental role in the conceptual part of VC theory (Vapnik, 1995). Alternative capacity concepts that can be used to formulate bounds include the fat shattering dimension (cf. chapter 4).

The bound (1.4) deserves some further explanatory remarks. Suppose we wanted to learn a "dependency" where $P(x, y) = P(x) \cdot P(y)$, i.e. where the pattern $x$ contains no information about the label $y$, with uniform $P(y)$. Given a training sample of fixed size, we can then surely come up with a learning machine which achieves zero training error (provided we have no examples contradicting each other). However, in order to reproduce the random labellings, this machine will necessarily require a large VC dimension $h$. Thus, the confidence term (1.5), increasing monotonically with $h$, will be large, and the bound (1.4) will not support possible hopes that, due to the small training error, we should expect a small test error. This makes it understandable how (1.4) can hold independent of assumptions about the underlying distribution $P(x, y)$: it always holds (provided that $h < \ell$), but it does not always make a nontrivial prediction, since a bound on an error rate becomes void if it is larger than the maximum error rate. In order to get nontrivial predictions from (1.4), the function space must be restricted such that the capacity (e.g. VC dimension) is small enough in relation to the available amount of data.

1.2 Hyperplane Classifiers

To design learning algorithms, one thus needs to come up with a class of functions whose capacity can be computed. Vapnik and Lerner (1963) and Vapnik and
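As a numerical aside on the VC bound (1.4) and its confidence term (1.5) discussed earlier, the following minimal Python sketch evaluates the bound for a small and a large VC dimension. The function names (`vc_confidence`, `vc_bound`, `is_nontrivial`) and the example values of `h`, `ell`, and `eta` are illustrative assumptions, not taken from the text; `ell` is assumed to denote the number of training examples and `eta` the allowed failure probability.

```python
import math


def vc_confidence(h, ell, eta):
    """Confidence term (1.5): sqrt((h * (log(2*ell/h) + 1) - log(eta/4)) / ell).

    h   : VC dimension of the function class (the bound assumes 0 < h < ell)
    ell : number of training examples (assumed meaning of the symbol)
    eta : the bound holds with probability at least 1 - eta
    """
    if not (0 < h < ell):
        raise ValueError("the bound requires 0 < h < ell")
    if not (0 < eta < 1):
        raise ValueError("eta must lie in (0, 1)")
    numerator = h * (math.log(2 * ell / h) + 1) - math.log(eta / 4)
    return math.sqrt(numerator / ell)


def vc_bound(emp_risk, h, ell, eta):
    """Right-hand side of (1.4): empirical risk plus the confidence term."""
    return emp_risk + vc_confidence(h, ell, eta)


def is_nontrivial(bound, max_error=1.0):
    """A bound on an error rate is void once it reaches the maximum error rate."""
    return bound < max_error


if __name__ == "__main__":
    ell, eta = 10_000, 0.05  # hypothetical sample size and failure probability
    for h in (100, 9_000):   # hypothetical small and large capacities
        bound = vc_bound(0.0, h, ell, eta)  # zero training error in both cases
        print(f"h={h}: bound={bound:.3f}, nontrivial={is_nontrivial(bound)}")
```

With zero training error in both cases, only the low-capacity setting gives a bound below the maximum error rate, which mirrors the random-labelling argument: a machine that needs a large $h$ to fit the labels receives no guarantee from (1.4).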