正在加载图片...

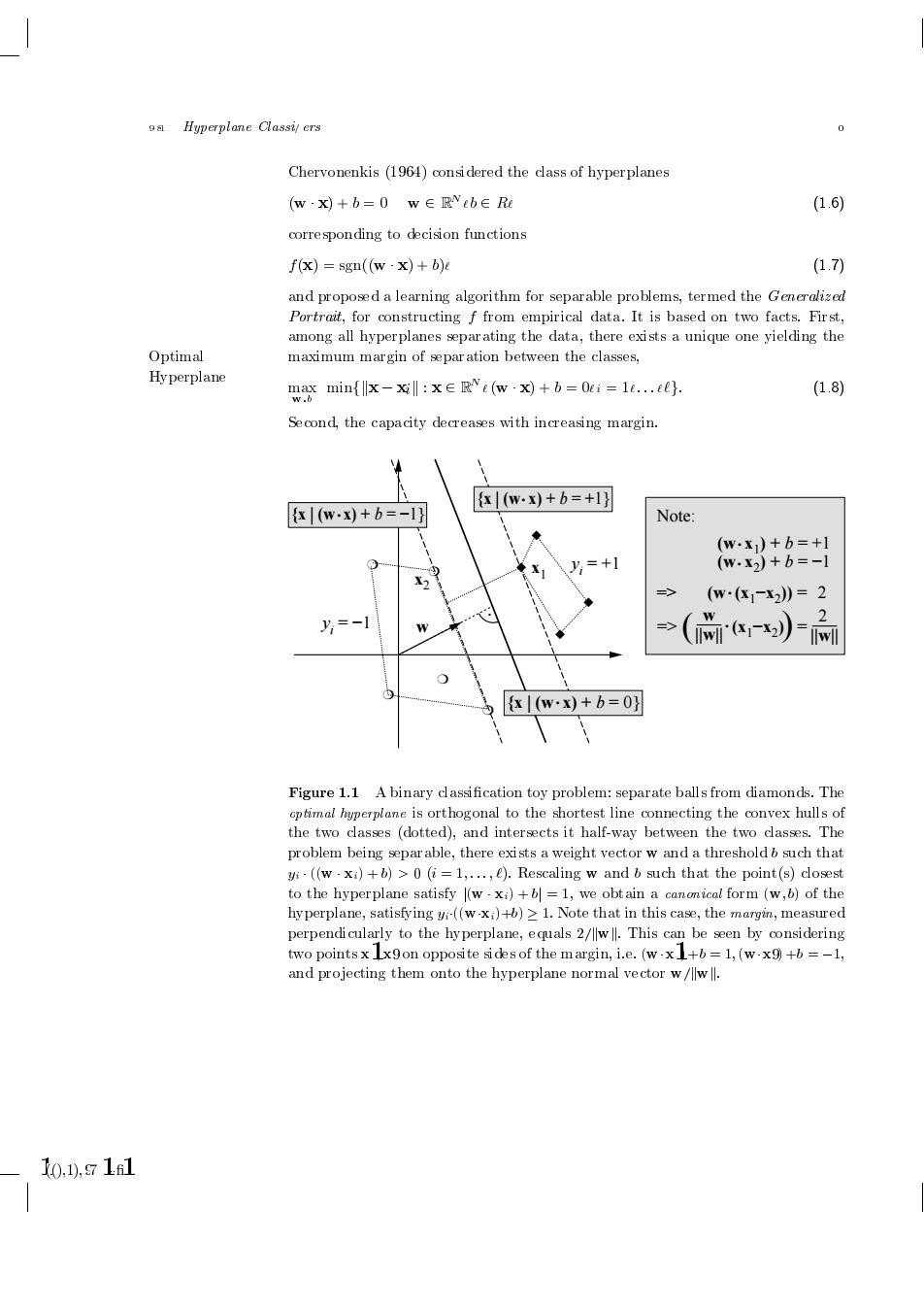

oa Hyperplane Classi/ers Chervonenkis(1964)considered the cass of hyperplanes (w:X)+b=0w∈Rveb∈Re (1.6) corresponding to decision functions f(x)=gn(w·x)+b)0 (1.7) and proposed a learning algorithm for separable problems,termed the Generalized Portrait,for constructing f from empirical data.It is based on two facts.First, among all hyperplanes separating the data,there exists a unique one yielding the Optimal maximum margin of separ ation between the classes, Hy perplane minfx-为l:x∈Rvc(w-xy+b=0ei=1..t0. (1.8) Second,the capacity decreases with increasing margin. {在(w.x)+b=+1} {x|(wx)+b=-1} Note: (w.x)+b=+1 X =+1 (w.x2+b=-1 => (w·(1-x2》=2 红 => (同动)=奇 {x|(wx)+b=0} Figure 1.1 A binary cassification toy problem:separate balls from diamonds.The optimal hyperplane is orthogonal to the shortest line connecting the convex hulls of the two classes (dotted),and intersects it half-way between the two casses.The problem being separ able,there exists a weight vector w and a threshold b such that i((w.x)+b)>0(i=1,...,e).Rescaling w and b such that the point(s)closest to the hyperplane satisfy (wxi)+b=1,we obt ain a canomical form(w,b)of the hy perplane,satisfying y((wx:)+b)>1.Note that in this case,the margin,measured perpendicularly to the hyperplane,equals 2/wll.This can be seen by considering two points x x9on opposite sides of the margin,i.e.(wx6=1,(w-x9+=-1, and projecting them onto the hyperplane normal vector w/wll. 10,1,91l Hyperplane Classiers Chervonenkis

considered the class of hyperplanes w x b w RN b R corresponding to decision functions f x sgnw x b and proposed a learning algorithm for separable problems termed the Generalized Portrait for constructing f from empirical data It is based on two facts First among all hyperplanes separating the data there exists a unique one yielding the Optimal maximum margin of separation between the classes Hyperplane max wb minfkx xik

x RN w x b i g Second the capacity decreases with increasing margin . w {x | (w x) + . b = 0} {x | (w x) + . b = −1} {x | (w x) + . b = +1} x2 x1 Note: (w x1) + b = +1 (w x2) + b = −1 => (w (x1−x2)) = 2 => (x1−x2) = w (||w|| ) . . . . 2 ||w|| yi = −1 yi ❍ = +1 ❍ ❍ ❍ ❍ ◆ ◆ ◆ ◆ Figure A binary classi cation toy problem

separate balls from diamonds The optimal hyperplane is orthogonal to the shortest line connecting the convex hulls of the two classes dotted and intersects it halfway between the two classes The problem being separable there exists a weight vector w and a threshold b such that yi w xi b i Rescaling w and b such that the points closest to the hyperplane satisfy jw xi bj we obtain a canonical form w b of the hyperplane satisfying yi wxib Note that in this case the margin measured perpendicularly to the hyperplane equals kwk This can be seen by considering two points x x on opposite sides of the margin ie wxb wxb and pro jecting them onto the hyperplane normal vector wkwk���������������������������������������������������