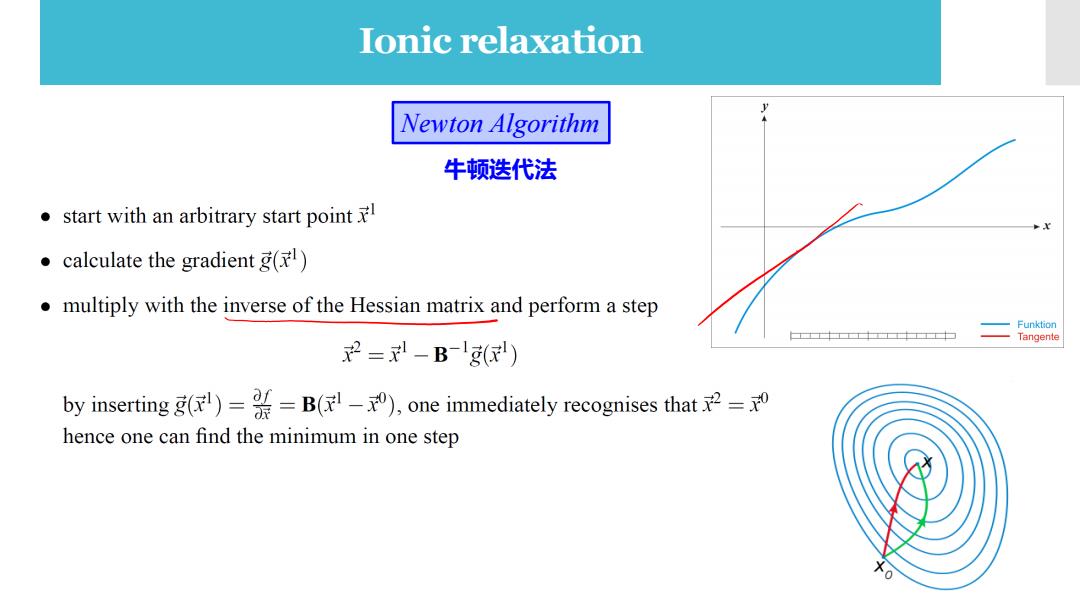

Ionic relaxation: Newton algorithm

- Start with an arbitrary start point $x^1$.
- Calculate the gradient $g(x^1)$.
- Multiply with the inverse of the Hessian matrix $B$ and perform a step:

$$x^2 = x^1 - B^{-1} g(x^1)$$

By inserting $g(x^1) = B(x^1 - x^0)$, where $x^0$ is the minimum of the quadratic energy surface, one immediately recognises that $x^2 = x^0$; hence one can find the minimum in one step.
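
As a minimal numerical sketch of the Newton step $x^2 = x^1 - B^{-1} g(x^1)$, the snippet below applies it to a purely quadratic energy surface. The matrix `B`, the minimum `x0`, the start point `x1`, and the helper names `gradient` and `newton_step` are illustrative assumptions, not taken from the slide. The linear system is solved directly rather than forming the inverse explicitly, which is a common numerical choice and not something the slide prescribes.

```python
import numpy as np

def newton_step(x, gradient, hessian):
    """Return x - B^{-1} g(x), solving B d = g(x) instead of inverting B."""
    return x - np.linalg.solve(hessian, gradient(x))

# Hypothetical quadratic energy surface E(x) = 1/2 (x - x0)^T B (x - x0).
B = np.array([[4.0, 1.0],
              [1.0, 3.0]])      # assumed symmetric positive-definite Hessian
x0 = np.array([1.0, -2.0])      # assumed location of the minimum

def gradient(x):
    """Gradient of the quadratic surface: g(x) = B (x - x0)."""
    return B @ (x - x0)

x1 = np.array([10.0, 7.0])      # arbitrary start point
x2 = newton_step(x1, gradient, B)
```

For this exactly quadratic surface, `x2` coincides with `x0` up to rounding, so the gradient vanishes after a single step. On a real potential energy surface the Hessian is only approximately known and the surface is not exactly quadratic, so several steps are generally needed.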