正在加载图片...

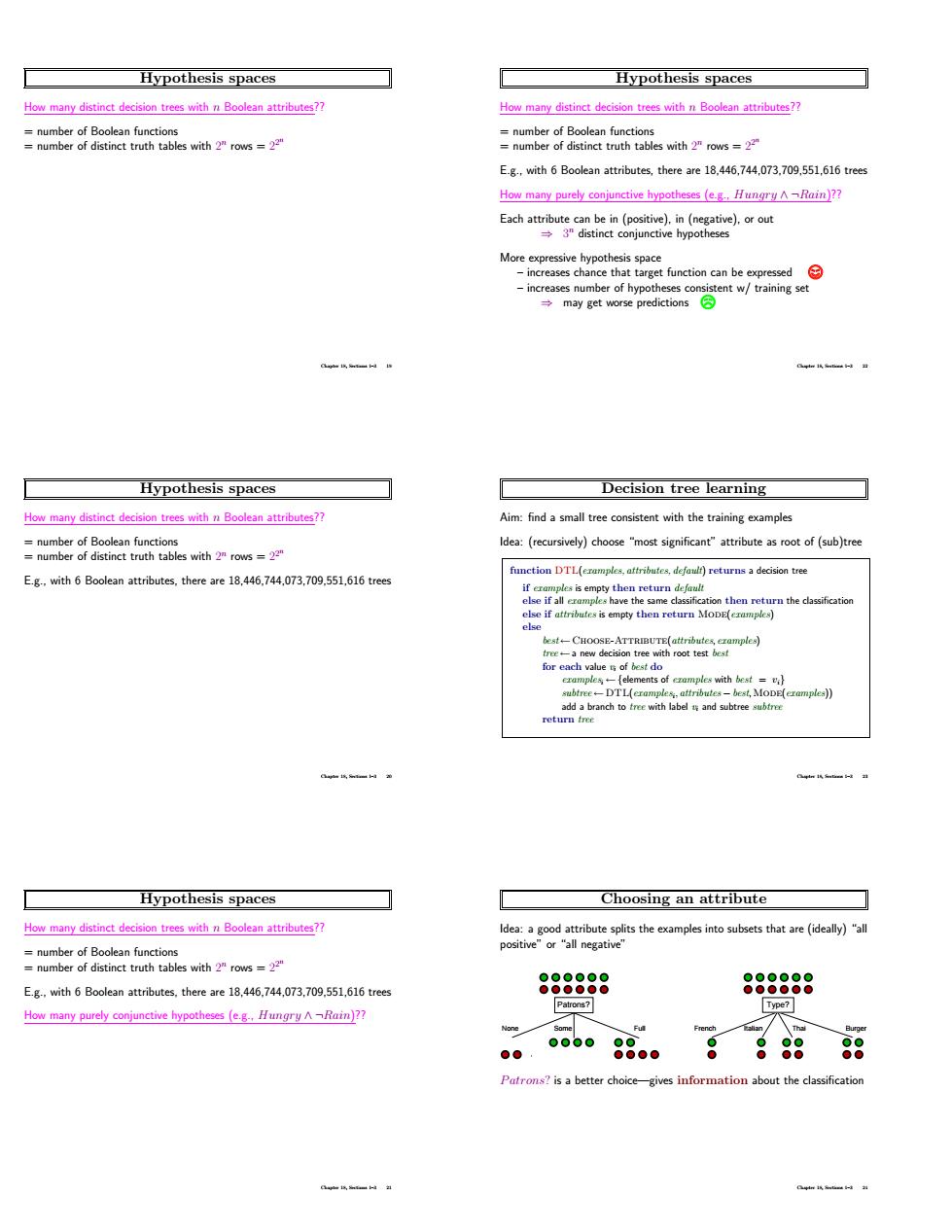

Hypothesis spaces Hypothesis spaces How many distinct decision trees with n Boolean attributes? How many purely conjunctive hypotheses (e.g-.Hungry ARain)? ncre may get Hypothesis spaces Decision tree learning dea:(recursively)choose "most significant"attribute as root of (sub)tree E.gwith6 Boolean there are .44.073.709551.616 trees a de ke if a ares-DTLeramzl Hypothesis spaces Choosing an attribute themthat() E.g.,with 6 Boolean attributes,there are 18,446.744.073.709,551,616 trees 888888 888888 How many purely conjunctive hypotheses (e.g Hungry ARain)?? 0。 g日88 88 bout the classificatioHypothesis spaces How many distinct decision trees with n Boolean attributes?? = number of Boolean functions = number of distinct truth tables with 2 n rows = 2 2 n Chapter 18, Sections 1–3 19 Hypothesis spaces How many distinct decision trees with n Boolean attributes?? = number of Boolean functions = number of distinct truth tables with 2 n rows = 2 2 n E.g., with 6 Boolean attributes, there are 18,446,744,073,709,551,616 trees Chapter 18, Sections 1–3 20 Hypothesis spaces How many distinct decision trees with n Boolean attributes?? = number of Boolean functions = number of distinct truth tables with 2 n rows = 2 2 n E.g., with 6 Boolean attributes, there are 18,446,744,073,709,551,616 trees How many purely conjunctive hypotheses (e.g., Hungry ∧ ¬Rain)?? Chapter 18, Sections 1–3 21 Hypothesis spaces How many distinct decision trees with n Boolean attributes?? = number of Boolean functions = number of distinct truth tables with 2 n rows = 2 2 n E.g., with 6 Boolean attributes, there are 18,446,744,073,709,551,616 trees How many purely conjunctive hypotheses (e.g., Hungry ∧ ¬Rain)?? Each attribute can be in (positive), in (negative), or out ⇒ 3 n distinct conjunctive hypotheses More expressive hypothesis space – increases chance that target function can be expressed – increases number of hypotheses consistent w/ training set ⇒ may get worse predictions Chapter 18, Sections 1–3 22 Decision tree learning Aim: find a small tree consistent with the training examples Idea: (recursively) choose “most significant” attribute as root of (sub)tree function DTL(examples, attributes, default) returns a decision tree if examples is empty then return default else if all examples have the same classification then return the classification else if attributes is empty then return Mode(examples) else best ←Choose-Attribute(attributes, examples) tree ←a new decision tree with root test best for each value vi of best do examplesi ← {elements of examples with best = vi} subtree ←DTL(examplesi, attributes − best,Mode(examples)) add a branch to tree with label vi and subtree subtree return tree Chapter 18, Sections 1–3 23 Choosing an attribute Idea: a good attribute splits the examples into subsets that are (ideally) “all positive” or “all negative” None Some Full Patrons? French Italian Thai Burger Type? Patrons? is a better choice—gives information about the classification Chapter 18, Sections 1–3 24