正在加载图片...

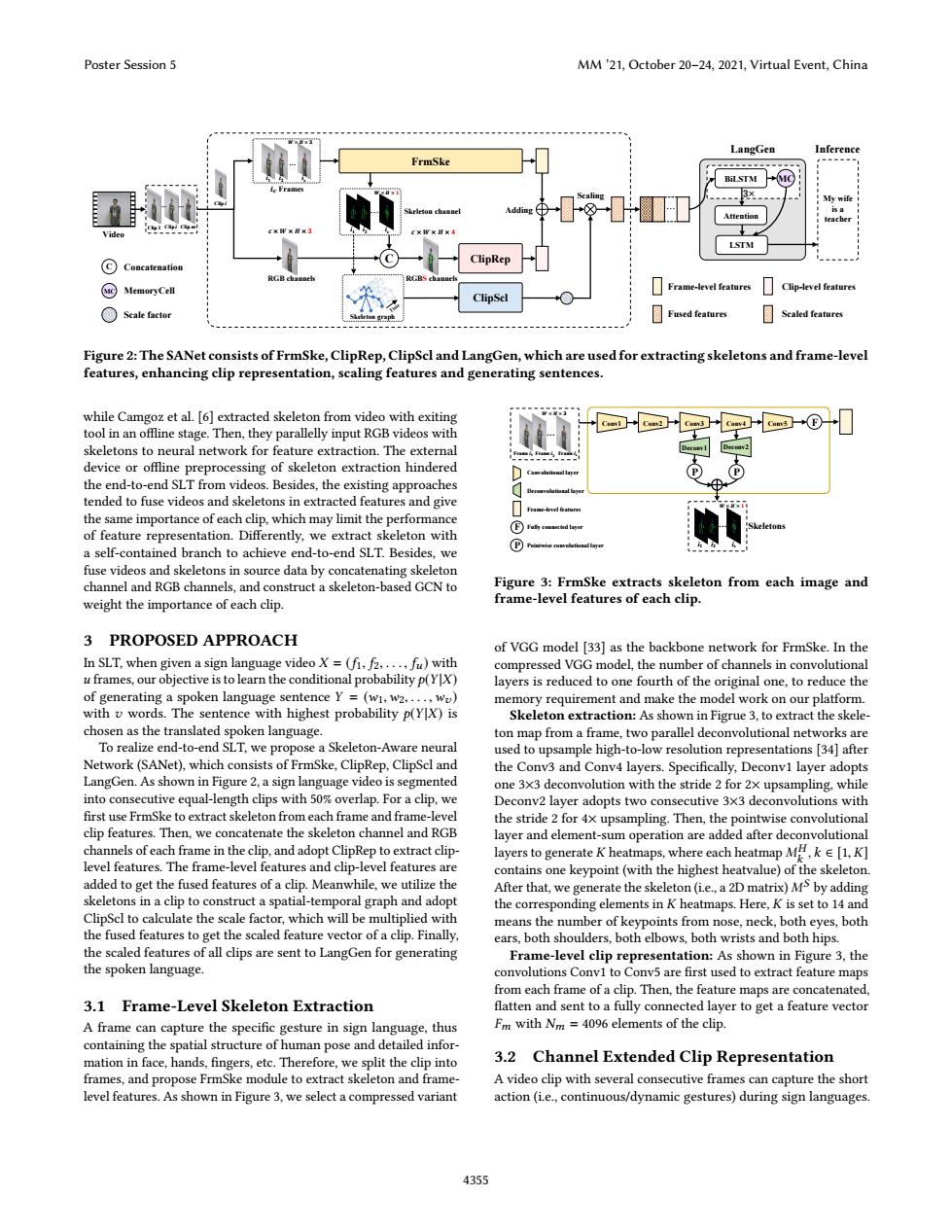

Poster Session 5 MM'21,October 20-24,2021,Virtual Event,China LangGen Inference FrmSke BiLSTM wife tentio cher ide LSTM ⊙Concatenation ClipRep MemoryCel Frame-level features 0 Clip-level features ClipSel ○Scale factor on graph 目Scaled eatre Figure 2:The SANet consists of FrmSke,ClipRep,ClipScl and LangGen,which are used for extracting skeletons and frame-level features,enhancing clip representation,scaling features and generating sentences. while Camgoz et al.[6]extracted skeleton from video with exiting tool in an offline stage.Then,they parallelly input RGB videos with skeletons to neural network for feature extraction.The external device or offline preprocessing of skeleton extraction hindered the end-to-end SLT from videos.Besides,the existing approaches tended to fuse videos and skeletons in extracted features and give frame-loeri features the same importance of each clip,which may limit the performance of feature representation.Differently,we extract skeleton with a self-contained branch to achieve end-to-end SLT.Besides,we ① fuse videos and skeletons in source data by concatenating skeleton channel and RGB channels,and construct a skeleton-based GCN to Figure 3:FrmSke extracts skeleton from each image and weight the importance of each clip. frame-level features of each clip. 3 PROPOSED APPROACH of VGG model [33]as the backbone network for FrmSke.In the In SLT,when given a sign language video X=(fi.f2....,fu)with compressed VGG model,the number of channels in convolutional uframes,our objective is to learn the conditional probability p(YX) layers is reduced to one fourth of the original one,to reduce the of generating a spoken language sentence Y =(wi,w2,...,wo) memory requirement and make the model work on our platform. with v words.The sentence with highest probability p(YX)is Skeleton extraction:As shown in Figrue 3,to extract the skele- chosen as the translated spoken language. ton map from a frame,two parallel deconvolutional networks are To realize end-to-end SLT,we propose a Skeleton-Aware neural used to upsample high-to-low resolution representations [34]after Network(SANet),which consists of FrmSke,ClipRep,ClipScl and the Conv3 and Conv4 layers.Specifically,Deconv1 layer adopts LangGen.As shown in Figure 2,a sign language video is segmented one 3x3 deconvolution with the stride 2 for 2x upsampling,while into consecutive equal-length clips with 50%overlap.For a clip.we Deconv2 layer adopts two consecutive 3x3 deconvolutions with first use FrmSke to extract skeleton from each frame and frame-level the stride 2 for 4x upsampling.Then,the pointwise convolutional clip features.Then,we concatenate the skeleton channel and RGB layer and element-sum operation are added after deconvolutional channels of each frame in the clip.and adopt ClipRep to extract clip- layers to generateK heatmaps,where each heatmap Mk[1.K] level features.The frame-level features and clip-level features are contains one keypoint(with the highest heatvalue)of the skeleton added to get the fused features of a clip.Meanwhile,we utilize the After that,we generate the skeleton(ie.,a 2D matrix)MS by adding skeletons in a clip to construct a spatial-temporal graph and adopt the corresponding elements in K heatmaps.Here,K is set to 14 and ClipScl to calculate the scale factor,which will be multiplied with means the number of keypoints from nose,neck,both eyes,both the fused features to get the scaled feature vector of a clip.Finally. ears,both shoulders,both elbows,both wrists and both hips. the scaled features of all clips are sent to LangGen for generating Frame-level clip representation:As shown in Figure 3,the the spoken language. convolutions Convl to Conv5 are first used to extract feature maps from each frame of a clip.Then,the feature maps are concatenated, 3.1 Frame-Level Skeleton Extraction flatten and sent to a fully connected layer to get a feature vector A frame can capture the specific gesture in sign language,thus Fm with Nm =4096 elements of the clip. containing the spatial structure of human pose and detailed infor- mation in face,hands,fingers,etc.Therefore,we split the clip into 3.2 Channel Extended Clip Representation frames,and propose FrmSke module to extract skeleton and frame- A video clip with several consecutive frames can capture the short level features.As shown in Figure 3,we select a compressed variant action(i.e.,continuous/dynamic gestures)during sign languages. 4355Video RGB channels 𝑾𝑾 × 𝑯𝑯 × 𝟑𝟑 … … ClipRep ClipScl Clip i … Clip 1 … Clip m 𝒄𝒄 × 𝑾𝑾 × 𝑯𝑯 × 𝟑𝟑 𝑾𝑾 × 𝑯𝑯 × 𝟏𝟏 𝒄𝒄 × 𝑾𝑾 × 𝑯𝑯 × 𝟒𝟒 i1 i2 ic Skeleton graph Scaling Clip i … My wife is a teacher Inference C C Concatenation Frame-level features Clip-level features Scale factor MC MemoryCell BiLSTM MC 3× LSTM Attention LangGen Skeleton channel Fused features FrmSke Scaled features RGBS channels 𝒊𝒊𝒄𝒄 Frames i1 i2 ic Adding Figure 2: The SANet consists of FrmSke, ClipRep, ClipScl and LangGen, which are used for extracting skeletons and frame-level features, enhancing clip representation, scaling features and generating sentences. while Camgoz et al. [6] extracted skeleton from video with exiting tool in an offline stage. Then, they parallelly input RGB videos with skeletons to neural network for feature extraction. The external device or offline preprocessing of skeleton extraction hindered the end-to-end SLT from videos. Besides, the existing approaches tended to fuse videos and skeletons in extracted features and give the same importance of each clip, which may limit the performance of feature representation. Differently, we extract skeleton with a self-contained branch to achieve end-to-end SLT. Besides, we fuse videos and skeletons in source data by concatenating skeleton channel and RGB channels, and construct a skeleton-based GCN to weight the importance of each clip. 3 PROPOSED APPROACH In SLT, when given a sign language video X = (f1, f2, . . . , fu ) with u frames, our objective is to learn the conditional probability p(Y |X) of generating a spoken language sentence Y = (w1,w2, . . . ,wv ) with v words. The sentence with highest probability p(Y |X) is chosen as the translated spoken language. To realize end-to-end SLT, we propose a Skeleton-Aware neural Network (SANet), which consists of FrmSke, ClipRep, ClipScl and LangGen. As shown in Figure 2, a sign language video is segmented into consecutive equal-length clips with 50% overlap. For a clip, we first use FrmSke to extract skeleton from each frame and frame-level clip features. Then, we concatenate the skeleton channel and RGB channels of each frame in the clip, and adopt ClipRep to extract cliplevel features. The frame-level features and clip-level features are added to get the fused features of a clip. Meanwhile, we utilize the skeletons in a clip to construct a spatial-temporal graph and adopt ClipScl to calculate the scale factor, which will be multiplied with the fused features to get the scaled feature vector of a clip. Finally, the scaled features of all clips are sent to LangGen for generating the spoken language. 3.1 Frame-Level Skeleton Extraction A frame can capture the specific gesture in sign language, thus containing the spatial structure of human pose and detailed information in face, hands, fingers, etc. Therefore, we split the clip into frames, and propose FrmSke module to extract skeleton and framelevel features. As shown in Figure 3, we select a compressed variant 𝑾𝑾 × 𝑯𝑯 × 𝟑𝟑 Frame i1 Frame i2 Frame ic … F … 𝑾𝑾 × 𝑯𝑯 × 𝟏𝟏 i1 i2 ic Convolutional layer Deconvolutional layer Fully connected layer Pointwise convolutional layer Deconv1 Deconv2 Conv1 Conv2 Conv3 Conv4 Conv5 P P F P Frame-level features Skeletons Figure 3: FrmSke extracts skeleton from each image and frame-level features of each clip. of VGG model [33] as the backbone network for FrmSke. In the compressed VGG model, the number of channels in convolutional layers is reduced to one fourth of the original one, to reduce the memory requirement and make the model work on our platform. Skeleton extraction: As shown in Figrue 3, to extract the skeleton map from a frame, two parallel deconvolutional networks are used to upsample high-to-low resolution representations [34] after the Conv3 and Conv4 layers. Specifically, Deconv1 layer adopts one 3×3 deconvolution with the stride 2 for 2× upsampling, while Deconv2 layer adopts two consecutive 3×3 deconvolutions with the stride 2 for 4× upsampling. Then, the pointwise convolutional layer and element-sum operation are added after deconvolutional layers to generate K heatmaps, where each heatmap MH k , k ∈ [1,K] contains one keypoint (with the highest heatvalue) of the skeleton. After that, we generate the skeleton (i.e., a 2D matrix) MS by adding the corresponding elements in K heatmaps. Here, K is set to 14 and means the number of keypoints from nose, neck, both eyes, both ears, both shoulders, both elbows, both wrists and both hips. Frame-level clip representation: As shown in Figure 3, the convolutions Conv1 to Conv5 are first used to extract feature maps from each frame of a clip. Then, the feature maps are concatenated, flatten and sent to a fully connected layer to get a feature vector Fm with Nm = 4096 elements of the clip. 3.2 Channel Extended Clip Representation A video clip with several consecutive frames can capture the short action (i.e., continuous/dynamic gestures) during sign languages. Poster Session 5 MM ’21, October 20–24, 2021, Virtual Event, China 4355