
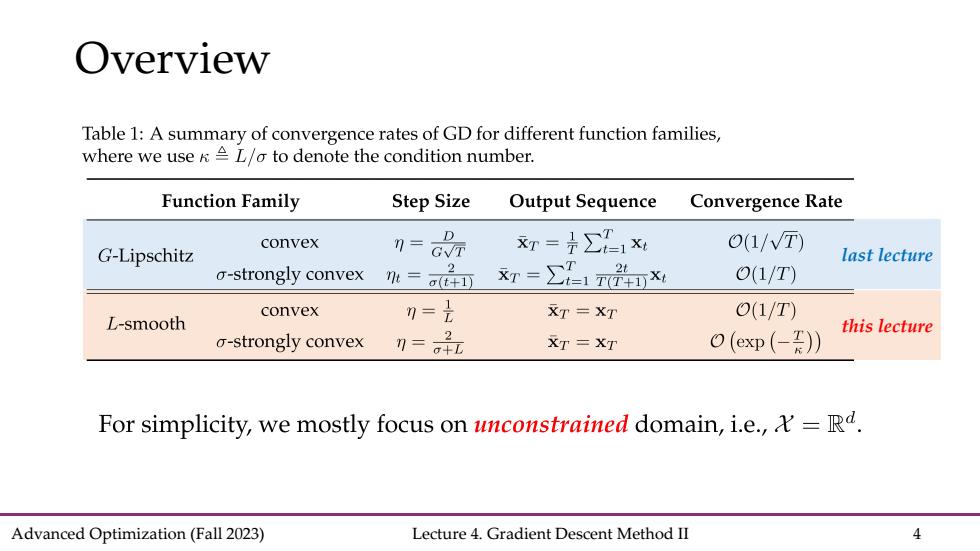

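Overview

Table 1: A summary of convergence rates of GD for different function families, where we use $\kappa \triangleq L/\sigma$ to denote the condition number.

| Function Family | Step Size | Output Sequence | Convergence Rate |
|---|---|---|---|
| $G$-Lipschitz, convex (last lecture) | $\eta = \frac{D}{G\sqrt{T}}$ | $\bar{x}_T = \frac{1}{T}\sum_{t=1}^{T} x_t$ | $O(1/\sqrt{T})$ |
| $G$-Lipschitz, $\sigma$-strongly convex (last lecture) | $\eta_t = \frac{2}{\sigma(t+1)}$ | $\bar{x}_T = \sum_{t=1}^{T} \frac{2t}{T(T+1)} x_t$ | $O(1/T)$ |
| $L$-smooth, convex (this lecture) | $\eta = \frac{1}{L}$ | $\bar{x}_T = x_T$ | $O(1/T)$ |
| $L$-smooth, $\sigma$-strongly convex (this lecture) | $\eta = \frac{2}{\sigma + L}$ | $\bar{x}_T = x_T$ | $O(\exp(-T/\kappa))$ |

For simplicity, we mostly focus on the unconstrained domain, i.e., $\mathcal{X} = \mathbb{R}^d$.

Advanced Optimization (Fall 2023), Lecture 4. Gradient Descent Method II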
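To make the linear rate for smooth and strongly convex functions more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration and is not taken from the lecture: the test function is a hypothetical diagonal quadratic, the helper names (`gradient_descent`, `f`, `grad_f`) are invented, and the step size is set to $1/L$, which may differ from the step size used in the lecture's analysis. It runs GD and compares the final optimality gap against $\exp(-T/\kappa)$ times the initial gap.

```python
import math


def gradient_descent(grad, x0, step_size, num_steps):
    """Run x_{t+1} = x_t - step_size * grad(x_t) and return the last iterate."""
    x = list(x0)
    for _ in range(num_steps):
        g = grad(x)
        x = [xi - step_size * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical test problem: f(x) = 0.5 * sum_i a_i * x_i^2.
# It is sigma-strongly convex and L-smooth with sigma = min(a_i), L = max(a_i),
# and its minimum value f* = 0 is attained at x = 0.
curvatures = [1.0, 4.0, 10.0]
sigma, L = min(curvatures), max(curvatures)
kappa = L / sigma  # condition number


def f(x):
    return 0.5 * sum(a * xi * xi for a, xi in zip(curvatures, x))


def grad_f(x):
    return [a * xi for a, xi in zip(curvatures, x)]


x0 = [1.0, 1.0, 1.0]
T = 50

# Assumed step size 1/L (a common choice for L-smooth objectives).
x_T = gradient_descent(grad_f, x0, step_size=1.0 / L, num_steps=T)

gap_0 = f(x0)   # f(x_0) - f*, since f* = 0
gap_T = f(x_T)  # f(x_T) - f*
bound = math.exp(-T / kappa) * gap_0

print("initial gap:", gap_0)
print("final gap:  ", gap_T)
print("exp(-T/kappa) * initial gap:", bound)
```

On this particular problem the last iterate's gap falls below the exponential reference curve. The sketch returns the last iterate only; the averaged outputs used for the Lipschitz settings would require accumulating a weighted sum of the iterates instead.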