
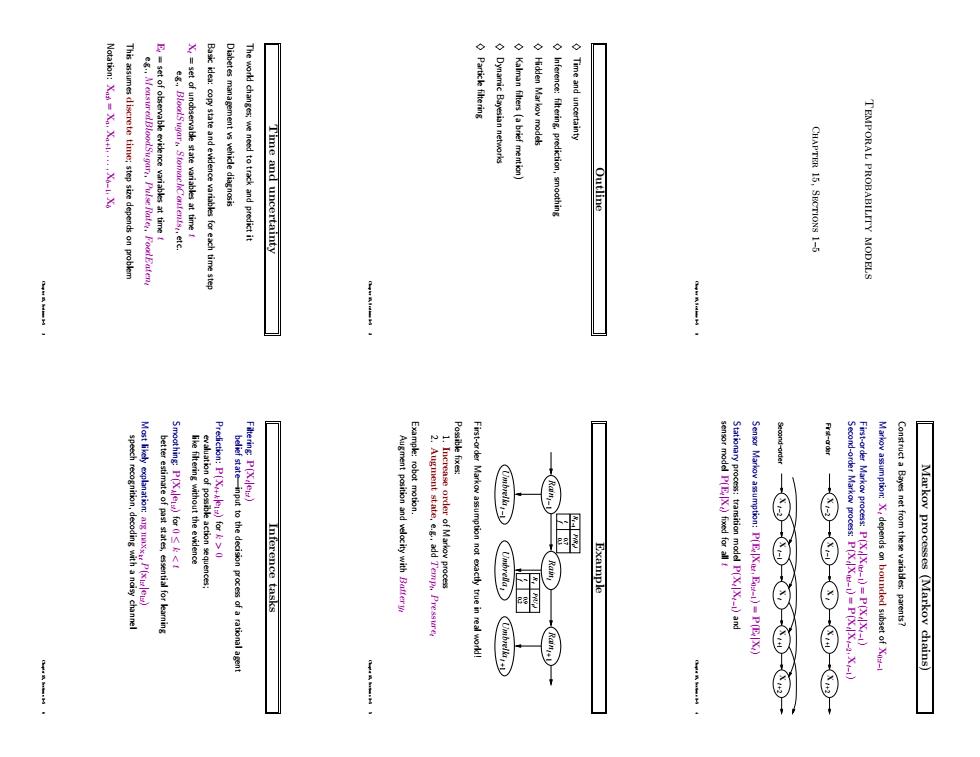

Temporal probability models

Chapter 15, Sections 1–5

Outline

♦ Time and uncertainty
♦ Inference: filtering, prediction, smoothing
♦ Hidden Markov models
♦ Kalman filters (a brief mention)
♦ Dynamic Bayesian networks
♦ Particle filtering

Time and uncertainty

The world changes; we need to track and predict it.

Diabetes management (the patient's state keeps changing) vs. vehicle diagnosis (whatever is broken stays broken while we diagnose it)

Basic idea: copy state and evidence variables for each time step.

$X_t$ = set of unobservable state variables at time $t$, e.g., $BloodSugar_t$, $StomachContents_t$, etc.

$E_t$ = set of observable evidence variables at time $t$, e.g., $MeasuredBloodSugar_t$, $PulseRate_t$, $FoodEaten_t$

This assumes discrete time; the step size depends on the problem.

Notation: $X_{a:b} = X_a, X_{a+1}, \ldots, X_{b-1}, X_b$

Markov processes (Markov chains)

Construct a Bayes net from these variables: parents?

Markov assumption: $X_t$ depends on a bounded subset of $X_{0:t-1}$

First-order Markov process: $P(X_t \mid X_{0:t-1}) = P(X_t \mid X_{t-1})$

Second-order Markov process: $P(X_t \mid X_{0:t-1}) = P(X_t \mid X_{t-2}, X_{t-1})$

[Figure: first-order and second-order Markov processes drawn as Bayes nets over $X_{t-2}, \ldots, X_{t+2}$]

Sensor Markov assumption: $P(E_t \mid X_{0:t}, E_{0:t-1}) = P(E_t \mid X_t)$

Stationary process: transition model $P(X_t \mid X_{t-1})$ and sensor model $P(E_t \mid X_t)$ fixed for all $t$

Example

[Figure: umbrella network, $Rain_{t-1} \to Rain_t \to Rain_{t+1}$, with $Umbrella_k$ a child of each $Rain_k$]

| $R_{t-1}$ | $P(R_t)$ |
|---|---|
| t | 0.7 |
| f | 0.3 |

| $R_t$ | $P(U_t)$ |
|---|---|
| t | 0.9 |
| f | 0.2 |

First-order Markov assumption not exactly true in real world!

Possible fixes:
1. Increase order of Markov process
2. Augment state, e.g., add $Temp_t$, $Pressure_t$

Example: robot motion.
Augment position and velocity with $Battery_t$

Inference tasks

Filtering: $P(X_t \mid e_{1:t})$
belief state, the input to the decision process of a rational agent

Prediction: $P(X_{t+k} \mid e_{1:t})$ for $k > 0$
evaluation of possible action sequences; like filtering without the evidence

Smoothing: $P(X_k \mid e_{1:t})$ for $0 \le k < t$
better estimate of past states, essential for learning

Most likely explanation: $\arg\max_{x_{1:t}} P(x_{1:t} \mid e_{1:t})$
speech recognition, decoding with a noisy channel
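The umbrella example is a stationary process: the same transition table $P(R_t \mid R_{t-1})$ and sensor table $P(U_t \mid R_t)$ apply at every step. The following minimal Python sketch samples from that model, assuming the CPT values from the Example slide and an explicitly supplied value for $R_0$ (the slides give no initial distribution). The function name `sample_umbrella_world` is hypothetical.

```python
import random

# Umbrella-world CPTs from the Example slide; state 0 = rain, 1 = no rain.
TRANSITION = [[0.7, 0.3],   # P(R_t = rain / no rain | R_{t-1} = rain)
              [0.3, 0.7]]   # P(R_t = rain / no rain | R_{t-1} = no rain)
P_UMBRELLA = [0.9, 0.2]     # P(U_t = true | R_t = rain / no rain)


def sample_umbrella_world(steps, rng, initial_rain=True):
    """Hypothetical helper: sample (rain_t, umbrella_t) pairs for t = 1..steps.

    initial_rain is an ASSUMED value for R_0; the slides do not specify one.
    """
    state = 0 if initial_rain else 1
    trajectory = []
    for _ in range(steps):
        # Transition model: the same table is used at every t (stationarity).
        state = 0 if rng.random() < TRANSITION[state][0] else 1
        # Sensor model: U_t depends only on R_t (sensor Markov assumption).
        umbrella = rng.random() < P_UMBRELLA[state]
        trajectory.append((state == 0, umbrella))
    return trajectory


if __name__ == "__main__":
    rng = random.Random(0)
    for rain, umbrella in sample_umbrella_world(5, rng):
        print(f"rain={rain!s:5} umbrella={umbrella}")
```

Passing an explicit `random.Random` instance keeps runs reproducible; over a long run the fraction of umbrella days among rainy days approaches 0.9, as the sensor table requires.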
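Filtering and prediction can be illustrated on the umbrella example. This is a minimal sketch, not a definitive implementation, assuming the CPTs from the Example slide and a uniform prior $P(R_0) = \langle 0.5, 0.5 \rangle$ (the prior is an assumption; the slides do not give one). Filtering alternates a one-step prediction through the transition model with a sensor-model update and normalization. Prediction simply repeats the transition step with no new evidence. The function names are hypothetical.

```python
# Umbrella-world CPTs from the Example slide; state 0 = rain, 1 = no rain.
TRANSITION = [[0.7, 0.3],    # P(R_t | R_{t-1} = rain)
              [0.3, 0.7]]    # P(R_t | R_{t-1} = no rain)
SENSOR = {True: [0.9, 0.2],  # P(U_t = true | R_t)
          False: [0.1, 0.8]} # P(U_t = false | R_t)
PRIOR = [0.5, 0.5]           # ASSUMED P(R_0); not given on the slides


def normalize(v):
    total = sum(v)
    return [x / total for x in v]


def predict_step(belief):
    """One transition step: P(x_{t+1}) = sum over x_t of P(x_{t+1} | x_t) * belief(x_t)."""
    return [sum(TRANSITION[i][j] * belief[i] for i in range(2)) for j in range(2)]


def filter_umbrella(evidence, prior=PRIOR):
    """Filtering: P(R_t | u_{1:t}) via repeated predict-then-update steps."""
    belief = prior
    for u in evidence:
        predicted = predict_step(belief)
        belief = normalize([SENSOR[u][j] * predicted[j] for j in range(2)])
    return belief


def predict_ahead(belief, k):
    """Prediction: P(R_{t+k} | u_{1:t}) for k > 0, i.e. filtering with no new evidence."""
    for _ in range(k):
        belief = predict_step(belief)
    return belief


if __name__ == "__main__":
    belief = filter_umbrella([True, True])
    print([round(p, 3) for p in belief])                     # [0.883, 0.117]
    print([round(p, 3) for p in predict_ahead(belief, 20)])  # [0.5, 0.5]
```

As $k$ grows, the prediction converges to $\langle 0.5, 0.5 \rangle$, the stationary distribution of this symmetric transition model. This is why long-range prediction carries little information.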
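For smoothing, one standard approach is the forward-backward algorithm. It combines the filtered (forward) message for time $k$ with a backward message that summarizes the evidence $e_{k+1:t}$. The sketch below assumes the umbrella CPTs from the Example slide and an assumed uniform prior $P(R_0)$. The function name `forward_backward` is hypothetical.

```python
TRANSITION = [[0.7, 0.3], [0.3, 0.7]]           # P(R_t | R_{t-1}); index 0 = rain
SENSOR = {True: [0.9, 0.2], False: [0.1, 0.8]}  # P(U_t = u | R_t)
PRIOR = [0.5, 0.5]                              # ASSUMED P(R_0)


def normalize(v):
    total = sum(v)
    return [x / total for x in v]


def forward_backward(evidence, prior=PRIOR):
    """Smoothing: return P(R_k | u_{1:t}) for every k = 1..t (list index k - 1)."""
    # Forward pass: filtered messages f_{1:k} for k = 1..t.
    forward = []
    f = prior
    for u in evidence:
        predicted = [sum(TRANSITION[i][j] * f[i] for i in range(2)) for j in range(2)]
        f = normalize([SENSOR[u][j] * predicted[j] for j in range(2)])
        forward.append(f)

    # Backward pass: the message starts as all ones (no evidence after time t).
    b = [1.0, 1.0]
    smoothed = [None] * len(evidence)
    for k in range(len(evidence) - 1, -1, -1):
        smoothed[k] = normalize([forward[k][j] * b[j] for j in range(2)])
        # Fold in evidence[k] to get the backward message for the previous step:
        # b(x_prev) = sum over x of P(u | x) * b(x) * P(x | x_prev)
        u = evidence[k]
        b = [sum(SENSOR[u][j] * b[j] * TRANSITION[i][j] for j in range(2)) for i in range(2)]
    return smoothed


if __name__ == "__main__":
    smoothed = forward_backward([True, True])
    print(round(smoothed[0][0], 3))  # 0.883
```

With umbrellas seen on days 1 and 2, the filtered probability of rain on day 1 is about 0.818. Smoothing raises it to about 0.883, because the day-2 umbrella makes rain on day 2, and hence on day 1, more likely. This is the "better estimate of past states" that smoothing provides.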
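The most-likely-explanation task is usually solved with the Viterbi algorithm. This dynamic program keeps, for each state, the score of the best path ending there, along with a backpointer to its predecessor. The following sketch assumes the umbrella CPTs from the Example slide and an assumed uniform prior $P(R_0)$. It works in log space so that long sequences do not underflow. The function name `viterbi` is hypothetical.

```python
import math

TRANSITION = [[0.7, 0.3], [0.3, 0.7]]           # P(R_t | R_{t-1}); index 0 = rain
SENSOR = {True: [0.9, 0.2], False: [0.1, 0.8]}  # P(U_t = u | R_t)
PRIOR = [0.5, 0.5]                              # ASSUMED P(R_0)


def viterbi(evidence, prior=PRIOR):
    """Most likely explanation: argmax over r_{1:t} of P(r_{1:t} | u_{1:t}).

    Returns a list of booleans (True = rain).
    """
    if not evidence:
        return []
    # m[j]: log of max over r_{1:k-1} of P(r_{1:k-1}, R_k = j, u_{1:k}).
    first = [sum(TRANSITION[i][j] * prior[i] for i in range(2)) for j in range(2)]
    m = [math.log(SENSOR[evidence[0]][j] * first[j]) for j in range(2)]
    backpointers = []
    for u in evidence[1:]:
        new_m, pointers = [], []
        for j in range(2):
            scores = [m[i] + math.log(TRANSITION[i][j]) for i in range(2)]
            best = max(range(2), key=scores.__getitem__)
            pointers.append(best)
            new_m.append(scores[best] + math.log(SENSOR[u][j]))
        backpointers.append(pointers)
        m = new_m
    # Backtrack from the best final state through the stored pointers.
    state = max(range(2), key=m.__getitem__)
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return [s == 0 for s in path]


if __name__ == "__main__":
    print(viterbi([True, True, False, True, True]))  # [True, True, False, True, True]
```

Unlike filtering, this maximizes over whole sequences rather than summing over them. The result is therefore a single jointly most likely sequence, which need not match the sequence of individually most likely states.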