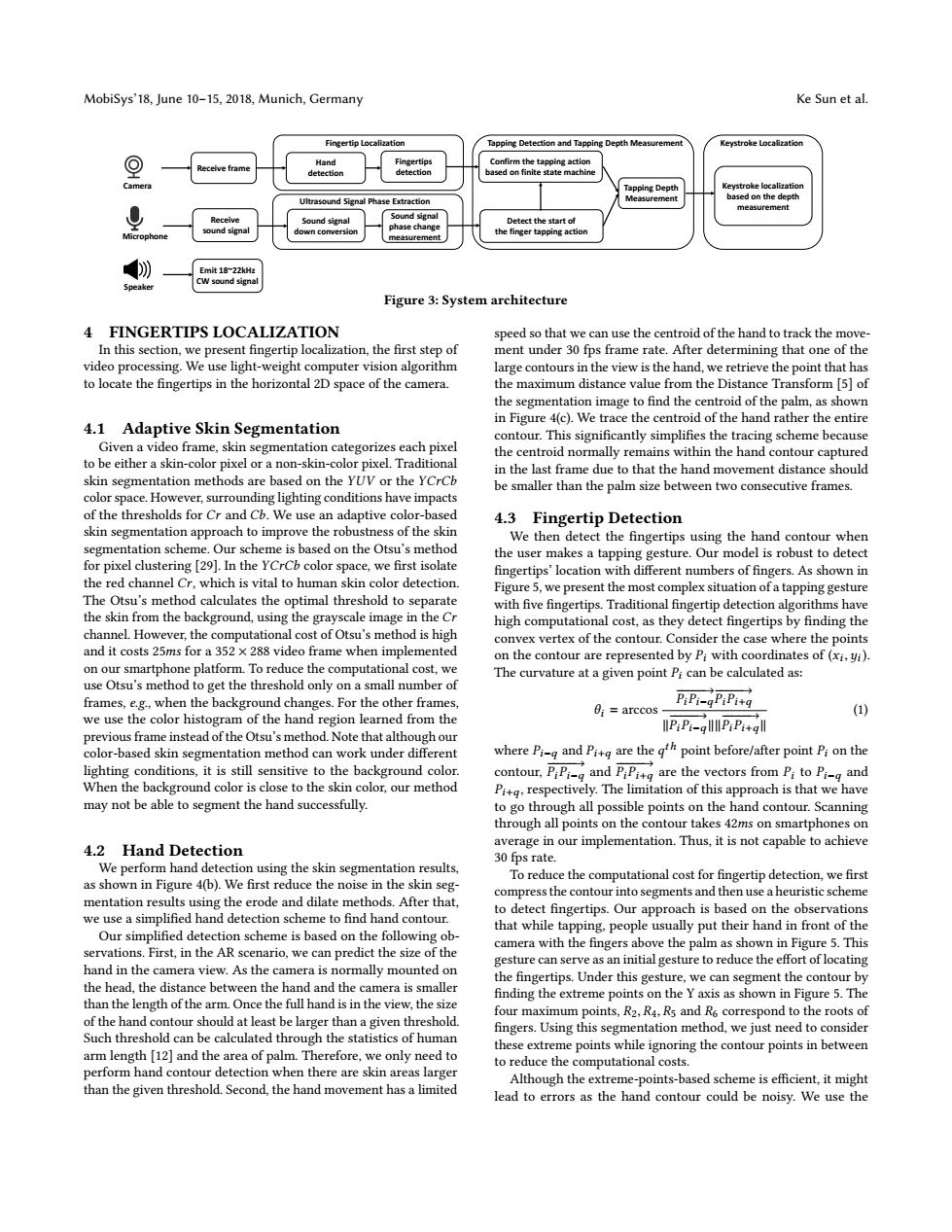

MobiSys’18, June 10–15, 2018, Munich, Germany. Ke Sun et al.

[Figure 3: System architecture. Ultrasound Signal Phase Extraction: the speaker emits an 18~22 kHz CW sound signal; the microphone receives the sound signal; sound signal down conversion; sound signal phase change measurement; detect the start of the finger tapping action. Fingertip Localization: the camera receives a frame; hand detection; fingertips detection. Tapping Detection and Tapping Depth Measurement: confirm the tapping action based on a finite state machine; tapping depth measurement. Keystroke Localization: keystroke localization based on the depth measurement.]

4 FINGERTIPS LOCALIZATION

In this section, we present fingertip localization, the first step of video processing. We use a lightweight computer vision algorithm to locate the fingertips in the horizontal 2D space of the camera.

4.1 Adaptive Skin Segmentation

Given a video frame, skin segmentation classifies each pixel as either a skin-color pixel or a non-skin-color pixel. Traditional skin segmentation methods are based on the YUV or the YCrCb color space. However, surrounding lighting conditions affect the thresholds for Cr and Cb. We use an adaptive color-based skin segmentation approach to improve the robustness of the skin segmentation scheme. Our scheme is based on Otsu’s method for pixel clustering [29]. In the YCrCb color space, we first isolate the red-difference channel Cr, which is vital to human skin color detection. Otsu’s method calculates the optimal threshold to separate the skin from the background, using the grayscale image in the Cr channel. However, the computational cost of Otsu’s method is high: it takes 25 ms for a 352 × 288 video frame when implemented on our smartphone platform.
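To make the thresholding step concrete, the following is a minimal pure-Python sketch of Otsu's method applied to Cr values. It is an illustration under stated assumptions, not the authors' implementation: the helper names (`rgb_to_cr`, `otsu_threshold`, `skin_mask`) are hypothetical, the Cr conversion assumes the full-range BT.601 (JPEG) convention, and treating pixels above the threshold as skin candidates is an assumption.

```python
def rgb_to_cr(r, g, b):
    """Cr (red-difference chroma) of an 8-bit RGB pixel.

    Assumes the full-range BT.601 (JPEG) conversion.
    """
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return max(0, min(255, int(round(cr))))


def otsu_threshold(values, levels=256):
    """Return the threshold t that maximizes the between-class variance.

    Values <= t form one class and values > t form the other.
    """
    hist = [0] * levels
    for v in values:
        hist[v] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of values <= t
    sum0 = 0  # sum of the values <= t
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so there is nothing to separate
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def skin_mask(rgb_pixels):
    """Mark a pixel True when its Cr exceeds the Otsu threshold (assumption)."""
    cr = [rgb_to_cr(*p) for p in rgb_pixels]
    t = otsu_threshold(cr)
    return [c > t for c in cr], t


if __name__ == "__main__":
    # Hypothetical toy frame: 30 skin-like pixels and 70 gray background pixels.
    frame = [(224, 172, 105)] * 30 + [(90, 90, 90)] * 70
    mask, threshold = skin_mask(frame)
    print(threshold, sum(mask))
```

The sketch scans every pixel to build the histogram and then every gray level to find the threshold, which is the per-frame work that the text reports as expensive on a smartphone.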
To reduce the computational cost, we run Otsu’s method to obtain the threshold only on a small number of frames, e.g., when the background changes. For the other frames, we use the color histogram of the hand region learned from the previous frame instead of Otsu’s method. Note that although our color-based skin segmentation method works under different lighting conditions, it is still sensitive to the background color. When the background color is close to the skin color, our method may not be able to segment the hand successfully.

4.2 Hand Detection

We perform hand detection using the skin segmentation results, as shown in Figure 4(b). We first reduce the noise in the skin segmentation results using the erode and dilate operations. After that, we use a simplified hand detection scheme to find the hand contour. Our simplified detection scheme is based on the following observations. First, in the AR scenario, we can predict the size of the hand in the camera view. As the camera is normally mounted on the head, the distance between the hand and the camera is smaller than the length of the arm. Once the full hand is in view, the size of the hand contour should therefore be larger than a given threshold. This threshold can be calculated from statistics of human arm length [12] and the area of the palm. Therefore, we only need to perform hand contour detection when there are skin areas larger than the given threshold. Second, the hand moves at a limited speed, so we can use the centroid of the hand to track its movement at a 30 fps frame rate. After determining that one of the large contours in the view is the hand, we retrieve the point that has the maximum distance value in the Distance Transform [5] of the segmentation image to find the centroid of the palm, as shown in Figure 4(c). We trace the centroid of the hand rather than the entire contour.
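The palm-centroid step can be sketched as follows. This is a minimal illustration, assuming a two-pass city-block distance transform on a binary mask. The metric actually used with the Distance Transform of [5] is not stated in the text, and the function names `distance_transform` and `palm_center` are hypothetical.

```python
def distance_transform(mask):
    """City-block distance from each foreground pixel to the nearest background pixel.

    mask is a list of rows of 0/1 values. Pixels outside the image are
    treated as background (an assumption of this sketch).
    """
    h, w = len(mask), len(mask[0])
    inf = h + w  # larger than any possible city-block distance in the image
    dist = [[inf if mask[y][x] else 0 for x in range(w)] for y in range(h)]

    # Forward pass: propagate distances from the top and left neighbors.
    for y in range(h):
        for x in range(w):
            if dist[y][x]:
                up = dist[y - 1][x] if y > 0 else 0
                left = dist[y][x - 1] if x > 0 else 0
                dist[y][x] = min(dist[y][x], up + 1, left + 1)

    # Backward pass: propagate distances from the bottom and right neighbors.
    for y in range(h - 1, -1, -1):
        for x in range(w - 1, -1, -1):
            if dist[y][x]:
                down = dist[y + 1][x] if y < h - 1 else 0
                right = dist[y][x + 1] if x < w - 1 else 0
                dist[y][x] = min(dist[y][x], down + 1, right + 1)
    return dist


def palm_center(mask):
    """Return (row, col, distance) of the pixel deepest inside the foreground."""
    dist = distance_transform(mask)
    best = (0, 0, 0)
    for y, row in enumerate(dist):
        for x, d in enumerate(row):
            if d > best[2]:
                best = (y, x, d)
    return best


if __name__ == "__main__":
    # Hypothetical 7 x 7 mask with a 5 x 5 blob standing in for the palm.
    blob = [[1 if 1 <= y <= 5 and 1 <= x <= 5 else 0 for x in range(7)]
            for y in range(7)]
    print(palm_center(blob))
```

Thin structures such as fingers get small distance values, so the maximum falls inside the palm, and tracking then only needs this single point per frame.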
This significantly simplifies the tracing scheme because the centroid normally remains within the hand contour captured in the last frame, since the hand movement distance between two consecutive frames should be smaller than the palm size.

4.3 Fingertip Detection

We then detect the fingertips using the hand contour when the user makes a tapping gesture. Our model robustly detects fingertip locations for different numbers of fingers. Figure 5 shows the most complex situation, a tapping gesture with five fingertips. Traditional fingertip detection algorithms have high computational cost, as they detect fingertips by finding the convex vertices of the contour. Consider the case where the points on the contour are represented by $P_i$ with coordinates $(x_i, y_i)$. The curvature at a given point $P_i$ can be calculated as:

$$\theta_i = \arccos \frac{\overrightarrow{P_i P_{i-q}} \cdot \overrightarrow{P_i P_{i+q}}}{\lVert \overrightarrow{P_i P_{i-q}} \rVert \, \lVert \overrightarrow{P_i P_{i+q}} \rVert} \tag{1}$$

where $P_{i-q}$ and $P_{i+q}$ are the $q$-th points before and after point $P_i$ on the contour, and $\overrightarrow{P_i P_{i-q}}$ and $\overrightarrow{P_i P_{i+q}}$ are the vectors from $P_i$ to $P_{i-q}$ and $P_{i+q}$, respectively. The limitation of this approach is that we have to go through all possible points on the hand contour. Scanning through all points on the contour takes 42 ms on average on smartphones in our implementation, which exceeds the roughly 33 ms per frame available at 30 fps.

To reduce the computational cost of fingertip detection, we first compress the contour into segments and then use a heuristic scheme to detect fingertips. Our approach is based on the observation that while tapping, people usually put their hand in front of the camera with the fingers above the palm, as shown in Figure 5. This gesture can serve as an initial gesture to reduce the effort of locating the fingertips. Under this gesture, we can segment the contour by finding the extreme points on the Y axis, as shown in Figure 5. The four maximum points, R2, R4, R5 and R6, correspond to the roots of fingers.
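The search for Y-axis extreme points can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes image coordinates with Y growing downward, so that fingertips appear as local minima and finger roots as local maxima, and it treats the contour as a closed, ordered list of points. The function name `y_extrema` is hypothetical, and no noise filtering is applied.

```python
def y_extrema(contour):
    """Find strict local minima and maxima of y along a closed contour.

    contour is a list of (x, y) points in traversal order.
    Returns (minima, maxima) as lists of indices into contour.
    """
    n = len(contour)
    # Keep only points whose y differs from the previous point (cyclically),
    # so a flat run of equal y values is represented by its first point.
    keep = [i for i in range(n) if contour[i][1] != contour[i - 1][1]]

    minima, maxima = [], []
    m = len(keep)
    for k in range(m):
        y_prev = contour[keep[k - 1]][1]
        y_here = contour[keep[k]][1]
        y_next = contour[keep[(k + 1) % m]][1]
        if y_here < y_prev and y_here < y_next:
            minima.append(keep[k])  # candidate fingertip (top of a finger)
        elif y_here > y_prev and y_here > y_next:
            maxima.append(keep[k])  # candidate finger root or contour bottom
    return minima, maxima


if __name__ == "__main__":
    # Hypothetical toy outline with two "fingers" pointing up.
    outline = [(0, 10), (1, 2), (2, 8), (3, 1), (4, 10), (2, 12)]
    print(y_extrema(outline))
```

On a closed contour the lowest point of the hand region also shows up as a maximum, so a practical version would need to separate it from the finger roots; how that is done is not specified in this section.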
Using this segmentation method, we only need to consider these extreme points and can ignore the contour points in between, which reduces the computational cost. Although the extreme-points-based scheme is efficient, it might lead to errors as the hand contour could be noisy. We use the