正在加载图片...

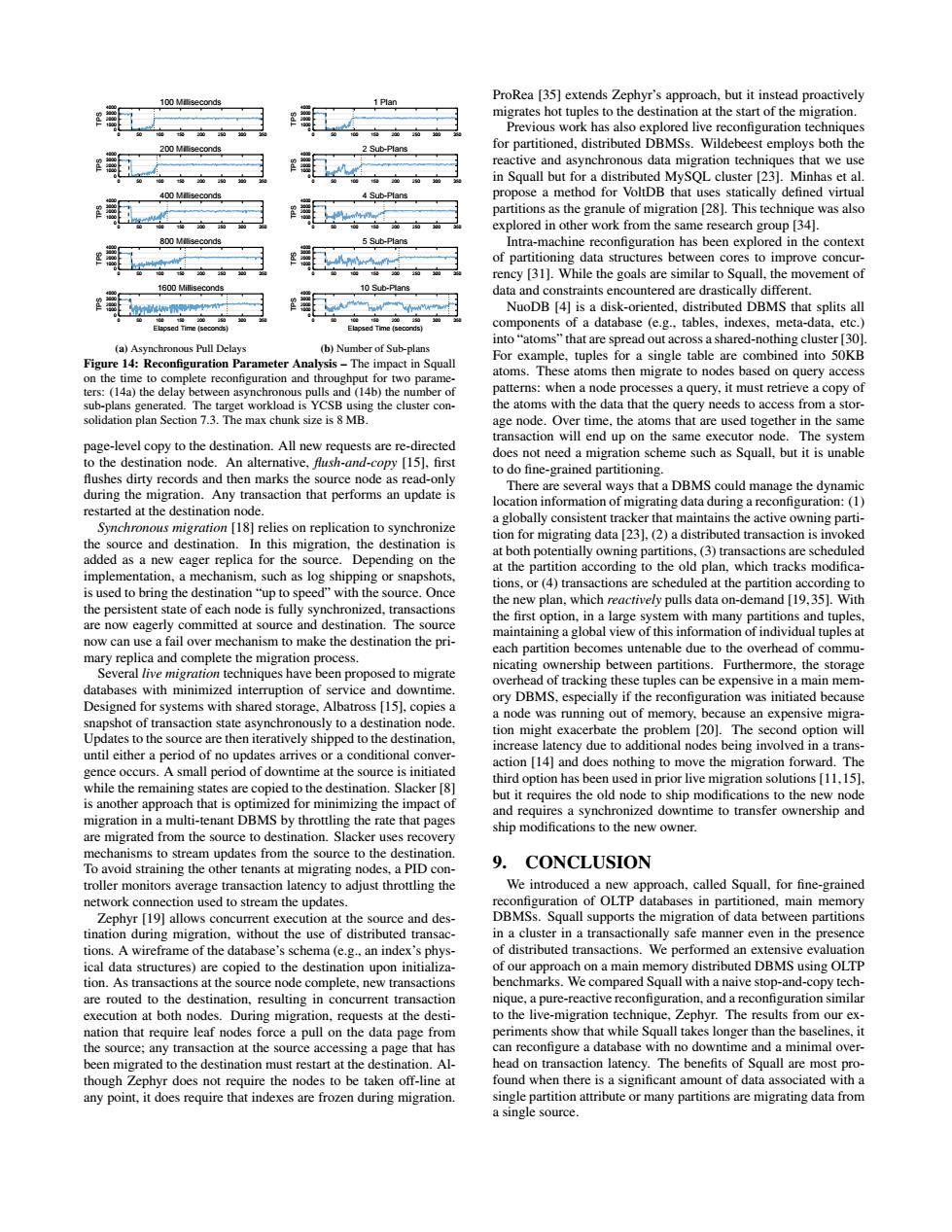

ach.but it instead proactively nathcsatoftcnnei or DBMS 4 Sub-Plans ule of migration 8.This technique was also ountcredarcdtasticalygticTr adatabase tables For example.tuplesfor a single table are combined into 50K v.it m 7.3.Tget wo th the da access a st age-eve copy oh An ing. node as read-o infor (1 urc and stn tion for m ing pokee ing or snapsh at the ns.or (4)trar spee d tr any pa nd untenable due to the and complet cach partition with minimiz of tracki the sive in time to a des The ely shipp imnolCgption ion [14]ar oes n d ing to move the migration for s but it reauires the old node d ing th e to destination. o a PID co 9. CONCLUSION er n adiust throttling the cu hesoureanddes in th schema (e.g..an inde phys sactions.We perf ed an d mplete. ompare Squall with naive stop- to the live nustrestartatthd tinat ead on t The ben quall are most any point.it doesrequire that indexes are frozen durine migration0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 100 Milliseconds 0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 200 Milliseconds 0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 400 Milliseconds 0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 800 Milliseconds 0 50 100 150 200 250 300 350 Elapsed Time (seconds) 0 1000 2000 3000 4000 TPS 1600 Milliseconds (a) Asynchronous Pull Delays 0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 1 Plan 0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 2 Sub-Plans 0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 4 Sub-Plans 0 50 100 150 200 250 300 350 0 1000 2000 3000 4000 TPS 5 Sub-Plans 0 50 100 150 200 250 300 350 Elapsed Time (seconds) 0 1000 2000 3000 4000 TPS 10 Sub-Plans (b) Number of Sub-plans Figure 14: Reconfiguration Parameter Analysis – The impact in Squall on the time to complete reconfiguration and throughput for two parameters: (14a) the delay between asynchronous pulls and (14b) the number of sub-plans generated. The target workload is YCSB using the cluster consolidation plan Section 7.3. The max chunk size is 8 MB. page-level copy to the destination. All new requests are re-directed to the destination node. An alternative, flush-and-copy [15], first flushes dirty records and then marks the source node as read-only during the migration. Any transaction that performs an update is restarted at the destination node. Synchronous migration [18] relies on replication to synchronize the source and destination. In this migration, the destination is added as a new eager replica for the source. Depending on the implementation, a mechanism, such as log shipping or snapshots, is used to bring the destination “up to speed” with the source. Once the persistent state of each node is fully synchronized, transactions are now eagerly committed at source and destination. The source now can use a fail over mechanism to make the destination the primary replica and complete the migration process. Several live migration techniques have been proposed to migrate databases with minimized interruption of service and downtime. Designed for systems with shared storage, Albatross [15], copies a snapshot of transaction state asynchronously to a destination node. Updates to the source are then iteratively shipped to the destination, until either a period of no updates arrives or a conditional convergence occurs. A small period of downtime at the source is initiated while the remaining states are copied to the destination. Slacker [8] is another approach that is optimized for minimizing the impact of migration in a multi-tenant DBMS by throttling the rate that pages are migrated from the source to destination. Slacker uses recovery mechanisms to stream updates from the source to the destination. To avoid straining the other tenants at migrating nodes, a PID controller monitors average transaction latency to adjust throttling the network connection used to stream the updates. Zephyr [19] allows concurrent execution at the source and destination during migration, without the use of distributed transactions. A wireframe of the database’s schema (e.g., an index’s physical data structures) are copied to the destination upon initialization. As transactions at the source node complete, new transactions are routed to the destination, resulting in concurrent transaction execution at both nodes. During migration, requests at the destination that require leaf nodes force a pull on the data page from the source; any transaction at the source accessing a page that has been migrated to the destination must restart at the destination. Although Zephyr does not require the nodes to be taken off-line at any point, it does require that indexes are frozen during migration. ProRea [35] extends Zephyr’s approach, but it instead proactively migrates hot tuples to the destination at the start of the migration. Previous work has also explored live reconfiguration techniques for partitioned, distributed DBMSs. Wildebeest employs both the reactive and asynchronous data migration techniques that we use in Squall but for a distributed MySQL cluster [23]. Minhas et al. propose a method for VoltDB that uses statically defined virtual partitions as the granule of migration [28]. This technique was also explored in other work from the same research group [34]. Intra-machine reconfiguration has been explored in the context of partitioning data structures between cores to improve concurrency [31]. While the goals are similar to Squall, the movement of data and constraints encountered are drastically different. NuoDB [4] is a disk-oriented, distributed DBMS that splits all components of a database (e.g., tables, indexes, meta-data, etc.) into “atoms” that are spread out across a shared-nothing cluster [30]. For example, tuples for a single table are combined into 50KB atoms. These atoms then migrate to nodes based on query access patterns: when a node processes a query, it must retrieve a copy of the atoms with the data that the query needs to access from a storage node. Over time, the atoms that are used together in the same transaction will end up on the same executor node. The system does not need a migration scheme such as Squall, but it is unable to do fine-grained partitioning. There are several ways that a DBMS could manage the dynamic location information of migrating data during a reconfiguration: (1) a globally consistent tracker that maintains the active owning partition for migrating data [23], (2) a distributed transaction is invoked at both potentially owning partitions, (3) transactions are scheduled at the partition according to the old plan, which tracks modifications, or (4) transactions are scheduled at the partition according to the new plan, which reactively pulls data on-demand [19,35]. With the first option, in a large system with many partitions and tuples, maintaining a global view of this information of individual tuples at each partition becomes untenable due to the overhead of communicating ownership between partitions. Furthermore, the storage overhead of tracking these tuples can be expensive in a main memory DBMS, especially if the reconfiguration was initiated because a node was running out of memory, because an expensive migration might exacerbate the problem [20]. The second option will increase latency due to additional nodes being involved in a transaction [14] and does nothing to move the migration forward. The third option has been used in prior live migration solutions [11,15], but it requires the old node to ship modifications to the new node and requires a synchronized downtime to transfer ownership and ship modifications to the new owner. 9. CONCLUSION We introduced a new approach, called Squall, for fine-grained reconfiguration of OLTP databases in partitioned, main memory DBMSs. Squall supports the migration of data between partitions in a cluster in a transactionally safe manner even in the presence of distributed transactions. We performed an extensive evaluation of our approach on a main memory distributed DBMS using OLTP benchmarks. We compared Squall with a naive stop-and-copy technique, a pure-reactive reconfiguration, and a reconfiguration similar to the live-migration technique, Zephyr. The results from our experiments show that while Squall takes longer than the baselines, it can reconfigure a database with no downtime and a minimal overhead on transaction latency. The benefits of Squall are most profound when there is a significant amount of data associated with a single partition attribute or many partitions are migrating data from a single source