正在加载图片...

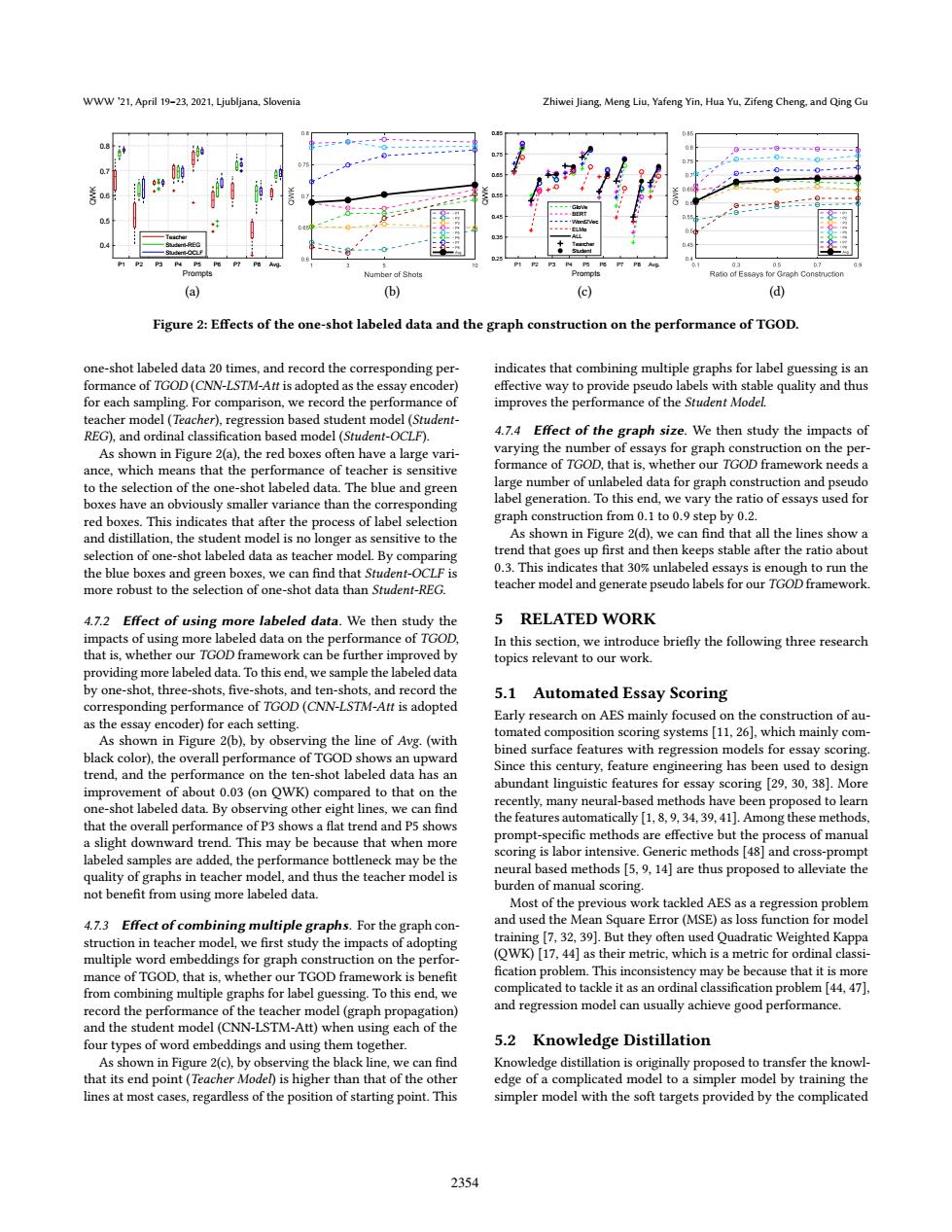

WWW '21,April 19-23,2021,Ljubljana,Slovenia Zhiwei Jiang,Meng Liu,Yafeng Yin,Hua Yu,Zifeng Cheng,and Qing Gu 08 Prompts Number of Shot Prompts Ratio of Essays for Graph Constuction (a) (b) (c) (d) Figure 2:Effects of the one-shot labeled data and the graph construction on the performance of TGOD. one-shot labeled data 20 times,and record the corresponding per- indicates that combining multiple graphs for label guessing is an formance of TGOD(CNN-LSTM-Att is adopted as the essay encoder) effective way to provide pseudo labels with stable quality and thus for each sampling.For comparison,we record the performance of improves the performance of the Student Model. teacher model (Teacher),regression based student model (Student- REG),and ordinal classification based model (Student-OCLF). 4.74 Effect of the graph size.We then study the impacts of As shown in Figure 2(a),the red boxes often have a large vari- varying the number of essays for graph construction on the per- ance,which means that the performance of teacher is sensitive formance of TGOD,that is,whether our TGOD framework needs a to the selection of the one-shot labeled data.The blue and green large number of unlabeled data for graph construction and pseudo boxes have an obviously smaller variance than the corresponding label generation.To this end,we vary the ratio of essays used for red boxes.This indicates that after the process of label selection graph construction from 0.1 to 0.9 step by 0.2. and distillation,the student model is no longer as sensitive to the As shown in Figure 2(d),we can find that all the lines show a selection of one-shot labeled data as teacher model.By comparing trend that goes up first and then keeps stable after the ratio about the blue boxes and green boxes,we can find that Student-OCLF is 0.3.This indicates that 30%unlabeled essays is enough to run the more robust to the selection of one-shot data than Student-REG. teacher model and generate pseudo labels for our TGOD framework. 4.7.2 Effect of using more labeled data.We then study the 5 RELATED WORK impacts of using more labeled data on the performance of TGOD. In this section,we introduce briefly the following three research that is,whether our TGOD framework can be further improved by topics relevant to our work. providing more labeled data.To this end,we sample the labeled data by one-shot,three-shots,five-shots,and ten-shots,and record the 5.1 Automated Essay Scoring corresponding performance of TGOD(CNN-LSTM-Att is adopted Early research on AES mainly focused on the construction of au- as the essay encoder)for each setting. As shown in Figure 2(b),by observing the line of Avg.(with tomated composition scoring systems [11,26],which mainly com- black color),the overall performance of TGOD shows an upward bined surface features with regression models for essay scoring. trend,and the performance on the ten-shot labeled data has an Since this century,feature engineering has been used to design improvement of about 0.03 (on OWK)compared to that on the abundant linguistic features for essay scoring [29,30,38].More one-shot labeled data.By observing other eight lines,we can find recently,many neural-based methods have been proposed to learn that the overall performance of P3 shows a flat trend and P5 shows the features automatically [1,8,9,34,39,41].Among these methods. a slight downward trend.This may be because that when more prompt-specific methods are effective but the process of manual scoring is labor intensive.Generic methods [48]and cross-prompt labeled samples are added,the performance bottleneck may be the quality of graphs in teacher model,and thus the teacher model is neural based methods [5,9,14]are thus proposed to alleviate the not benefit from using more labeled data. burden of manual scoring. Most of the previous work tackled AES as a regression problem 4.7.3 Effect of combining multiple graphs.For the graph con- and used the Mean Square Error(MSE)as loss function for model struction in teacher model,we first study the impacts of adopting training [7,32,39].But they often used Quadratic Weighted Kappa multiple word embeddings for graph construction on the perfor- (OWK)[17,44]as their metric,which is a metric for ordinal classi- mance of TGOD,that is,whether our TGOD framework is benefit fication problem.This inconsistency may be because that it is more from combining multiple graphs for label guessing.To this end,we complicated to tackle it as an ordinal classification problem [44,47]. record the performance of the teacher model(graph propagation) and regression model can usually achieve good performance. and the student model(CNN-LSTM-Att)when using each of the four types of word embeddings and using them together. 5.2 Knowledge Distillation As shown in Figure 2(c).by observing the black line,we can find Knowledge distillation is originally proposed to transfer the knowl- that its end point(Teacher Model)is higher than that of the other edge of a complicated model to a simpler model by training the lines at most cases,regardless of the position of starting point.This simpler model with the soft targets provided by the complicated 2354WWW ’21, April 19–23, 2021, Ljubljana, Slovenia Zhiwei Jiang, Meng Liu, Yafeng Yin, Hua Yu, Zifeng Cheng, and Qing Gu 0.4 0.5 0.6 0.7 0.8 QWK Prompts Teacher Student-REG Student-OCLF P1 P2 P3 P4 P5 P6 P7 P8 Avg. P1 P2 P3 P4 P5 P6 P7 P8 Avg. Prompts 0.25 0.35 0.45 0.55 0.65 0.75 0.85 QWK GloVe BERT Word2Vec ELMo ALL Tearcher Student (a) (b) (c) (d) Figure 2: Effects of the one-shot labeled data and the graph construction on the performance of TGOD. one-shot labeled data 20 times, and record the corresponding performance of TGOD (CNN-LSTM-Att is adopted as the essay encoder) for each sampling. For comparison, we record the performance of teacher model (Teacher), regression based student model (StudentREG), and ordinal classification based model (Student-OCLF). As shown in Figure 2(a), the red boxes often have a large variance, which means that the performance of teacher is sensitive to the selection of the one-shot labeled data. The blue and green boxes have an obviously smaller variance than the corresponding red boxes. This indicates that after the process of label selection and distillation, the student model is no longer as sensitive to the selection of one-shot labeled data as teacher model. By comparing the blue boxes and green boxes, we can find that Student-OCLF is more robust to the selection of one-shot data than Student-REG. 4.7.2 Effect of using more labeled data. We then study the impacts of using more labeled data on the performance of TGOD, that is, whether our TGOD framework can be further improved by providing more labeled data. To this end, we sample the labeled data by one-shot, three-shots, five-shots, and ten-shots, and record the corresponding performance of TGOD (CNN-LSTM-Att is adopted as the essay encoder) for each setting. As shown in Figure 2(b), by observing the line of Avg. (with black color), the overall performance of TGOD shows an upward trend, and the performance on the ten-shot labeled data has an improvement of about 0.03 (on QWK) compared to that on the one-shot labeled data. By observing other eight lines, we can find that the overall performance of P3 shows a flat trend and P5 shows a slight downward trend. This may be because that when more labeled samples are added, the performance bottleneck may be the quality of graphs in teacher model, and thus the teacher model is not benefit from using more labeled data. 4.7.3 Effect of combining multiple graphs. For the graph construction in teacher model, we first study the impacts of adopting multiple word embeddings for graph construction on the performance of TGOD, that is, whether our TGOD framework is benefit from combining multiple graphs for label guessing. To this end, we record the performance of the teacher model (graph propagation) and the student model (CNN-LSTM-Att) when using each of the four types of word embeddings and using them together. As shown in Figure 2(c), by observing the black line, we can find that its end point (Teacher Model) is higher than that of the other lines at most cases, regardless of the position of starting point. This indicates that combining multiple graphs for label guessing is an effective way to provide pseudo labels with stable quality and thus improves the performance of the Student Model. 4.7.4 Effect of the graph size. We then study the impacts of varying the number of essays for graph construction on the performance of TGOD, that is, whether our TGOD framework needs a large number of unlabeled data for graph construction and pseudo label generation. To this end, we vary the ratio of essays used for graph construction from 0.1 to 0.9 step by 0.2. As shown in Figure 2(d), we can find that all the lines show a trend that goes up first and then keeps stable after the ratio about 0.3. This indicates that 30% unlabeled essays is enough to run the teacher model and generate pseudo labels for our TGOD framework. 5 RELATED WORK In this section, we introduce briefly the following three research topics relevant to our work. 5.1 Automated Essay Scoring Early research on AES mainly focused on the construction of automated composition scoring systems [11, 26], which mainly combined surface features with regression models for essay scoring. Since this century, feature engineering has been used to design abundant linguistic features for essay scoring [29, 30, 38]. More recently, many neural-based methods have been proposed to learn the features automatically [1, 8, 9, 34, 39, 41]. Among these methods, prompt-specific methods are effective but the process of manual scoring is labor intensive. Generic methods [48] and cross-prompt neural based methods [5, 9, 14] are thus proposed to alleviate the burden of manual scoring. Most of the previous work tackled AES as a regression problem and used the Mean Square Error (MSE) as loss function for model training [7, 32, 39]. But they often used Quadratic Weighted Kappa (QWK) [17, 44] as their metric, which is a metric for ordinal classification problem. This inconsistency may be because that it is more complicated to tackle it as an ordinal classification problem [44, 47], and regression model can usually achieve good performance. 5.2 Knowledge Distillation Knowledge distillation is originally proposed to transfer the knowledge of a complicated model to a simpler model by training the simpler model with the soft targets provided by the complicated 2354