正在加载图片...

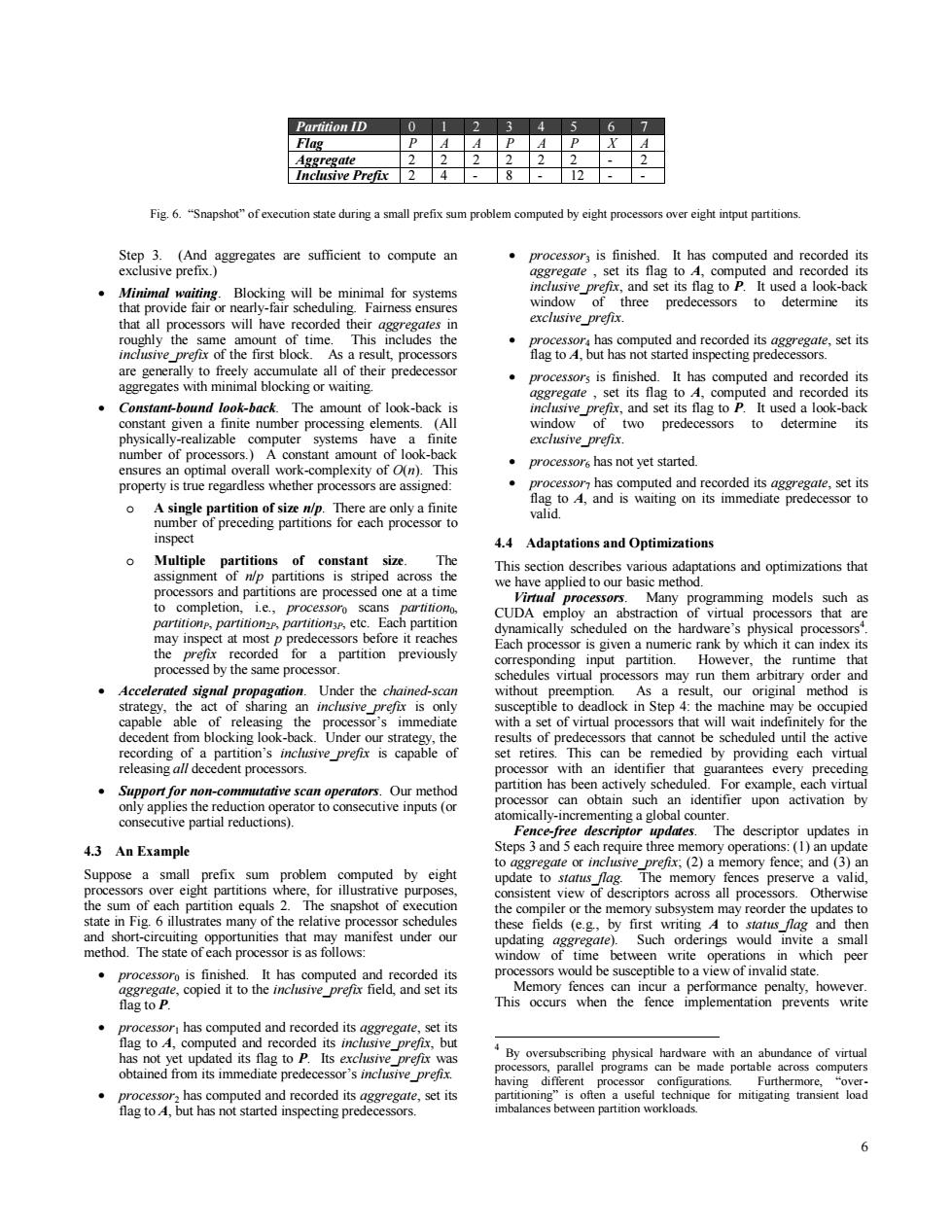

Partition ID 0 Flag P A Aggregate 2 Inclusive Prefix 2 4 Fig.6."Snapshot"of execution state during a small prefix sum problem computed by eight processors over eight intput partitions. Step 3.(And aggregates are sufficient to compute an processors is finished.It has computed and recorded its exclusive prefix.) aggregate,set its flag to A,computed and recorded its Minimal waiting.Blocking will be minimal for systems inclusive_prefix,and set its flag to P.It used a look-back that provide fair or nearly-fair scheduling.Fairness ensures window of three predecessors to determine its that all processors will have recorded their aggregates in exclusive prefix. roughly the same amount of time.This includes the processor has computed and recorded its aggregate,set its inclusive_prefix of the first block.As a result,processors flag to 4,but has not started inspecting predecessors. are generally to freely accumulate all of their predecessor aggregates with minimal blocking or waiting processors is finished.It has computed and recorded its aggregare set its flag to A,computed and recorded its Constant-bound look-back.The amount of look-back is inclusive_prefix,and set its flag to P.It used a look-back constant given a finite number processing elements.(All window of two predecessors to determine its physically-realizable computer systems have a finite exclusive prefix. number of processors.)A constant amount of look-back processors has not yet started. ensures an optimal overall work-complexity of O(n).This property is true regardless whether processors are assigned: processor has computed and recorded its aggregate,set its flag to A,and is waiting on its immediate predecessor to o A single partition of size n/p.There are only a finite valid. number of preceding partitions for each processor to inspect 4.4 Adaptations and Optimizations 0 Multiple partitions of constant size. The This section describes various adaptations and optimizations that assignment of n/p partitions is striped across the we have applied to our basic method. processors and partitions are processed one at a time Virtual processors.Many programming models such as to completion,l.e.,processoro scans partitiono partitionp,partitiomp,partitions,etc.Each partition CUDA employ an abstraction of virtual processors that are dynamically scheduled on the hardware's physical processors" may inspect at most p predecessors before it reaches Each processor is given a numeric rank by which it can index its the prefix recorded for a partition previously corresponding input partition.However,the runtime that processed by the same processor schedules virtual processors may run them arbitrary order and .Accelerated signal propagation.Under the chained-scan without preemption.As a result,our original method is strategy,the act of sharing an inclusive prefix is only susceptible to deadlock in Step 4:the machine may be occupied capable able of releasing the processor's immediate with a set of virtual processors that will wait indefinitely for the decedent from blocking look-back.Under our strategy,the results of predecessors that cannot be scheduled until the active recording of a partition's inclusive prefix is capable of set retires.This can be remedied by providing each virtual releasing al/decedent processors. processor with an identifier that guarantees every preceding Support for non-commutative scan operators.Our method partition has been actively scheduled.For example,each virtual only applies the reduction operator to consecutive inputs (or processor can obtain such an identifier upon activation by consecutive partial reductions). atomically-incrementing a global counter. Fence-free descriptor updates.The descriptor updates in 4.3 An Example Steps 3 and 5 each require three memory operations:(1)an update to aggregate or inclusive prefix;(2)a memory fence;and (3)an Suppose a small prefix sum problem computed by eight update to status flag.The memory fences preserve a valid, processors over eight partitions where,for illustrative purposes, consistent view of descriptors across all processors.Otherwise the sum of each partition equals 2.The snapshot of execution the compiler or the memory subsystem may reorder the updates to state in Fig.6 illustrates many of the relative processor schedules these fields (e.g,by first writing A to status flag and then and short-circuiting opportunities that may manifest under our updating aggregate).Such orderings would invite a small method.The state of each processor is as follows: window of time between write operations in which peer processoro is finished.It has computed and recorded its processors would be susceptible to a view of invalid state. aggregate,copied it to the inclusive prefix field,and set its Memory fences can incur a performance penalty,however flag to P This occurs when the fence implementation prevents write processori has computed and recorded its aggregate,set its flag to A,computed and recorded its inclusive prefix,but has not yet updated its flag to P.Its exclusive prefix was By oversubscribing physical hardware with an abundance of virtual obtained from its immediate predecessor's inclusive prefix. processors,parallel programs can be made portable across computers having different processor configurations. Furthermore,“over- processor,has computed and recorded its aggregate,set its partitioning"is often a useful technique for mitigating transient load flag to 4,but has not started inspecting predecessors. imbalances between partition workloads. 66 Step 3. (And aggregates are sufficient to compute an exclusive prefix.) Minimal waiting. Blocking will be minimal for systems that provide fair or nearly-fair scheduling. Fairness ensures that all processors will have recorded their aggregates in roughly the same amount of time. This includes the inclusive_prefix of the first block. As a result, processors are generally to freely accumulate all of their predecessor aggregates with minimal blocking or waiting. Constant-bound look-back. The amount of look-back is constant given a finite number processing elements. (All physically-realizable computer systems have a finite number of processors.) A constant amount of look-back ensures an optimal overall work-complexity of O(n). This property is true regardless whether processors are assigned: o A single partition of size n/p. There are only a finite number of preceding partitions for each processor to inspect o Multiple partitions of constant size. The assignment of n/p partitions is striped across the processors and partitions are processed one at a time to completion, i.e., processor0 scans partition0, partitionP, partition2P, partition3P, etc. Each partition may inspect at most p predecessors before it reaches the prefix recorded for a partition previously processed by the same processor. Accelerated signal propagation. Under the chained-scan strategy, the act of sharing an inclusive_prefix is only capable able of releasing the processor’s immediate decedent from blocking look-back. Under our strategy, the recording of a partition’s inclusive_prefix is capable of releasing all decedent processors. Support for non-commutative scan operators. Our method only applies the reduction operator to consecutive inputs (or consecutive partial reductions). 4.3 An Example Suppose a small prefix sum problem computed by eight processors over eight partitions where, for illustrative purposes, the sum of each partition equals 2. The snapshot of execution state in Fig. 6 illustrates many of the relative processor schedules and short-circuiting opportunities that may manifest under our method. The state of each processor is as follows: processor0 is finished. It has computed and recorded its aggregate, copied it to the inclusive_prefix field, and set its flag to P. processor1 has computed and recorded its aggregate, set its flag to A, computed and recorded its inclusive_prefix, but has not yet updated its flag to P. Its exclusive_prefix was obtained from its immediate predecessor’s inclusive_prefix. processor2 has computed and recorded its aggregate, set its flag to A, but has not started inspecting predecessors. processor3 is finished. It has computed and recorded its aggregate , set its flag to A, computed and recorded its inclusive_prefix, and set its flag to P. It used a look-back window of three predecessors to determine its exclusive_prefix. processor4 has computed and recorded its aggregate, set its flag to A, but has not started inspecting predecessors. processor5 is finished. It has computed and recorded its aggregate , set its flag to A, computed and recorded its inclusive_prefix, and set its flag to P. It used a look-back window of two predecessors to determine its exclusive_prefix. processor6 has not yet started. processor7 has computed and recorded its aggregate, set its flag to A, and is waiting on its immediate predecessor to valid. 4.4 Adaptations and Optimizations This section describes various adaptations and optimizations that we have applied to our basic method. Virtual processors. Many programming models such as CUDA employ an abstraction of virtual processors that are dynamically scheduled on the hardware’s physical processors4 . Each processor is given a numeric rank by which it can index its corresponding input partition. However, the runtime that schedules virtual processors may run them arbitrary order and without preemption. As a result, our original method is susceptible to deadlock in Step 4: the machine may be occupied with a set of virtual processors that will wait indefinitely for the results of predecessors that cannot be scheduled until the active set retires. This can be remedied by providing each virtual processor with an identifier that guarantees every preceding partition has been actively scheduled. For example, each virtual processor can obtain such an identifier upon activation by atomically-incrementing a global counter. Fence-free descriptor updates. The descriptor updates in Steps 3 and 5 each require three memory operations: (1) an update to aggregate or inclusive_prefix; (2) a memory fence; and (3) an update to status_flag. The memory fences preserve a valid, consistent view of descriptors across all processors. Otherwise the compiler or the memory subsystem may reorder the updates to these fields (e.g., by first writing A to status_flag and then updating aggregate). Such orderings would invite a small window of time between write operations in which peer processors would be susceptible to a view of invalid state. Memory fences can incur a performance penalty, however. This occurs when the fence implementation prevents write 4 By oversubscribing physical hardware with an abundance of virtual processors, parallel programs can be made portable across computers having different processor configurations. Furthermore, “overpartitioning” is often a useful technique for mitigating transient load imbalances between partition workloads. Partition ID 0 1 2 3 4 5 6 7 Flag P A A P A P X A Aggregate 2 2 2 2 2 2 - 2 Inclusive Prefix 2 4 - 8 - 12 - - Fig. 6. “Snapshot” of execution state during a small prefix sum problem computed by eight processors over eight intput partitions