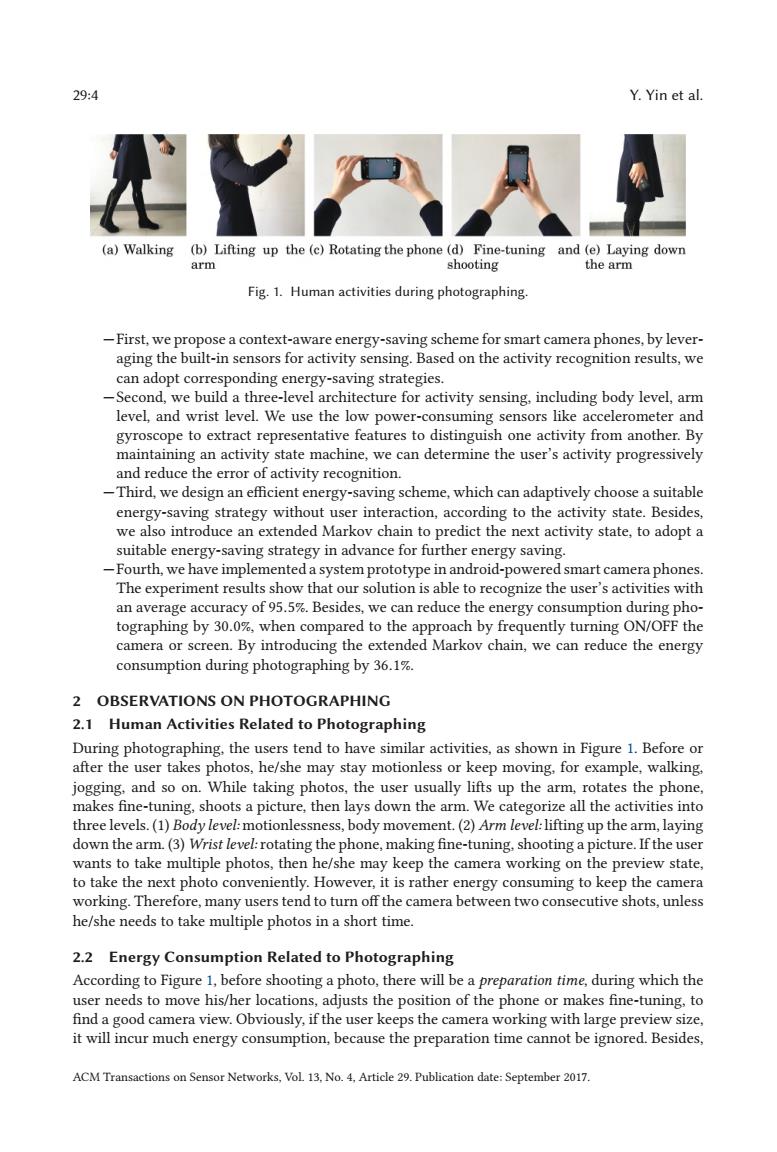

29:4 Y. Yin et al.

Fig. 1. Human activities during photographing: (a) walking, (b) lifting up the arm, (c) rotating the phone, (d) fine-tuning and shooting, (e) laying down the arm.

- First, we propose a context-aware energy-saving scheme for smart camera phones that leverages the built-in sensors for activity sensing. Based on the activity recognition results, we adopt the corresponding energy-saving strategies.
- Second, we build a three-level architecture for activity sensing, comprising the body level, arm level, and wrist level. We use low-power sensors such as the accelerometer and gyroscope to extract representative features that distinguish one activity from another. By maintaining an activity state machine, we determine the user's activity progressively and reduce activity recognition errors.
- Third, we design an efficient energy-saving scheme that adaptively chooses a suitable energy-saving strategy according to the activity state, without user interaction. We also introduce an extended Markov chain to predict the next activity state, so that a suitable energy-saving strategy can be adopted in advance for further energy saving.
- Fourth, we have implemented a system prototype on Android-powered smart camera phones. The experimental results show that our solution recognizes the user's activities with an average accuracy of 95.5%. Moreover, it reduces the energy consumption during photographing by 30.0% compared with the approach of frequently turning the camera or screen ON/OFF. With the extended Markov chain, it reduces the energy consumption during photographing by 36.1%.

2 OBSERVATIONS ON PHOTOGRAPHING

2.1 Human Activities Related to Photographing

During photographing, users tend to perform similar activities, as shown in Figure 1. Before or after taking photos, the user may stay motionless or keep moving, for example, walking or jogging. While taking photos, the user usually lifts up the arm, rotates the phone, makes fine-tuning, shoots a picture, and then lays down the arm. We categorize all the activities into three levels:

(1) Body level: motionlessness, body movement.
(2) Arm level: lifting up the arm, laying down the arm.
(3) Wrist level: rotating the phone, making fine-tuning, shooting a picture.

If the user wants to take multiple photos, he/she may keep the camera in the preview state so that the next photo can be taken conveniently. However, keeping the camera working consumes considerable energy. Therefore, many users turn off the camera between two consecutive shots unless they need to take multiple photos in a short time.
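To make the three-level categorization and the idea of an activity state machine concrete, the following is a minimal sketch in Python. The seven activities and their levels are taken from the list above. The transition table `ALLOWED`, the class `ActivityStateMachine`, and the rule of ignoring unreachable candidates are illustrative assumptions, not the transition rules of the actual system.

```python
from enum import Enum


class Level(Enum):
    BODY = "body"
    ARM = "arm"
    WRIST = "wrist"


class Activity(Enum):
    MOTIONLESS = "motionlessness"
    BODY_MOVEMENT = "body movement"
    LIFT_ARM = "lifting up the arm"
    LAY_DOWN_ARM = "laying down the arm"
    ROTATE_PHONE = "rotating the phone"
    FINE_TUNE = "making fine-tuning"
    SHOOT = "shooting a picture"


# Three-level grouping exactly as listed in Section 2.1.
LEVEL_OF = {
    Activity.MOTIONLESS: Level.BODY,
    Activity.BODY_MOVEMENT: Level.BODY,
    Activity.LIFT_ARM: Level.ARM,
    Activity.LAY_DOWN_ARM: Level.ARM,
    Activity.ROTATE_PHONE: Level.WRIST,
    Activity.FINE_TUNE: Level.WRIST,
    Activity.SHOOT: Level.WRIST,
}

# ASSUMPTION: a hypothetical transition table derived from the usual
# sequence "lift arm -> rotate -> fine-tune -> shoot -> lay down arm".
ALLOWED = {
    Activity.MOTIONLESS: {Activity.BODY_MOVEMENT, Activity.LIFT_ARM},
    Activity.BODY_MOVEMENT: {Activity.MOTIONLESS, Activity.LIFT_ARM},
    Activity.LIFT_ARM: {Activity.ROTATE_PHONE, Activity.FINE_TUNE,
                        Activity.LAY_DOWN_ARM},
    Activity.ROTATE_PHONE: {Activity.FINE_TUNE, Activity.LAY_DOWN_ARM},
    Activity.FINE_TUNE: {Activity.ROTATE_PHONE, Activity.SHOOT,
                         Activity.LAY_DOWN_ARM},
    Activity.SHOOT: {Activity.ROTATE_PHONE, Activity.FINE_TUNE,
                     Activity.LAY_DOWN_ARM},
    Activity.LAY_DOWN_ARM: {Activity.MOTIONLESS, Activity.BODY_MOVEMENT,
                            Activity.LIFT_ARM},
}


class ActivityStateMachine:
    """Tracks the current activity and filters implausible candidates."""

    def __init__(self, start=Activity.MOTIONLESS):
        self.state = start

    def update(self, candidate):
        # Accept the recognized candidate only if it is the current state
        # or reachable from it; otherwise keep the current state.
        if candidate == self.state or candidate in ALLOWED[self.state]:
            self.state = candidate
        return self.state


if __name__ == "__main__":
    fsm = ActivityStateMachine()
    recognized = [Activity.BODY_MOVEMENT, Activity.LIFT_ARM, Activity.SHOOT,
                  Activity.ROTATE_PHONE, Activity.FINE_TUNE, Activity.SHOOT,
                  Activity.LAY_DOWN_ARM]
    for candidate in recognized:
        state = fsm.update(candidate)
        print(f"{candidate.value!r} -> state {state.value!r} "
              f"({LEVEL_OF[state].value} level)")
```

In this sketch, the third candidate (shooting directly after lifting the arm) is not reachable under the assumed table, so the machine stays in the arm-lifting state. This illustrates how tracking the state progressively can suppress an isolated recognition error.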
2.2 Energy Consumption Related to Photographing

According to Figure 1, before shooting a photo there is a preparation time, during which the user needs to change location, adjust the position of the phone, or make fine-tuning to find a good camera view. If the user keeps the camera working with a large preview size during this time, it incurs considerable energy consumption, because the preparation time is not negligible. Besides,

ACM Transactions on Sensor Networks, Vol. 13, No. 4, Article 29. Publication date: September 2017.