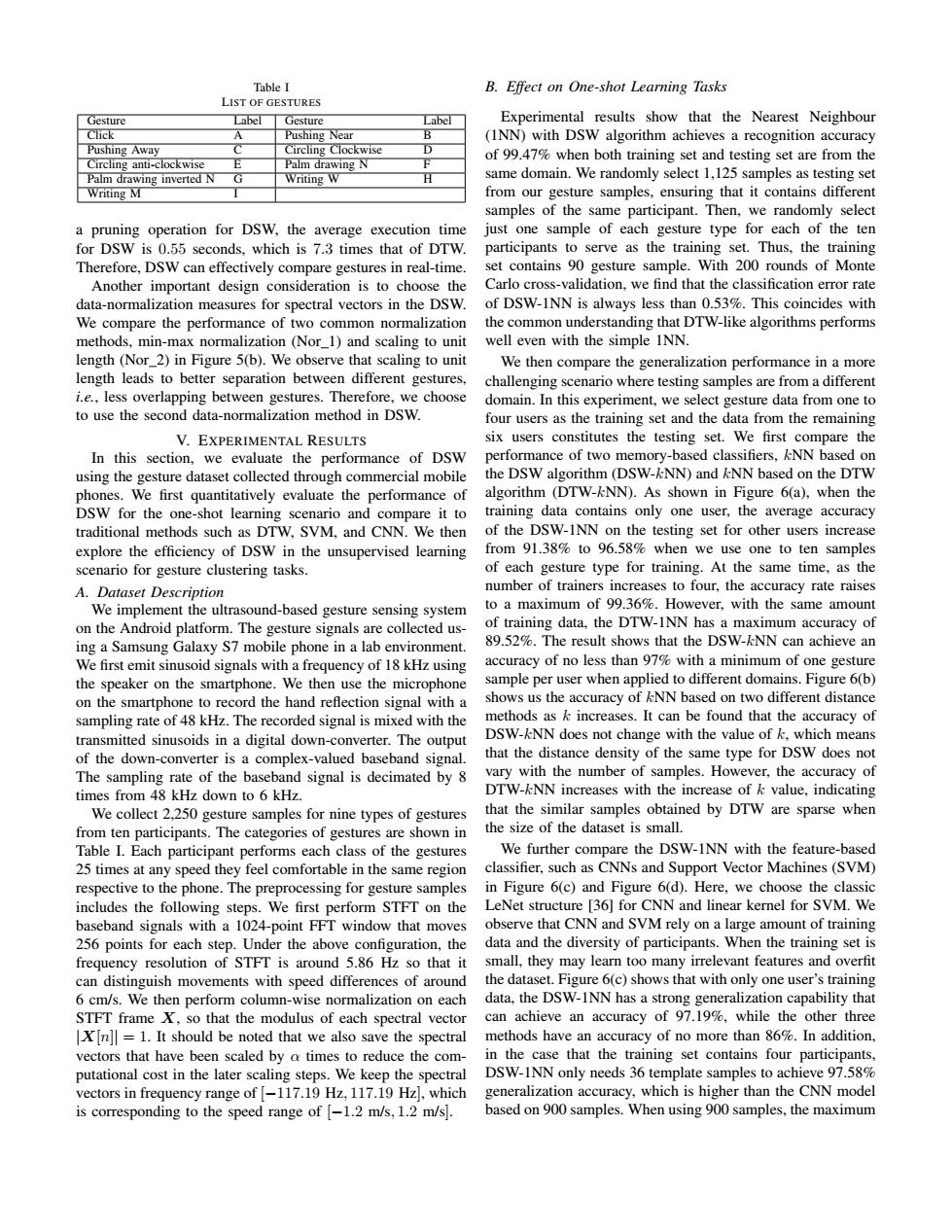

Table I. LIST OF GESTURES

| Gesture | Label |
|---|---|
| Click | A |
| Pushing Near | B |
| Pushing Away | C |
| Circling Clockwise | D |
| Circling anti-clockwise | E |
| Palm drawing N | F |
| Palm drawing inverted N | G |
| Writing W | H |
| Writing M | I |

... a pruning operation for DSW, the average execution time for DSW is 0.55 seconds, which is 7.3 times that of DTW. Therefore, DSW can effectively compare gestures in real time.

Another important design consideration is the choice of data-normalization method for the spectral vectors in DSW. We compare the performance of two common normalization methods, min-max normalization (Nor 1) and scaling to unit length (Nor 2), in Figure 5(b). We observe that scaling to unit length leads to better separation between different gestures, i.e., less overlap between gestures. Therefore, we use the second data-normalization method in DSW.

V. EXPERIMENTAL RESULTS

In this section, we evaluate the performance of DSW using the gesture dataset collected through commercial mobile phones. We first quantitatively evaluate the performance of DSW in the one-shot learning scenario and compare it to traditional methods such as DTW, SVM, and CNN. We then explore the efficiency of DSW in the unsupervised learning scenario for gesture clustering tasks.

A. Dataset Description

We implement the ultrasound-based gesture sensing system on the Android platform. The gesture signals are collected using a Samsung Galaxy S7 mobile phone in a lab environment. We first emit a sinusoidal signal with a frequency of 18 kHz using the speaker on the smartphone. We then use the microphone on the smartphone to record the hand reflection signal at a sampling rate of 48 kHz.
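As a rough illustration of this front end, the sketch below simulates a one-second recording at 48 kHz containing the 18 kHz tone and a weaker Doppler-shifted reflection, mixes it to complex baseband, and decimates by 8 to 6 kHz. It is a minimal sketch, not the authors' implementation: the windowed-sinc low-pass filter, the 129-tap length, the 0.5 m/s hand speed, the reflection amplitude, and the speed of sound (343 m/s) are all assumptions chosen for the example.

```python
import numpy as np

FS = 48_000   # microphone sampling rate in Hz (from the text)
F0 = 18_000   # transmitted tone in Hz (from the text)
DECIM = 8     # decimation factor, 48 kHz -> 6 kHz (from the text)
C = 343.0     # ASSUMED speed of sound in air, m/s

def down_convert(recorded, fs=FS, f0=F0, decim=DECIM, taps=129):
    """Mix a real recording to complex baseband, low-pass filter, decimate."""
    t = np.arange(len(recorded)) / fs
    mixed = recorded * np.exp(-2j * np.pi * f0 * t)   # shift f0 down to 0 Hz
    # ASSUMED filter: Hamming-windowed sinc, cutoff at the new Nyquist fs / (2 * decim).
    n = np.arange(taps) - (taps - 1) / 2
    lowpass = np.sinc(n / decim) * np.hamming(taps)
    lowpass /= lowpass.sum()                          # unit gain at DC
    filtered = np.convolve(mixed, lowpass, mode="same")
    return filtered[::decim]                          # keep every 8th sample

# Simulated recording: direct tone plus a reflection from a hand approaching
# at 0.5 m/s. For a reflected wave the Doppler shift is 2 * v * f0 / c.
speed = 0.5
doppler = 2 * speed * F0 / C
t = np.arange(FS) / FS                                # one second of samples
recorded = np.cos(2 * np.pi * F0 * t) + 0.3 * np.cos(2 * np.pi * (F0 + doppler) * t)

baseband = down_convert(recorded)
fs_bb = FS / DECIM                                    # 6 kHz baseband rate
# Remove the static (direct-path) component, then locate the strongest tone.
spectrum = np.abs(np.fft.fft(baseband - baseband.mean()))
freqs = np.fft.fftfreq(len(baseband), d=1 / fs_bb)
peak = freqs[np.argmax(spectrum)]
print(f"expected Doppler shift {doppler:.1f} Hz, strongest component {peak:.1f} Hz")
```

After mixing, the direct path sits at 0 Hz and hand motion appears as a small positive or negative frequency offset, which is why a complex-valued baseband at a much lower sampling rate is sufficient.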
The recorded signal is mixed with the transmitted sinusoid in a digital down-converter. The output of the down-converter is a complex-valued baseband signal. The baseband signal is decimated by a factor of 8, from 48 kHz down to 6 kHz.

We collect 2,250 gesture samples for nine types of gestures from ten participants. The categories of gestures are shown in Table I. Each participant performs each gesture class 25 times, at any speed they find comfortable, in the same region relative to the phone. The preprocessing of the gesture samples includes the following steps. We first perform STFT on the baseband signals with a 1024-point FFT window that moves 256 points at each step. Under this configuration, the frequency resolution of the STFT is around 5.86 Hz (6,000 Hz / 1,024), so that it can distinguish movements with speed differences of around 6 cm/s. We then perform column-wise normalization on each STFT frame X, so that the modulus of each spectral vector |X[n]| = 1. It should be noted that we also save the spectral vectors that have been scaled by a factor of α to reduce the computational cost of the later scaling steps. We keep the spectral vectors in the frequency range of [-117.19 Hz, 117.19 Hz], which corresponds to the speed range of [-1.2 m/s, 1.2 m/s].

B. Effect on One-shot Learning Tasks

Experimental results show that the Nearest Neighbour (1NN) classifier with the DSW algorithm achieves a recognition accuracy of 99.47% when both the training set and the testing set are from the same domain. We randomly select 1,125 samples as the testing set from our gesture samples, ensuring that it contains different samples of the same participant. Then, we randomly select just one sample of each gesture type for each of the ten participants to serve as the training set. Thus, the training set contains 90 gesture samples. With 200 rounds of Monte Carlo cross-validation, we find that the classification error rate of DSW-1NN is always less than 0.53%.
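The same-domain protocol (one template per participant and gesture, 1,125 test samples, 200 random rounds) can be sketched as follows. This is only an outline of the evaluation loop under stated assumptions: the data are synthetic, a plain Euclidean distance stands in for DSW (which is not reproduced here), and the templates are drawn before the test set so that the two are disjoint by construction. The function names are hypothetical.

```python
import numpy as np

N_PARTICIPANTS, N_GESTURES, N_REPEATS = 10, 9, 25   # 10 * 9 * 25 = 2,250 samples

def make_synthetic_dataset(rng, dim=16):
    """HYPOTHETICAL stand-in data: one random centre per gesture plus unit noise."""
    centres = rng.normal(scale=5.0, size=(N_GESTURES, dim))
    participant, gesture = np.meshgrid(
        np.arange(N_PARTICIPANTS), np.arange(N_GESTURES), indexing="ij")
    participant = np.repeat(participant.ravel(), N_REPEATS)
    gesture = np.repeat(gesture.ravel(), N_REPEATS)
    features = centres[gesture] + rng.normal(size=(gesture.size, dim))
    return features, participant, gesture

def one_shot_split(rng, participant, gesture, n_test=1125):
    """One template per (participant, gesture); test set drawn from the rest."""
    train_idx = np.array([
        rng.choice(np.flatnonzero((participant == p) & (gesture == g)))
        for p in range(N_PARTICIPANTS) for g in range(N_GESTURES)])
    rest = np.setdiff1d(np.arange(gesture.size), train_idx)
    test_idx = rng.choice(rest, size=n_test, replace=False)
    return train_idx, test_idx

def one_nn_error(features, labels, train_idx, test_idx):
    """1NN error rate; Euclidean distance is a placeholder for DSW."""
    diff = features[test_idx, None, :] - features[None, train_idx, :]
    dist = np.linalg.norm(diff, axis=2)               # (n_test, n_train)
    predicted = labels[train_idx][dist.argmin(axis=1)]
    return float(np.mean(predicted != labels[test_idx]))

def monte_carlo_cv(rounds=200, seed=0):
    """Repeat the random one-shot split and collect the per-round error rates."""
    rng = np.random.default_rng(seed)
    features, participant, gesture = make_synthetic_dataset(rng)
    errors = []
    for _ in range(rounds):
        train_idx, test_idx = one_shot_split(rng, participant, gesture)
        errors.append(one_nn_error(features, gesture, train_idx, test_idx))
    return np.array(errors)

errors = monte_carlo_cv()
print(f"worst error rate over {errors.size} rounds: {errors.max():.4f}")
```

Reporting the worst round, rather than the mean, mirrors the "always less than" phrasing of the result; the numbers printed here reflect only the synthetic data and say nothing about DSW itself.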
This result coincides with the common understanding that DTW-like algorithms perform well even with a simple 1NN classifier.

We then compare the generalization performance in a more challenging scenario where the testing samples are from a different domain. In this experiment, we select gesture data from one to four users as the training set, and the data from the remaining six users constitute the testing set. We first compare the performance of two memory-based classifiers, kNN based on the DSW algorithm (DSW-kNN) and kNN based on the DTW algorithm (DTW-kNN). As shown in Figure 6(a), when the training data contains only one user, the average accuracy of DSW-1NN on the testing set of other users increases from 91.38% to 96.58% as we use one to ten samples of each gesture type for training. At the same time, as the number of trainers increases to four, the accuracy rises to a maximum of 99.36%. However, with the same amount of training data, DTW-1NN has a maximum accuracy of 89.52%. The result shows that DSW-kNN can achieve an accuracy of no less than 97% with a minimum of one gesture sample per user when applied to different domains. Figure 6(b) shows the accuracy of kNN based on the two distance measures as k increases. The accuracy of DSW-kNN does not change with the value of k, which means that the distance density of the same type for DSW does not vary with the number of samples. However, the accuracy of DTW-kNN increases with k, indicating that the similar samples obtained by DTW are sparse when the dataset is small.

We further compare DSW-1NN with feature-based classifiers, such as CNNs and Support Vector Machines (SVMs), in Figure 6(c) and Figure 6(d). Here, we choose the classic LeNet structure [36] for the CNN and a linear kernel for the SVM. We observe that CNN and SVM rely on a large amount of training data and on the diversity of participants.
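For reference, the memory-based DTW-kNN baseline of Figure 6(a) and 6(b) can be sketched with textbook dynamic time warping and a majority vote. This is a generic illustration, not the paper's code: the Euclidean frame cost, the unconstrained warping path, the function names, and the toy "rise"/"fall" templates are assumptions, and DSW itself is not reproduced.

```python
from collections import Counter

import numpy as np

def dtw_distance(a, b):
    """Textbook O(len(a) * len(b)) DTW between two sequences of feature vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.ndim == 1:
        a = a[:, None]                  # treat scalars as 1-D feature vectors
    if b.ndim == 1:
        b = b[:, None]
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = np.linalg.norm(a[i - 1] - b[j - 1])   # ASSUMED frame cost
            cost[i, j] = d + min(cost[i - 1, j],      # stretch sequence b
                                 cost[i, j - 1],      # stretch sequence a
                                 cost[i - 1, j - 1])  # advance both
    return cost[n, m]

def knn_predict(query, templates, labels, k=1):
    """Label a query by majority vote among its k nearest templates under DTW."""
    dists = np.array([dtw_distance(query, t) for t in templates])
    nearest = np.argsort(dists, kind="stable")[:k]
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Toy example: the query is a slower version of the rising template.
templates = [np.linspace(0, 1, 20), np.linspace(1, 0, 20)]
labels = ["rise", "fall"]
query = np.linspace(0, 1, 35)
prediction = knn_predict(query, templates, labels, k=1)
print(prediction)
```

With k = 1 the classifier needs only one stored template per class, which is the one-shot setting; larger k only helps when several templates of the same class lie close to the query.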
When the training set is small, CNN and SVM may learn too many irrelevant features and overfit the dataset. Figure 6(c) shows that with only one user's training data, DSW-1NN has a strong generalization capability, achieving an accuracy of 97.19%, while the other three methods have an accuracy of no more than 86%. In addition, when the training set contains four participants, DSW-1NN needs only 36 template samples to achieve 97.58% generalization accuracy, which is higher than that of the CNN model based on 900 samples. When using 900 samples, the maximum