正在加载图片...

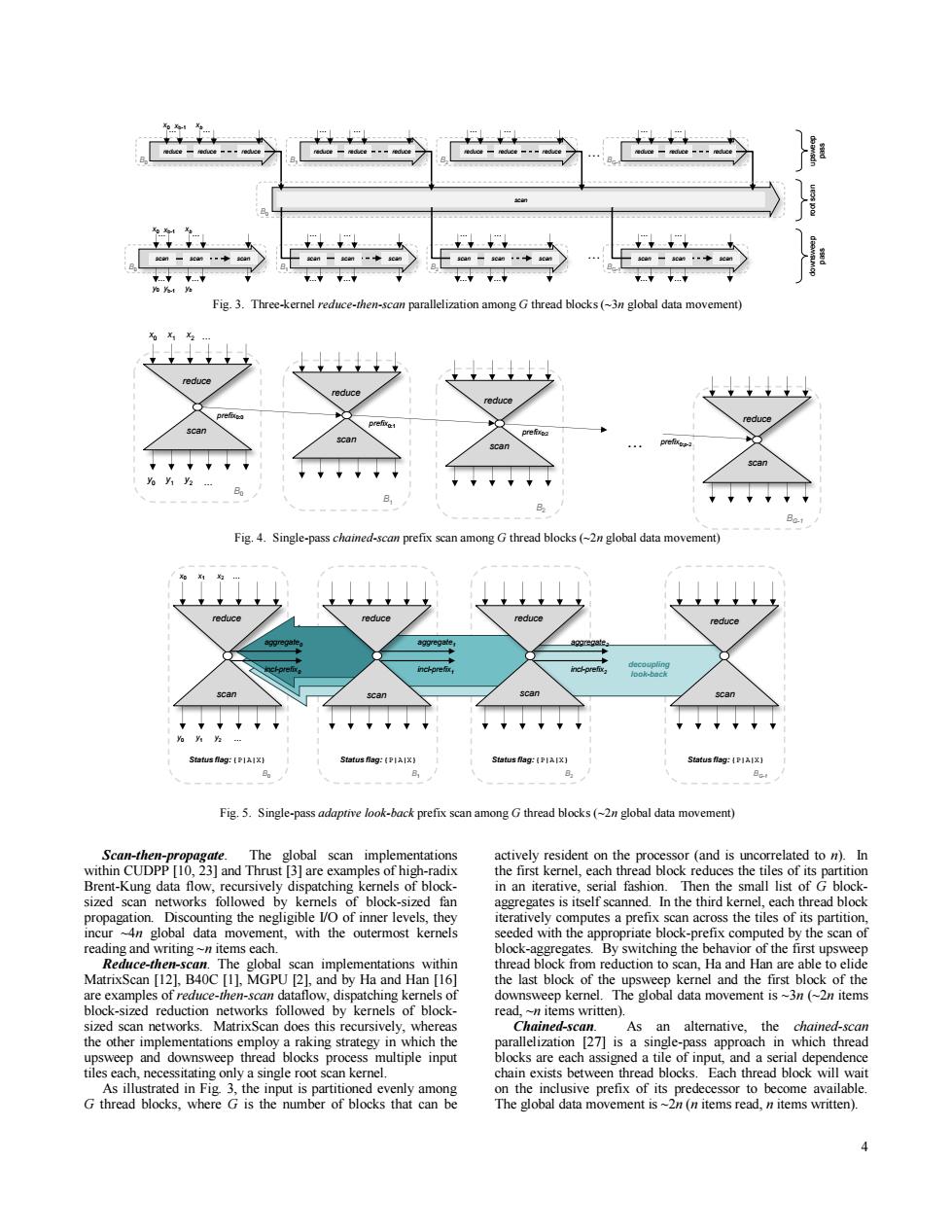

Fig.3.Three-kernel reduce-then-scan parallelization among G thread blocks(~3n global data movement) Fig.4.Single-pass chained-scan prefix scan among G thread blocks(~2n global data movement) reduce reduce scan Status flog:[PIAIX) Status flag:IP1Alx】 Status flag:【PIAIX】 Status flag:IP1Ax】 Fig.5.Single-pass adaptive look-back prefix scan among G thread blocks(~2n global data movement) Scan-then-propagate.The global scan implementations actively resident on the processor (and is uncorrelated to n).In within CUDPP [10,23]and Thrust [3]are examples of high-radix the first kernel,each thread block reduces the tiles of its partition Brent-Kung data flow,recursively dispatching kernels of block- in an iterative,serial fashion.Then the small list of G block- sized scan networks followed by kernels of block-sized fan aggregates is itself scanned.In the third kernel,each thread block propagation.Discounting the negligible I/O of inner levels,they iteratively computes a prefix scan across the tiles of its partition, incur ~4n global data movement,with the outermost kernels seeded with the appropriate block-prefix computed by the scan of reading and writing ~n items each. block-aggregates.By switching the behavior of the first upsweep Reduce-then-scan.The global scan implementations within thread block from reduction to scan,Ha and Han are able to elide MatrixScan [12],B40C [1],MGPU [2],and by Ha and Han [16] the last block of the upsweep kernel and the first block of the are examples of reduce-then-scan dataflow,dispatching kernels of downsweep kernel.The global data movement is~3n(~2n items block-sized reduction networks followed by kernels of block- read,~n items written). sized scan networks.MatrixScan does this recursively,whereas Chained-scan.As an alternative,the chained-scan the other implementations employ a raking strategy in which the parallelization [27]is a single-pass approach in which thread upsweep and downsweep thread blocks process multiple input blocks are each assigned a tile of input,and a serial dependence tiles each,necessitating only a single root scan kernel. chain exists between thread blocks.Each thread block will wait As illustrated in Fig 3,the input is partitioned evenly among on the inclusive prefix of its predecessor to become available. G thread blocks,where G is the number of blocks that can be The global data movement is~2n(n items read,n items written).4 Scan-then-propagate. The global scan implementations within CUDPP [10, 23] and Thrust [3] are examples of high-radix Brent-Kung data flow, recursively dispatching kernels of blocksized scan networks followed by kernels of block-sized fan propagation. Discounting the negligible I/O of inner levels, they incur ~4n global data movement, with the outermost kernels reading and writing ~n items each. Reduce-then-scan. The global scan implementations within MatrixScan [12], B40C [1], MGPU [2], and by Ha and Han [16] are examples of reduce-then-scan dataflow, dispatching kernels of block-sized reduction networks followed by kernels of blocksized scan networks. MatrixScan does this recursively, whereas the other implementations employ a raking strategy in which the upsweep and downsweep thread blocks process multiple input tiles each, necessitating only a single root scan kernel. As illustrated in Fig. 3, the input is partitioned evenly among G thread blocks, where G is the number of blocks that can be actively resident on the processor (and is uncorrelated to n). In the first kernel, each thread block reduces the tiles of its partition in an iterative, serial fashion. Then the small list of G blockaggregates is itself scanned. In the third kernel, each thread block iteratively computes a prefix scan across the tiles of its partition, seeded with the appropriate block-prefix computed by the scan of block-aggregates. By switching the behavior of the first upsweep thread block from reduction to scan, Ha and Han are able to elide the last block of the upsweep kernel and the first block of the downsweep kernel. The global data movement is ~3n (~2n items read, ~n items written). Chained-scan. As an alternative, the chained-scan parallelization [27] is a single-pass approach in which thread blocks are each assigned a tile of input, and a serial dependence chain exists between thread blocks. Each thread block will wait on the inclusive prefix of its predecessor to become available. The global data movement is ~2n (n items read, n items written). Fig. 3. Three-kernel reduce-then-scan parallelization among G thread blocks (~3n global data movement) Fig. 4. Single-pass chained-scan prefix scan among G thread blocks (~2n global data movement) Fig. 5. Single-pass adaptive look-back prefix scan among G thread blocks (~2n global data movement) reduce reduce x0 xb-1 … xb B0 reduce reduce reduce … … reduce … B1 reduce reduce reduce … reduce … B2 reduce reduce reduce … reduce … BG-1 reduce reduce reduce … reduce … B1 … scan … … reduce … B2 … scan scan scan … … reduce x0 xb-1 … xb B0 … scan scan scan … … y0 yb-1 yb B0 reduce … BG-1 … scan scan scan … … scan … upsweep pass downsweep pass root scan scan scan B0 B1 B2 BG-1 x0 x1 x2 y0 y1 y2 … … … prefix0:0 prefix0:1 prefix0:2 prefix0:p-2 reduce reduce reduce reduce scan scan scan scan B0 B1 B2 BG-1 x0 x1 x2 y0 y1 y2 … … aggregate0 incl-prefix0 decoupling look-back aggregate1 incl-prefix1 aggregate2 incl-prefix2 reduce reduce reduce reduce scan scan scan scan Status flag: {P|A|X} Status flag: {P|A|X} Status flag: {P|A|X} Status flag: {P|A|X}