
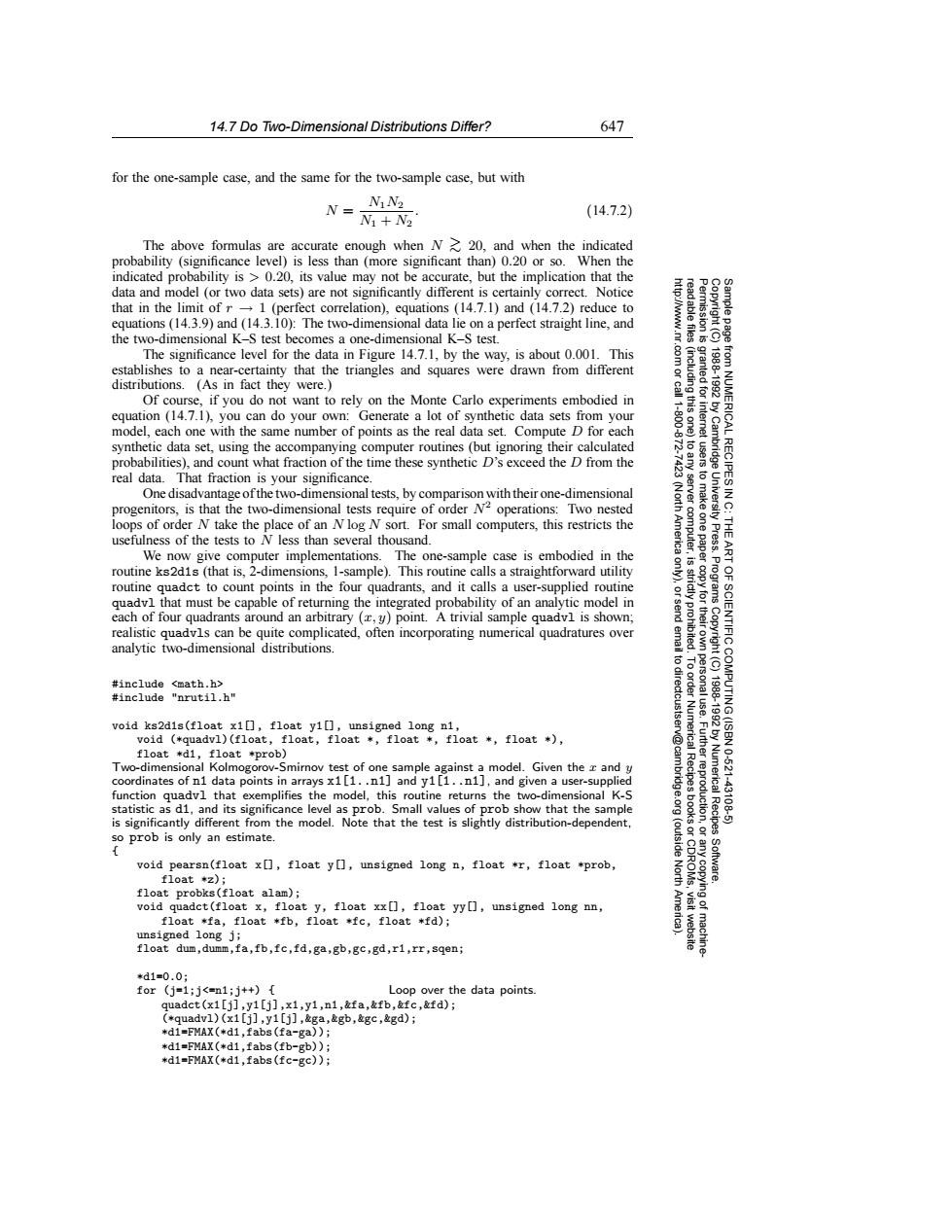

14.7 Do Two-Dimensional Distributions Differ?

Sample page from NUMERICAL RECIPES IN C: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43108-5). Copyright (C) 1988-1992 by Cambridge University Press. Programs Copyright (C) 1988-1992 by Numerical Recipes Software. Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machine-readable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

for the one-sample case, and the same for the two-sample case, but with

$$N = \frac{N_1 N_2}{N_1 + N_2}. \tag{14.7.2}$$

The above formulas are accurate enough when $N \gtrsim 20$, and when the indicated probability (significance level) is less than (more significant than) 0.20 or so. When the indicated probability is > 0.20, its value may not be accurate, but the implication that the data and model (or two data sets) are not significantly different is certainly correct. Notice that in the limit of $r \to 1$ (perfect correlation), equations (14.7.1) and (14.7.2) reduce to equations (14.3.9) and (14.3.10): The two-dimensional data lie on a perfect straight line, and the two-dimensional K–S test becomes a one-dimensional K–S test.

The significance level for the data in Figure 14.7.1, by the way, is about 0.001. This establishes to a near-certainty that the triangles and squares were drawn from different distributions. (As in fact they were.)

Of course, if you do not want to rely on the Monte Carlo experiments embodied in equation (14.7.1), you can do your own: Generate a lot of synthetic data sets from your model, each one with the same number of points as the real data set. Compute D for each synthetic data set, using the accompanying computer routines (but ignoring their calculated probabilities), and count what fraction of the time these synthetic D’s exceed the D from the real data. That fraction is your significance.
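The do-it-yourself Monte Carlo procedure just described can be sketched in C roughly as follows. This is only an illustration under stated assumptions, not one of the book's routines: the names mc_significance, sample_fn, stat_fn, draw_unit_square, and toy_stat are all hypothetical. The two small callbacks at the end are toy stand-ins so that the sketch compiles on its own. In real use the sampler would draw from your model, and the statistic callback would wrap the routine from the text and return only D.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical callback types (assumptions, not part of the book's code).
   draw fills x[1..n], y[1..n] with one synthetic data set from the model;
   stat returns the statistic D for a data set. ctx is passed through. */
typedef void (*sample_fn)(float x[], float y[], unsigned long n, void *ctx);
typedef float (*stat_fn)(float x[], float y[], unsigned long n, void *ctx);

/* Returns the fraction of ntrial synthetic data sets, each with n points,
   whose D exceeds d_real. Returns -1.0 if n or ntrial is zero or if
   memory cannot be allocated. */
float mc_significance(float d_real, unsigned long n, unsigned long ntrial,
                      sample_fn draw, stat_fn stat, void *ctx)
{
    unsigned long t, exceed = 0;
    float *x, *y;

    assert(draw != NULL && stat != NULL);
    if (n == 0 || ntrial == 0) return -1.0f;
    /* n+1 elements so that the unit-offset range [1..n] is valid. */
    x = (float *)malloc((n + 1) * sizeof *x);
    y = (float *)malloc((n + 1) * sizeof *y);
    if (x == NULL || y == NULL) { free(x); free(y); return -1.0f; }

    for (t = 0; t < ntrial; t++) {
        draw(x, y, n, ctx);                  /* one synthetic data set */
        if (stat(x, y, n, ctx) > d_real) exceed++;
    }
    free(x);
    free(y);
    return (float)exceed / (float)ntrial;
}

/* Toy sampler: independent uniform deviates on the unit square.
   rand() is used only for brevity; substitute a better generator. */
void draw_unit_square(float x[], float y[], unsigned long n, void *ctx)
{
    unsigned long i;
    (void)ctx;
    for (i = 1; i <= n; i++) {
        x[i] = (float)(rand() / (RAND_MAX + 1.0));
        y[i] = (float)(rand() / (RAND_MAX + 1.0));
    }
}

/* Toy statistic, NOT the K-S D: largest |x - 0.5| over the points.
   A real stat_fn would instead call
       ks2d1s(x, y, n, quadvl, &d, &prob);
   and return d, ignoring prob. */
float toy_stat(float x[], float y[], unsigned long n, void *ctx)
{
    unsigned long i;
    float d, dmax = 0.0f;
    (void)y;
    (void)ctx;
    for (i = 1; i <= n; i++) {
        d = x[i] - 0.5f;
        if (d < 0.0f) d = -d;
        if (d > dmax) dmax = d;
    }
    return dmax;
}
```

The resolution of the estimate is limited by the number of trials: with ntrial synthetic data sets the smallest nonzero significance that can be reported is 1/ntrial, so resolving a level near 0.001 takes well over a thousand trials, each costing of order $N^2$ operations for the two-dimensional statistic.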
One disadvantage of the two-dimensional tests, by comparison with their one-dimensional progenitors, is that the two-dimensional tests require of order $N^2$ operations: Two nested loops of order $N$ take the place of an $N \log N$ sort. For small computers, this restricts the usefulness of the tests to $N$ less than several thousand.

We now give computer implementations. The one-sample case is embodied in the routine ks2d1s (that is, 2-dimensions, 1-sample). This routine calls a straightforward utility routine quadct to count points in the four quadrants, and it calls a user-supplied routine quadvl that must be capable of returning the integrated probability of an analytic model in each of four quadrants around an arbitrary (x, y) point. A trivial sample quadvl is shown; realistic quadvls can be quite complicated, often incorporating numerical quadratures over analytic two-dimensional distributions.

    #include <math.h>
    #include "nrutil.h"

    void ks2d1s(float x1[], float y1[], unsigned long n1,
        void (*quadvl)(float, float, float *, float *, float *, float *),
        float *d1, float *prob)
    /* Two-dimensional Kolmogorov-Smirnov test of one sample against a model.
       Given the x and y coordinates of n1 data points in arrays x1[1..n1] and
       y1[1..n1], and given a user-supplied function quadvl that exemplifies
       the model, this routine returns the two-dimensional K-S statistic as d1,
       and its significance level as prob. Small values of prob show that the
       sample is significantly different from the model. Note that the test is
       slightly distribution-dependent, so prob is only an estimate. */
    {
        void pearsn(float x[], float y[], unsigned long n, float *r,
            float *prob, float *z);
        float probks(float alam);
        void quadct(float x, float y, float xx[], float yy[], unsigned long nn,
            float *fa, float *fb, float *fc, float *fd);
        unsigned long j;
        float dum,dumm,fa,fb,fc,fd,ga,gb,gc,gd,r1,rr,sqen;

        *d1=0.0;
        for (j=1;j<=n1;j++) {    /* Loop over the data points. */
            quadct(x1[j],y1[j],x1,y1,n1,&fa,&fb,&fc,&fd);
            (*quadvl)(x1[j],y1[j],&ga,&gb,&gc,&gd);
            *d1=FMAX(*d1,fabs(fa-ga));
            *d1=FMAX(*d1,fabs(fb-gb));
            *d1=FMAX(*d1,fabs(fc-gc));