
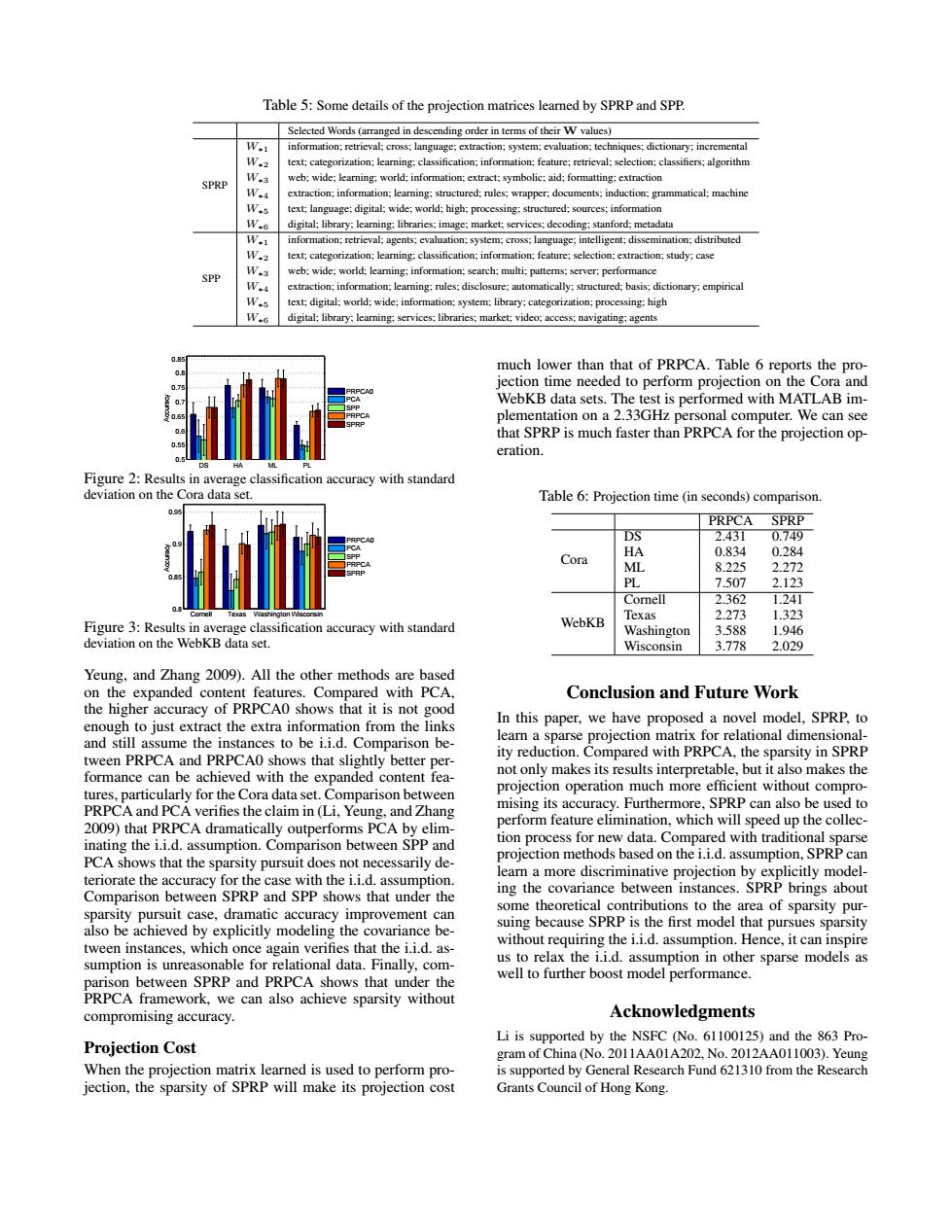

Table 5: Some details of the projection matrices learned by SPRP and SPP.
| Method | Column | Selected words (arranged in descending order of their W values) |
|---|---|---|
| SPRP | W∗1 | information; retrieval; cross; language; extraction; system; evaluation; techniques; dictionary; incremental |
| SPRP | W∗2 | text; categorization; learning; classification; information; feature; retrieval; selection; classifiers; algorithm |
| SPRP | W∗3 | web; wide; learning; world; information; extract; symbolic; aid; formatting; extraction |
| SPRP | W∗4 | extraction; information; learning; structured; rules; wrapper; documents; induction; grammatical; machine |
| SPRP | W∗5 | text; language; digital; wide; world; high; processing; structured; sources; information |
| SPRP | W∗6 | digital; library; learning; libraries; image; market; services; decoding; stanford; metadata |
| SPP | W∗1 | information; retrieval; agents; evaluation; system; cross; language; intelligent; dissemination; distributed |
| SPP | W∗2 | text; categorization; learning; classification; information; feature; selection; extraction; study; case |
| SPP | W∗3 | web; wide; world; learning; information; search; multi; patterns; server; performance |
| SPP | W∗4 | extraction; information; learning; rules; disclosure; automatically; structured; basis; dictionary; empirical |
| SPP | W∗5 | text; digital; world; wide; information; system; library; categorization; processing; high |
| SPP | W∗6 | digital; library; learning; services; libraries; market; video; access; navigating; agents |

Figure 2: Results in average classification accuracy with standard deviation on the Cora data set (subsets DS, HA, ML, and PL; methods PRPCA0, PCA, SPP, PRPCA, and SPRP).

Figure 3: Results in average classification accuracy with standard deviation on the WebKB data set (subsets Cornell, Texas, Washington, and Wisconsin; methods PRPCA0, PCA, SPP, PRPCA, and SPRP).

Yeung, and Zhang 2009). All the other methods are based on the expanded content features. Compared with PCA, the higher accuracy of PRPCA0 shows that it is not good enough to just extract the extra information from the links and still assume the instances to be i.i.d.
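The word lists in Table 5 are arranged, within each column W∗j, in descending order of their W values. A minimal sketch of such a column-by-column read-out follows, assuming W is a dense NumPy array with one row per vocabulary word and one column per projection direction, and assuming words whose weight is exactly zero are skipped (for a sparse W most entries are zero). The function name `top_words`, the toy vocabulary, and the toy matrix are hypothetical and are not the learned SPRP or SPP matrices.

```python
import numpy as np

def top_words(W, vocab, k=10):
    """For each column of W, list up to k words with nonzero weight, by descending W value."""
    tops = []
    for j in range(W.shape[1]):
        col = W[:, j]
        order = np.argsort(-col, kind="stable")          # row indices, largest value first
        nonzero = [i for i in order if col[i] != 0][:k]  # skip words whose weight is exactly zero
        tops.append([vocab[i] for i in nonzero])
    return tops

if __name__ == "__main__":
    vocab = ["text", "web", "retrieval", "library"]      # hypothetical toy vocabulary
    W = np.array([[0.0, 0.5],
                  [0.9, 0.0],
                  [0.3, 0.7],
                  [0.0, 0.1]])                           # hypothetical sparse 4 x 2 matrix
    for j, words in enumerate(top_words(W, vocab, k=3), start=1):
        print(f"W*{j}:", "; ".join(words))
    # prints:
    # W*1: web; retrieval
    # W*2: retrieval; text; library
```

Because a sparse column has few nonzero entries, its ranked list is short and concentrated on a handful of words, which is what makes each projection direction readable as a topic.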
Comparison between PRPCA and PRPCA0 shows that slightly better performance can be achieved with the expanded content features, particularly on the Cora data set. Comparison between PRPCA and PCA verifies the claim in (Li, Yeung, and Zhang 2009) that PRPCA dramatically outperforms PCA by eliminating the i.i.d. assumption. Comparison between SPP and PCA shows that pursuing sparsity does not necessarily degrade accuracy when the i.i.d. assumption is made. Comparison between SPRP and SPP shows that, when sparsity is pursued, explicitly modeling the covariance between instances can also improve accuracy dramatically, which again indicates that the i.i.d. assumption is unreasonable for relational data. Finally, comparison between SPRP and PRPCA shows that, within the PRPCA framework, sparsity can be achieved without compromising accuracy.

Projection Cost

When the learned projection matrix is used to perform projection, the sparsity of SPRP makes its projection cost much lower than that of PRPCA. Table 6 reports the time needed to perform projection on the Cora and WebKB data sets. The test was performed with a MATLAB implementation on a 2.33 GHz personal computer. SPRP is much faster than PRPCA for the projection operation: about 2.9 to 3.6 times faster on the Cora subsets and about 1.7 to 1.9 times faster on the WebKB subsets.

Table 6: Projection time (in seconds) comparison.

| Data set | Subset | PRPCA | SPRP |
|---|---|---|---|
| Cora | DS | 2.431 | 0.749 |
| Cora | HA | 0.834 | 0.284 |
| Cora | ML | 8.225 | 2.272 |
| Cora | PL | 7.507 | 2.123 |
| WebKB | Cornell | 2.362 | 1.241 |
| WebKB | Texas | 2.273 | 1.323 |
| WebKB | Washington | 3.588 | 1.946 |
| WebKB | Wisconsin | 3.778 | 2.029 |

Conclusion and Future Work

In this paper, we have proposed a novel model, SPRP, to learn a sparse projection matrix for relational dimensionality reduction. Compared with PRPCA, the sparsity in SPRP not only makes its results interpretable but also makes the projection operation much more efficient without compromising accuracy. Furthermore, SPRP can be used to perform feature elimination: features whose weights are zero in every projection direction need not be collected for new data, which speeds up the collection process.
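Both the projection-cost argument and the feature-elimination claim follow from the zero pattern of the projection matrix. A minimal sketch, assuming the projection is a plain linear map Z = (X - mu) W with a d x q matrix W (d features, q projection directions) stored as a dense NumPy array; any additional q x q transform the actual model may apply is omitted. The helper names `sparse_project`, `kept_features`, and `multiply_adds`, and the random sizes and sparsity level, are hypothetical, and the sketch counts arithmetic operations rather than reproducing the MATLAB timings in Table 6.

```python
import numpy as np

def kept_features(W):
    """Indices of features (rows of W) with at least one nonzero weight.

    A feature whose row is all zero never contributes to the projection,
    so it does not need to be collected for new data.
    """
    return np.flatnonzero(np.any(W != 0, axis=1))

def sparse_project(W, X, mu):
    """Compute Z = (X - mu) @ W, reading only the nonzero entries of each column of W."""
    n, q = X.shape[0], W.shape[1]
    Z = np.zeros((n, q))
    for j in range(q):
        idx = np.flatnonzero(W[:, j])              # features used by projection direction j
        Z[:, j] = (X[:, idx] - mu[idx]) @ W[idx, j]
    return Z

def multiply_adds(W, n):
    """Multiply-add counts for projecting n instances: full dense W versus nonzeros only."""
    d, q = W.shape
    return n * d * q, n * int(np.count_nonzero(W))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, q, n = 1000, 6, 50                          # hypothetical sizes
    W = rng.standard_normal((d, q))
    W[rng.random((d, q)) < 0.95] = 0.0             # hypothetical: about 5% nonzero weights
    X = rng.standard_normal((n, d))
    mu = X.mean(axis=0)
    Z = sparse_project(W, X, mu)
    assert np.allclose(Z, (X - mu) @ W)            # same result as the dense projection
    dense_ops, sparse_ops = multiply_adds(W, n)
    print("multiply-adds, dense vs sparse:", dense_ops, sparse_ops)
    print(len(kept_features(W)), "of", d, "features must be collected")
```

Projecting n instances with a dense W costs n·d·q multiply-adds, whereas touching only the nonzeros costs n·nnz(W); and a row of W that is entirely zero means the corresponding feature never has to be measured for new data.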
Compared with traditional sparse projection methods based on the i.i.d. assumption, SPRP can learn a more discriminative projection by explicitly modeling the covariance between instances. SPRP also makes a theoretical contribution to sparsity pursuit, because it is the first model that pursues sparsity without requiring the i.i.d. assumption. This may inspire relaxing the i.i.d. assumption in other sparse models as well, to further boost their performance.

Acknowledgments

Li is supported by the NSFC (No. 61100125) and the 863 Program of China (No. 2011AA01A202, No. 2012AA011003). Yeung is supported by General Research Fund 621310 from the Research Grants Council of Hong Kong.