
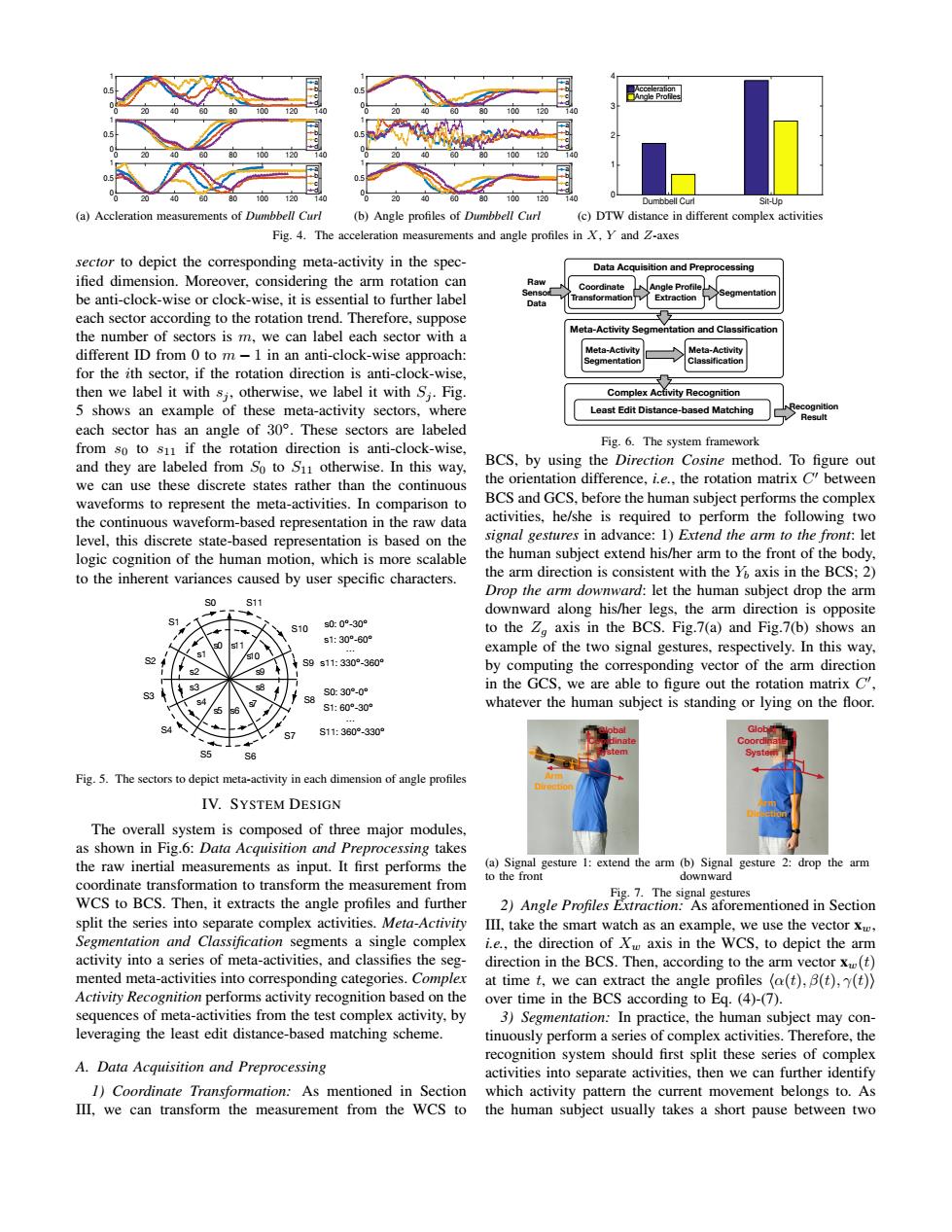

[Figure 4: (a) Acceleration measurements of Dumbbell Curl; (b) Angle profiles of Dumbbell Curl; (c) DTW distance in different complex activities (Dumbbell Curl, Sit-Up), computed on acceleration and on angle profiles]
Fig. 4. The acceleration measurements and angle profiles in X, Y and Z-axes

sector to depict the corresponding meta-activity in the specified dimension. Moreover, since the arm can rotate either anti-clockwise or clockwise, each sector must be further labeled according to the rotation trend. Suppose the number of sectors is m. We label the sectors with distinct IDs from 0 to m − 1 in the anti-clockwise direction. For the i-th sector, if the rotation direction is anti-clockwise, we label it $s_i$; otherwise, we label it $S_i$. Fig. 5 shows an example of these meta-activity sectors, where each sector spans an angle of 30°. These sectors are labeled from $s_0$ to $s_{11}$ if the rotation direction is anti-clockwise, and from $S_0$ to $S_{11}$ otherwise. In this way, we can use these discrete states rather than the continuous waveforms to represent the meta-activities.
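The sector labeling can be sketched in Python as follows. The sketch assumes m equal-width sectors numbered anti-clockwise from 0°, and it assumes that "anti-clockwise" means the angle increases between consecutive samples. `sector_label` follows the Fig. 5 convention. `to_states` is a hypothetical helper for illustration, not the paper's segmentation procedure: it turns a sampled angle profile into a sequence of discrete states and merges consecutive repeats.

```python
from typing import List, Sequence


def sector_label(angle_deg: float, anticlockwise: bool, m: int = 12) -> str:
    """Return 's<i>' (anti-clockwise) or 'S<i>' (clockwise) for the sector containing angle_deg.

    Sectors have equal width 360/m and are numbered 0..m-1 anti-clockwise from 0 degrees,
    so with m = 12 the angle 45 degrees lies in sector 1 (30-60 degrees).
    """
    width = 360.0 / m
    # The final "% m" guards against float rounding that could yield exactly 360.0.
    index = int((angle_deg % 360.0) // width) % m
    return ("s" if anticlockwise else "S") + str(index)


def to_states(angles: Sequence[float], m: int = 12) -> List[str]:
    """Hypothetical helper: convert a sampled angle profile into discrete sector states.

    The rotation direction at each step comes from the sign of the wrapped
    angle difference, and consecutive duplicate states are merged.
    """
    states: List[str] = []
    for prev, cur in zip(angles, angles[1:]):
        # Wrap the difference into [-180, 180) so that 350 -> 10 counts as +20 degrees.
        delta = (cur - prev + 180.0) % 360.0 - 180.0
        if delta == 0:
            continue  # no rotation, so no direction can be assigned
        label = sector_label(cur, delta > 0, m)
        if not states or states[-1] != label:
            states.append(label)
    return states


print(to_states([10, 20, 40, 70, 50, 20]))  # prints ['s0', 's1', 's2', 'S1', 'S0']
```

Labeling each step by the sector of the current sample is a simplification chosen for brevity. A real system would also need to handle noise and very small oscillations near sector boundaries.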
Compared with the continuous waveform-based representation at the raw-data level, this discrete state-based representation is grounded in the logical cognition of human motion. This makes it more tolerant of the inherent variations caused by user-specific characteristics.

[Figure 5: a circle divided into twelve 30° sectors numbered 0 to 11; anti-clockwise labels s0: 0°-30°, s1: 30°-60°, …, s11: 330°-360°; clockwise labels S0: 30°-0°, S1: 60°-30°, …, S11: 360°-330°]
Fig. 5. The sectors to depict meta-activity in each dimension of angle profiles

IV. SYSTEM DESIGN

The overall system is composed of three major modules, as shown in Fig. 6. Data Acquisition and Preprocessing takes the raw inertial measurements as input. It first performs the coordinate transformation to transform the measurements from the WCS to the BCS. Then, it extracts the angle profiles and further splits the series into separate complex activities. Meta-Activity Segmentation and Classification segments a single complex activity into a series of meta-activities and classifies the segmented meta-activities into corresponding categories. Complex Activity Recognition recognizes the test complex activity from its sequence of meta-activities by leveraging the least edit distance-based matching scheme.

[Figure 6: Raw Sensor Data → Data Acquisition and Preprocessing (Coordinate Transformation, Angle Profile Extraction, Segmentation) → Meta-Activity Segmentation and Classification (Meta-Activity Segmentation, Meta-Activity Classification) → Complex Activity Recognition (Least Edit Distance-based Matching) → Recognition Result]
Fig. 6. The system framework

A. Data Acquisition and Preprocessing

1) Coordinate Transformation: As mentioned in Section III, we can transform the measurements from the WCS to the BCS by using the Direction Cosine method.
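The direction cosine idea can be sketched in Python as follows. Suppose the three axes of a target frame (for example, the BCS) are known as unit vectors in a source frame (for example, the GCS). Then the matrix whose rows are those axes has entries equal to the cosines of the angles between the target and source axes. Multiplying a source-frame vector by this matrix expresses the same vector in target-frame coordinates. Chaining two such matrices, for instance WCS to GCS followed by GCS to BCS, gives an overall transformation. That chaining is an assumption about how the frames relate, not something stated here. The function names are hypothetical, and the supplied axes are assumed to be mutually orthogonal.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def normalize(v: Sequence[float]) -> Vec3:
    n = math.sqrt(dot(v, v))
    if n == 0:
        raise ValueError("zero-length axis")
    return (v[0] / n, v[1] / n, v[2] / n)


def direction_cosine_matrix(target_axes: Sequence[Sequence[float]]) -> Mat3:
    """Build a source->target direction cosine matrix (hypothetical helper).

    target_axes holds the X, Y, Z axes of the target frame expressed in
    source-frame coordinates (assumed mutually orthogonal). Entry (i, j) is
    cos(angle between target axis i and source axis j).
    """
    x, y, z = (normalize(a) for a in target_axes)
    return (x, y, z)


def transform(c: Mat3, v: Sequence[float]) -> Vec3:
    """Express a source-frame vector v in target-frame coordinates, i.e. c @ v."""
    return (dot(c[0], v), dot(c[1], v), dot(c[2], v))


def compose(c_second: Mat3, c_first: Mat3) -> Mat3:
    """Matrix product c_second @ c_first: apply c_first, then c_second."""
    cols = list(zip(*c_first))
    return tuple(tuple(dot(row, col) for col in cols) for row in c_second)


# Usage: a target frame rotated +90 degrees about the shared Z axis.
rz90 = direction_cosine_matrix([(0, 1, 0), (-1, 0, 0), (0, 0, 1)])
print(transform(rz90, (0, 1, 0)))                 # the source Y axis is the target X axis
print(transform(compose(rz90, rz90), (1, 0, 0)))  # two 90-degree turns act as one 180-degree turn
```

Because an orthonormal direction cosine matrix is a rotation, its transpose gives the inverse (target to source) transformation.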
To figure out the orientation difference, i.e., the rotation matrix $C'$ between the BCS and the GCS, the human subject is required to perform the following two signal gestures before performing the complex activities. 1) Extend the arm to the front: the human subject extends his/her arm to the front of the body, so that the arm direction is consistent with the $Y_b$ axis in the BCS. 2) Drop the arm downward: the human subject drops the arm downward along his/her legs, so that the arm direction is opposite to the $Z_g$ axis in the BCS. Fig. 7(a) and Fig. 7(b) show examples of the two signal gestures, respectively. In this way, by computing the corresponding arm-direction vectors in the GCS, we are able to figure out the rotation matrix $C'$, regardless of whether the human subject is standing or lying on the floor.

[Figure 7: (a) Signal gesture 1: extend the arm to the front; (b) Signal gesture 2: drop the arm downward. Both panels show the arm direction relative to the Global Coordinate System.]
Fig. 7. The signal gestures

2) Angle Profiles Extraction: As described in Section III, and taking the smartwatch as an example, we use the vector $x_w$, i.e., the direction of the $X_w$ axis in the WCS, to depict the arm direction in the BCS. Then, from the arm vector $x_w(t)$ at time $t$, we extract the angle profiles $\langle \alpha(t), \beta(t), \gamma(t) \rangle$ over time in the BCS according to Eqs. (4)-(7).

3) Segmentation: In practice, the human subject may continuously perform a series of complex activities. Therefore, the recognition system should first split this series into separate complex activities. Then we can further identify which activity pattern the current movement belongs to. As the human subject usually takes a short pause between two