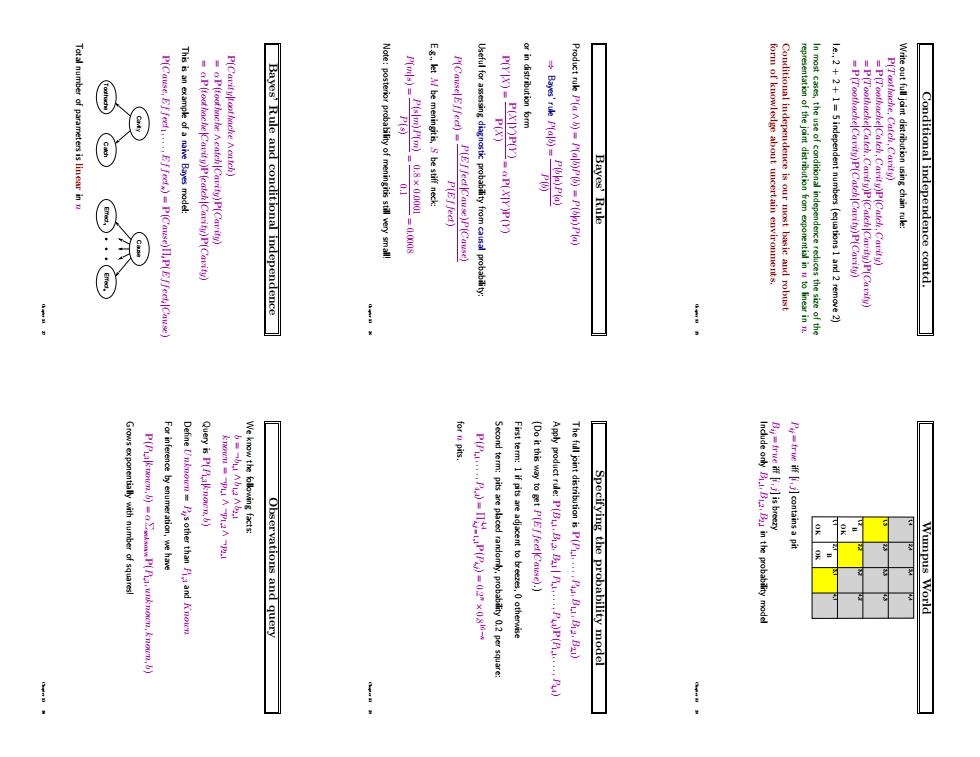

Conditional independence contd.

Write out the full joint distribution using the chain rule:

$$
\begin{aligned}
P(\mathit{Toothache}, \mathit{Catch}, \mathit{Cavity})
&= P(\mathit{Toothache} \mid \mathit{Catch}, \mathit{Cavity})\,P(\mathit{Catch}, \mathit{Cavity}) \\
&= P(\mathit{Toothache} \mid \mathit{Catch}, \mathit{Cavity})\,P(\mathit{Catch} \mid \mathit{Cavity})\,P(\mathit{Cavity}) \\
&= P(\mathit{Toothache} \mid \mathit{Cavity})\,P(\mathit{Catch} \mid \mathit{Cavity})\,P(\mathit{Cavity})
\end{aligned}
$$

I.e., 2 + 2 + 1 = 5 independent numbers (equations 1 and 2 remove 2).

In most cases, the use of conditional independence reduces the size of the representation of the joint distribution from exponential in $n$ to linear in $n$.

Conditional independence is our most basic and robust form of knowledge about uncertain environments.

Chapter 13 25

Bayes' Rule

Product rule: $P(a \land b) = P(a \mid b)\,P(b) = P(b \mid a)\,P(a)$

$\Rightarrow$ Bayes' rule:

$$
P(a \mid b) = \frac{P(b \mid a)\,P(a)}{P(b)}
$$

or in distribution form

$$
P(Y \mid X) = \frac{P(X \mid Y)\,P(Y)}{P(X)} = \alpha\,P(X \mid Y)\,P(Y)
$$

Useful for assessing diagnostic probability from causal probability:

$$
P(\mathit{Cause} \mid \mathit{Effect}) = \frac{P(\mathit{Effect} \mid \mathit{Cause})\,P(\mathit{Cause})}{P(\mathit{Effect})}
$$

E.g., let $M$ be meningitis, $S$ be stiff neck:

$$
P(m \mid s) = \frac{P(s \mid m)\,P(m)}{P(s)} = \frac{0.8 \times 0.0001}{0.1} = 0.0008
$$

Note: posterior probability of meningitis still very small!
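The meningitis calculation can be checked with a minimal Python sketch. The function name `bayes_posterior` and the variable names are illustrative assumptions, not part of the slides; the three input probabilities are the ones given in the example.

```python
def bayes_posterior(likelihood, prior, evidence):
    """Bayes' rule: P(cause | effect) = P(effect | cause) * P(cause) / P(effect)."""
    return likelihood * prior / evidence


# Numbers from the meningitis example (names are hypothetical).
p_s_given_m = 0.8   # P(s | m): stiff neck given meningitis
p_m = 0.0001        # P(m): prior probability of meningitis
p_s = 0.1           # P(s): prior probability of a stiff neck

p_m_given_s = bayes_posterior(p_s_given_m, p_m, p_s)
print(f"P(m|s) = {p_m_given_s:.4f}")  # prints "P(m|s) = 0.0008"
```

The causal quantity $P(s \mid m)$ is high, but the tiny prior $P(m)$ dominates, which is why the posterior stays small.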
Bayes' Rule and conditional independence

$$
\begin{aligned}
P(\mathit{Cavity} \mid \mathit{toothache} \land \mathit{catch})
&= \alpha\,P(\mathit{toothache} \land \mathit{catch} \mid \mathit{Cavity})\,P(\mathit{Cavity}) \\
&= \alpha\,P(\mathit{toothache} \mid \mathit{Cavity})\,P(\mathit{catch} \mid \mathit{Cavity})\,P(\mathit{Cavity})
\end{aligned}
$$

This is an example of a naive Bayes model:

$$
P(\mathit{Cause}, \mathit{Effect}_1, \ldots, \mathit{Effect}_n) = P(\mathit{Cause}) \prod_i P(\mathit{Effect}_i \mid \mathit{Cause})
$$

[Figure: network diagrams with nodes Toothache, Cavity, Catch and Cause, Effect1, ..., Effectn]

Total number of parameters is linear in $n$.

Wumpus World

[Figure: 4×4 Wumpus World grid with squares labelled 1,1 through 4,4; three squares marked OK and two marked B]

$P_{i,j}$ = true iff $[i, j]$ contains a pit

$B_{i,j}$ = true iff $[i, j]$ is breezy

Include only $B_{1,1}, B_{1,2}, B_{2,1}$ in the probability model.

Specifying the probability model

The full joint distribution is $P(P_{1,1}, \ldots, P_{4,4}, B_{1,1}, B_{1,2}, B_{2,1})$

Apply product rule: $P(B_{1,1}, B_{1,2}, B_{2,1} \mid P_{1,1}, \ldots, P_{4,4})\,P(P_{1,1}, \ldots, P_{4,4})$

(Do it this way to get $P(\mathit{Effect} \mid \mathit{Cause})$.)

First term: 1 if pits are adjacent to breezes, 0 otherwise

Second term: pits are placed randomly, probability 0.2 per square:

$$
P(P_{1,1}, \ldots, P_{4,4}) = \prod_{i,j = 1,1}^{4,4} P(P_{i,j}) = 0.2^{n} \times 0.8^{16-n}
$$

for $n$ pits.

Observations and query

We know the following facts:

$b = \lnot b_{1,1} \land b_{1,2} \land b_{2,1}$

$\mathit{known} = \lnot p_{1,1} \land \lnot p_{1,2} \land \lnot p_{2,1}$

Query is $P(P_{1,3} \mid \mathit{known}, b)$

Define $\mathit{Unknown}$ = the $P_{i,j}$s other than $P_{1,3}$ and $\mathit{Known}$

For inference by enumeration, we have

$$
P(P_{1,3} \mid \mathit{known}, b) = \alpha \sum_{\mathit{unknown}} P(P_{1,3}, \mathit{unknown}, \mathit{known}, b)
$$

Grows exponentially with number of squares!
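The enumeration query can be sketched directly in Python for the 4×4 grid. This is a brute-force illustration under the model stated above (pit prior 0.2 per square, breeze term equal to 1 only when each observed square is breezy exactly when a neighbouring square holds a pit). All function and constant names are assumptions introduced for the example.

```python
from itertools import product

SIZE = 4
PIT_PRIOR = 0.2
SQUARES = [(i, j) for i in range(1, SIZE + 1) for j in range(1, SIZE + 1)]
KNOWN_NO_PIT = {(1, 1), (1, 2), (2, 1)}                 # known
BREEZE = {(1, 1): False, (1, 2): True, (2, 1): True}    # b
QUERY = (1, 3)


def neighbours(square):
    """Squares horizontally or vertically adjacent to `square` inside the grid."""
    i, j = square
    candidates = [(i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)]
    return [(a, c) for a, c in candidates if 1 <= a <= SIZE and 1 <= c <= SIZE]


def breeze_likelihood(pits):
    """First term: 1 if the breeze observations match the pit layout, else 0."""
    for square, breezy in BREEZE.items():
        if any(n in pits for n in neighbours(square)) != breezy:
            return 0.0
    return 1.0


def pit_prior(pits):
    """Second term: 0.2^n * 0.8^(16 - n) for a layout with n pits."""
    n = len(pits)
    return PIT_PRIOR ** n * (1 - PIT_PRIOR) ** (len(SQUARES) - n)


def query_posterior():
    """Sum the joint over all assignments to the unknown squares, then normalise."""
    unknown = [s for s in SQUARES if s not in KNOWN_NO_PIT and s != QUERY]
    weights = {True: 0.0, False: 0.0}
    for query_value in (True, False):
        for values in product((True, False), repeat=len(unknown)):
            pits = {s for s, v in zip(unknown, values) if v}
            if query_value:
                pits.add(QUERY)
            weights[query_value] += breeze_likelihood(pits) * pit_prior(pits)
    total = weights[True] + weights[False]  # 1 / alpha
    return {value: w / total for value, w in weights.items()}


posterior = query_posterior()
print(posterior)
```

With 12 unknown squares the inner loop visits $2^{12}$ assignments per query value, which is why this approach does not scale to larger grids. Under these assumptions the posterior probability of a pit in [1,3] comes out at roughly 0.31.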