Lecture 7 Hadoop/Spark

What is Hadoop
• Hadoop is a software framework for distributed processing of large datasets across large clusters of computers
  • Large datasets → terabytes or petabytes of data
  • Large clusters → hundreds or thousands of nodes
• Hadoop is an open-source implementation of Google's MapReduce
• Hadoop is based on a simple programming model called MapReduce
• Hadoop is based on a simple data model: any data will fit

Design Principles of Hadoop
• Need to process big data
• Need to parallelize computation across thousands of nodes
• Commodity hardware
  • Large number of low-end, cheap machines working in parallel to solve a computing problem
• This is in contrast to parallel DBs
  • Small number of high-end, expensive machines

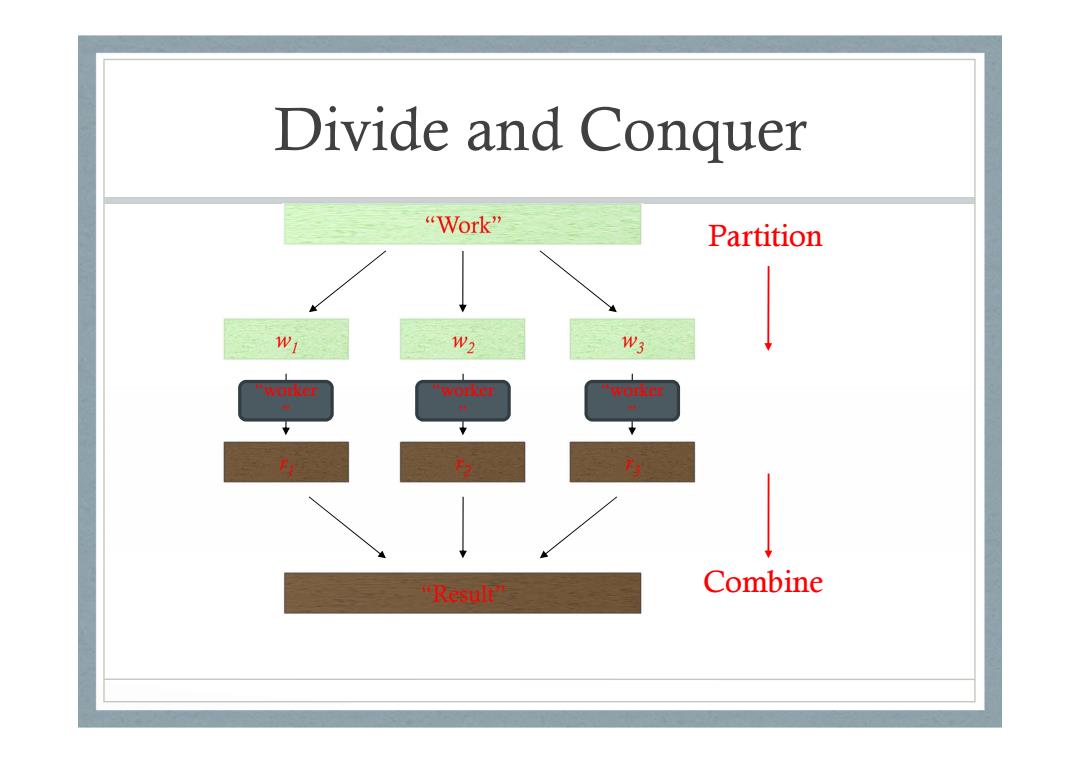

Divide and Conquer
[Figure: the "Work" is partitioned into units w1, w2, w3; each unit is handed to a "worker"; the workers' outputs are combined into the "Result".]
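
The partition / worker / combine pattern in the figure can be sketched in a few lines of Python. This is only an illustration on a single machine, not how Hadoop does it: the function names (`partition`, `worker`, `combine`), the choice of three workers, and the toy task (summing squares) are all assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(work, n_parts):
    """Split the work into n_parts contiguous chunks of near-equal size."""
    size, extra = divmod(len(work), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        # The first `extra` chunks get one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(work[start:end])
        start = end
    return chunks


def worker(chunk):
    """Process one chunk independently of the others (toy task: sum of squares)."""
    return sum(x * x for x in chunk)


def combine(partials):
    """Merge the partial results into the final result."""
    return sum(partials)


def divide_and_conquer(work, n_workers=3):
    chunks = partition(work, n_workers)
    # Each chunk is handed to its own worker; pool.map keeps the chunk order.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(worker, chunks))
    return combine(partials)


total = divide_and_conquer(list(range(10)))
print(total)
```

The workers never talk to each other, which is what makes this pattern easy to spread over many machines; the hard parts are everything around it, as the next slide lists.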

It's a bit more complex…
• Fundamental issues: scheduling, data distribution, synchronization, inter-process communication, robustness, fault tolerance, …
• Different programming models: message passing (processes P1 to P5 exchange messages) vs. shared memory (processes P1 to P5 access one common memory)
• Architectural issues: Flynn's taxonomy (SIMD, MIMD, etc.), network topology, bisection bandwidth, UMA vs. NUMA, cache coherence
• Different programming constructs: mutexes, condition variables, barriers, …; masters/slaves, producers/consumers, work queues, …
• Common problems: livelock, deadlock, data starvation, priority inversion, …; dining philosophers, sleeping barbers, cigarette smokers, …
• The reality: the programmer shoulders the burden of managing concurrency…

Source: Ricardo Guimarães Herrmann
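
To make the two programming models concrete, here is a small Python sketch using threads inside one process. It is an analogy only: real message passing usually happens between processes or machines, and the function names and sizes below are made up for the example. In the shared-memory version the programmer must remember the mutex; in the message-passing version the workers share nothing and send their results over a queue.

```python
import queue
import threading


def shared_memory_count(n_threads=4, increments=1000):
    """Shared memory: all threads update one counter, guarded by a mutex."""
    counter = 0
    lock = threading.Lock()

    def work():
        nonlocal counter
        for _ in range(increments):
            # Without the lock, the read-modify-write below could interleave
            # between threads and updates could be lost.
            with lock:
                counter += 1

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


def message_passing_sum(chunks):
    """Message passing: each worker sends its partial sum as a message."""
    results = queue.Queue()  # thread-safe channel between workers and collector

    def work(chunk):
        results.put(sum(chunk))

    threads = [threading.Thread(target=work, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Exactly one message per chunk was sent, so collect that many.
    return sum(results.get() for _ in chunks)


print(shared_memory_count())
print(message_passing_sum([[1, 2], [3, 4], [5]]))
```

Even in this toy, correctness depends on details the programmer has to get right by hand (taking the lock, joining the threads, counting the messages), which is the burden the slide refers to.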

Design Principles of Hadoop
• Automatic parallelization & distribution
  • Hidden from the end user
• Fault tolerance and automatic recovery
  • Nodes/tasks will fail and will recover automatically
• Clean and simple programming abstraction
  • Users only provide two functions: "map" and "reduce"
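
As an illustration of the "two functions" abstraction, the sketch below shows a word count written as a `map` function and a `reduce` function, plus a tiny in-memory driver that stands in for the framework. The driver (`run_mapreduce`) is a hypothetical single-machine stand-in written for this example; it is not Hadoop's API, and a real cluster would run the same two functions in parallel with fault tolerance.

```python
from collections import defaultdict


def map_fn(_key, line):
    """User code: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def reduce_fn(word, counts):
    """User code: sum all the counts emitted for one word."""
    yield word, sum(counts)


def run_mapreduce(records, map_fn, reduce_fn):
    """Toy stand-in for the framework: map, group by key, then reduce."""
    groups = defaultdict(list)
    # Map phase: apply map_fn to every (key, value) input record.
    for key, value in records:
        for out_key, out_value in map_fn(key, value):
            # Grouping step: collect all values that share a key.
            groups[out_key].append(out_value)
    # Reduce phase: one reduce_fn call per distinct key, in sorted key order.
    output = []
    for out_key in sorted(groups):
        output.extend(reduce_fn(out_key, groups[out_key]))
    return output


lines = [(0, "the quick brown fox"), (1, "the lazy dog"), (2, "the fox")]
counts = dict(run_mapreduce(lines, map_fn, reduce_fn))
print(counts)
```

Everything outside `map_fn` and `reduce_fn` is the framework's job, which is why parallelization, distribution, and recovery can stay hidden from the end user.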

Distributed File System
• Don't move data to workers… move workers to the data!
  • Store data on the local disks of nodes in the cluster
  • Start up the workers on the node that holds the data locally
• Why?
  • Not enough RAM to hold all the data in memory
  • Disk access is slow, but disk throughput is reasonable
• A distributed file system is the answer
  • GFS (Google File System)
  • HDFS for Hadoop (= GFS clone)
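
The idea of moving workers to the data can be sketched as a simple greedy assignment: for each data block, prefer a node that already stores a copy of it and still has a free task slot, and fall back to a remote node only when no such node exists. This is a simplified illustration, not Hadoop's actual scheduler; the function `assign_tasks`, the block and node names, and the slot counts are all hypothetical.

```python
def assign_tasks(block_locations, free_slots):
    """Greedily assign one task per block, preferring nodes that hold the block.

    block_locations: dict mapping block id -> list of nodes storing that block
    free_slots:      dict mapping node -> number of free task slots
    Returns a dict mapping block id -> (chosen node, True if the data is local).
    """
    slots = dict(free_slots)  # work on a copy so the caller's dict is untouched
    assignment = {}
    for block, holders in block_locations.items():
        local = [n for n in holders if slots.get(n, 0) > 0]
        if local:
            # Data-local: the worker starts where the block already lives.
            node, is_local = max(local, key=lambda n: slots[n]), True
        else:
            # No holder has capacity: the block must be read over the network.
            candidates = [n for n, s in slots.items() if s > 0]
            if not candidates:
                raise RuntimeError("no free task slots left in the cluster")
            node, is_local = max(candidates, key=lambda n: slots[n]), False
        slots[node] -= 1
        assignment[block] = (node, is_local)
    return assignment


blocks = {"b1": ["n1", "n2"], "b2": ["n1"], "b3": ["n3"]}
free = {"n1": 2, "n2": 1, "n3": 0, "n4": 1}
plan = assign_tasks(blocks, free)
print(plan)
```

In this example `b1` and `b2` run on `n1`, where their data sits, while `b3` has to run remotely because its only holder, `n3`, has no free slot.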

Hadoop: How it Works

Hadoop
[Figure: the Hadoop project stack. Components shown: Core, Avro, HDFS, MapReduce, HBase, ZooKeeper, Pig, Hive, Chukwa.]
Hadoop's stated mission (Doug Cutting interview): commoditize infrastructure for web-scale, data-intensive applications