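University of Electronic Science and Technology of China (UESTC), graduate featured course "Machine Learning"
Lecture 16: Generative Adversarial Networks (GAN)
Jiasheng Hao (郝家胜), Ph.D., Associate Professor
Email: hao@uestc.edu.cn
School of Automation Engineering, University of Electronic Science and Technology of China, Chengdu 611731
First draft Aug. 2015; fourth draft Apr. 2021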

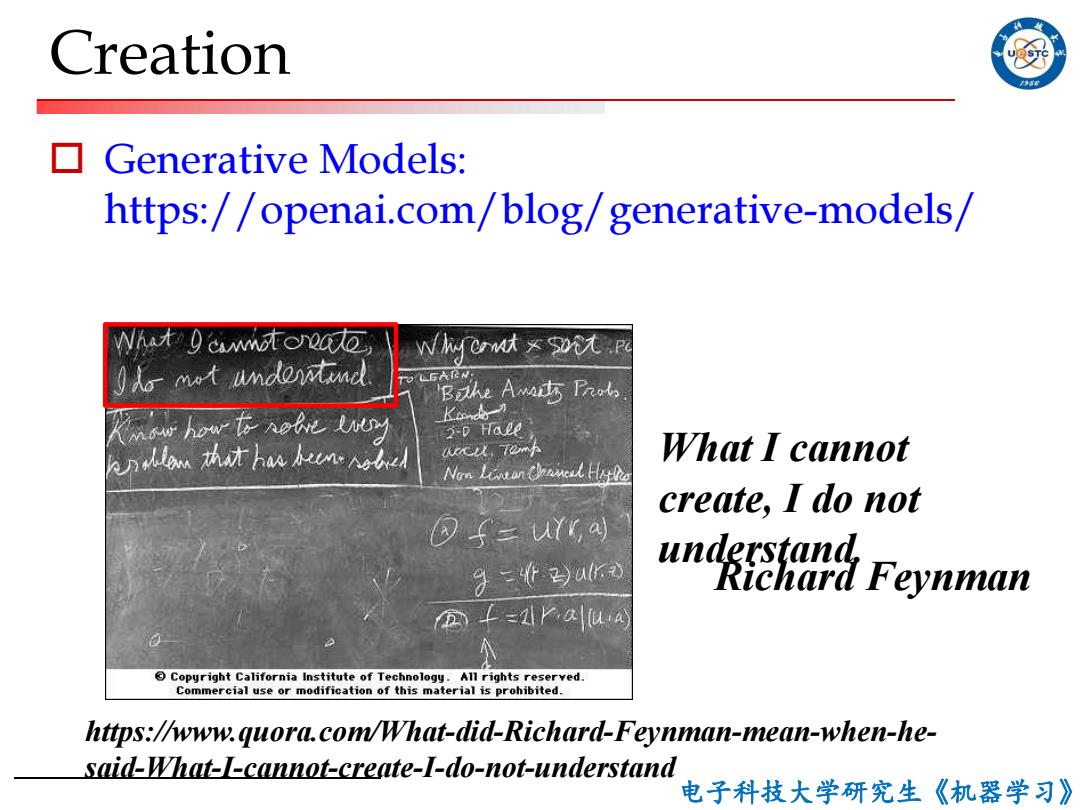

Creation

- Generative Models: https://openai.com/blog/generative-models/
- "What I cannot create, I do not understand." Richard Feynman
- https://www.quora.com/What-did-Richard-Feynman-mean-when-he-said-What-I-cannot-create-I-do-not-understand

Copyright California Institute of Technology. All rights reserved. Commercial use or modification of this material is prohibited.

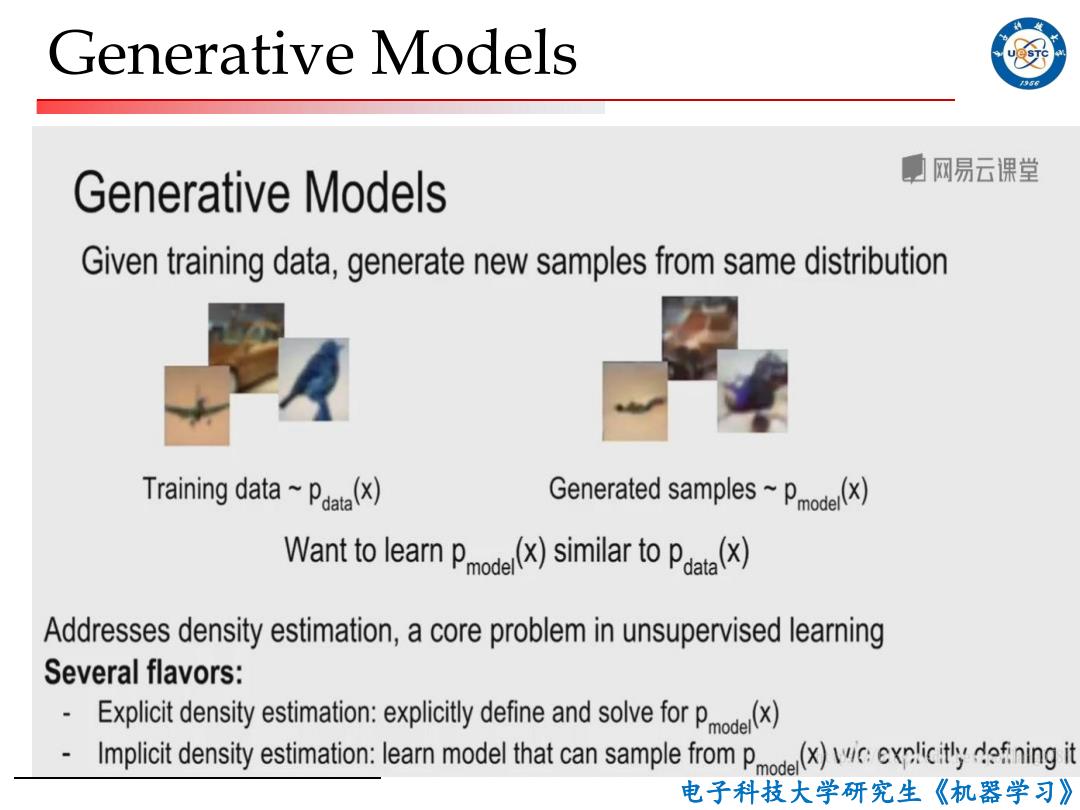

Generative Models

Given training data, generate new samples from the same distribution.

- Training data ~ $p_{\text{data}}(x)$
- Generated samples ~ $p_{\text{model}}(x)$
- Want to learn $p_{\text{model}}(x)$ similar to $p_{\text{data}}(x)$

Addresses density estimation, a core problem in unsupervised learning.

Several flavors:

- Explicit density estimation: explicitly define and solve for $p_{\text{model}}(x)$
- Implicit density estimation: learn a model that can sample from $p_{\text{model}}(x)$ without explicitly defining it
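As a rough illustration of the explicit flavor, the sketch below fits a 1-D Gaussian to toy data by maximum likelihood, then both evaluates the density and draws new samples. The Gaussian model, the toy data, and all function names are assumptions made for this example and are not from the lecture. An implicit model would offer only the sampling step, with no `log_density` to call.

```python
import math
import random


def fit_gaussian(data):
    """Maximum-likelihood mean and standard deviation of a 1-D Gaussian."""
    n = len(data)
    mu = sum(data) / n
    var = sum((x - mu) ** 2 for x in data) / n
    return mu, math.sqrt(var)


def log_density(x, mu, sigma):
    """Explicit density: log p_model(x) can be evaluated at any point x."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def sample(mu, sigma, rng):
    """Draw one new sample from the fitted p_model."""
    return rng.gauss(mu, sigma)


rng = random.Random(0)
# Toy "training data" drawn from an assumed p_data = N(2.0, 0.5^2).
train = [rng.gauss(2.0, 0.5) for _ in range(5000)]

mu, sigma = fit_gaussian(train)
new_samples = [sample(mu, sigma, rng) for _ in range(5)]
print(f"mu={mu:.3f} sigma={sigma:.3f} log p(2.0)={log_density(2.0, mu, sigma):.3f}")
```

The fitted parameters should land close to the values used to generate the toy data, which is the "want $p_{\text{model}}$ similar to $p_{\text{data}}$" goal in miniature.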

Why Generative Models?

- Realistic samples for artwork, super-resolution, colorization, etc.
- Generative models of time-series data can be used for simulation and planning (reinforcement learning applications!)
- Training generative models can also enable inference of latent representations that can be useful as general features

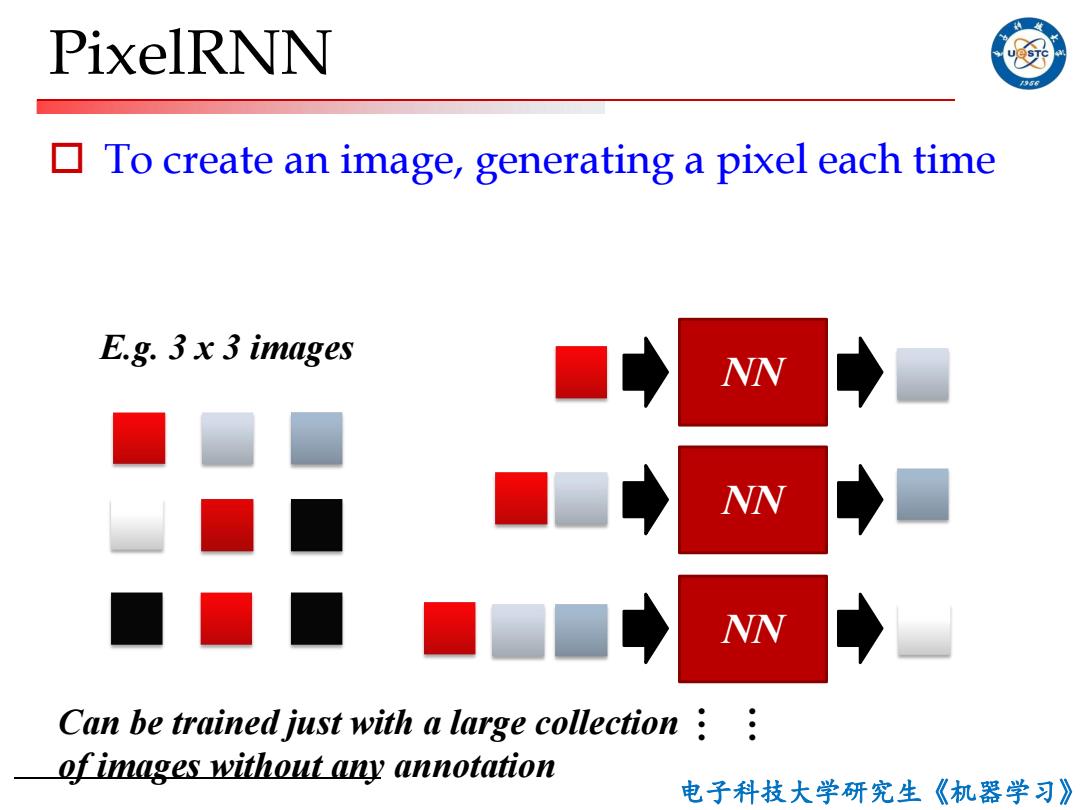

PixelRNN

- To create an image, generate one pixel at a time. E.g. for 3 x 3 images, a neural network (NN) predicts each next pixel from the pixels generated so far.
- Can be trained with just a large collection of images, without any annotation.
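The pixel-at-a-time loop can be sketched as follows for a 3 x 3 binary image. The function `next_pixel_prob` is a hypothetical hand-written stand-in for the trained NN, chosen only so that the example runs. A real PixelRNN would replace it with a recurrent network and would typically predict a distribution over 256 intensity values per channel.

```python
import random


def next_pixel_prob(previous):
    """Hypothetical stand-in for the NN: P(next pixel = 1 | previous pixels)."""
    if not previous:
        return 0.5
    # Toy rule (an assumption): favour repeating the most recent pixel.
    return 0.8 if previous[-1] == 1 else 0.2


def sample_image(height, width, rng):
    """Generate pixels one by one in raster order, each conditioned on the past."""
    pixels = []
    for _ in range(height * width):
        p_one = next_pixel_prob(pixels)
        pixels.append(1 if rng.random() < p_one else 0)
    # Reshape the flat pixel sequence into rows.
    return [pixels[r * width:(r + 1) * width] for r in range(height)]


image = sample_image(3, 3, random.Random(0))
for row in image:
    print(row)
```

Every pixel is drawn only after all earlier pixels are fixed, so the nine sampling steps cannot run in parallel.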

PixelRNN: Real World

ICML 2016 best paper, from DeepMind.

WaveNet: beyond Image

[Figure: layer stack with three hidden layers and an output layer]

- Audio: Aaron van den Oord, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, Koray Kavukcuoglu, "WaveNet: A Generative Model for Raw Audio," arXiv preprint, 2016
- Video: Nal Kalchbrenner, Aaron van den Oord, Karen Simonyan, Ivo Danihelka, Oriol Vinyals, Alex Graves, Koray Kavukcuoglu, "Video Pixel Networks," arXiv preprint, 2016

Any other ideas?

PixelCNN

- Still generate image pixels starting from the corner
- Dependency on previous pixels is now modeled using a CNN over a context region
- Training: maximize the likelihood of training images

$$p(x) = \prod_{i=1}^{n} p(x_i \mid x_1, \ldots, x_{i-1})$$

- Softmax loss at each pixel
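The chain-rule factorization above can be checked numerically on a tiny case. In the sketch below, `cond_prob_one` is a hypothetical stand-in for the CNN's per-pixel output on binary pixels (an assumption for illustration, not the lecture's model). The log-likelihood is the sum of per-pixel log conditionals, and its negative is the sum of the per-pixel classification losses that training would minimize.

```python
import itertools
import math


def cond_prob_one(previous):
    """Hypothetical stand-in for the CNN: P(x_i = 1 | x_1, ..., x_{i-1})."""
    if not previous:
        return 0.5
    return 0.8 if previous[-1] == 1 else 0.2


def log_likelihood(pixels):
    """log p(x) = sum_i log p(x_i | x_1, ..., x_{i-1})."""
    total = 0.0
    for i, value in enumerate(pixels):
        p_one = cond_prob_one(pixels[:i])
        total += math.log(p_one if value == 1 else 1.0 - p_one)
    return total


# Negative log-likelihood of one 4-pixel "image": the per-pixel losses summed.
x = (1, 1, 0, 0)
nll = -log_likelihood(x)

# Sanity check: an explicit density must sum to 1 over all 2^4 possible images.
total_prob = sum(
    math.exp(log_likelihood(img)) for img in itertools.product((0, 1), repeat=4)
)
print(f"nll={nll:.4f} total_prob={total_prob:.6f}")
```

Because every conditional is a proper distribution, the product is automatically normalized, so $p(x)$ can be computed exactly for any image.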

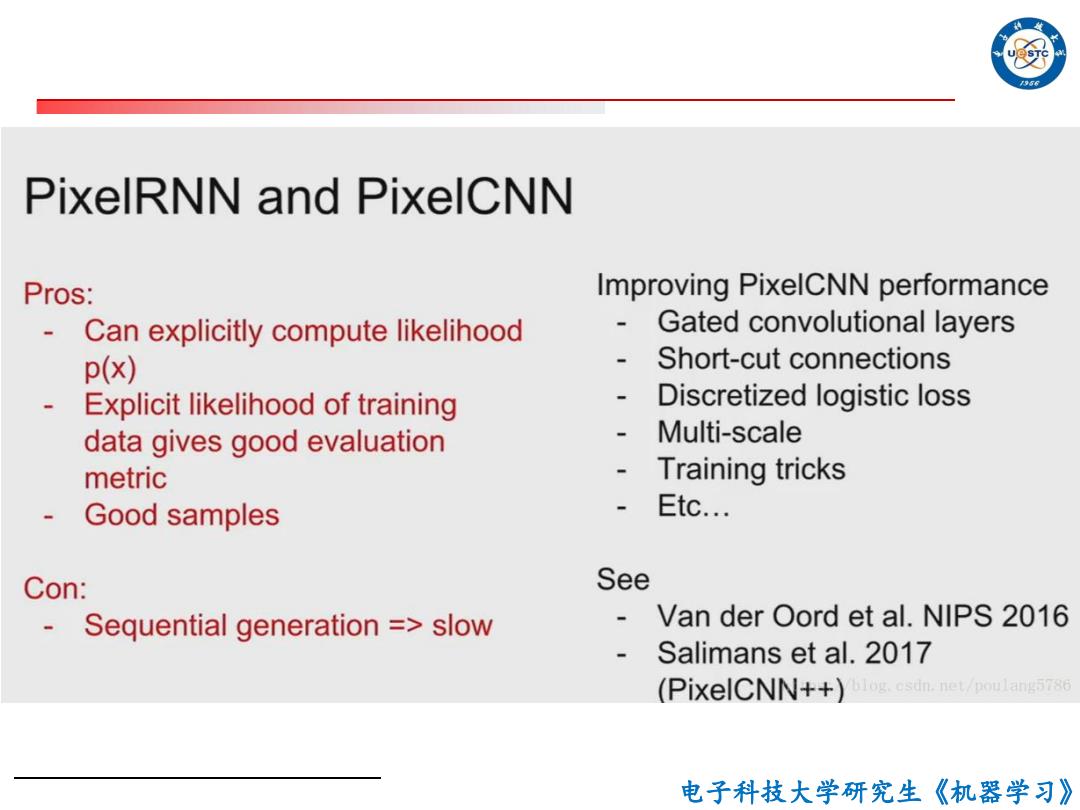

PixelRNN and PixelCNN

Pros:

- Can explicitly compute likelihood p(x)
- Explicit likelihood of training data gives a good evaluation metric
- Good samples

Con:

- Sequential generation = slow

Improving PixelCNN performance:

- Gated convolutional layers
- Short-cut connections
- Discretized logistic loss
- Multi-scale
- Training tricks
- Etc.

See:

- Van der Oord et al., NIPS 2016
- Salimans et al., 2017 (PixelCNN++)
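To give a feel for the "discretized logistic loss" item, the sketch below assigns each integer intensity 0..255 the logistic probability mass of the bin around it, with the two edge bins absorbing the tails. This is a simplified single-component version written for illustration. PixelCNN++ uses a mixture of such components, and the parameter values `mu` and `scale` here are arbitrary assumptions.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def discretized_logistic_prob(x, mu, scale):
    """P(pixel = x) for integer x in 0..255: logistic CDF mass of [x-0.5, x+0.5]."""
    upper = 1.0 if x == 255 else sigmoid((x + 0.5 - mu) / scale)
    lower = 0.0 if x == 0 else sigmoid((x - 0.5 - mu) / scale)
    return upper - lower


# Distribution over all 256 intensities for one pixel (assumed mu and scale).
probs = [discretized_logistic_prob(v, mu=100.0, scale=5.0) for v in range(256)]

# Loss for an observed pixel value of 100: its negative log-probability.
loss = -math.log(discretized_logistic_prob(100, mu=100.0, scale=5.0))
print(f"sum={sum(probs):.6f} loss={loss:.4f}")
```

Compared with a 256-way softmax, this parameterization needs only a location and a scale per component, and nearby intensities automatically receive similar probabilities.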
