第5卷第1期 智能系统学报 Vol.5 No.1 2010年2月 CAAI Transactions on Intelligent Systems Feh.2010 doi:10.3969/j.issn.16734785.2010.01.015 Classification and recognition of image/non-image data based on multinomial logistic regression with nonlinear dimensionality reduction Mudasser NASEER,QIN Shi-yin (School of Automation Science and Electrical Engineering,Beihang University,Beijing 100037,China) Abstract:In pattem classification and recognition oriented to massively complex data most classifiers suffer from the curse of dimensionality.Manifold leaming based nonlinear dimensionality reduction (NLDR)methods provide a good preprocessing to reduce dimensionality before applying any classification method on high dimensional data.Multinomial logistic regression (MLR)can be used to predict the class membership of feature data.In this study several unsupervised NLDR methods are employed to reduce dimensions of the data and the MLR is used for class prediction of image/non-image data so that a new method of classification and recognition oriented to massively complex image/non-image data is proposed based on multinomi- al Logistic regression with nonlinear dimensionality reduction.Through a series of experiments and comparative analysis with supervised NLDR methods for a lot of typical test data the new proposed method is validated to outperform other supervised NLDR ones. Keywords:nonlinear dimensionality reduction;data classification;multinomial logistic regression;image/non-image data CLC Number:TP391 Document code:A Article ID:1673-4785(2010)01-0085-09 Many decision-making problems can be catego-SA)7.These methods fall in the category of unsuper- rized as classification problems.In a number of vised learning and could not be directly used for the practical applications,digital images have been used to purpose of supervised learning of classification.For classifing objects;most examples have dealt with the classification,supervised versions of most of the algo- classification of handwritten numbers,motor vehicle li-rithms such as PCA-LLE and modified supervised LLE cence plates,images of human faces and so on.These (MSLLE)(,supervised LLE(SLLE),Weighte- images contain high dimensional data and require di- dso,supervised Isomap and supervised LT- mensionality reduction before they can be used for clas- SA[3]were introduced from time to time. sification.Recently,a class of nonlinear dimensionali- For this paper,the idea of using unsupervised ty reduction methods,based on the concept of manifold NLDR methods with MLR (U-NLDR MLR)for im- learning,attracted researchers studying dimensionality age data classification was introduced and correspond- reduction for nonlinear images and non-image data. ing comparative analysis of their performance with some Some conventional manifold based learning methods well known supervised versions of NLDR algorithms may be listed as locally linear embedding (LLE)121, was carried out.For experimental and test purposes, Isomap3,Laplacian eigenmaps (LE)4,diffusion some well known grey scale images of handwritten dig- maps(s1,Hessian locally linear embedding its and human faces were used.The performance of va- (HLLE),and local tangent space alignment (LT- rious algorithms was evaluated by classification error rate (ER)for out-of-sample data points. Received Data:2009-08-15. Foundation Item:This work is supported by The Major Program of Hi-tech- 1 Classification nology Research and Development (863)of China. (2008AA12A200 and Programs of National Natural Science Foundation of China (60875072 ) One problem in classification or supervised learn- Corresponding Author:QIN Shi-yin.E-mail:qsy@buaa.edu.cn. ing involves guessing or predicting unknown classes

·86 智能系统学报 第5卷 based on observation.Typically speaking,a set of pat- D =D amax(D)A. terns of features along with a correct classification, Where D is the pairwise distance matrix for combine known as training data,is available.Aftter training, data set,and A equals to 1 if data points are from dif- the task is to classify a new set of patterns,known as ferent classes,and 0 otherwise.Here a (0,1)con- test data.The training data set is assumed to be a ran- trols the amount to which class information should be dom sample from some large population. incorporated.This supervised version of LLE behaves When the dimensionality of input data is relatively as a nonlinear Fisher mapping which controls nonlin- high,most classification methods,such as K-nearest earity. neighbor (K-NN)classifier4),will suffer from the Supervised LTSA also used the idea of artificially curse of dimensionality and produce highly biased esti- increased shift distances between points belonging to mates.Most of the high dimensional data is intrinsical- different classes,but left them unchanged if samples ly low dimensional.Thus,the problem of classification were from the same class.The new pairwise distance can be solved by firstly mapping the high dimensional matrix was given as D'=D+pA,where the shift dis- data into low dimensional subspace by using a suitable tance p is assigned a relatively large value in compari- NLDR method and then applying some classification son with the distance between any pairs of points.A:e- method 9).The above mentioned U-NLDR methods are quals to 1 if data points are from different classes,and not suitable for classification purpose.Some supervised 0 otherwise. versions of these algorithms were developed.In our We chose the above mentioned S-NLDR methods study,we use four supervised NLDR methods-due to their similar approachs of using class informa- WeightedIso,supervised Isomap (S-Isomap),SLLE tion;they increased the distance between data points of and SLTSA. different classes. WeightedIso changed the first step of Isomap.It The S-NLDR methods do not explicitly provide proceeded by first computing the K nearest neighbors of any mapping function for out-of-sample data points, each data point x and denoted Km as the set of nearest and it can be learnt by the estimation methodtis]or by neighbors having the same class label as x.Then it some nonlinear interpolation techniques,such as gen- "moved"each nearest neighbor in Kcloser to by eralized regression networks(GRN).To summa- rescaling their Euclidean distance by a constant factor rize,the general classification procedure has three 1/(>1).Remaining steps of the algorithm re- steps,as follows: main the same as of the unsupervised Isomap. i.Map high dimensional data into a lower dimen- In S-Isomap Euclidean distance d(x;,)is re- sional space using an S-NLDR method. placed by D(,),where ii.Find mapping function for out-of-sample data d( points using an estimation method or GRN. N1-e a D(x,) y:=y分; iii.Map the given query to low dimensional space WeB-a,y:≠y分 using the mapping function and then predict its class The parameter B is used to prevent D(x,)increasing label using K-NN. too fast when d(is relatively large.Usually,t is 2 Multinomial logistic regression set to be the average Euclidean distance between all pairs of data points.The parameter a gives some oppor- Multinomial logistic regression (MLR)is used to tunity to points in different classes to be "more simi- model the relationship between a multiple response var- lar”. iable and one or more predictor variables,which may Among different versions of SLLE,we choose one be either discrete or continuous.Let Ybe a poly- given in the reference,which used the idea of chotomous random variable denoting the outcome of adding distance between samples in different classes as some experiment,and let =(1,2,,)be a

第1期 Classification and recognition of image/non-image data based on multinomial logistic regression with nonlinear dimensionality reduction87 collection of predictor variables.Then the conditional maximum probability. probability of outcome P(Y =1/X)is presented by The flowchart is shown as Fig.1. ()where Input high dimensional imensional π(x)= training data test data exp(B+B1+B2x2+…+B2-l2-1) 1+exp(B+B1出+B,2+…+B-1x-1) Find low dimensional Find low dimensional mapping of test data using If the x are varied and the n values Y,Y2,,Y of Y estimation method are observed,we write Find classification T:= model using MLR exp(B0+B11+B2x2+·+Bp-1p-1) 1+exp(B0+B1xH+B2x2+…+B2-1xp-1) Find classification The problem is now to obtain an estimate of the probability for low dimensional test data vector B=(Bo,B,B1).As the number of signifi- cant predictor variables increases the predicted class Assign low dimensional test data probability becomes more accurate.MLR is a modal to appropriate class based approach and provides classification probability for individual objects as compared to the KNN method, Output classification which gives classification membership.We use U-NLDR error rate methods to map the high dimensional image data into Fig.1 Flowchart of U-NLDR MLR algorithm low dimensional subspace and then use an MLR model to estimate the classification probability for out-of-sam- 3 Performance evaluation ple data points.The low dimensional embedded values are taken as an independent predictor variable.In con- The most commonly used performance evaluation criteria for classification is the ER.If unlimited cases trast,class membership values are taken as values of the dependent variable Y.Since all the NLDR methods for training and testing are available,the error rate is use eigen decomposition,the independence of predictor simply the error rate on the test cases.The simplest variables is obvious.We increase the number of predic- technique for estimating error rates,the holdout meth- tor variables by increasing the dimensions of the embed- ods,is a single training and test experiment.The ded subspace to obtain more accurate estimates of B. sample cases are broken into two groups of cases:a An increase of predictor variables also give rise to the training group and a test group.The classifier is inde- problem of including irrelevant or less important varia- pendently derived from the training cases,and the er- bles in the model.This problem is handled by checking ror estimate is the performance of the classifier on the test cases.A single random partition of training and the significance of the selected model and including the most important or significant predictor variables in the test cases can be somewhat misleading.Random resa- final model. mpling can produce better estimates than a single train and test partition. The procedure is summarized as follows: i.Map high dimensional data into lower dimen- In this study,we used a 10-fold cross validation sional subspace using the U-NLDR method. resampling method to find the error rate.That is,the ii.Apply MLR to find a classification model. original data set was randomly divided into ten equal- iii.Find low dimensional mapping for out-of-sam- sized subsets.Then in each iteration,one subset was ple data points using an estimation method or GRN. used as testing set and the union of the remaining ones was used as the training set.After ten iterations,the iv.Find classification probability for out-of-sample data points by applying the MLR model obtained in average result was taken as the final ER.For Olivitief- (ii),and assign new data points to the class having aces and the Lubeck University face images database

·88 智能系统学报 第5卷 we used the leave-one-out resampling method due to ent persons.Image dimensions are 64 x 64.Fig.5 the small number of images available for each person. shows some sample images. 4 Test data For the purpose of experimentation,we used sev- en different image and non-image datasets in this stud- y.A brief description is given below: Fig.5 Sample images from Olivettiefaces i.The Yale face database-B:This dataset consists v.Lubeck University face images:This database of images of 10 subjects,each seen under 576 viewing consists of face images of 31 persons,13 images each, conditions(9 poses x64 illumination conditions).For with different expression and light conditions.We use this study,we use 567 images of three persons (9 po- images of 5 persons.Images dimensions are 36 x 48. ses x 21 illumination conditions).The images are Fig.6 shows some sample images. cropped and dimension reduced to 36 x 32.Fig.2 shows some sample images Fig.6 Sample images from Lubeck Uni.database vi.UCI Isolet data set:This data set consists of 617 different features of spoken alphabets by 150 ob- Fig.2 Sample images from Yale-B database jects.Every subject speaks each alphabet twice.We ii.UMIST face images:This database consists of use all instances of letters G,H,I,J,K and L. 564 images of 20 people.Each covers a range of poses vii.UCI optical digits:This data set consists of from profile to frontal views.Again we use images of 3 normalized bitmaps of handwritten digits(0~9)from persons.Dimension reduced to 45 x37.Fig.3 shows a preprinted form.Total number of attributes are 64, sample images of first subject. whereas the total number of instances are 5 620. 5 Methodology For a comparison between supervised versions of NLDR and U-NLDR MLR,we ran six U-NLDR Fig.3 Sample images from UMIST database methods,namely Isomap,LLE,HLLE (results are not iii.MNIST handwritten digits:MNIST handwritten presented here due to very poor performance for all the data sets),LE,Diffusion Maps and LTSA.To find digits consists of grayscale images of“0”through“9”. The images are digitized as 28 x28.We use 500 images low dimensional embedding for out-of-sample test data, both estimation and GRN methods were used.In order of digits 1,3 and 4.Fig.4 shows sample images. to select significant predictor variables in the MLR /11)33 model,we used the Wald test [is] 33444 Under supervised NLDR we use four methods, namely WeightedIso,S-Isomap,SLLE and SLTSA.In the following experiments,the number of neighborhood Fig.4 Sample images from MNIST database points K was taken as 8 and 10,for both supervised iv.Olivettiefaces database:The Olivettiefaces da- and unsupervised methods.These values of K are most tabase consists of 400 images of 40 persons with differ- commonly used by different researchers.In our experi- ent poses and expressions.We use images of 6 differ- ments these values were optimal ones for given data

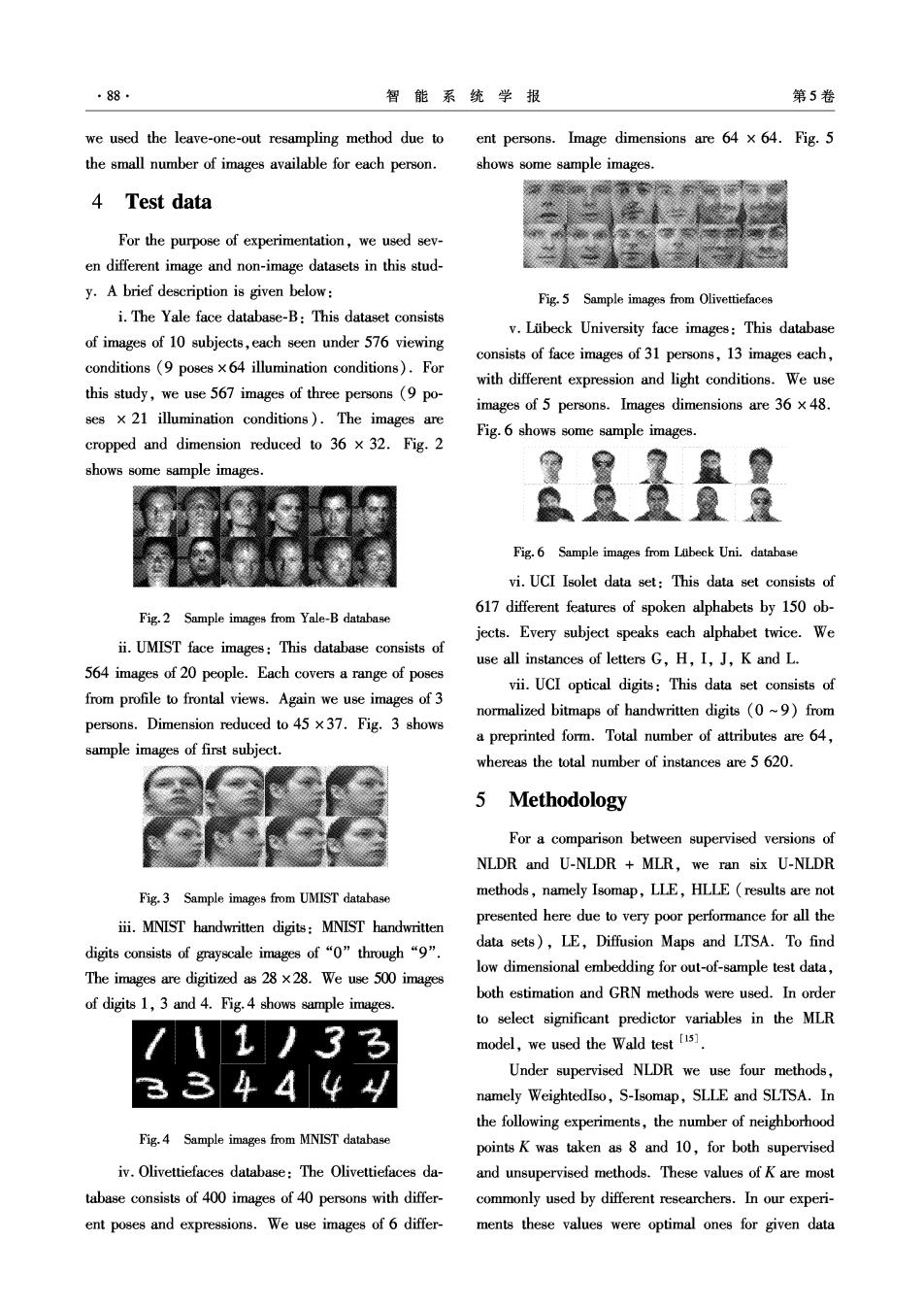

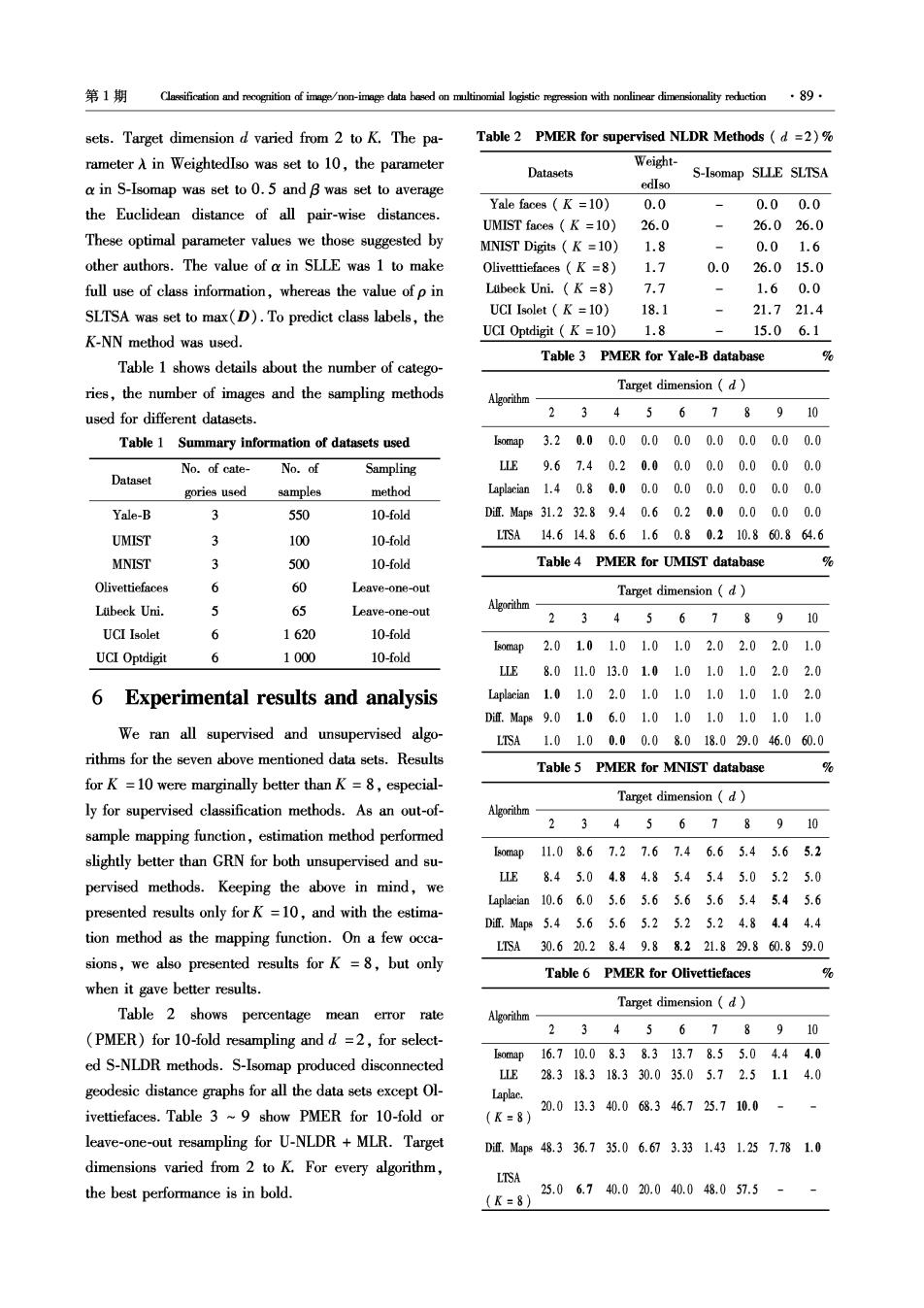

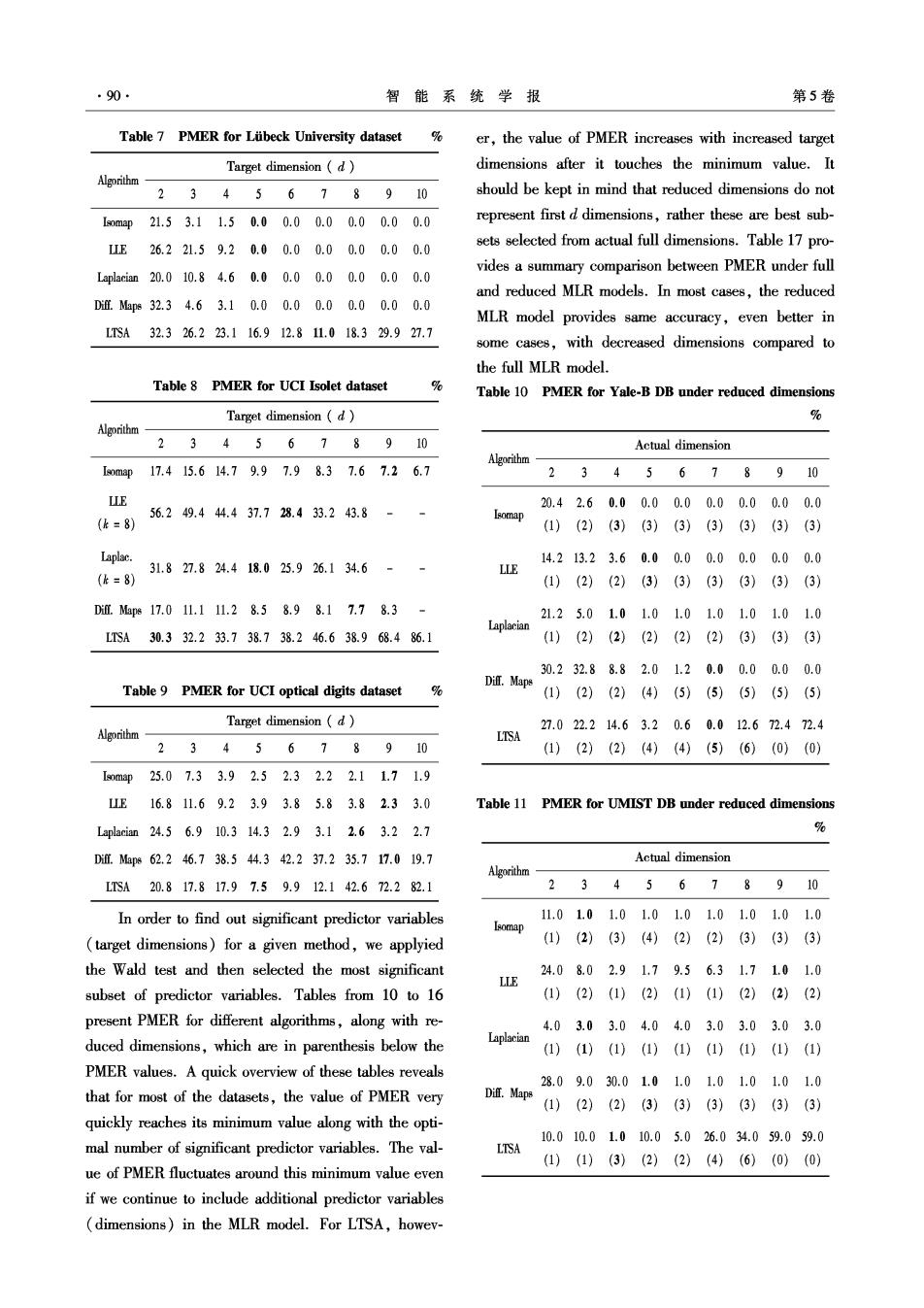

第1期 Classification and recognition of image/non-image data based on multinomial logistic regression with nonlinear dimensionality reduction89 sets.Target dimension d varied from 2 to K.The pa- Table 2 PMER for supervised NLDR Methods (d =2)% rameter A in Weightedlso was set to 10,the parameter Weight- Datasets S-Isomap SLLE SLTSA a in S-Isomap was set to 0.5 and B was set to average edIso Yale faces K =10) 0.0 0.00.0 the Euclidean distance of all pair-wise distances. UMIST faces (K =10) 26.0 26.026.0 These optimal parameter values we those suggested by MNIST Digits K =10) 1.8 0.01.6 other authors.The value of a in SLLE was 1 to make Olivettiefaces (K =8) 1.7 0.026.015.0 full use of class information,whereas the value of p in Ltibeck Uni.(K =8) 7.7 1.60.0 SLTSA was set to max(D).To predict class labels,the UCI Isolet (K =10) 18.1 21.721.4 UCI Optdigit (K =10) 1.8 15.06.1 K-NN method was used. Table 3 PMER for Yale-B database % Table 1 shows details about the number of catego- ries,the number of images and the sampling methods Target dimension (d) Algorithm used for different datasets. 23456789 10 Table 1 Summary information of datasets used map3.20.00.00.00.00.00.00.00.0 No.of cate- No.of Sampling LLE9.67.40.20.00.00.00.00.00.0 Dataset gories used samples method Laplacian1.40.80.00.00.00.00.00.00.0 Yale-B 3 550 10-fold Df.Map831.232.89.40.60.20.00.00.00.0 UMIST 3 100 10-fold LTSA14.614.86.61.60.80.210.860.864.6 MNIST 3 500 10-fold Table 4 PMER for UMIST database % Olivettiefaces 6 60 Leave-one-out Target dimension (d) Lubeck Uni. J 65 Leave-one-out Algorithm 2345678910 UCI Isolet 6 1620 10-fold map2.01.01.01.01.02.02.02.01.0 UCI Optdigit 6 1000 10-fold LLE8.011.013.01.01.01.01.02.02.0 6 Experimental results and analysis Laplacian1.01.02.01.01.01.0.1.01.02.0 Dit.Map89.01.06.01.01.01.01.01.01.0 We ran all supervised and unsupervised algo- LSA1.01.00.00.08.018.029.046.060.0 rithms for the seven above mentioned data sets.Results Table 5 PMER for MNIST database % for K =10 were marginally better than K =8,especial- Target dimension (d) ly for supervised classification methods.As an out-of- 2345678910 sample mapping function,estimation method performed slightly better than GRN for both unsupervised and su- 0map11.08.67.27.67.46.65.45.65.2 LLE 8.45.04.84.85.45.45.05.25.0 pervised methods.Keeping the above in mind,we Laplacian10.66.05.65.65.65.65.45.45.6 presented results only forK=10,and with the estima- Dif.Map85.45.65.65.25.25.24.84.44.4 tion method as the mapping function.On a few occa- LTSA30.620.28.49.88.221.829.860.859.0 sions,we also presented results for K=8,but only Table 6 PMER for Olivettiefaces % when it gave better results. Target dimension (d) Table 2 shows percentage mean error rate Algorithm (PMER)for 10-fold resampling and d =2,for select- 2345678910 ed S-NLDR methods.S-Isomap produced disconnected omp16.710.08.38.313.78.55.04.44.0 L1E28.318.318.330.035.05.72.51.14.0 geodesic distance graphs for all the data sets except Ol- Laplac. 20.013.340.068.346.725.710.0- ivettiefaces.Table 3 ~9 show PMER for 10-fold or (K=8) leave-one-out resampling for U-NLDR MLR.Target Dif.Map848.336.735.06.673.331.431.257.781.0 dimensions varied from 2 to K.For every algorithm, LTSA the best performance is in bold. 25.06.740.020.040.048.057.5 (K=8)

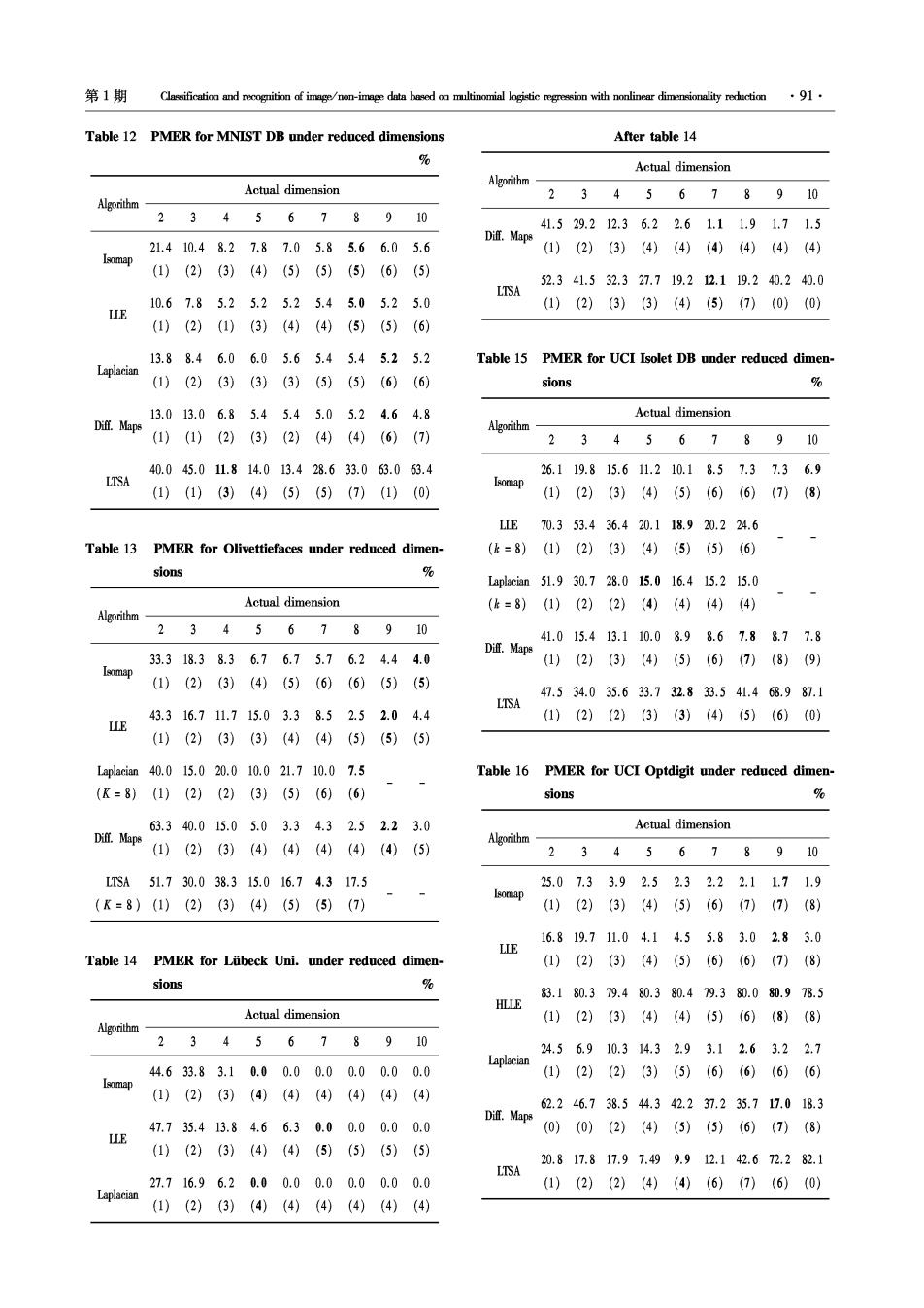

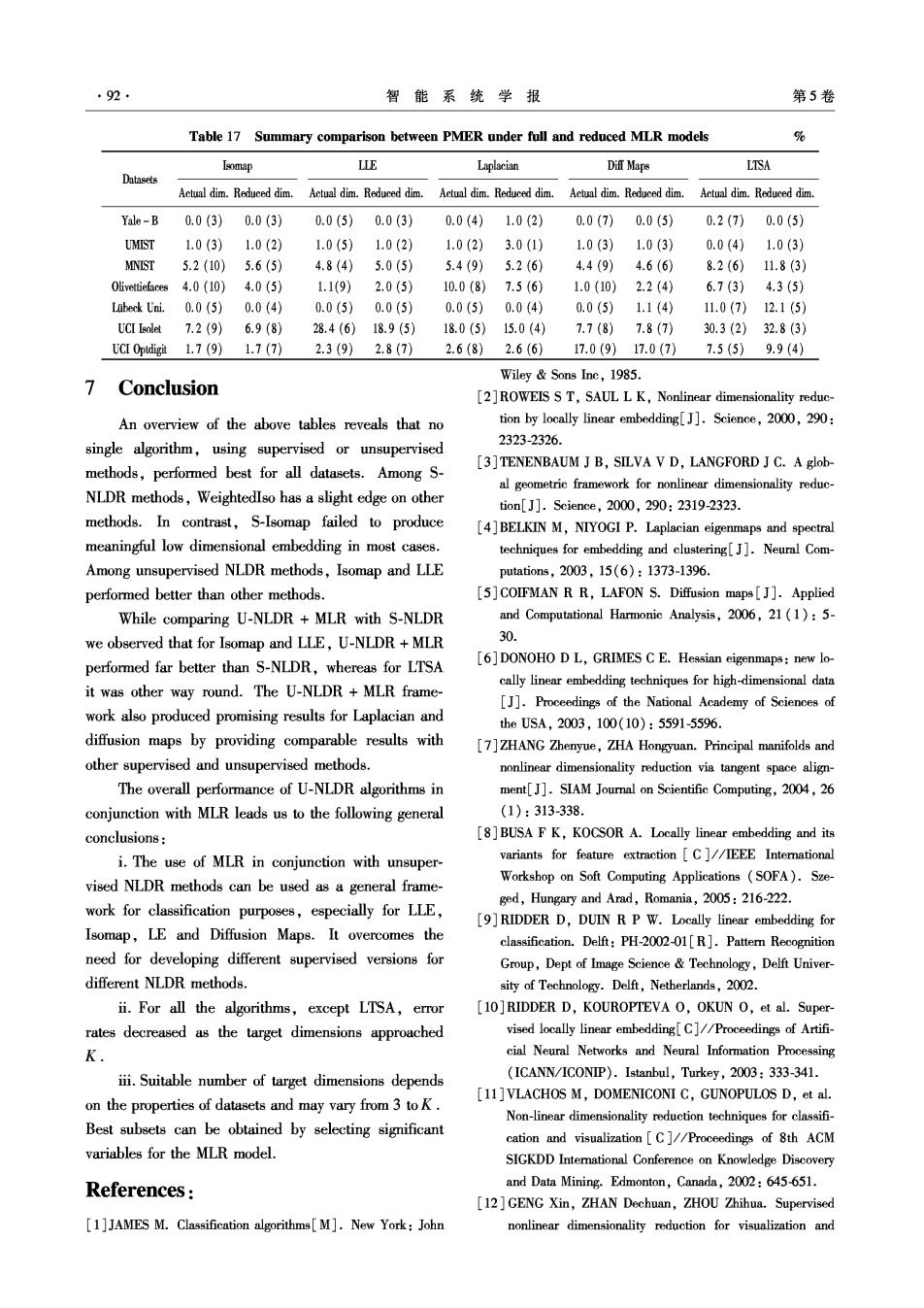

90· 智能系统学报 第5卷 Table 7 PMER for Luibeck University dataset% er,the value of PMER increases with increased target Target dimension (d dimensions after it touches the minimum value.It Algorithm- 2345678910 should be kept in mind that reduced dimensions do not smmp21.53.11.50.00.00.00.00.00.0 represent first d dimensions,rather these are best sub- [LE26.221.59.20.00.00.00.00.00.0 sets selected from actual full dimensions.Table 17 pro- Laplacian20.010.84.60.00.00.00.00.00.0 vides a summary comparison between PMER under full and reduced MLR models.In most cases,the reduced Dif.Maps32.34.63.10.00.00.00.00.00.0 MLR model provides same accuracy,even better in LTSA32.326.223.116.912.811.018.329.927.7 some cases,with decreased dimensions compared to the full MLR model. Table 8 PMER for UCI Isolet dataset % Table 10 PMER for Yale-B DB under reduced dimensions Target dimension (d) % Algorithm- 2345678910 Actual dimension Algorithm- omp17.415.614.79.97.98.37.67.26.7 2345678910 LLE 56.249.444.437.728.433.243.8- 20.42.60.00.00.00.00.00.00.0 (k=8) Isomap (1)(2)(3)(3)(3)(3)(3)(3)(3) Laplac. 31.827.824.418.025.926.134.6- LLE 14.213.23.60.00.00.00.00.00.0 (k=8) 1)(2)(2)(3)(3)(3)(3)(3)(3) Dim.Mps17.01L.111.28.58.98.17.78.3 21.25.01.01.01.01.01.01.01.0 Laplacian LTSA30.332.233.738.738.246.638.968.486.1 (1)(2)(2)(2)(2)(2)(3)(3)(3) 30.232.88.82.01.20.00.00.00.0 Table 9 PMER for UCI optical digits dataset Dif.Maps (1)(2)(2)(4)(5)(5)(5)(5)(5) Target dimension (d) 27.022.214.63.20.60.012.672.472.4 Algorithm LISA 2345678910 (1)(2)(2)(4)(4)(5)(6)(0)(0) Ls0mp25.07.33.92.52.32.22.11.71.9 E16.811.69.23.93.85.83.82.33.0 Table 11 PMER for UMIST DB under reduced dimensions Laplacian24.56.910.314.32.93.12.63.22.7 % Dm.Maps62.246.738.544.342.237.235.717.019.7 Actual dimension Algorithm LTSA20.817.817.97.59.912.142.672.282.1 2345678910 In order to find out significant predictor variables 11.01.01.01.01.01.01.01.01.0 Isomap (target dimensions)for a given method,we applyied (1)(2)(3)(4)(2)(2)(3)(3)(3) the Wald test and then selected the most significant 24.08.02.91.79.56.31.71.01.0 LLE subset of predictor variables.Tables from 10 to 16 (1)(2)(1)(2)(1)(1)(2)(2)(2) present PMER for different algorithms,along with re- 4.03.03.04.04.03.03.03.03.0 duced dimensions,which are in parenthesis below the Laplacian (1)(1)(1)(1)(1)(1)(1)(1)(1) PMER values.A quick overview of these tables reveals 28.09.030.01.01.01.01.01.01.0 that for most of the datasets,the value of PMER very Diff.Maps (1)(2)(2)(3)(3)(3)(3)(3)(3) quickly reaches its minimum value along with the opti- mal number of significant predictor variables.The val- 10.010.01.010.05.026.034.059.059.0 LTSA (1)(1)(3)(2)(2)(4)(6)(0)(0) ue of PMER fluctuates around this minimum value even if we continue to include additional predictor variables (dimensions)in the MLR model.For LTSA,howev-

第1期 Table 12 PMER for MNIST DB under reduced dimensions After table 14 % Actual dimension Algorithm- Actual dimension 23 45 678910 Algorithm 2345678910 41.529.212.36.22.61.11.91.71.5 Diff.Maps 21.410.48.27.87.05.85.66.05.6 (1)(2)(3)(4)(4)(4)(4)(4)(4) Tsomap (1)(2)(3)(4)(5)(5)(5)(6)(5) 52.341.532.327.719.212.119.240.240.0 LTSA 10.67.85.25.25.25.45.05.25.0 (1)(2)(3)(3)(4)(5)(7)(0)(0) (1)(2)(1)(3)(4)(4)(5)(5)(6) 13.88.46.06.05.65.45.45.25.2 Table 15 PMER for UCI Isolet DB under reduced dimen- Laplacian (1)(2)(3)(3)(3)(5)(5)(6)(6) sions % 13.013.06.85.45.45.05.24.64.8 Actual dimension Diff.Maps Algorithm- (1)(1)(2)(3)(2)(4)(4)(6)(7) 2345678910 40.045.011.814.013.428.633.063.063.4 26.119.815.611.210.18.57.37.36.9 LTSA (1)(1)(3)(4)(5)(5)(7)(1)(0) Isomap (1)(2)(3)(4)(5)(6)(6)(7)(8) LB70.353.436.420.118.920.224.6 Table 13 PMER for Olivettiefaces under reduced dimen- (k=8)(1)(2)(3)(4)(5)(5)(6) sions % Laplacian51.930.728.015.016.415.215.0 Actual dimension (k=8)(1)(2)(2)(4)(4)(4)(4) Algorithm 234567 8910 41.015.413.110.08.98.67.88.77.8 Diff.Maps 33.318.38.36.76.75.76.24.44.0 (1)(2)(3)(4)(5)(6)(7)(8)(9) Isomap (1)(2)(3)(4)(5)(6)(6)(5)(5) 47.534.035.633.732.833.541.468.987.1 LTSA 43.316.711.715.03.38.52.52.04.4 (1)(2)(2)(3)(3)(4)(5)(6)(0) (1)(2)(3)(3)(4)(4)(5)(5)(5) Laplacian40.015.020.010.021.710.07.5 Table 16 PMER for UCI Optdigit under reduced dimen- (K=8)(1)(2)(2)(3)(5)(6)(6) sions % 63.340.015.05.03.34.32.52.23.0 Actual dimension Diff.Mape Algorithm (1)(2)(3)(4)(4)(4)(4)(4)(5) 2345678910 LTSA51.730.038.315.016.74.317.5 25.07.33.92.52.32.22.11.71.9 (K=8)(1)(2)(3)(4)(5)(5)(7) Isomap (1)(2)(3)(4)(5)(6)(7)(7)(8) 16.819.711.04.14.55.83.02.83.0 LLE Table 14 PMER for Lubeck Uni.under reduced dimen- (1)(2)(3)(4)(5)(6)(6)(7)(8) sions % 83.180.379.480.380.479.380.080.978.5 HLIE Actual dimension (1)(2)(3)(4)(4)(5)(6)(8)(8) Algorithm- 234567 8910 24.56.910.314.32.93.12.63.22.7 Laplacian 44.633.83.10.00.00.00.00.00.0 (1)(2)(2)(3)(5)(6)(6)(6)(6) 即(2)(3)(④)(④)④4)(④(④) 62.246.738.54.342.237.235.717.018.3 47.735.413.84.66.30.00.00.00.0 Difl.Maps (0)(0)(2)(4)(5)(5)(6)(7)(8) LIE (1)(2)(3)(4)(4)(5)(5)(5)(5) 20.817.817.97.499.912.142.672.282.1 LTSA 27.716.96.20.00.00.00.00.00.0 (1)(2)(2)(4)(4)(6)(7)(6)(0) Laplacian (1)(2)(3)(4)(4)(4)(4)(4)(4)

92 智能系统学报 第5卷 Table 17 Summary comparison between PMER under full and reduced MLR models 0 Isomap LIE Laplacian Dift Mape LTSA Datasets Actual dim.Reduced dim Actual dim.Reduced dim. Actual dim.Reduced dim. Actual dim.Reduced dim Actual dim.Reduced dim Yale-B 0.0(3)0.0(3) 0.0(5) 0.0(3) 0.0(4) 1.0(2) 0.0(7) 0.0(5) 0.2(7) 0.0(5) UMIST 1.0(3)1.0(2) 1.0(5) 1.0(2) 1.0(2) 3.0(1) 1.0(3)1.0(3) 0.0(4) 1.0(3) MNIST 5.2(10) 5.6(5) 4.8(4) 5.0(5) 5.4(9) 5.2(6) 4.4(9) 4.6(6) 8.2(6) 1.8(3) Olivettiefaces 4.0 (10) 4.0(5) 1.1(9) 2.0(5) 10.0(8) 7.5(6) 1.0(10)2.2(4) 6.7(3) 4.3(5) Lubeck Uni.0.0 (5) 0.0(4) 0.0(5) 0.0(5) 0.0(5)0.0(4) 0.0(5)1.1(4) 11.0(7)12.1(5 UCI Isolet7.2(9)6.9(8) 28.4(6)18.9(5) 18.0(5)15.0(4)7.7(8)7.8(7) 30.3(2)32.8(3 UCI0 ptdigit1.7(9)1.7(7)) 2.3(9)2.8(7) 2.6(8)2.6(6)17.0(9)17.0(7)7.5(5)9.9(4) Wiley Sons Inc,1985. 7 Conclusion [2]ROWEIS S T,SAUL L K,Nonlinear dimensionality reduc- An overview of the above tables reveals that no tion by locally linear embedding[J].Science,2000,290: single algorithm,using supervised or unsupervised 2323-2326. [3]TENENBAUM J B,SILVA V D,LANGFORD J C.A glob- methods,performed best for all datasets.Among S- al geometric framework for nonlinear dimensionality reduc- NLDR methods,WeightedIso has a slight edge on other tion[J].Science,2000,290:23192323. methods.In contrast,S-Isomap failed to produce [4]BELKIN M,NIYOGI P.Laplacian eigenmaps and spectral meaningful low dimensional embedding in most cases. techniques for embedding and clustering[J].Neural Com- Among unsupervised NLDR methods,Isomap and LLE putations,2003,15(6):1373-1396. performed better than other methods. [5]COIFMAN RR,LAFON S.Diffusion maps J].Applied While comparing U-NLDR MLR with S-NLDR and Computational Harmonic Analysis,2006,21(1):5- we observed that for Isomap and LLE,U-NLDR MLR 30. performed far better than S-NLDR,whereas for LTSA [6]DONOHO D L,GRIMES C E.Hessian eigenmaps:new lo- it was other way round.The U-NLDR MLR frame- cally linear embedding techniques for high-dimensional data [J].Proceedings of the National Academy of Sciences of work also produced promising results for Laplacian and the USA,2003,100(10):5591-5596. diffusion maps by providing comparable results with [7]ZHANG Zhenyue,ZHA Hongyuan.Principal manifolds and other supervised and unsupervised methods. nonlinear dimensionality reduction via tangent space align- The overall performance of U-NLDR algorithms in ment[J].SIAM Joumal on Scientific Computing,2004,6 conjunction with MLR leads us to the following general (1):313-338. conclusions: [8]BUSA F K,KOCSOR A.Locally linear embedding and its i.The use of MLR in conjunction with unsuper- variants for feature extraction [C]//IEEE International vised NLDR methods can be used as a general frame- Workshop on Soft Computing Applications (SOFA).Sze- ged,Hungary and Arad,Romania,2005:216-222. work for classification purposes,especially for LLE, [9]RIDDER D,DUIN R P W.Locally linear embedding for Isomap,LE and Diffusion Maps.It overcomes the classification.Delft:PH-2002-01[R].Pattem Recognition need for developing different supervised versions for Group,Dept of Image Science Technology,Delft Univer- different NLDR methods. sity of Technology.Delft,Netherlands,2002. ii.For all the algorithms,except LTSA,error [10]RIDDER D,KOUROPTEVA O,OKUN O,et al.Super- rates decreased as the target dimensions approached vised locally linear embedding[C]//Proceedings of Artifi- K cial Neural Networks and Neural Information Processing iii.Suitable number of target dimensions depends (ICANN/ICONIP).Istanbul,Turkey,2003:333-341. on the properties of datasets and may vary from 3 to K. [11]VLACHOS M,DOMENICONI C,GUNOPULOS D,et al. Non-linear dimensionality reduction techniques for classifi- Best subsets can be obtained by selecting significant cation and visualization [C]//Proceedings of 8th ACM variables for the MLR model. SIGKDD Interational Conference on Knowledge Discovery References: and Data Mining.Edmonton,Canada,2002:645-651. [12]GENG Xin,ZHAN Dechuan,ZHOU Zhihua.Supervised [1]JAMES M.Classification algorithms[M].New York:John nonlinear dimensionality reduction for visualization and

第1期 Classification and recognition of image/non-image data besed on multinomial logistic regression with nonlinear dimensionality reduction ·93· classification[J].IEEE Transactions on Systems,Man, QIN Shi-yin,received his Bachelor and Cybernetics-Part B:Cyberetics,2005,35 (6): Degree and Master's Degree for Engineer- 1098-1107. ing Science in Automatic Controls and In- [13]LI Hongyu,CHEN Wenbin,SHEN Ifan.Supervised local dustrial Systems Engineering from Lanzhou tangent space alignment for classification[C]//Proceedings Jiaotong University in 1978 and 1984 re- of the Nineteenth Interational Joint Conference on Artifi- spectively,and his PhD Degree in Indus- cial Intelligence.Edinburgh,Scotland,2005:1620-1621. trial Control Engineering and Intelligent Automation from Zhe- [14]ROSS D A,LIM J,LIN R S,et al.Incremental leaming jiang University in 1990.He is now a professor at the School of for robust visual tracking J].Intemational Joumal of Automation Science and Electrical Engineering in Beihang Uni- Computer Vision,2008,77(1):125-141. versity(Beijing University of Aeronautics and Astronautics).He [15]BENGIO Y,PAIEMENT J-F,VINCENT P,et al.Out-of- is a standing member of the council and the secretary-general of sample extensions for LIE,Isomap,MDS,eigenmaps, the Chinese Association for Artificial Intelligence (CAAI),and and spectral clustering[J].Advances in Neural Informa- the vice-chairman of the Society of Intelligent Control and Intelli- tion Processing Systems,2004(16):177-184. gent Management in CAAI,and is also a member of the Commit- [16]HO T K.Nearest neighbors in random subspaces[J]. tee of Intelligent Automation of the Chinese Association of Auto- LNCS:Advances in Pattern Recognition,1998,1451: mation (CAA).He has also been a professor in Xi'an Jiaotong 640648. University and Beijing University of Technology.His current re [17]HOSMER D W,LEMESHOW S.Applied logistic regers- search interests include intelligent autonomous controls of com- sion[M].2nd ed.New York:John Wiley Sons Inc, plex spacecraft;autonomous intelligent control of formation 2000:128-135. processes for multi-robot systems;theory of hybrid control sys [18]FUKUNAGA K.Introduction to statistical pattern recogni- tems with applications;fault diagnosis and fault-tolerant con tion[M].2nd ed.San Diago:Academic Press,1990: trols;image processing and patter recognition;intelligent sys- 219-237. tems and artificial life;the modeling and optimizing decision of About the authors: open complex giant systems;computational intelligence and en- Mudasser NASEER received his tropy optimization.He is the author of 3 monographs.He was a- Master's and M.Phil degrees in Statistics warded the First Level Prize of "1999'National Excellent from Govt.College University Lahore,Pa- Books of Science and Technology and the Progress of Science kistan,in 1990 and 2001.He completed and Technology",and the Gold Medal Prize for the Excellent his MS in CS from LUMS Lahore in 2004. Software in the“5 th National Engineering Design”(1999).He Presently he is pursuing his PhD in Pat- has published more than 150 papers in journals and proceedings tern Recognition from Beihang University,Beijing,China.His in the fields of intelligent controls for complex spacecrafts and field of interest is pattern recognition,machine learning and large scale systems,hybrid control systems,systems engineer- heuristic optimization techniques.So far he has published two ing,artificial intelligence,neural networks,fuzzy control sys- research papers. tems,expert systems,evolutionary computation and entropy op- timization,and so on. 基于非线性降维多项式逻辑斯蒂回归的 图像/非图像数据的分类与识别 Mudasser NASEER,秦世引 (北京航空航天大学自动化科学与电气工程学院,北京100037) 摘要:在面向大规模复杂数据的模式分类和识别问题中,绝大多数的分类器都遇到了维数灾难这一棘手的问题.在进行高 维数据分类之前,基于监督流形学习的非线性降维方法可提供一种有效的解决方法.利用多项式逻辑斯蒂回归方法进行分类 预测,并结合基于非线性降维的非监督流形学习方法解决图像以及非图像数据的分类问题,因而形成了一种新的分类识别方 法.大量的实验测试和比较分析验证了本文所提方法的优越性, 关键词:非线性降维;数据分类;多项式逻辑斯蒂回归;图像/非图像数据 中图分类号:TP391文献标识码:A文章编号:16734785(2010)01008509