Unsupervised Learning: Deep Auto-encoder

Unsupervised Learning

"We expect unsupervised learning to become far more important in the longer term. Human and animal learning is largely unsupervised: we discover the structure of the world by observing it, not by being told the name of every object."
LeCun, Bengio, Hinton, Nature 2015

"As I've said in previous statements: most of human and animal learning is unsupervised learning. If intelligence was a cake, unsupervised learning would be the cake, supervised learning would be the icing on the cake, and reinforcement learning would be the cherry on the cake. We know how to make the icing and the cherry, but we don't know how to make the cake."
Yann LeCun, March 14, 2016 (Facebook)

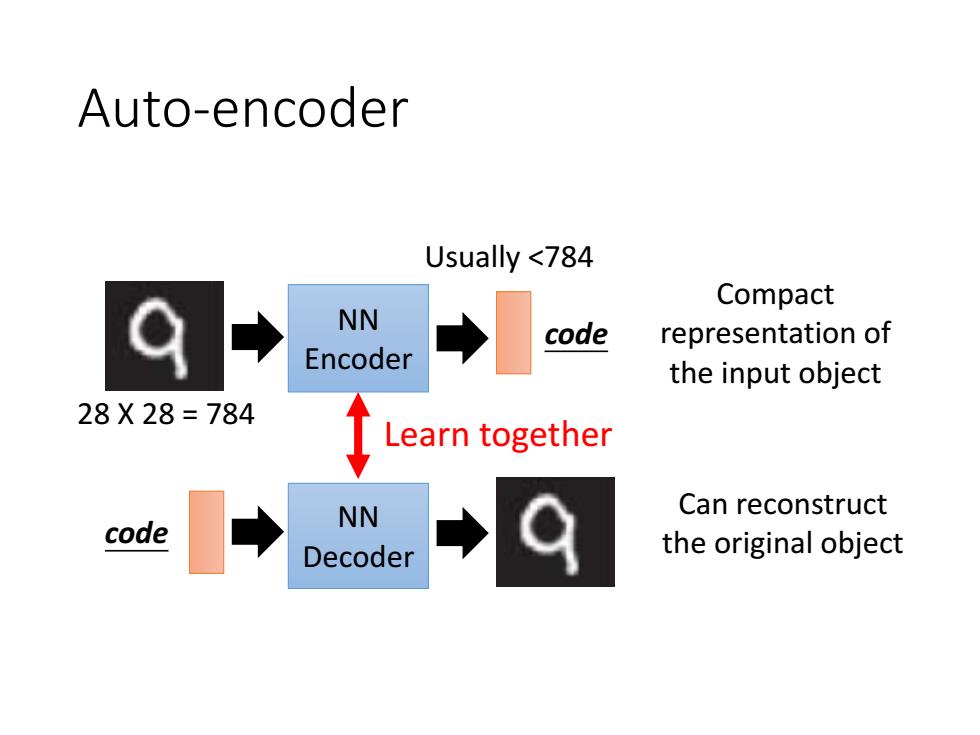

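Auto-encoder

• NN Encoder: maps the input object (for example a 28 × 28 = 784-pixel image) to a code, a compact representation of the input object. The code dimension is usually < 784.
• NN Decoder: maps the code back to the input space, so the code can reconstruct the original object.
• The encoder and the decoder are learned together.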
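The encoder/decoder pair described above can be sketched in NumPy. This is a minimal illustration, not the lecture's implementation: the synthetic data, the code size of 32, the tanh encoder, the linear decoder, and the learning rate are all assumptions chosen to keep the example small. The point it shows is that one reconstruction loss drives the updates of both networks.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for flattened 28x28 images (assumption): 256 samples
# that lie on an 8-dimensional subspace of the 784-dimensional input space.
n_samples, n_pixels, latent_dim = 256, 784, 8
Z = rng.normal(size=(n_samples, latent_dim))
A = rng.normal(scale=1.0 / np.sqrt(n_pixels), size=(latent_dim, n_pixels))
X = Z @ A

code_dim = 32  # assumed code size; any value below 784 gives a bottleneck
W_enc = rng.normal(scale=0.01, size=(n_pixels, code_dim))
W_dec = rng.normal(scale=0.01, size=(code_dim, n_pixels))


def encode(x):
    """NN encoder: input object -> compact code."""
    return np.tanh(x @ W_enc)


def decode(c):
    """NN decoder: code -> reconstructed object."""
    return c @ W_dec


def reconstruction_loss(x):
    """Squared reconstruction error, averaged over samples."""
    return np.mean(np.sum((decode(encode(x)) - x) ** 2, axis=1))


initial_loss = reconstruction_loss(X)
learning_rate = 0.02  # assumed; small enough for plain gradient descent here
for _ in range(500):
    code = encode(X)
    x_hat = decode(code)
    grad_x_hat = 2.0 * (x_hat - X) / n_samples
    grad_W_dec = code.T @ grad_x_hat
    grad_code = grad_x_hat @ W_dec.T
    grad_pre = grad_code * (1.0 - code ** 2)  # derivative of tanh
    grad_W_enc = X.T @ grad_pre
    # Both networks are updated from the same loss: they learn together.
    W_enc -= learning_rate * grad_W_enc
    W_dec -= learning_rate * grad_W_dec
final_loss = reconstruction_loss(X)

print(encode(X).shape, initial_loss, final_loss)
```

No labels appear anywhere in the loop; the input itself is the training target, which is what makes the setup unsupervised.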

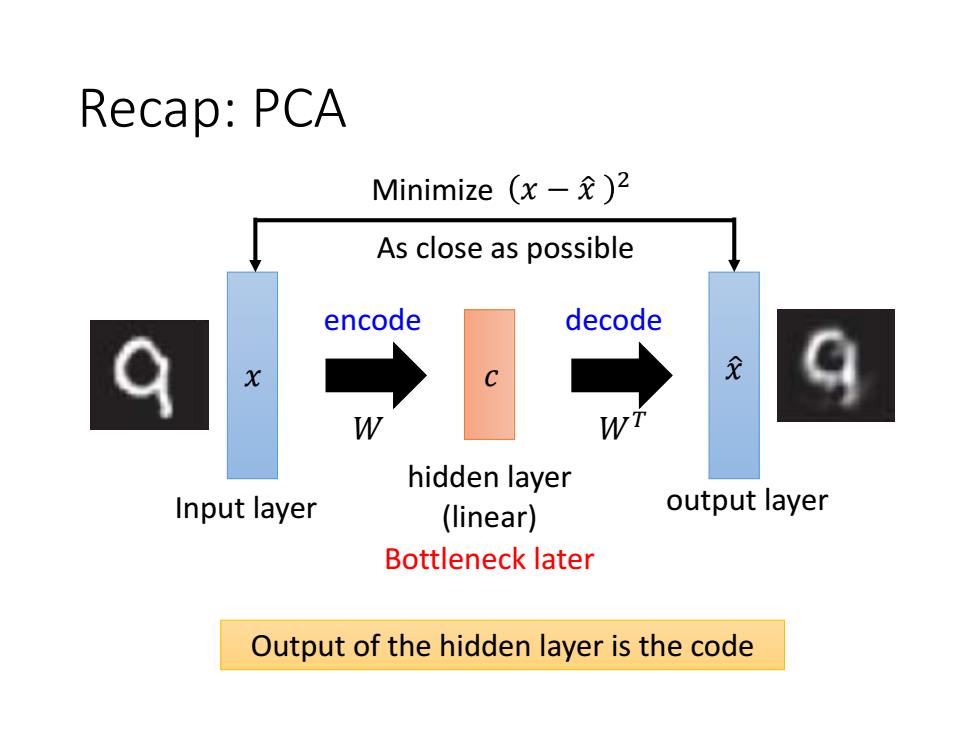

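Recap: PCA

• Encode: the input layer $x$ is mapped by $W$ to a linear hidden layer, $c = Wx$. The output of the hidden layer is the code.
• Decode: the output layer is $\hat{x} = W^T c$.
• Objective: minimize $\lVert x - \hat{x} \rVert^2$, so that $\hat{x}$ is as close as possible to $x$.
• The hidden layer is the bottleneck layer.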
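The view of PCA as a linear auto-encoder with tied weights ($W$ to encode, $W^T$ to decode) can be checked numerically. The sketch below is an illustration under assumptions: the data are synthetic, the bottleneck size `k = 3` is arbitrary, and $W$ is obtained from an SVD of the centered data rather than by gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 samples in 10 dimensions, with a different
# variance along each axis so that some directions matter more than others.
X = rng.normal(size=(200, 10)) * np.linspace(3.0, 0.3, 10)
X_centered = X - X.mean(axis=0)

# Rows of Vt are the principal directions, sorted by decreasing variance.
_, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)

k = 3        # size of the bottleneck layer (assumed)
W = Vt[:k]   # shape (k, 10): encoder weights; the decoder uses W^T

code = X_centered @ W.T   # encode: c = W x for every sample
X_hat = code @ W          # decode: x_hat = W^T c

# Total squared reconstruction error, and the energy in the discarded directions.
error = np.sum((X_centered - X_hat) ** 2)
discarded = np.sum(singular_values[k:] ** 2)
print(code.shape, error, discarded)
```

The two printed error values agree: the reconstruction error of this linear bottleneck is exactly the variance carried by the directions it drops.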

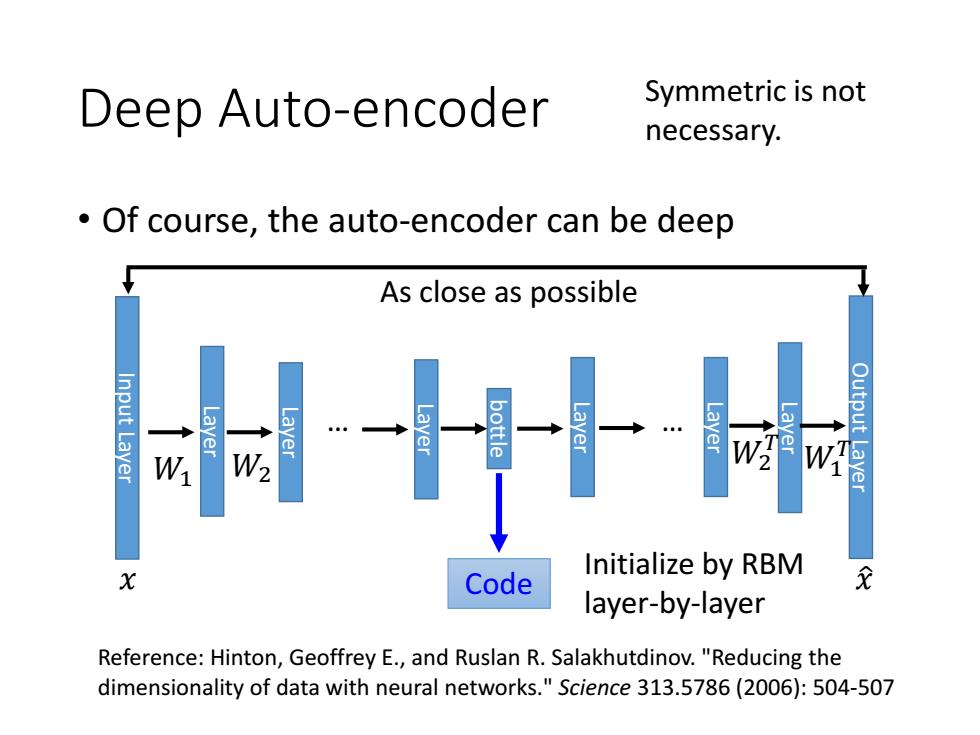

Deep Auto-encoder

• Of course, the auto-encoder can be deep: input layer → layers ($W_1$, $W_2$, …) → bottleneck layer (code) → layers (…, $W_2^T$, $W_1^T$) → output layer.
• The output $\hat{x}$ should be as close as possible to the input $x$.
• Symmetric is not necessary: the decoder weights do not have to be the transposes of the encoder weights.
• Initialize by RBM, layer-by-layer.

Reference: Hinton, Geoffrey E., and Ruslan R. Salakhutdinov. "Reducing the dimensionality of data with neural networks." Science 313.5786 (2006): 504-507.

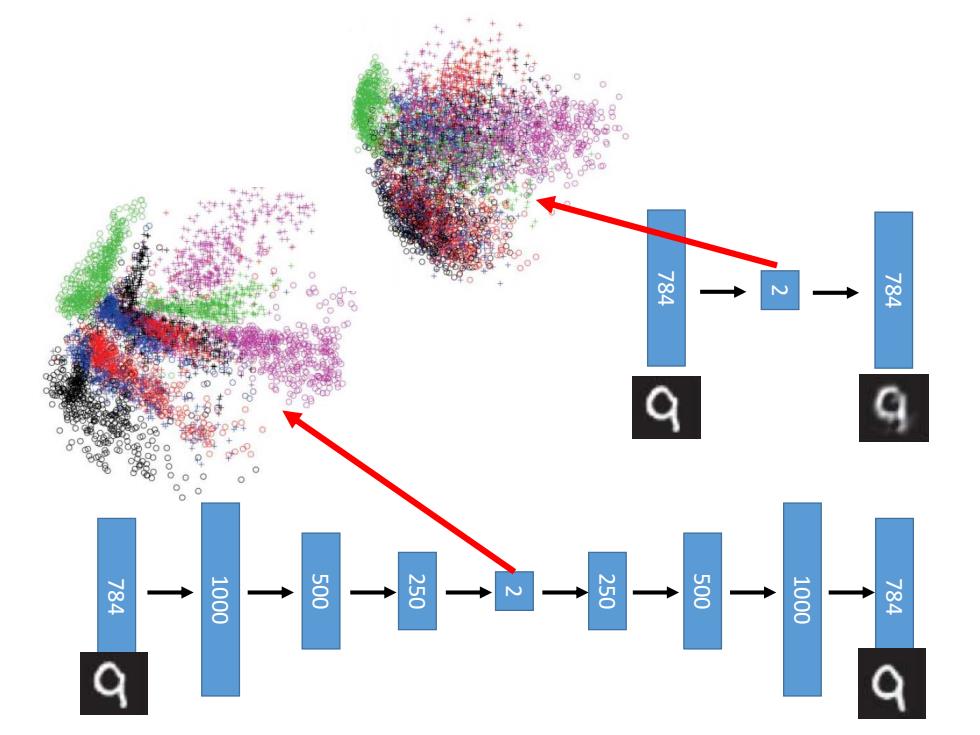

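Deep Auto-encoder

Figure: the original image compared with its reconstruction by PCA and by a deep auto-encoder.

• PCA: 784 → 30 → 784
• Deep auto-encoder: 784 → 1000 → 500 → 250 → 30 → 250 → 500 → 1000 → 784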

With a 2-dimensional code:

• PCA: 784 → 2 → 784
• Deep auto-encoder: 784 → 1000 → 500 → 250 → 2 → 250 → 500 → 1000 → 784
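The layer sizes listed on these slides can be turned into a forward pass to make the shapes concrete. This is only a sketch under assumptions: the weights are random and untrained (no RBM initialization), the hidden layers use tanh, and the code and output layers are linear. `build_autoencoder` and `forward` are hypothetical helper names, not part of any library.

```python
import numpy as np


def build_autoencoder(code_dim, rng):
    """Random weights for 784-1000-500-250-code-250-500-1000-784."""
    sizes = [784, 1000, 500, 250, code_dim, 250, 500, 1000, 784]
    return [rng.normal(scale=1.0 / np.sqrt(n_in), size=(n_in, n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(x, weights):
    """Run x through every layer; return (code, reconstruction)."""
    n_encoder_layers = len(weights) // 2
    h = x
    code = None
    for i, W in enumerate(weights):
        h = h @ W
        is_code_layer = i == n_encoder_layers - 1
        is_output_layer = i == len(weights) - 1
        if not (is_code_layer or is_output_layer):
            h = np.tanh(h)  # assumed nonlinearity for the hidden layers
        if is_code_layer:
            code = h
    return code, h


rng = np.random.default_rng(0)
x = rng.random((5, 784))  # five stand-in images, flattened
for code_dim in (30, 2):
    weights = build_autoencoder(code_dim, rng)
    code, x_hat = forward(x, weights)
    print(code_dim, code.shape, x_hat.shape)
```

Each decoder matrix here is drawn independently of the encoder, so the network is not symmetric in its weights; tying them would mean reusing the transposed encoder matrices in the second half.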

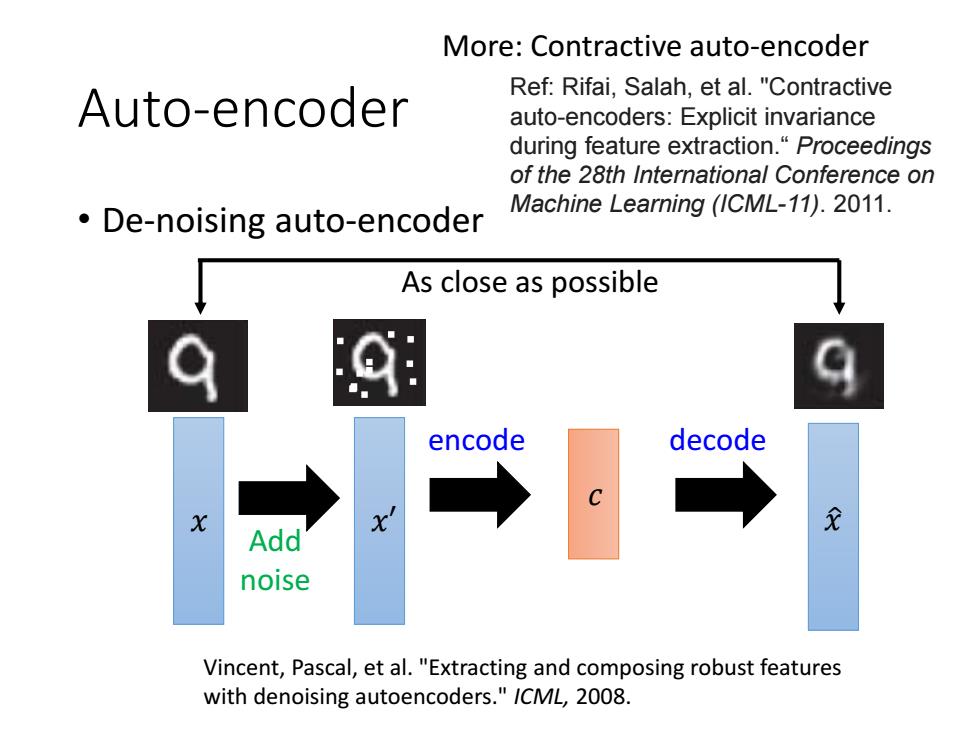

Auto-encoder

• De-noising auto-encoder: add noise to the input $x$ to obtain $x'$, encode $x'$ into the code $c$, and decode $c$ into $\hat{x}$. The output $\hat{x}$ should be as close as possible to the original $x$, not to the noisy $x'$.
Ref: Vincent, Pascal, et al. "Extracting and composing robust features with denoising autoencoders." ICML, 2008.
• More: contractive auto-encoder.
Ref: Rifai, Salah, et al. "Contractive auto-encoders: Explicit invariance during feature extraction." Proceedings of the 28th International Conference on Machine Learning (ICML-11). 2011.
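The de-noising objective can be written down in a few lines. The sketch below only illustrates the loss, under loud assumptions: the data are synthetic and exactly low-rank, and the encoder and decoder are a fixed linear projection standing in for trained networks. A real de-noising auto-encoder would train both networks by minimizing this loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic clean data on an 8-dimensional subspace of a 784-dim space.
n_samples, n_pixels, latent_dim = 256, 784, 8
X = (rng.normal(size=(n_samples, latent_dim))
     @ rng.normal(size=(latent_dim, n_pixels)))

# Stand-in encoder/decoder (assumption): an orthonormal basis of the
# subspace that contains the clean data, taken from an SVD.
_, _, Vt = np.linalg.svd(X, full_matrices=False)
W = Vt[:latent_dim]


def encode(x):
    return x @ W.T


def decode(c):
    return c @ W


def denoising_loss(x_clean, noise_std, rng):
    """Corrupt the input, reconstruct it, and compare with the clean input."""
    x_noisy = x_clean + rng.normal(scale=noise_std, size=x_clean.shape)  # x'
    x_hat = decode(encode(x_noisy))
    # The target is the clean x, not the noisy x'.
    return np.mean(np.sum((x_hat - x_clean) ** 2, axis=1))


noise_std = 0.5
loss = denoising_loss(X, noise_std, rng)
noise_energy = noise_std ** 2 * n_pixels  # expected squared norm of the noise
print(loss, noise_energy)
```

Because the code has only 8 dimensions, most of the added noise cannot pass through the bottleneck, so the reconstruction ends up much closer to the clean input than the noisy input was.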

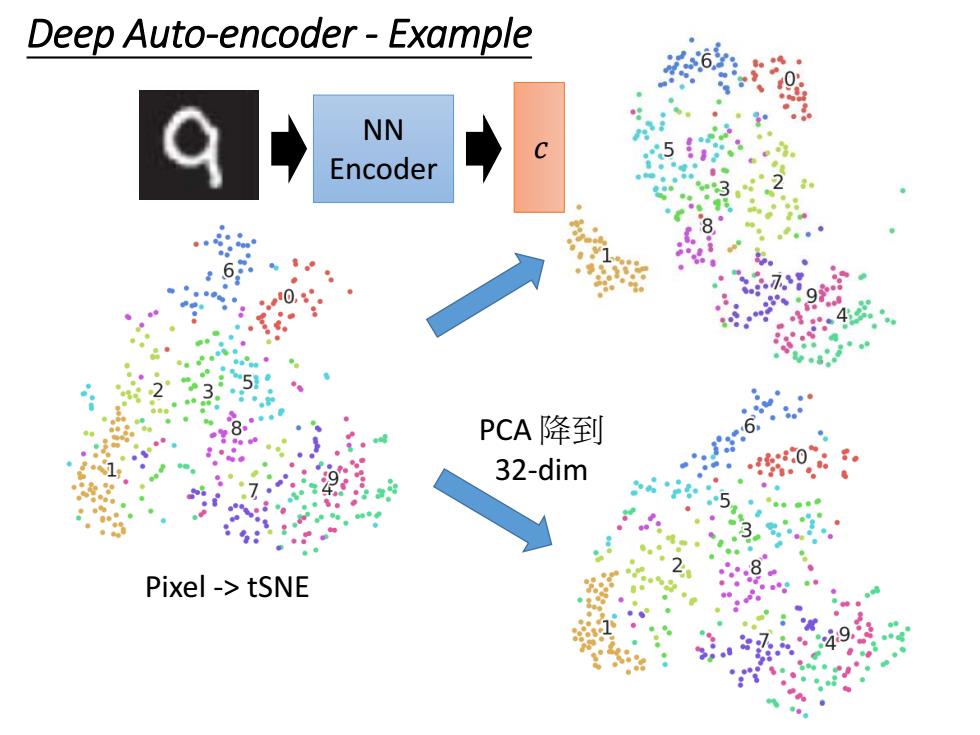

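Deep Auto-encoder - Example

Figure: the NN encoder maps each input image to a code $c$. For comparison, the figure also shows PCA reducing the input to 32-dim, and the raw pixels visualized with t-SNE (Pixel -> t-SNE).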

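Auto-encoder - Text Retrieval

• Vector Space Model: documents and the query are represented as vectors.
• Bag-of-word: a word string is represented by a vector with one entry per vocabulary word. For the word string "This is an apple":

| this | is | a | an | apple | pen |
|------|----|---|----|-------|-----|
| 1    | 1  | 0 | 1  | 1     | 0   |

• Semantics are not considered.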
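The bag-of-word vector on this slide can be reproduced with a short sketch. The six-word vocabulary is the one shown on the slide. The query string "a pen", the binary (presence/absence) encoding, and the use of cosine similarity for matching are assumptions made for illustration.

```python
import numpy as np

vocabulary = ["this", "is", "a", "an", "apple", "pen"]
word_index = {word: i for i, word in enumerate(vocabulary)}


def bag_of_words(text):
    """Binary vector: 1 if the vocabulary word occurs in the text, else 0."""
    vector = np.zeros(len(vocabulary))
    for word in text.lower().split():
        if word in word_index:
            vector[word_index[word]] = 1.0
    return vector


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


document = bag_of_words("This is an apple")
query = bag_of_words("a pen")  # hypothetical query
print(document)
print(cosine_similarity(document, query))
```

The document vector has entries 1, 1, 0, 1, 1, 0, matching the slide. The query shares no word with the document, so the similarity is 0: every word is its own independent dimension, whatever it means, which is the sense in which semantics are not considered.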