
Dive into Deep Learning 12. LeNet, AlexNet, VGG, and NiN
Chinese textbook: zh.d2l.ai
English textbook: www.d2l.ai
Lecture video: https://courses.d2l.ai/berkeley-stat-157/units/lenet.html


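Overview
• LeNet (the first convolutional neural network)
• AlexNet
  • An upgraded LeNet
  • ReLU activation, dropout, translation invariance
• VGG
  • A refined AlexNet
  • Repeated VGG blocks
• NiN
  • 1x1 convolution + global pooling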

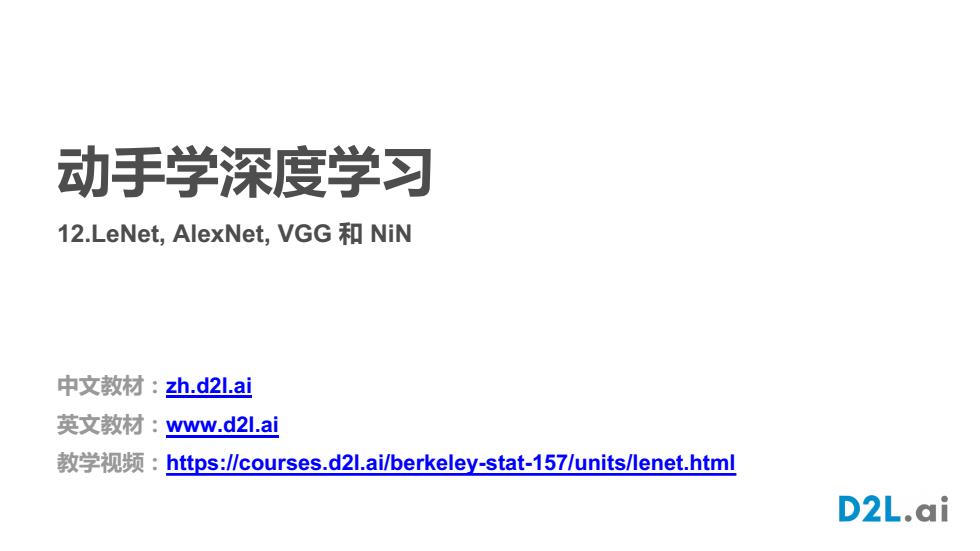

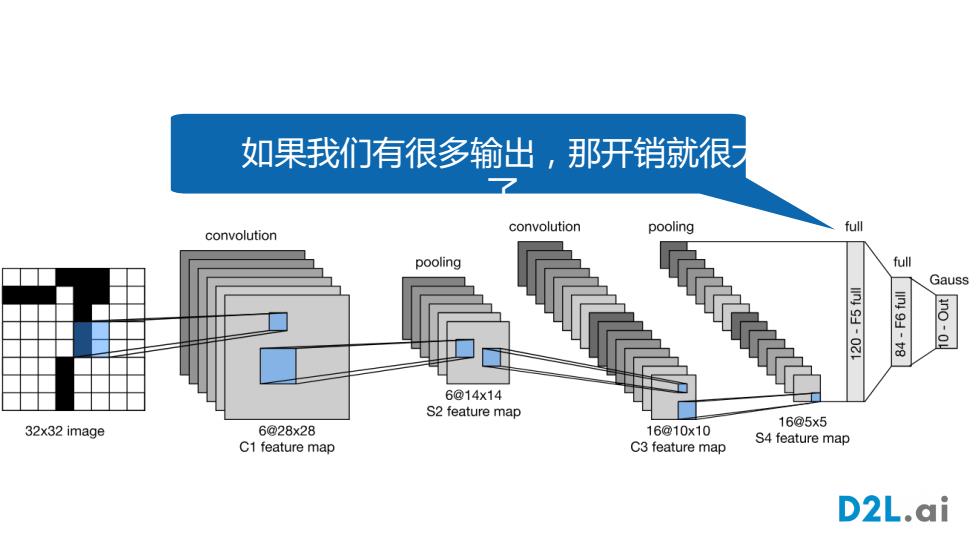

LeNet Architecture

[Figure: LeNet architecture. 32x32 input image → convolution → C1 feature maps 6@28x28 → pooling → S2 feature maps 6@14x14 → convolution → C3 feature maps 16@10x10 → pooling → S4 feature maps 16@5x5 → full → full → Gauss]
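The sizes in the figure follow from one rule. A k×k window with stride s and padding p turns an n×n map into a map whose side is ⌊(n + 2p − k)/s⌋ + 1. The pure-Python sketch below reproduces the figure's feature-map shapes. The channel counts (6 and 16) come from the figure. The kernel sizes and strides are assumptions, since the slide does not print them: 5×5 convolutions without padding, and 2×2 pooling with stride 2. The function names are hypothetical.

```python
def conv_out(n, k, stride=1, pad=0):
    """Side length after a k x k window slides over an n x n input."""
    return (n + 2 * pad - k) // stride + 1

def lenet5_feature_maps(n=32):
    """(layer, channels, side) for LeNet-5's convolution/pooling stages.

    Assumes 5x5 convolutions (stride 1, no padding) and 2x2 pooling with stride 2.
    """
    c1 = conv_out(n, 5)             # C1: convolution shrinks each side by 4
    s2 = conv_out(c1, 2, stride=2)  # S2: pooling halves each side
    c3 = conv_out(s2, 5)            # C3: convolution
    s4 = conv_out(c3, 2, stride=2)  # S4: pooling
    return [("C1", 6, c1), ("S2", 6, s2), ("C3", 16, c3), ("S4", 16, s4)]

maps = lenet5_feature_maps()
for name, channels, side in maps:
    print(f"{name}: {channels}@{side}x{side}")

# S4 is flattened before the fully connected ("full") layers
_, ch, side = maps[-1]
print("inputs to first full layer:", ch * side * side)  # 400
```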


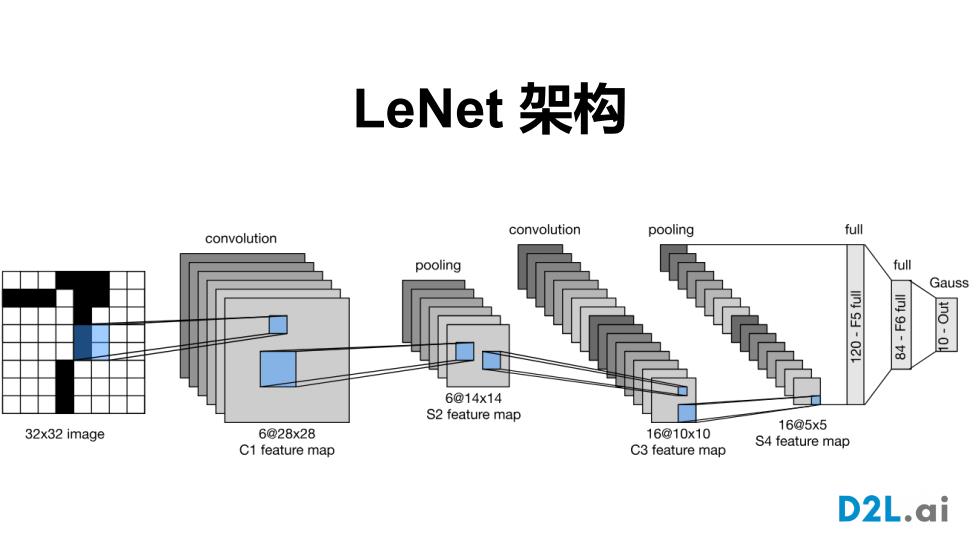

Handwritten Digit Recognition


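MNIST
• Centered and scaled
• 50,000 training examples (the official training set has 60,000; a common split holds 10,000 of them out for validation)
• 10,000 test examples
• Image size 28×28
• 10 classes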

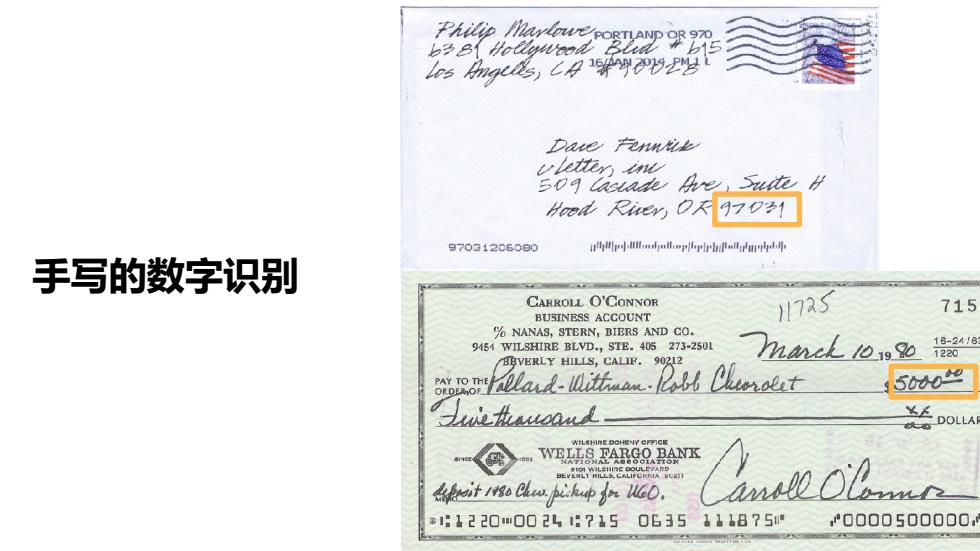

LeNet-5 (AT&T Research)

Y. LeCun, L. Bottou, Y. Bengio, P. Haffner, 1998. Gradient-based learning applied to document recognition.


If we have many outputs, the cost becomes very high.

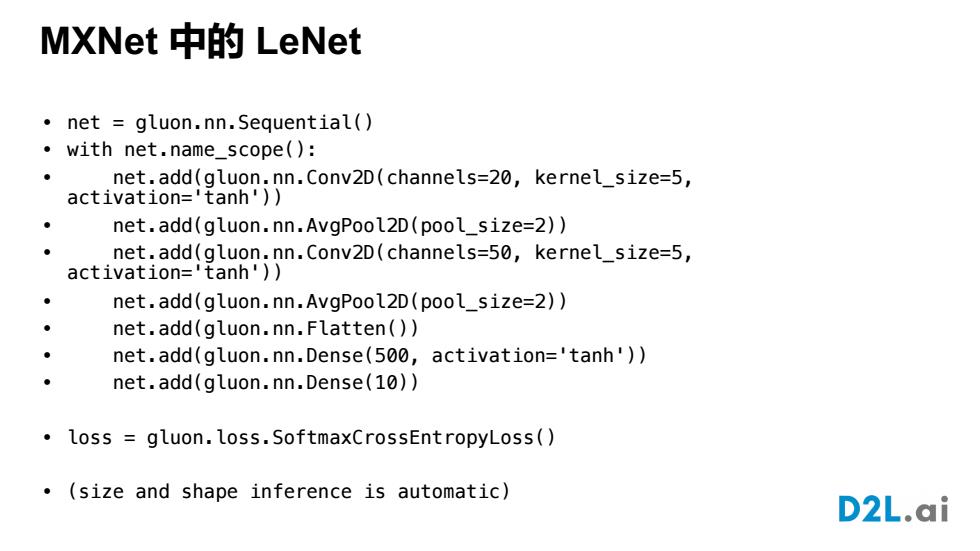


LeNet in MXNet

```python
from mxnet import gluon

net = gluon.nn.Sequential()
with net.name_scope():
    # Two convolution + average-pooling stages with tanh activations
    net.add(gluon.nn.Conv2D(channels=20, kernel_size=5, activation='tanh'))
    net.add(gluon.nn.AvgPool2D(pool_size=2))
    net.add(gluon.nn.Conv2D(channels=50, kernel_size=5, activation='tanh'))
    net.add(gluon.nn.AvgPool2D(pool_size=2))
    # Flatten the feature maps, then two fully connected layers
    net.add(gluon.nn.Flatten())
    net.add(gluon.nn.Dense(500, activation='tanh'))
    net.add(gluon.nn.Dense(10))  # one output per digit class
loss = gluon.loss.SoftmaxCrossEntropyLoss()
```

(Size and shape inference is automatic.)
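Because Gluon infers shapes automatically, the LeNet code never states its intermediate sizes or parameter counts. The pure-Python sketch below tracks them by hand, so it runs without MXNet. It assumes a single-channel 28×28 MNIST input, which the slide does not specify. It also assumes Gluon's defaults: stride 1 and no padding for `Conv2D`, and a stride equal to `pool_size` for `AvgPool2D`. The helper names are hypothetical.

```python
def conv_out(n, k, stride=1):
    """Side length after a k x k window (no padding) over an n x n input."""
    return (n - k) // stride + 1

def lenet_mxnet_summary(in_channels=1, side=28):
    """Walk the Gluon LeNet layer by layer: (name, output shape, #params)."""
    rows = []
    c, n = in_channels, side
    # Conv2D(channels=20, kernel_size=5): 20*c*5*5 weights plus 20 biases
    params = 20 * c * 5 * 5 + 20
    c, n = 20, conv_out(n, 5)
    rows.append(("conv1", (c, n, n), params))
    # AvgPool2D(pool_size=2): stride defaults to the pool size, no parameters
    n = conv_out(n, 2, stride=2)
    rows.append(("pool1", (c, n, n), 0))
    # Conv2D(channels=50, kernel_size=5)
    params = 50 * c * 5 * 5 + 50
    c, n = 50, conv_out(n, 5)
    rows.append(("conv2", (c, n, n), params))
    n = conv_out(n, 2, stride=2)
    rows.append(("pool2", (c, n, n), 0))
    # Flatten, then Dense(500) and Dense(10): weights plus biases
    flat = c * n * n
    rows.append(("flatten", (flat,), 0))
    rows.append(("dense1", (500,), flat * 500 + 500))
    rows.append(("dense2", (10,), 500 * 10 + 10))
    return rows

rows = lenet_mxnet_summary()
for name, shape, params in rows:
    print(f"{name:8s} {str(shape):14s} {params}")
print("total params:", sum(p for _, _, p in rows))  # total params: 431080
```

Under these assumptions, the first dense layer holds 400,500 of the 431,080 parameters. The convolutional layers are cheap by comparison.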

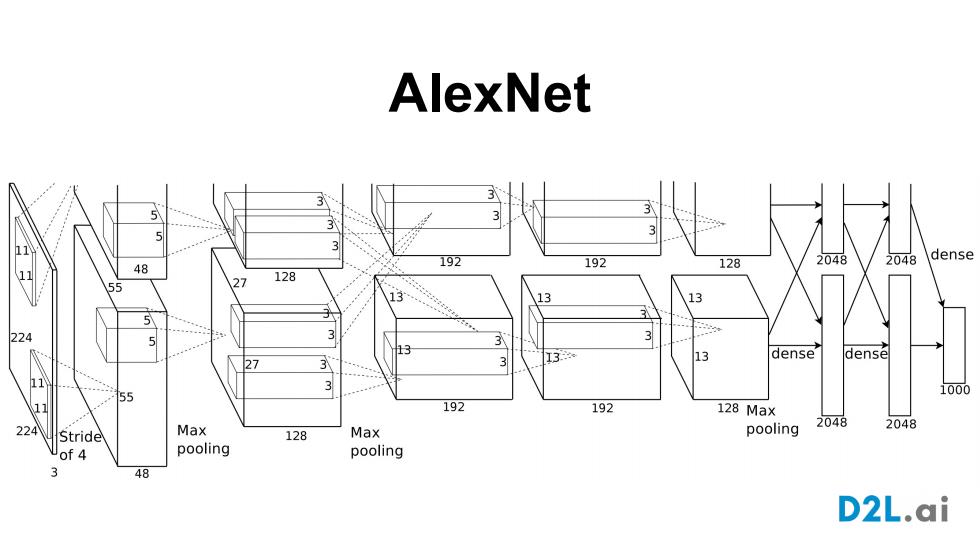

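AlexNet

[Figure: AlexNet architecture split across two GPUs. A 224×224 input feeds 11×11 first-layer kernels with stride 4. The convolutional layers have 48, 128, 192, 192, and 128 channels per GPU, interleaved with max pooling. Two dense layers of 2048 units per GPU lead to a 1000-way output.]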
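The AlexNet figure labels a 224×224 input and an 11×11, stride-4 first layer. The standard output-size formula shows that these alone give a 54×54 map. The commonly quoted 55×55 needs either a 227×227 input or 2 pixels of padding. The sketch below checks this and then follows the spatial size through the rest of the stack. The later layer hyperparameters are assumptions taken from the usual description of AlexNet, not read off the slide: 3×3 max pooling with stride 2, a padded 5×5 convolution, and three padded 3×3 convolutions. The function names are hypothetical.

```python
def out_size(n, k, stride=1, pad=0):
    """Standard output-size formula: floor((n + 2*pad - k) / stride) + 1."""
    return (n + 2 * pad - k) // stride + 1

# First layer: 11x11 kernels with stride 4; the result depends on input size and padding
print(out_size(224, 11, stride=4))         # 54
print(out_size(227, 11, stride=4))         # 55
print(out_size(224, 11, stride=4, pad=2))  # 55

def alexnet_spatial(n=227):
    """Side lengths through an AlexNet-style conv/pool stack (assumed hyperparameters)."""
    sizes = [n]
    n = out_size(n, 11, stride=4)  # conv1: 11x11, stride 4
    sizes.append(n)
    n = out_size(n, 3, stride=2)   # overlapping 3x3 max pooling, stride 2
    sizes.append(n)
    n = out_size(n, 5, pad=2)      # conv2: 5x5, padding keeps the size
    sizes.append(n)
    n = out_size(n, 3, stride=2)   # max pooling
    sizes.append(n)
    for _ in range(3):             # conv3-conv5: 3x3, padding 1 keeps the size
        n = out_size(n, 3, pad=1)
    sizes.append(n)
    n = out_size(n, 3, stride=2)   # final max pooling before the dense layers
    sizes.append(n)
    return sizes

print(alexnet_spatial())  # [227, 55, 27, 27, 13, 13, 6]
```

The 54 vs. 55 results show how the floor in the formula makes the output size sensitive to a few pixels of input size or padding.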

Machine Learning (2001)
• Extract features
• Choose a kernel to measure similarity
• Convex optimization problem
• Many elegant theorems ...

[Figure: cover of Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond, by Bernhard Schölkopf and Alexander J. Smola]
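In the kernel-method workflow above, the kernel is the similarity measure between extracted feature vectors. The sketch below builds the Gram matrix of pairwise similarities, which is the input to a kernel SVM's convex training problem. It uses the Gaussian (RBF) kernel as an example choice with a bandwidth of `sigma=1.0`. Both the kernel and the bandwidth are assumptions, and the function names are hypothetical.

```python
import math

def rbf_kernel(x, y, sigma=1.0):
    """Gaussian (RBF) kernel: exp(-||x - y||^2 / (2 * sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2 * sigma ** 2))

def gram_matrix(points, kernel=rbf_kernel):
    """Pairwise similarities K[i][j] = kernel(points[i], points[j])."""
    return [[kernel(p, q) for q in points] for p in points]

# Three toy 2-D feature vectors: two close together, one far away
points = [(0.0, 0.0), (1.0, 0.0), (3.0, 4.0)]
K = gram_matrix(points)
for row in K:
    print(["%.4f" % v for v in row])
```

Nearby points get similarities close to 1 and distant points get similarities close to 0. Every point has similarity exactly 1 with itself.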