版像 NJUAT 南京大学 人工智能学院 SCHODL OF ARTIFICIAL INTELUGENCE,NANJING UNIVERSITY Lecture 5.Online Convex Optimization Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 5. Online Convex Optimization Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline Online Learning Online Convex Optimization ·Convex Functions Strongly Convex Functions Exp-concave Functions Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 2

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 2 Outline • Online Learning • Online Convex Optimization • Convex Functions • Strongly Convex Functions • Exp-concave Functions

A Brief Review of Statistical Learning The fundamental goal of(supervised)learning:Risk Minimization(RM), minE(h(x),y)], h∈H where -h denotes the hypothesis(model)from the hypothesis space Ht. -(x,y)is an instance chosen from a unknown distribution D. -e(h(x),y)denotes the loss of using hypothesis h on the instance(x,y). Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 3

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 3 A Brief Review of Statistical Learning

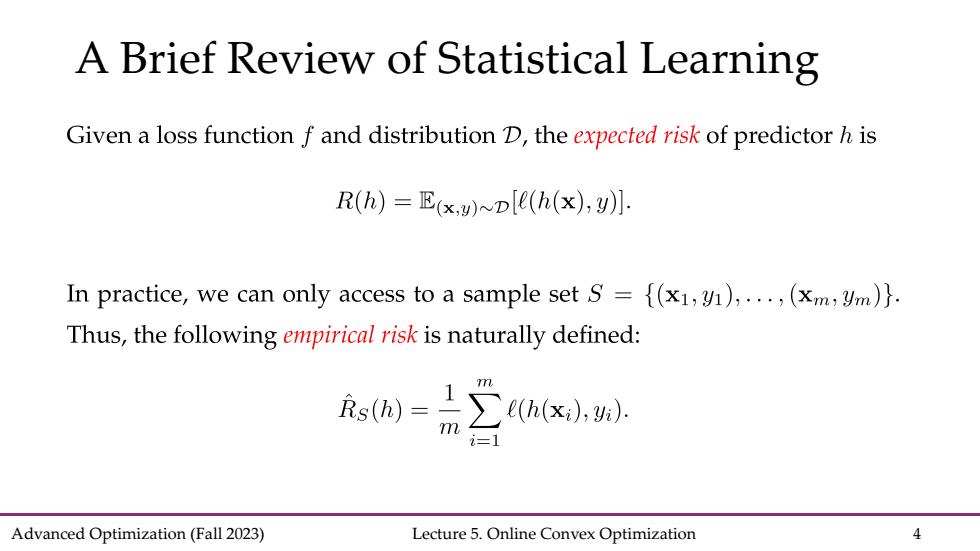

a Brief Review of Statistical Learning Given a loss function f and distribution D,the expected risk of predictor h is R(h)=E(xD[(h(x),y)]. In practice,we can only access to a sample set S={(x1,1),...,(xm,Um)} Thus,the following empirical risk is naturally defined: Rsm=工x.8 77 i=1 Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 4

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 4 A Brief Review of Statistical Learning

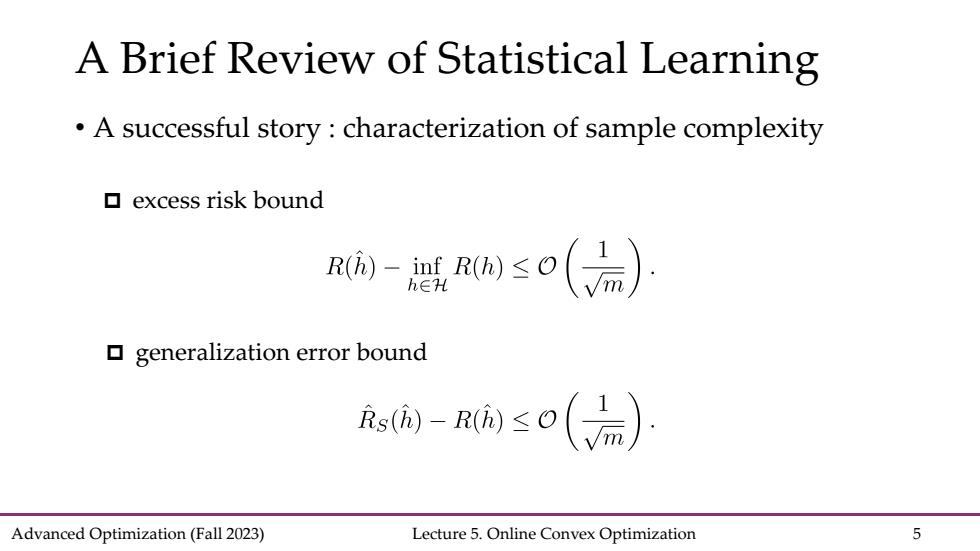

A Brief Review of Statistical Learning A successful story characterization of sample complexity ▣excess risk bound -恶so(a) generalization error bound R,a-≤o(元) Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 5

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 5 A Brief Review of Statistical Learning • A successful story : characterization of sample complexity p excess risk bound p generalization error bound

Offline Towards Online Learning Traditional statistical machine learning The training data are available offline Learning model is trained based on the offline data in a batch setting Online learning scenario In real applications,data are in the form of stream New data are being collected all the time:after observing a new data point,the model should be online updated at a constant cost Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 6

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 6 Offline Towards Online Learning • Traditional statistical machine learning • The training data are available offline • Learning model is trained based on the offline data in a batch setting • Online learning scenario • In real applications, data are in the form of stream • New data are being collected all the time: after observing a new data point, the model should be online updated at a constant cost

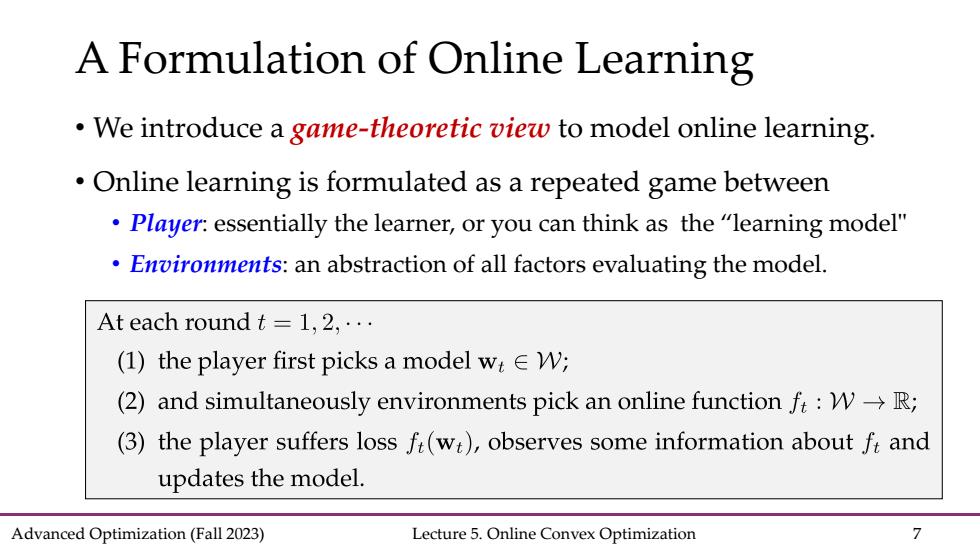

A Formulation of Online Learning We introduce a game-theoretic view to model online learning. Online learning is formulated as a repeated game between Player:essentially the learner,or you can think as the"learning model" Environments:an abstraction of all factors evaluating the model. At each round t =1,2,... (1)the player first picks a model wEW; (2)and simultaneously environments pick an online function f:w->R; (3)the player suffers loss f(wt),observes some information about fr and updates the model. Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 7

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 7 A Formulation of Online Learning • We introduce a game-theoretic view to model online learning. • Online learning is formulated as a repeated game between • Player: essentially the learner, or you can think as the “learning model" • Environments: an abstraction of all factors evaluating the model

Online Learning:Formulation At each round t=1,2,... (1)the player first picks a model wW; (2)and simultaneously environments pick an online function fr:W->R; (3)the player suffers loss ft(wt),observes some information about ft and updates the model. ·An example of online function f:W→R. Considering the task of online classification,we have (i)the loss e:Jy×Jy→R,and fi(w)=l(h(w;x:),) (i)the hypothesis function h:W×X→). =e(w xt,Ut)for simplicity Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 8

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 8 Online Learning: Formulation for simplicity • Considering the task of online classification, we have

Online Learning:Formulation At each round t=l,2,· (1)the player first picks a model wtW; (2)and simultaneously environments pick an online function fr:W->R; (3)the player suffers loss fi(wt),observes some information about fi and updates the model. Spam filtering (1)Player submits a spam classifier w ↓ (2)A mail is revealed whether it is a spam ☒ (3)Player suffers loss fi(w)and updates model Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 9

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 9 Online Learning: Formulation Spam filtering

Applications spam detection(online classification/regression):At each timet=1,2,... ·receive an email xt∈R, ·predict whether it is a spam∈{-l,+li SPAM 。see its true label y∈{-1,+1l} aggregating weather prediction(the expert problem):At each day t=1,2,... obtain temperature predictions from N models; make the final prediction by randomly following a model according to the probability p∈△w; on the next day observe the loss of each model f0,1]N. Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 10

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 10 Applications