版套是 NJUAT 南京大学 人工智能学院 SCHODL OF ARTIFICIAL INTELUGENCE,NANJING UNIVERSITY Lecture 1.Mathematical Background Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 1. Mathematical Background Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline 。Calculus ·Linear Algebra Probability Statistics ·Information Theory Optimization in Machine Learning Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 2

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 2 Outline • Calculus • Linear Algebra • Probability & Statistics • Information Theory • Optimization in Machine Learning

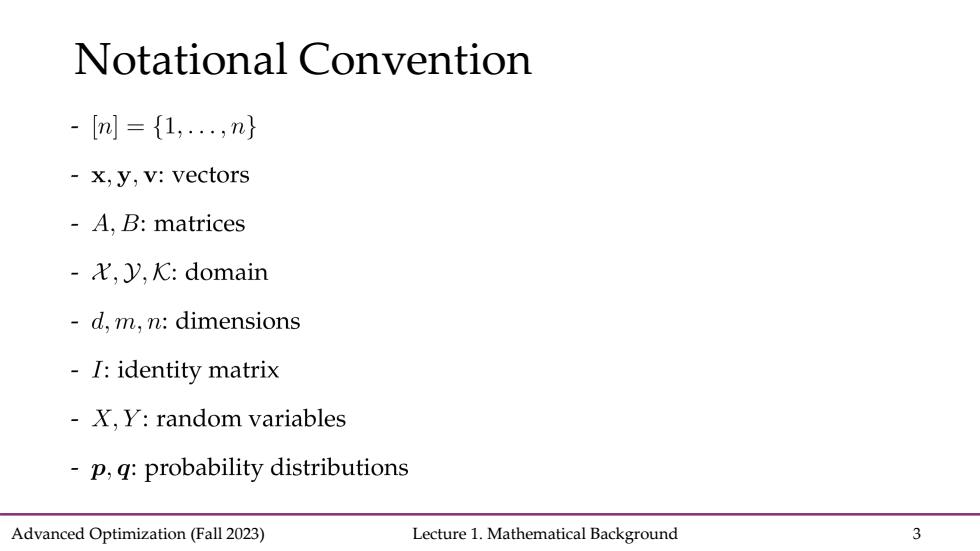

Notational Convention -[m={1,..,n} -x,y,v:vectors A.B:matrices -,K:domain d,m,n:dimensions -I:identity matrix X,Y:random variables p,q:probability distributions Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 3

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 3 Notational Convention

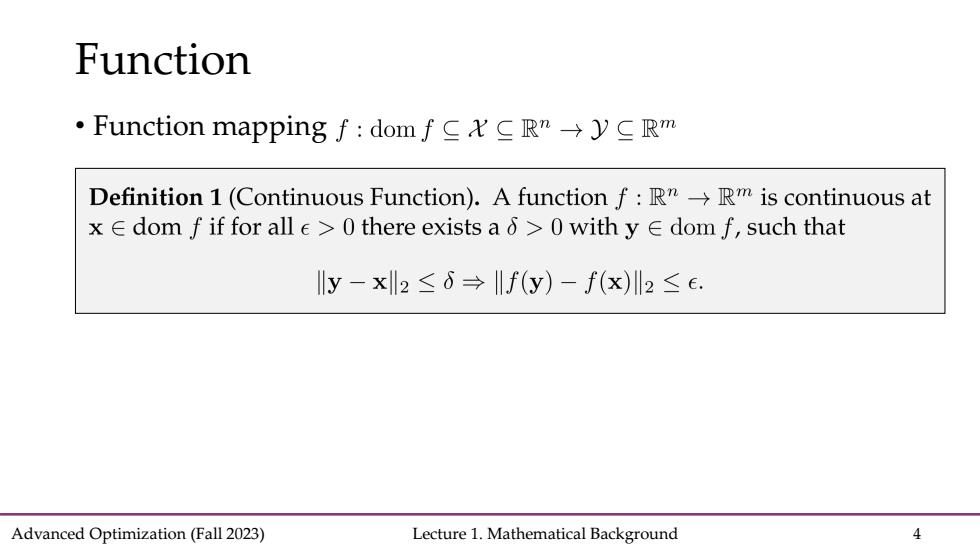

Function ·Function mapping f:domf R"→yC Rm Definition 1(Continuous Function).A function f:R"->Rm is continuous at x E dom f if for all e>0 there exists a >0 with y E dom f,such that Ily-x2≤6→If(y)-f(x)2≤e. Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 4

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 4 Function • Function mapping

Part 1.Calculus Gradient and Derivatives ·essian ·Chain Rule Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 5

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 5 Part 1. Calculus • Gradient and Derivatives • Hessian • Chain Rule

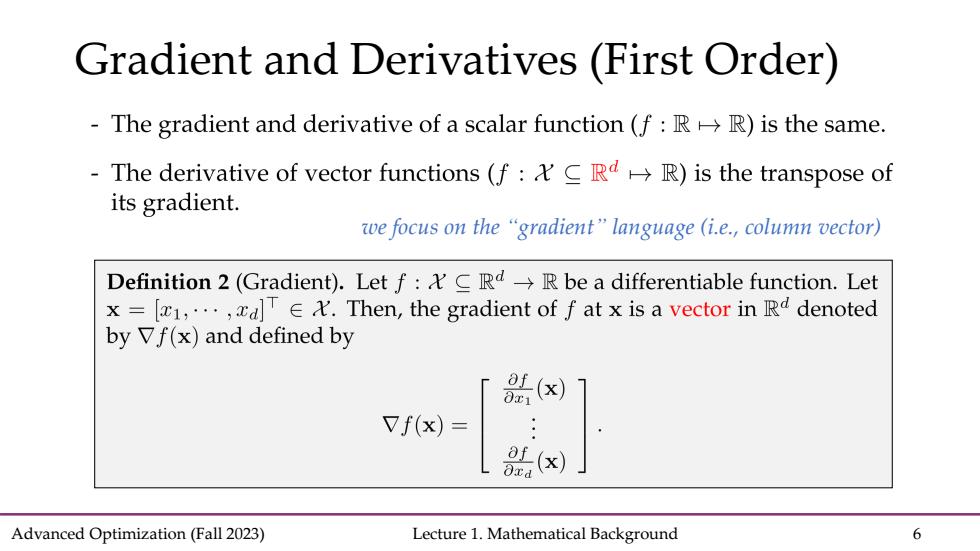

Gradient and Derivatives(First Order) The gradient and derivative of a scalar function(f:RR)is the same. The derivative of vector functions (f C RdR)is the transpose of its gradient. we focus on the "gradient"language (i.e.,column vector) Definition 2(Gradient).Let f:C Rd-R be a differentiable function.Let x=[1,...,d].Then,the gradient of f at x is a vector in Rd denoted by Vf(x)and defined by Vf(x)= ... X Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 6

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 6 Gradient and Derivatives (First Order) we focus on the “gradient” language (i.e., column vector)

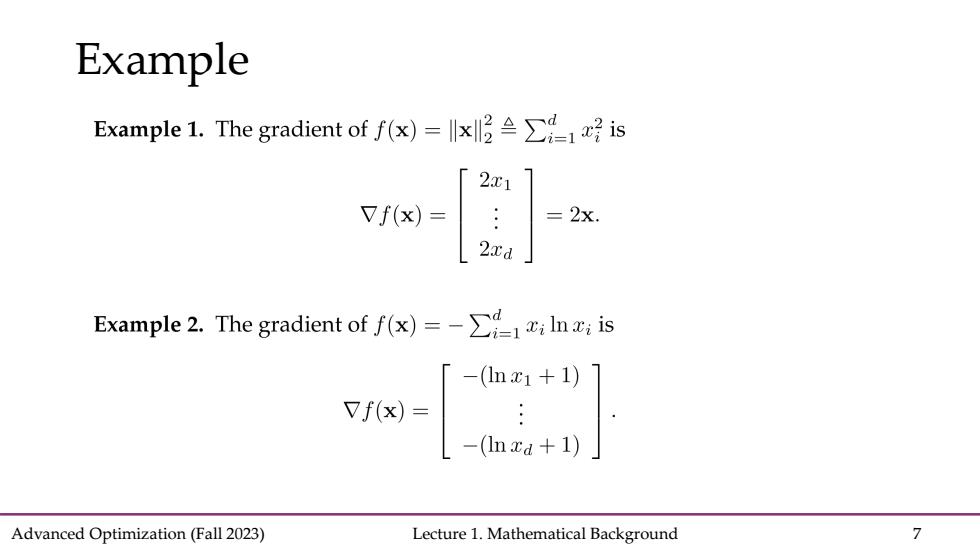

Example Example1.The gradient off(x)=x≌∑1x号is [2x1 Vf(x)= =2x. 2xd Example 2.The gradient of f(x)=->Ini is 「-(lnx1+1) Vf(x)- -(Inza+1) Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 7

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 7 Example

Hessian(Second Order) Definition 3(Hessian).Let f:CR->R be a twice differentiable function. Let x =[1,...,d]Then,the Hessian of f at x is the matrix in Rdxd denoted by V2f(x)and defined by Example 3.The Hessian of f(x)=-;n;is V2f(x)=diag(.). Example 4.The Hessian of f(x)=x-3x1+1is V2f(x)= 6a1x号 6ax片x2-6x2 6x2x2-9x号 2x3-18:x1x2 Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 8

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 8 Hessian (Second Order)

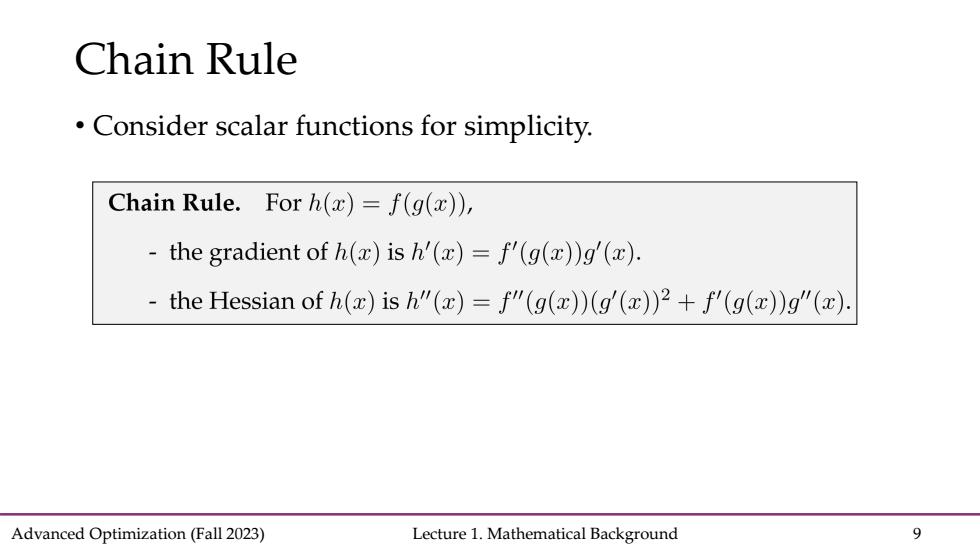

Chain rule Consider scalar functions for simplicity. Chain Rule.For h(x)=f(g(x)), the gradient of h(x)is h'(x)=f'(g(x))g'(x). - the Hessian of h(x)is h"(x)=f"(g(x))(g'(x))2+f'(g(x))g"(x). Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 9

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 9 • Consider scalar functions for simplicity. Chain Rule

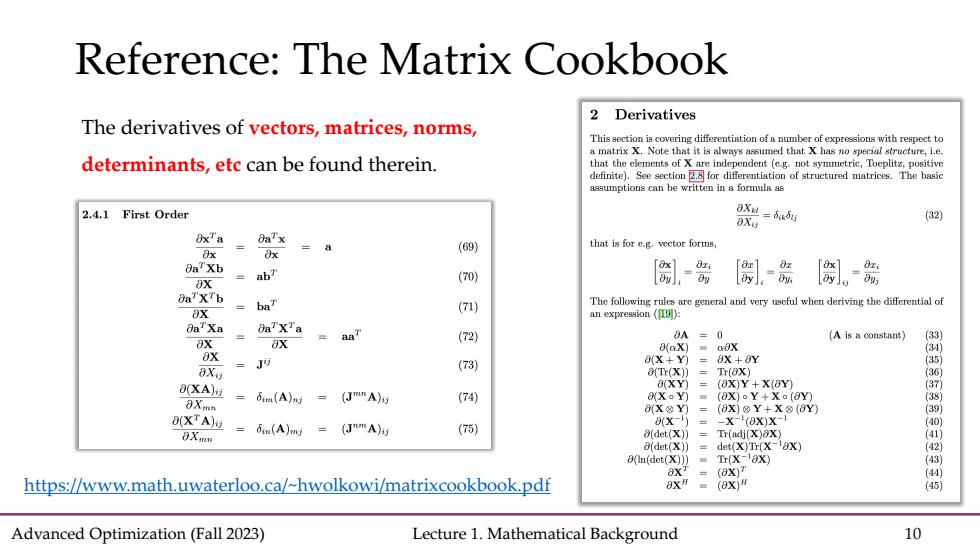

Reference:The Matrix Cookbook 2 Derivatives The derivatives of vectors,matrices,norms, This section is covering differentiation of a number of expressions with respect to a matrix X.Note that it is always assumed that X has no special structure,i.e. determinants,etc can be found therein. that the elements of X are independent (e.g.not symmetric,Toeplitz,positive definite).See section 2.8 for differentiation of structured matrices.The basic assumptions can be written in a formula as 2.4.1 First Order 8X=65时 8Xi (32) (69) that is for e.g vectorforms Ox x 0aTXb 0x abT (70) 筒- [周-张 离。斋 8aTXTb X baT (71) The following rue are general and very useful when deriving the differential of an expression (191): 0aTXa 0aTXTa aaT (72 0A=0 (A is a constant) (33) ax a(ax) 8X 34 =Jij (73) 0(X+Y)=8X+Y (3 aXy a(T(X)】 =Tr(8X) 36 (XA) 8(XY=(8X)Y+X(8Y的 (37) 8Xmn =6im(A)ng =(JmnA) (74) X。Y)■ (8X)Y+Xo(8Y) 3 8X@Y)=(8X)⑧Y+X⑧(8Y) (39 8(XTA) =Bin(A)mj (J"mA) (75) 0(x-j = -X-(8x)x- 0) 0Xmn det(X)】 = Tr(adj(X)ax) 4 a(det(X))det(XyTr(X-aX) 8(In(det(X)))=Tr(X-8X) 8X =(8X)T (44 https://www.math.uwaterloo.ca/-hwolkowi/matrixcookbook.pdf 8xH=(8x" (45) Advanced Optimization (Fall 2023) Lecture 1.Mathematical Background 10

Advanced Optimization (Fall 2023) Lecture 1. Mathematical Background 10 Reference: The Matrix Cookbook https://www.math.uwaterloo.ca/~hwolkowi/matrixcookbook.pdf The derivatives of vectors, matrices, norms, determinants, etc can be found therein