Lecture 8. Adaptive Online Convex Optimization
Advanced Optimization (Fall 2023)
Peng Zhao, zhaop@lamda.nju.edu.cn
School of Artificial Intelligence, Nanjing University


Outline

• Motivation
• Small-Loss Bounds
  • Small-Loss bound for PEA
  • Self-confident Tuning
  • Small-Loss bound for OCO


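General Regret Analysis for OMD

OMD update:

$$x_{t+1} = \arg\min_{x \in \mathcal{X}} \; \eta_t \langle x, \nabla f_t(x_t) \rangle + D_\psi(x, x_t)$$

Lemma 1 (Mirror Descent Lemma). Let $D_\psi$ be the Bregman divergence w.r.t. $\psi: \mathcal{X} \to \mathbb{R}$ and assume $\psi$ to be $\lambda$-strongly convex with respect to a norm $\|\cdot\|$. Then, $\forall u \in \mathcal{X}$, the following inequality holds:

$$f_t(x_t) - f_t(u) \le \underbrace{\frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big)}_{\text{bias term (range term)}} + \underbrace{\frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2}_{\text{variance term (stability term)}} \underbrace{- \frac{1}{\eta_t} D_\psi(x_{t+1}, x_t)}_{\text{negative term}}$$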


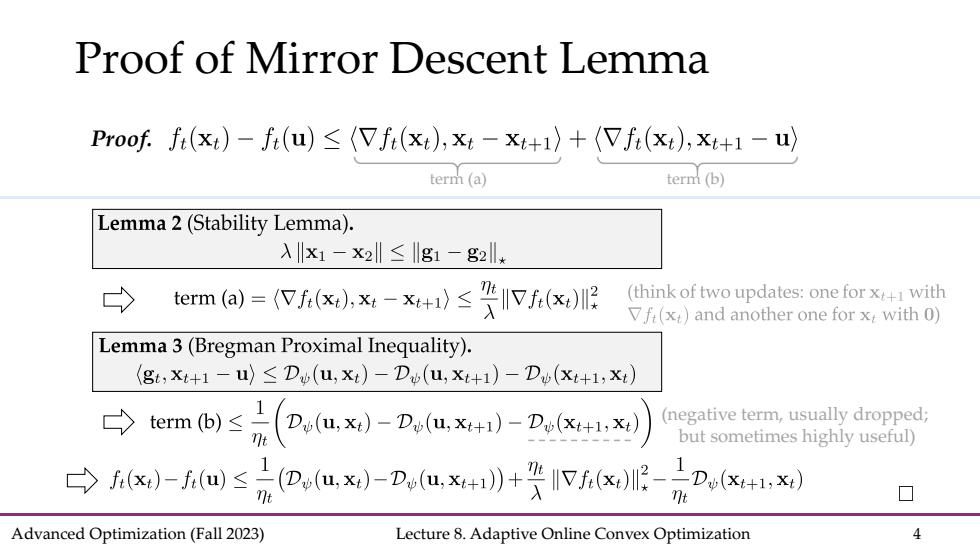
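The inequality in Lemma 1 can be sanity-checked numerically. The sketch below is only an illustration under stated assumptions, not part of the lecture: it takes the Euclidean regularizer $\psi(x) = \frac{1}{2}\|x\|_2^2$ (so $\lambda = 1$ and the OMD step is a projected gradient step), the unit ball as the feasible set, and linear losses $f_t(x) = \langle g, x \rangle$. All function names are hypothetical.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def bregman_euclid(x, y):
    """Bregman divergence of psi(x) = 0.5 * ||x||_2^2, i.e. 0.5 * ||x - y||_2^2."""
    return 0.5 * sum((a - b) ** 2 for a, b in zip(x, y))

def project_ball(x, radius=1.0):
    """Euclidean projection onto the ball {x : ||x||_2 <= radius}."""
    norm = math.sqrt(dot(x, x))
    if norm <= radius:
        return list(x)
    return [radius * v / norm for v in x]

def omd_step_euclid(x_t, grad, eta):
    """OMD step for the Euclidean regularizer: a projected gradient step."""
    return project_ball([a - eta * g for a, g in zip(x_t, grad)])

def lemma_gap(x_t, u, grad, eta, lam=1.0):
    """Right-hand side minus left-hand side of Lemma 1 for f_t(x) = <grad, x>."""
    x_next = omd_step_euclid(x_t, grad, eta)
    lhs = dot(grad, x_t) - dot(grad, u)
    bias = (bregman_euclid(u, x_t) - bregman_euclid(u, x_next)) / eta
    variance = eta / lam * dot(grad, grad)  # dual norm of l2 is l2
    negative = bregman_euclid(x_next, x_t) / eta
    return bias + variance - negative - lhs

def random_point_in_ball(rng, dim):
    return project_ball([rng.uniform(-1, 1) for _ in range(dim)])

rng = random.Random(0)
gaps = []
for _ in range(1000):
    x_t = random_point_in_ball(rng, 3)
    u = random_point_in_ball(rng, 3)
    grad = [rng.uniform(-2, 2) for _ in range(3)]
    gaps.append(lemma_gap(x_t, u, grad, eta=rng.uniform(0.01, 1.0)))

# Lemma 1 predicts that every gap is nonnegative (up to rounding error).
min_gap = min(gaps)
```

A nonnegative `min_gap` is consistent with the lemma on these random instances; it is a spot check, not a proof.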

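Proof of Mirror Descent Lemma

Proof.

$$f_t(x_t) - f_t(u) \le \underbrace{\langle \nabla f_t(x_t), x_t - x_{t+1} \rangle}_{\text{term (a)}} + \underbrace{\langle \nabla f_t(x_t), x_{t+1} - u \rangle}_{\text{term (b)}}$$

Lemma 2 (Stability Lemma). $\lambda \|x_1 - x_2\| \le \|g_1 - g_2\|_*$

$$\text{term (a)} = \langle \nabla f_t(x_t), x_t - x_{t+1} \rangle \le \frac{\eta_t}{\lambda} \|\nabla f_t(x_t)\|_*^2$$

(think of two updates: one for $x_{t+1}$ with $\nabla f_t(x_t)$ and another one for $x_t$ with $0$)

Lemma 3 (Bregman Proximal Inequality). $\langle g_t, x_{t+1} - u \rangle \le D_\psi(u, x_t) - D_\psi(u, x_{t+1}) - D_\psi(x_{t+1}, x_t)$

$$\text{term (b)} \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1}) - D_\psi(x_{t+1}, x_t)\big)$$

(negative term, usually dropped; but sometimes highly useful)

$$\Longrightarrow \; f_t(x_t) - f_t(u) \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big) + \frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2 - \frac{1}{\eta_t} D_\psi(x_{t+1}, x_t)$$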


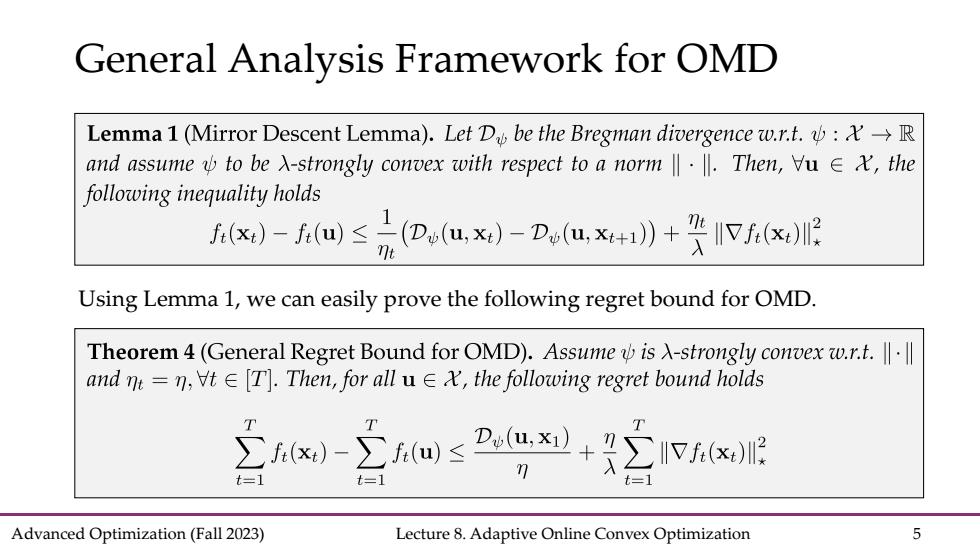
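One way to spell out how the Stability Lemma yields the bound on term (a) is sketched below. It assumes the two updates start from the same point $x_t$ and use the scaled gradients $g_1 = \eta_t \nabla f_t(x_t)$ (producing $x_{t+1}$) and $g_2 = 0$ (producing $x_t$ itself); the second step is Hölder's inequality.

```latex
\begin{align*}
\lambda \|x_t - x_{t+1}\|
  &\le \|\eta_t \nabla f_t(x_t) - 0\|_*
   = \eta_t \|\nabla f_t(x_t)\|_*, \\
\text{term (a)} = \langle \nabla f_t(x_t), x_t - x_{t+1} \rangle
  &\le \|\nabla f_t(x_t)\|_* \, \|x_t - x_{t+1}\|
   \le \frac{\eta_t}{\lambda} \|\nabla f_t(x_t)\|_*^2 .
\end{align*}
```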

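General Analysis Framework for OMD

Lemma 1 (Mirror Descent Lemma). Let $D_\psi$ be the Bregman divergence w.r.t. $\psi: \mathcal{X} \to \mathbb{R}$ and assume $\psi$ to be $\lambda$-strongly convex with respect to a norm $\|\cdot\|$. Then, $\forall u \in \mathcal{X}$, the following inequality holds:

$$f_t(x_t) - f_t(u) \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big) + \frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2$$

Using Lemma 1, we can easily prove the following regret bound for OMD.

Theorem 4 (General Regret Bound for OMD). Assume $\psi$ is $\lambda$-strongly convex w.r.t. $\|\cdot\|$ and $\eta_t = \eta$, $\forall t \in [T]$. Then, for all $u \in \mathcal{X}$, the following regret bound holds:

$$\sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(u) \le \frac{D_\psi(u, x_1)}{\eta} + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2$$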


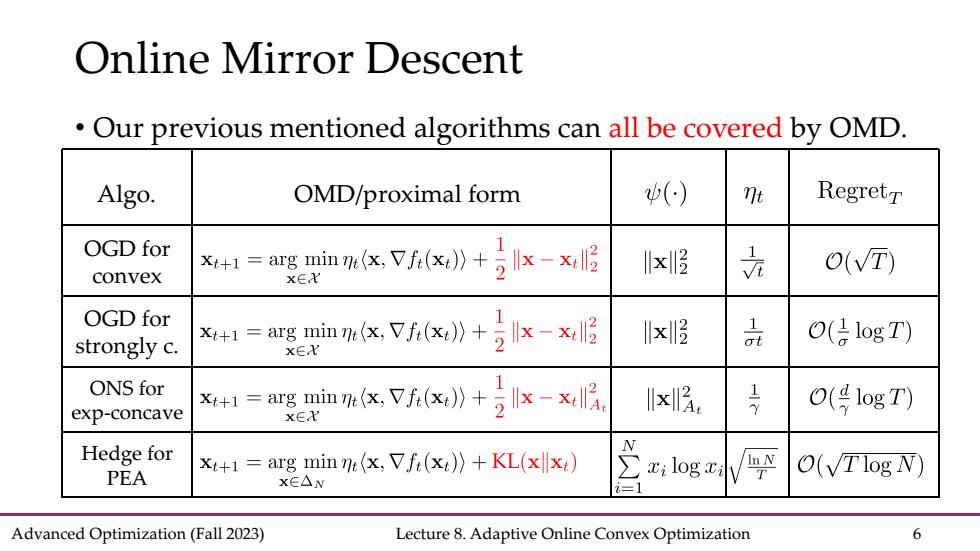

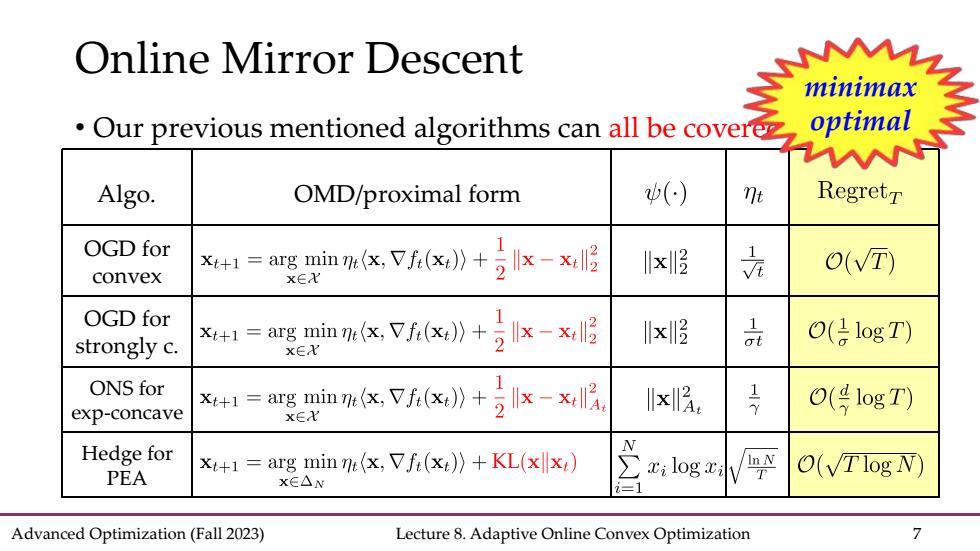
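The step from Lemma 1 to Theorem 4 is a telescoping sum; a sketch of it follows, dropping the nonpositive term $-D_\psi(u, x_{T+1})/\eta$ at the end. The last line is an illustrative tuning under two extra assumptions that are not stated on the slide: $D_\psi(u, x_1) \le D^2$ and $\|\nabla f_t(x_t)\|_* \le G$ for all $t$.

```latex
\begin{align*}
\sum_{t=1}^{T} \big(f_t(x_t) - f_t(u)\big)
  &\le \frac{1}{\eta} \sum_{t=1}^{T} \big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big)
     + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 \\
  &= \frac{D_\psi(u, x_1) - D_\psi(u, x_{T+1})}{\eta}
     + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 \\
  &\le \frac{D_\psi(u, x_1)}{\eta}
     + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 \\
  % assumption: D_psi(u, x_1) <= D^2 and ||grad f_t(x_t)||_* <= G
  &\le \frac{D^2}{\eta} + \frac{\eta G^2 T}{\lambda}
   = 2 D G \sqrt{\frac{T}{\lambda}}
   \quad \text{for } \eta = \frac{D}{G} \sqrt{\frac{\lambda}{T}} .
\end{align*}
```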

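Online Mirror Descent

• All of the previously mentioned algorithms are covered by OMD.

| Algo. | OMD/proximal form | $\psi(\cdot)$ | $\eta_t$ | $\mathrm{Regret}_T$ |
|---|---|---|---|---|
| OGD for convex | $x_{t+1} = \arg\min_{x \in \mathcal{X}} \eta_t \langle x, \nabla f_t(x_t) \rangle + \frac{1}{2} \lVert x - x_t \rVert_2^2$ | $\lVert x \rVert_2^2$ | $\frac{1}{\sqrt{t}}$ | $O(\sqrt{T})$ |
| OGD for strongly c. | $x_{t+1} = \arg\min_{x \in \mathcal{X}} \eta_t \langle x, \nabla f_t(x_t) \rangle + \frac{1}{2} \lVert x - x_t \rVert_2^2$ | $\lVert x \rVert_2^2$ | $\frac{1}{\sigma t}$ | $O(\frac{1}{\sigma} \log T)$ |
| ONS for exp-concave | $x_{t+1} = \arg\min_{x \in \mathcal{X}} \eta_t \langle x, \nabla f_t(x_t) \rangle + \frac{1}{2} \lVert x - x_t \rVert_{A_t}^2$ | $\lVert x \rVert_{A_t}^2$ | $\frac{1}{\gamma}$ | $O(\frac{d}{\gamma} \log T)$ |
| Hedge for PEA | $x_{t+1} = \arg\min_{x \in \Delta_N} \eta_t \langle x, \nabla f_t(x_t) \rangle + \mathrm{KL}(x \,\Vert\, x_t)$ | $\sum_{i=1}^{N} x_i \log x_i$ | $\sqrt{\frac{\ln N}{T}}$ | $O(\sqrt{T \log N})$ |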
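Two of the proximal forms above have well-known closed-form solutions: the Euclidean step is a projected gradient step, and the KL step on the simplex is a multiplicative-weights update. The following sketch checks this numerically by comparing each closed form against random feasible candidates. The unit-ball feasible set, the specific vectors, and all function names are assumptions chosen for illustration.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project_ball(x, radius=1.0):
    """Euclidean projection onto the ball {x : ||x||_2 <= radius}."""
    norm = math.sqrt(dot(x, x))
    return list(x) if norm <= radius else [radius * v / norm for v in x]

def ogd_step(x_t, grad, eta):
    """Closed form of the Euclidean proximal step on the unit ball."""
    return project_ball([a - eta * g for a, g in zip(x_t, grad)])

def hedge_step(p_t, loss, eta):
    """Closed form of the KL proximal step on the simplex."""
    w = [p * math.exp(-eta * l) for p, l in zip(p_t, loss)]
    z = sum(w)
    return [v / z for v in w]

def euclid_objective(x, x_t, grad, eta):
    """eta * <x, grad> + 0.5 * ||x - x_t||_2^2."""
    return eta * dot(x, grad) + 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_t))

def kl_objective(p, p_t, loss, eta):
    """eta * <p, loss> + KL(p || p_t)."""
    kl = sum(a * math.log(a / b) for a, b in zip(p, p_t) if a > 0)
    return eta * dot(p, loss) + kl

rng = random.Random(1)
eta = 1.0
x_t, grad = [0.6, -0.3], [1.0, -2.0]            # illustrative values
p_t, loss = [0.5, 0.3, 0.2], [0.9, 0.1, 0.4]    # illustrative values

x_next = ogd_step(x_t, grad, eta)
p_next = hedge_step(p_t, loss, eta)

# Random feasible candidates: points in the unit ball and on the simplex.
ball_candidates = [project_ball([rng.uniform(-1, 1), rng.uniform(-1, 1)])
                   for _ in range(2000)]
raw = [[rng.expovariate(1.0) for _ in range(3)] for _ in range(2000)]
simplex_candidates = [[v / sum(r) for v in r] for r in raw]

best_euclid = euclid_objective(x_next, x_t, grad, eta)
best_kl = kl_objective(p_next, p_t, loss, eta)
ogd_is_best = all(best_euclid <= euclid_objective(c, x_t, grad, eta) + 1e-12
                  for c in ball_candidates)
hedge_is_best = all(best_kl <= kl_objective(c, p_t, loss, eta) + 1e-12
                    for c in simplex_candidates)
```

If both flags are true, no sampled candidate attains a smaller proximal objective than the closed-form update, which is what the OMD view predicts.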


The regret bounds listed above for OGD, ONS, and Hedge are all minimax optimal.


Beyond the Worst-Case Analysis

• All of the above regret guarantees hold against the worst case
  • Matching the minimax optimality
  • The environment is fully adversarial (oblivious adversary: examination; adaptive adversary: interview)
• However, in practice:
  • We are not always interested in the worst-case scenario
  • Environments can exhibit specific patterns: gradual change, periodicity, ...

We are after some more problem-dependent guarantees.


Beyond the Worst-Case Analysis

• Go beyond worst-case analysis to achieve more adaptive results:
(1) adaptivity: achieving better guarantees in easy problem instances;
(2) robustness: maintaining the same worst-case guarantee.

Figure labels: Optimists vs. Pessimists; Easy Data, Real world, Worst-Case Data; "Be cautiously optimistic".

[Slides from Dylan Foster, Adaptive Online Learning @NIPS'15 workshop]


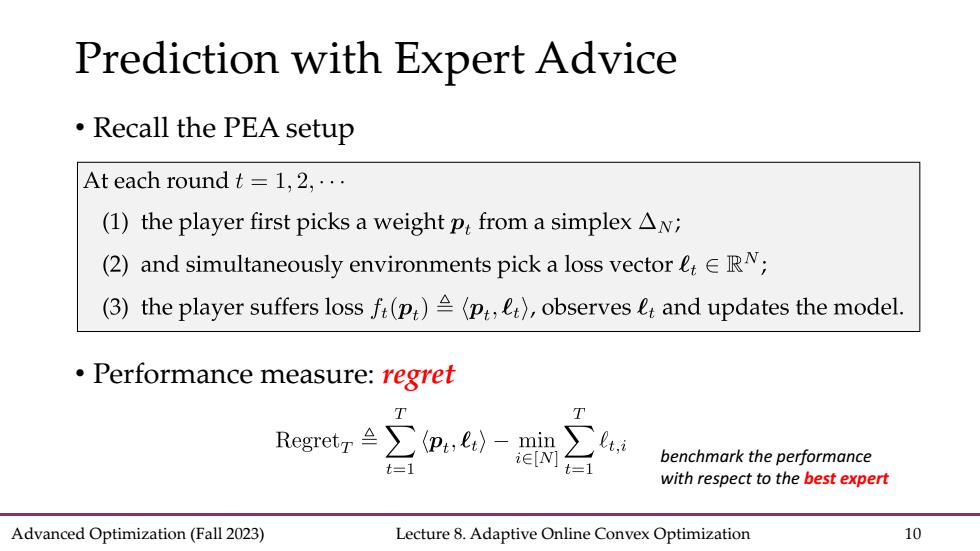

Prediction with Expert Advice

• Recall the PEA setup. At each round $t = 1, 2, \ldots$
(1) the player first picks a weight $p_t$ from a simplex $\Delta_N$;
(2) simultaneously, the environment picks a loss vector $\ell_t \in \mathbb{R}^N$;
(3) the player suffers loss $f_t(p_t) \triangleq \langle p_t, \ell_t \rangle$, observes $\ell_t$, and updates the model.

• Performance measure: regret, which benchmarks the performance with respect to the best expert:

$$\mathrm{Regret}_T \triangleq \sum_{t=1}^{T} \langle p_t, \ell_t \rangle - \min_{i \in [N]} \sum_{t=1}^{T} \ell_{t,i}$$
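The protocol and the regret measure can be simulated in a few lines. The sketch below plays Hedge with the fixed learning rate $\eta = \sqrt{\ln N / T}$ against a synthetic loss sequence. The loss sequence (i.i.d. losses in $[0, 1]$ with expert 0 slightly better on average) is a made-up example. The comparison value $2\sqrt{T \ln N}$ is what the general OMD regret bound (Theorem 4) gives for the entropy regularizer when losses lie in $[0, 1]$, used here only as a sanity check.

```python
import math
import random

def run_hedge(losses, eta):
    """Play Hedge on a fixed loss sequence.

    Returns (cumulative player loss, cumulative loss of the best expert).
    """
    n = len(losses[0])
    p = [1.0 / n] * n                 # start from the uniform weights
    player_loss = 0.0
    cumulative = [0.0] * n            # cumulative loss of each expert
    for loss in losses:
        # (3) suffer <p_t, l_t>, observe l_t
        player_loss += sum(pi * li for pi, li in zip(p, loss))
        cumulative = [c + l for c, l in zip(cumulative, loss)]
        # multiplicative-weights update, then renormalize onto the simplex
        w = [pi * math.exp(-eta * li) for pi, li in zip(p, loss)]
        z = sum(w)
        p = [wi / z for wi in w]
    return player_loss, min(cumulative)

rng = random.Random(0)
T, N = 2000, 5
# Hypothetical environment: losses in [0, 1], expert 0 is better on average.
losses = [[rng.random() * (0.8 if i == 0 else 1.0) for i in range(N)]
          for _ in range(T)]

eta = math.sqrt(math.log(N) / T)
player_loss, best_loss = run_hedge(losses, eta)
regret = player_loss - best_loss
bound = 2 * math.sqrt(T * math.log(N))
```

On this easy instance the measured `regret` should sit well below `bound`, which is the kind of gap between worst-case guarantees and actual behavior that motivates the lecture.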
