吸窖 NJUAT 南京大学 人工智能学院 SCHODL OF ARTIFICIAL INTELUGENCE,NANJING UNIVERSITY Lecture 7.Online Mirror Descent Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 7. Online Mirror Descent Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline Algorithmic Framework ·Regret Analysis Interpretation from Primal-Dual View Follow-the-Regularized Leader Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 2

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 2 Outline • Algorithmic Framework • Regret Analysis • Interpretation from Primal-Dual View • Follow-the-Regularized Leader

Recap:Reinvent Hedge Algorithm Proximal update rule for OGD: X1=竖{mx,c》+x-x卧 Proximal update rule for Hedge: x+1=arg minn(x Vfi(x))+KL(x) x∈X More possibility:changing the distance measure to a more general form using Bregman divergence =arg min n f()+D(xx) x∈X Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 3

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 3 Recap: Reinvent Hedge Algorithm • Proximal update rule for OGD: • Proximal update rule for Hedge: • More possibility: changing the distance measure to a more general form using Bregman divergence

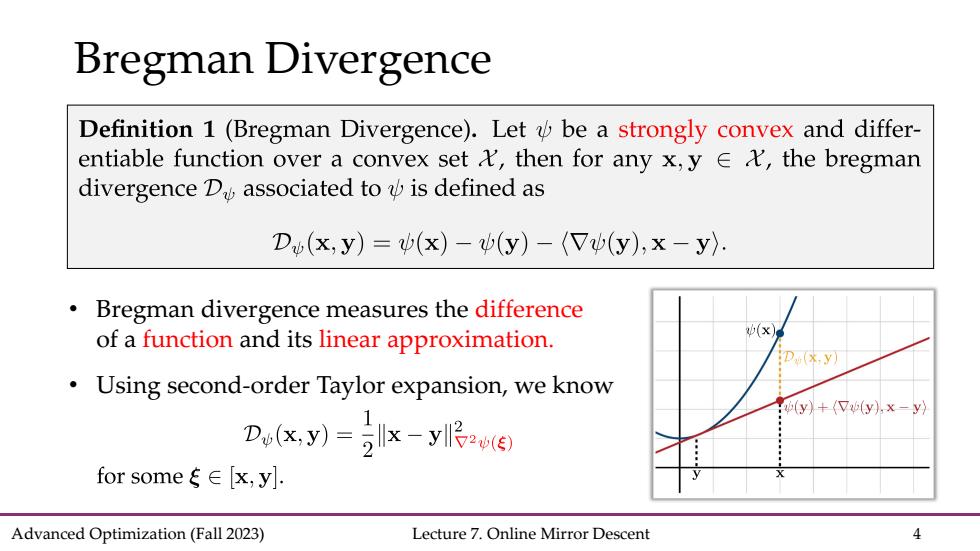

Bregman Divergence Definition 1(Bregman Divergence).Let be a strongly convex and differ- entiable function over a convex set t,then for any x,y e A,the bregman divergence D associated to is defined as D(x,y)=(x)-(y)-(V(y),x-y Bregman divergence measures the difference of a function and its linear approximation. D.x.y Using second-order Taylor expansion,we know 0(y)+(7y),x-y〉 Do(y)llx-yo for some∈[x,yl. Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 4

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 4 Bregman Divergence • Bregman divergence measures the difference of a function and its linear approximation. • Using second-order Taylor expansion, we know

Bregman Divergence Definition 1(Bregman Divergence).Let be a strongly convex and differ- entiable function over a convex set t,then for any x,y e A,the bregman divergence D associated to is defined as Db(x,y)=(x)-(y)-((y),x-y). Table 1:Choice of ()and the corresponding Bregman divergence. (x) D(x,y) Squared L2-distance Ilxl llx-yll Mahalanobis distance x☒ llx-yll Negative entropy ∑i log KL(xlly) Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 5

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 5 Bregman Divergence

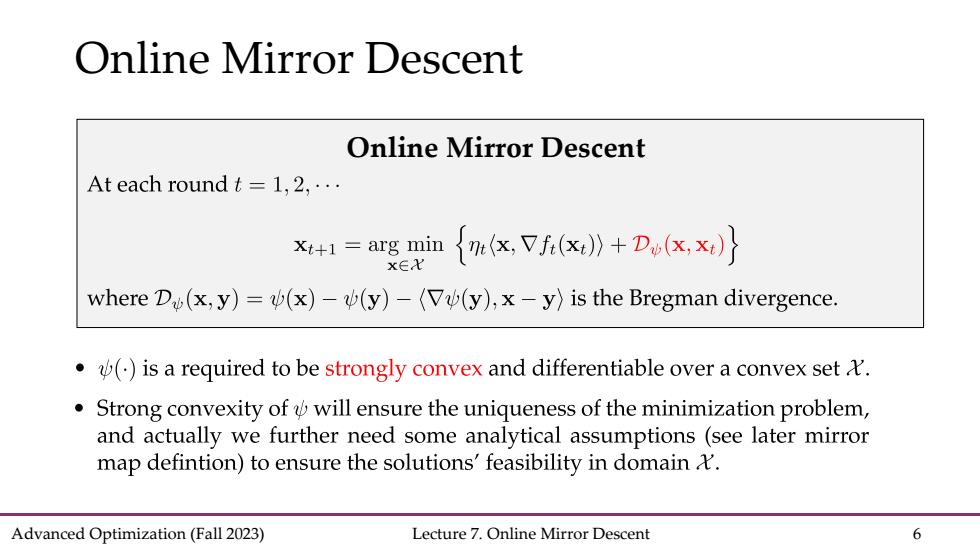

Online Mirror Descent Online Mirror Descent At each round t=1,2,... X+1=arg min xEY {nx,Vf(x》+Dw(x,x} where D(x,y)=(x)-(y)-(Vu(y),x-y)is the Bregman divergence. .()is a required to be strongly convex and differentiable over a convex set. Strong convexity of will ensure the uniqueness of the minimization problem, and actually we further need some analytical assumptions (see later mirror map defintion)to ensure the solutions'feasibility in domain t. Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 6

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 6 Online Mirror Descent Online Mirror Descent

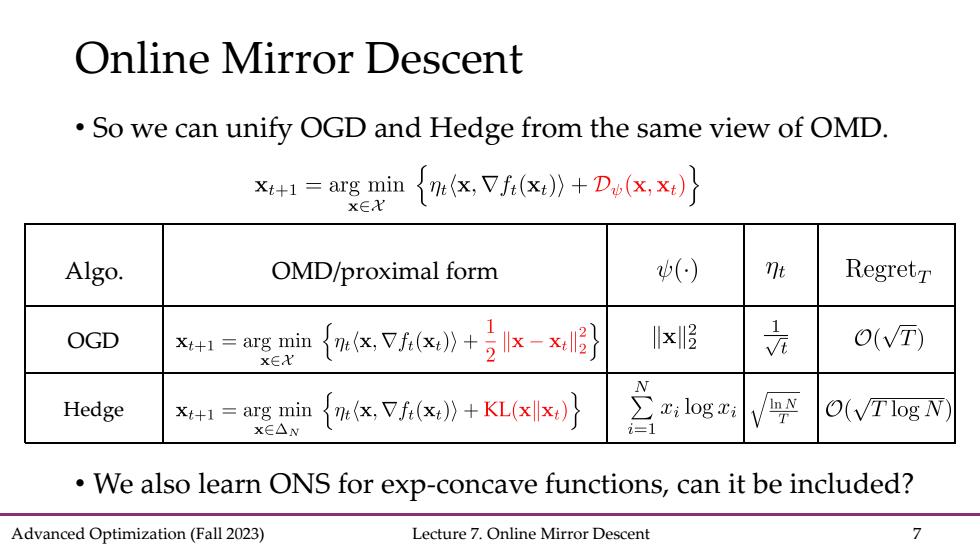

Online Mirror Descent So we can unify OGD and Hedge from the same view of OMD. x=arg min x Vfi(x))+Du(xx) x∈X Algo. OMD/proximal form () nt RegretT OGD x+1=agin{xfx》+号Ix-x x O(VT) x∈X N Hedge =arg min Vf())+KL() xilogi O(√Tlog N] x∈AN We also learn ONS for exp-concave functions,can it be included? Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 7

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 7 Online Mirror Descent • So we can unify OGD and Hedge from the same view of OMD. OGD Hedge Algo. OMD/proximal form • We also learn ONS for exp-concave functions, can it be included?

Recap:ONS in a view of Proximal Gradient Convex Problem Exp-concave Problem Property:f(x)≥fy)+Vf(y)T(x-y) Property:fi(x)>fi(y)+Vf(y)(x-y) +号Ix-yI6w ocD. ONS:A:=A:-1+Vfi(x:)Vfi(xt)T x1=咬刘】 Proximal type update: 1=agxx》+2玩K-x侣 Proximal type update: x∈X X41=arg min(,》+3引x-x. x∈X Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 8

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 8 Recap: ONS in a view of Proximal Gradient Convex Problem Property: Proximal type update: OGD: Exp-concave Problem Property: Proximal type update: ONS:

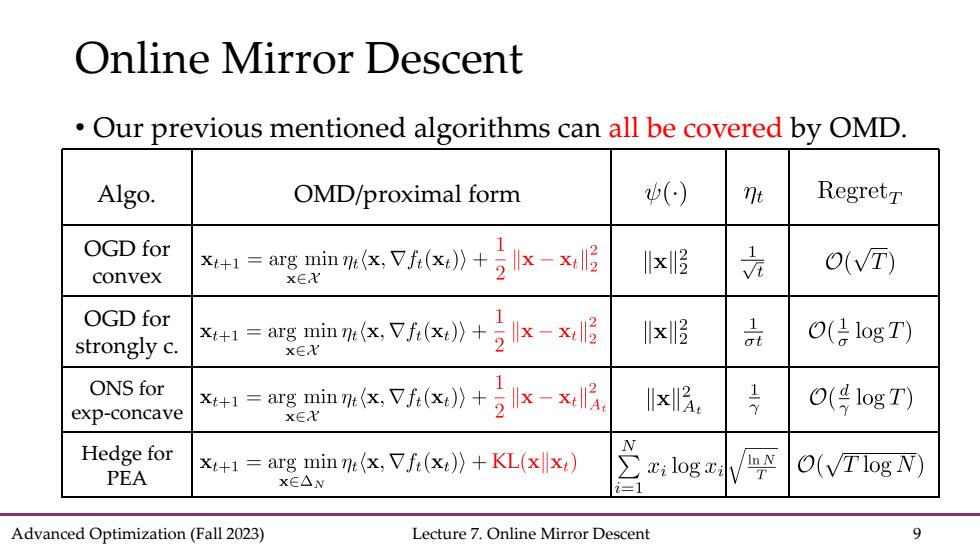

Online Mirror Descent Our previous mentioned algorithms can all be covered by OMD. Algo. OMD/proximal form () nt Regretr OGD for lxll O(VT) convex X4+1=argminn,Vf(x》+专k-x服 XEX OGD for strongly c. argemin ne(x Vfi(x) 'x侶 品 O(日logT) X∈X ONS for exp-concave X+1=are minn,Vfix》+5x-x房 Ix 17 O(号1ogT) x∈X Hedge for PEA x+1=arg min m(x,Vfi(x))+KL(xx) zi log xi T O(Tlog N) x∈△N Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 9

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 9 Online Mirror Descent • Our previous mentioned algorithms can all be covered by OMD. OGD for convex OGD for strongly c. ONS for exp-concave Hedge for PEA Algo. OMD/proximal form

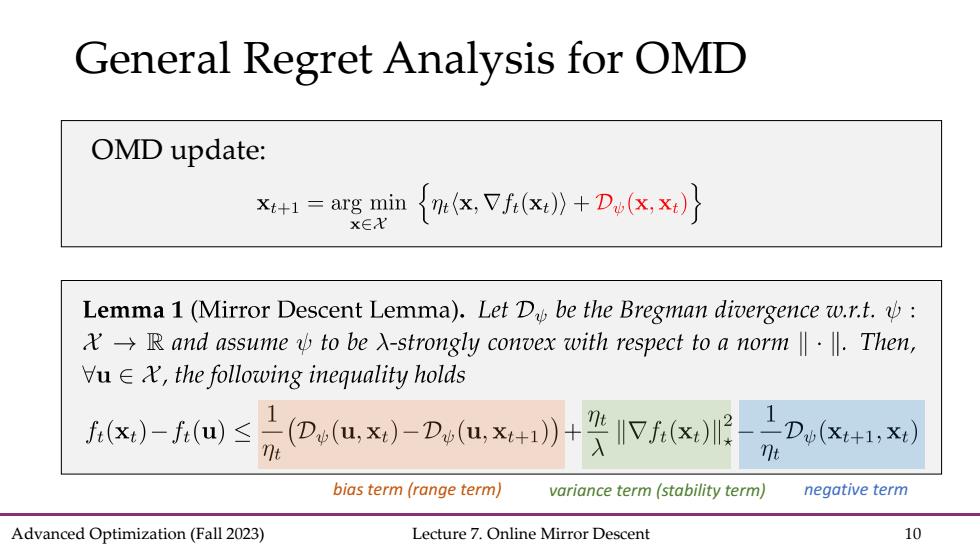

General Regret Analysis for OMD OMD update: x=arg min n(x Vf()+(xx) x∈X Lemma 1(Mirror Descent Lemma).Let D be the Bregman divergence w.r.t.: X→R and assume少to be X--strongly convex with respect to a norm‖·‖.Then, ∀u∈X,the following inequality holds )-i四≤D,ux刘-D,ax+月+紧 2--Dv(Xt+1,x:) n bias term(range term) variance term(stability term) negative term Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 10

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 10 General Regret Analysis for OMD OMD update: bias term (range term) variance term (stability term) negative term