The Greedy Approach Greedy algorithms are usually designed to solve optimization problems in which a quantity is to be minimized or maximized. Greedy algorithms typically consist of a n iterative procedure that tries to find a local optimal solution. In some instances,these local optimal solutions translate to global optimal solutions.In others,they fail to give optimal solutions

The Greedy Approach ◼ Greedy algorithms are usually designed to solve optimization problems in which a quantity is to be minimized or maximized. ◼ Greedy algorithms typically consist of a n iterative procedure that tries to find a local optimal solution. ◼ In some instances, these local optimal solutions translate to global optimal solutions. In others, they fail to give optimal solutions

The Greedy Approach A greedy algorithm makes a correct guess on the basis of little calculation without worrying about the future.Thus,it builds a solution step by step.Each step increases the size of the partial solution and is based on local optimization. The choice make is that which produces the /argest immediate gain while maintaining feasibility. ■ Since each step consists of little work based on a small amount of information,the resulting algorithms are typically efficient

The Greedy Approach ◼ A greedy algorithm makes a correct guess on the basis of little calculation without worrying about the future. Thus, it builds a solution step by step. Each step increases the size of the partial solution and is based on local optimization. ◼ The choice make is that which produces the largest immediate gain while maintaining feasibility. ◼ Since each step consists of little work based on a small amount of information, the resulting algorithms are typically efficient

The Fractional Knapsack Problem Given n items of sizes s,s,...,s and values v, v,...,and size C the knapsack capacity,the objective is to find nonnegative real numbers, X2,...,that maximize the sum i=1 subject to the constraint ∑xs,≤C i=1

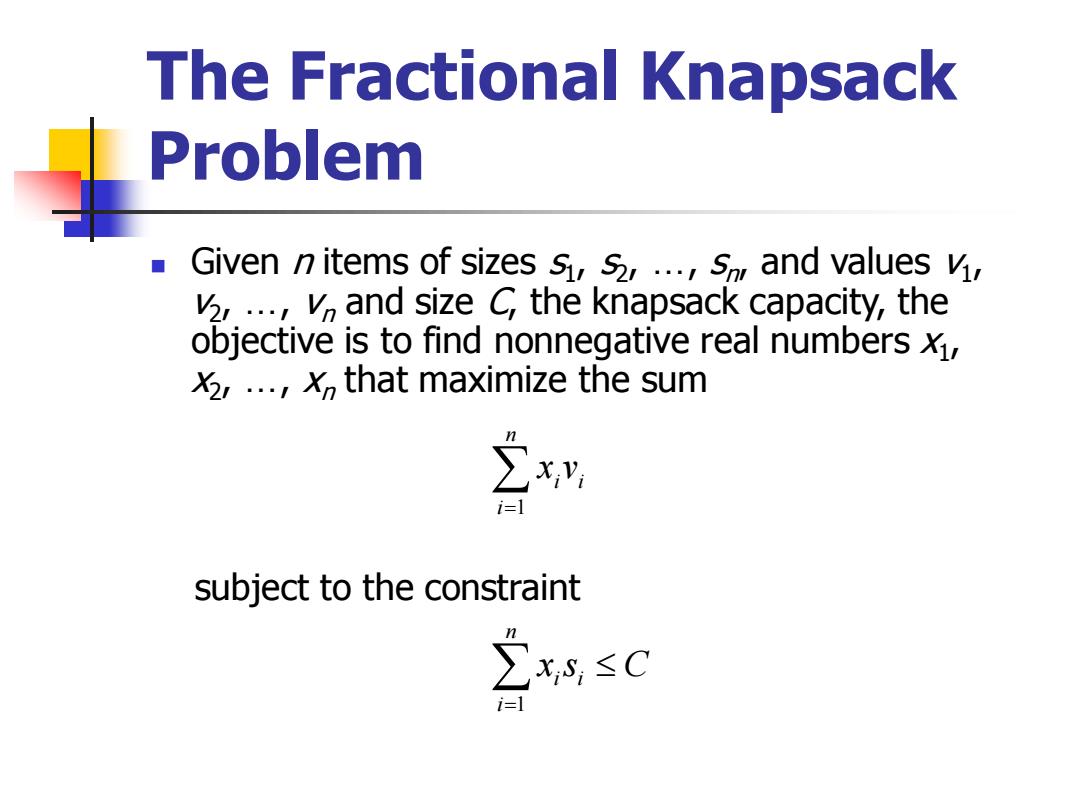

The Fractional Knapsack Problem ◼ Given n items of sizes s1 , s2 , …, sn , and values v1 , v2 , …, vn and size C, the knapsack capacity, the objective is to find nonnegative real numbers x1 , x2 , …, xn that maximize the sum = n i i i x v 1 = n i xi si C 1 subject to the constraint

The Fractional Knapsack Problem This problem can easily be solved using the following greedy strategy: For each item computey=/s the ratio of its value to its size. Sort the items by decreasing ratio,and fill the knapsack with as much as possible from the first item,then the second,and so forth. This problem reveals many of the characteristics of a greedy algorithm discussed above:7he algorithm consists of a simple iterative procedure that selects that item which produces that largest immediate gain while maintaining feasibility

The Fractional Knapsack Problem ◼ This problem can easily be solved using the following greedy strategy: ➢ For each item compute yi=vi /si , the ratio of its value to its size. ➢ Sort the items by decreasing ratio, and fill the knapsack with as much as possible from the first item, then the second, and so forth. ◼ This problem reveals many of the characteristics of a greedy algorithm discussed above: The algorithm consists of a simple iterative procedure that selects that item which produces that largest immediate gain while maintaining feasibility

File Compression Suppose we are given a file,which is a string of characters.We wish to compress the file as much as possible in such a way that the original file can easily be reconstructed. ■ Let the set of characters in the file be C=c,c,..., c}.Let also c),1<kn,be the frequency of character c in the file,i.e.,the number of times c appears in the file

File Compression ◼ Suppose we are given a file, which is a string of characters. We wish to compress the file as much as possible in such a way that the original file can easily be reconstructed. ◼ Let the set of characters in the file be C={c1 , c2 , …, cn}. Let also f(ci ), 1in, be the frequency of character ci in the file, i.e., the number of times ci appears in the file

File Compression Using a fixed number of bits to represent each character,called the encoding of the character,the size of the file depends only on the number of characters in the file. Since the frequency of some characters may be much larger than others,it is reasonable to use variable length encodings

File Compression ◼ Using a fixed number of bits to represent each character, called the encoding of the character, the size of the file depends only on the number of characters in the file. ◼ Since the frequency of some characters may be much larger than others, it is reasonable to use variable length encodings

File Compression Intuitively,those characters with large frequencies should be assigned short encodings,whereas long encodings may be assigned to those characters with small frequencies. ■ When the encodings vary in length,we stipulate that the encoding of one character must not be the prefix of the encoding of another character;such codes are called prefix codes. ■ For instance,if we assign the encodings 10 and 101 to the letters“a”and“b',there will be an ambiguity as to whether 10 is the encoding of“a”or is the prefix of the encoding of the letter“b

File Compression ◼ Intuitively, those characters with large frequencies should be assigned short encodings, whereas long encodings may be assigned to those characters with small frequencies. ◼ When the encodings vary in length, we stipulate that the encoding of one character must not be the prefix of the encoding of another character; such codes are called prefix codes. ◼ For instance, if we assign the encodings 10 and 101 to the letters “a” and “b”, there will be an ambiguity as to whether 10 is the encoding of “a” or is the prefix of the encoding of the letter “b

File Compression Once the prefix constraint is satisfied,the decoding becomes unambiguous;the sequence of bits is scanned until an encoding of some character is found. One way to“parse”a given sequence of bits is to use a full binary tree,in which each internal node has exactly two branches labeled by 0 an 1.The leaves in this tree corresponding to the characters. Each sequence of 0's and 1's on a path from the root to a leaf corresponds to a character encoding

File Compression ◼ Once the prefix constraint is satisfied, the decoding becomes unambiguous; the sequence of bits is scanned until an encoding of some character is found. ◼ One way to “parse” a given sequence of bits is to use a full binary tree, in which each internal node has exactly two branches labeled by 0 an 1. The leaves in this tree corresponding to the characters. Each sequence of 0’s and 1’s on a path from the root to a leaf corresponds to a character encoding

File Compression The algorithm presented is due to Huffman. a The algorithm consists of repeating the following procedure until Cconsists of only one character. >Let c and c be two characters with minimum frequencies. Create a new node cwhose frequency is the sum of the frequencies of c and c and make c and c the children of c. Let C=Cici chuc)

File Compression ◼ The algorithm presented is due to Huffman. ◼ The algorithm consists of repeating the following procedure until C consists of only one character. ➢ Let ci and cj be two characters with minimum frequencies. ➢ Create a new node c whose frequency is the sum of the frequencies of ci and cj , and make ci and cj the children of c. ➢ Let C=C-{ci , cj}{c}

File Compression Example: C=a,b,c,d,er f(a)=20 f(b)=7 f(G)=10 f(d)=4 f(e)=18

File Compression ◼ Example: C={a, b, c, d, e} f (a)=20 f (b)=7 f (c)=10 f (d)=4 f (e)=18