An Organizational Coevolutionary Algorithm for Classification Licheng Jiao,Senior Member:IEEE Jing Liu'Weicai Zhong Institute of Intelligent Information Processing,Xidian University,Xi'an 710071,China Abstract-Taking inspiration from the interacting process among organizations in human societies,a new classification algorithm,organizational coevolutionary algorithm for classification (OCEC),is proposed with the intrinsic properties of classification in mind.The main difference between OCEC and the available classification approaches based on evolutionary algorithms (EAs)is its use of a bottom-up search mechanism.OCEC causes the evolution of sets of examples,and at the end of the evolutionary process, extracts rules from these sets.These sets of examples form organizations.Because organizations are different from the individuals in traditional EAs,three evolutionary operators and a selection mechanism are devised for realizing the evolutionary operations performed on organizations.This method can avoid generating meaningless rules during the evolutionary process.An evolutionary method is also devised for determining the significance of each attribute,on the basis of which,the fitness function for organizations is defined.In experiments,the effectiveness of OCEC is first evaluated by multiplexer problems.Then OCEC is compared with several well-known classification algorithms on 12 benchmarks from the UCI repository datasets and multiplexer problems.Moreover,OCEC is applied to a practical case,radar target recognition problems.All results show that OCEC achieves a higher predictive accuracy and a lower computational cost.Finally,the scalability of OCEC is studied on synthetic datasets.The number of training examples increases from 100 000 to 10 million,and the number of attributes increases from 9 to 400.The results show that OCEC obtains a good scalability. Index Terms-Data mining,classification,organization,evolutionary algorithms,coevolution. Corresponding author,e-mail:neouma@163.com,telephone:86-029-88202661 1

1 An Organizational Coevolutionary Algorithm for Classification Licheng Jiao, Senior Member, IEEE Jing Liu1 Weicai Zhong Institute of Intelligent Information Processing, Xidian University, Xi’an 710071, China AbstractTaking inspiration from the interacting process among organizations in human societies, a new classification algorithm, organizational coevolutionary algorithm for classification (OCEC), is proposed with the intrinsic properties of classification in mind. The main difference between OCEC and the available classification approaches based on evolutionary algorithms (EAs) is its use of a bottom-up search mechanism. OCEC causes the evolution of sets of examples, and at the end of the evolutionary process, extracts rules from these sets. These sets of examples form organizations. Because organizations are different from the individuals in traditional EAs, three evolutionary operators and a selection mechanism are devised for realizing the evolutionary operations performed on organizations. This method can avoid generating meaningless rules during the evolutionary process. An evolutionary method is also devised for determining the significance of each attribute, on the basis of which, the fitness function for organizations is defined. In experiments, the effectiveness of OCEC is first evaluated by multiplexer problems. Then OCEC is compared with several well-known classification algorithms on 12 benchmarks from the UCI repository datasets and multiplexer problems. Moreover, OCEC is applied to a practical case, radar target recognition problems. All results show that OCEC achieves a higher predictive accuracy and a lower computational cost. Finally, the scalability of OCEC is studied on synthetic datasets. The number of training examples increases from 100 000 to 10 million, and the number of attributes increases from 9 to 400. The results show that OCEC obtains a good scalability. Index TermsData mining, classification, organization, evolutionary algorithms, coevolution. 1 Corresponding author, e-mail: neouma@163.com, telephone: 86-029-88202661

I.INTRODUCTION Evolutionary algorithms (EAs)[1],based on an analogy to natural evolution,have recently gained increasing interest.They are suitable for solving complex or ill-defined problems and have been successfully applied to the fields of numerical optimization, combinatorial optimization,machine learning,neural networks,and many other engineering problems [2]-[7].Classification is one of the fundamental tasks of data mining [8]-[13]and can be described as follows [14].The input data,also called the training set,consist of examples,each example having several attributes.Additionally,each example is tagged with a special class name.The objective of classification is to analyze the input data and to develop an accurate description for each class using the attributes present in the data.The class descriptions are used to classify future test data for which the class names are unknown. Applications of classification include credit approval,medical diagnosis,store location,etc. This paper introduces a new evolutionary algorithm for classification. A.Related work Classification has been studied extensively and the application of EAs to this field was initiated in the 1980s [15],[16].Holland [15]and Smith [16]proposed two basic reference approaches,namely,the Michigan and Pittsburgh approaches,respectively.The Michigan approach maintains a population of individual rules which compete with each other for space and priority in the population.In contrast,the Pittsburgh approach maintains a population of variable-length rule sets which compete with each other with respect to performance on the domain task. Neither of the approaches is perfect.The Michigan approach,which converges more 2

2 I. INTRODUCTION Evolutionary algorithms (EAs) [1], based on an analogy to natural evolution, have recently gained increasing interest. They are suitable for solving complex or ill-defined problems and have been successfully applied to the fields of numerical optimization, combinatorial optimization, machine learning, neural networks, and many other engineering problems [2]-[7]. Classification is one of the fundamental tasks of data mining [8]-[13] and can be described as follows [14]. The input data, also called the training set, consist of examples, each example having several attributes. Additionally, each example is tagged with a special class name. The objective of classification is to analyze the input data and to develop an accurate description for each class using the attributes present in the data. The class descriptions are used to classify future test data for which the class names are unknown. Applications of classification include credit approval, medical diagnosis, store location, etc. This paper introduces a new evolutionary algorithm for classification. A. Related work Classification has been studied extensively and the application of EAs to this field was initiated in the 1980s [15], [16]. Holland [15] and Smith [16] proposed two basic reference approaches, namely, the Michigan and Pittsburgh approaches, respectively. The Michigan approach maintains a population of individual rules which compete with each other for space and priority in the population. In contrast, the Pittsburgh approach maintains a population of variable-length rule sets which compete with each other with respect to performance on the domain task. Neither of the approaches is perfect. The Michigan approach, which converges more

rapidly,fails to learn good solutions for complex problems,whereas the Pittsburgh approach solves more difficult problems at a relatively high computational cost.Present,it seems that both approaches have their problems and advantages.This has prompted many researchers to develop new approaches along each one or hybrid ones,by selecting good features from both approaches and by avoiding the difficulties. Choenni et al.in [17]-[20]proposed some algorithms based on the Michigan approach. They have made many improvements.They modified the individual encoding method to use nonbinary representation,and did not encode the consequents of rules into the individuals. Moreover,they used extended version of crossover and mutation operators suitable to their representations,did not allowing rules to be invoked as a result of the invocation of other rules,and defined fitness functions in terms of some measures of the classification performance. De Jong et al.in [21]proposed an algorithm based on the Pittsburgh approach,GABIL. GABIL can continually learn and refine classification rules from its interaction with the environment.By incorporating a genetic algorithm(GA)as the underlying adaptive search mechanism,GABIL is able to construct a concept learning system that has a simple,unified architecture with several important features. In order to alleviate the disadvantages of the Michigan and Pittsburgh approaches,some hybrid Michigan/Pittsburgh methodologies have been proposed,for example,COGIN [22], JoinGA [23],REGAL [24],and G-Net [25]. COGIN [22]is an inductive system based on GAs that exploits the conventions of induction from examples.Its novelty lies in the use of training set coverage to simultaneously

3 rapidly, fails to learn good solutions for complex problems, whereas the Pittsburgh approach solves more difficult problems at a relatively high computational cost. Present, it seems that both approaches have their problems and advantages. This has prompted many researchers to develop new approaches along each one or hybrid ones, by selecting good features from both approaches and by avoiding the difficulties. Choenni et al. in [17]-[20] proposed some algorithms based on the Michigan approach. They have made many improvements. They modified the individual encoding method to use nonbinary representation, and did not encode the consequents of rules into the individuals. Moreover, they used extended version of crossover and mutation operators suitable to their representations, did not allowing rules to be invoked as a result of the invocation of other rules, and defined fitness functions in terms of some measures of the classification performance. De Jong et al. in [21] proposed an algorithm based on the Pittsburgh approach, GABIL. GABIL can continually learn and refine classification rules from its interaction with the environment. By incorporating a genetic algorithm (GA) as the underlying adaptive search mechanism, GABIL is able to construct a concept learning system that has a simple, unified architecture with several important features. In order to alleviate the disadvantages of the Michigan and Pittsburgh approaches, some hybrid Michigan/Pittsburgh methodologies have been proposed, for example, COGIN [22], JoinGA [23], REGAL [24], and G-Net [25]. COGIN [22] is an inductive system based on GAs that exploits the conventions of induction from examples. Its novelty lies in the use of training set coverage to simultaneously

promote competition in various classification niches within the model and constrain overall model complexity. JoinGA [23]is a combination of the Michigan and Pittsburgh approaches together with a symbiotic niching component that can be used in multimodal classification.On the level of fixed-length individuals,JoinGA uses normal genetic operators.In order to be able to deal with multimodal concepts,JoinGA uses a new level of operators above the basic genetic level. The operators on this level put together and divide groups of individuals,building families out of single,cooperating individuals.In this way JoinGA keeps the Michigan type effective fixed-length individuals and corresponding simple crossover operations.JoinGA also avoids the problems of multiple solutions by using the Pittsburgh type families,so that the system may converge to a single highly fit family. REGAL [24],a distributed GA-based system,designed for learning first order logic concept descriptions from examples.The population constitutes a redundant set of partial concept descriptions,and each evolves separately.G-Net [25]is a descendant of REGAL,and consistently achieves a better performance.The main features of the system include robustness with respect to parameter settings,use of the minimum description length criterion coupled with a stochastic search bias,use of coevolution as a high-level control strategy,the ability to face problems requiring structured representation languages,and the suitability to parallel implementation on a network of workstations. Recently,many new approaches based on EAs for the classification task have been proposed.XCS [26],[27]acts as a reinforcement learning agent.It differs from traditional approaches in several respects [27].First,XCS has a simplified structure since it does not

4 promote competition in various classification niches within the model and constrain overall model complexity. JoinGA [23] is a combination of the Michigan and Pittsburgh approaches together with a symbiotic niching component that can be used in multimodal classification. On the level of fixed-length individuals, JoinGA uses normal genetic operators. In order to be able to deal with multimodal concepts, JoinGA uses a new level of operators above the basic genetic level. The operators on this level put together and divide groups of individuals, building families out of single, cooperating individuals. In this way JoinGA keeps the Michigan type effective fixed-length individuals and corresponding simple crossover operations. JoinGA also avoids the problems of multiple solutions by using the Pittsburgh type families, so that the system may converge to a single highly fit family. REGAL [24], a distributed GA-based system, designed for learning first order logic concept descriptions from examples. The population constitutes a redundant set of partial concept descriptions, and each evolves separately. G-Net [25] is a descendant of REGAL, and consistently achieves a better performance. The main features of the system include robustness with respect to parameter settings, use of the minimum description length criterion coupled with a stochastic search bias, use of coevolution as a high-level control strategy, the ability to face problems requiring structured representation languages, and the suitability to parallel implementation on a network of workstations. Recently, many new approaches based on EAs for the classification task have been proposed. XCS [26], [27] acts as a reinforcement learning agent. It differs from traditional approaches in several respects [27]. First, XCS has a simplified structure since it does not

have an internal message list.In addition,XCS uses a modification of Q-learning instead of the "bucket brigade"[15].Most importantly,in XCS,classifier fitness is based on the accuracy of the classifier's payoff prediction instead of the prediction itself.XCS represents a major development in learning classifier systems research,and has proved effective in many domains [28]. GEP [10]is a new approach for discovering classification rules by using genetic programming with linear representation.The antecedent of discovered rules may involve many different combinations of attributes.To guide the search process,[10]suggested a fitness function considering both the rule consistency gain and completeness.A multiclass classification problem was formulated as a combination of multiple two-class problems by using the one against all learning method.Compact rule sets were subsequently evolved using a two-phase pruning method based on the minimum description length principle. DMEL [11]handles classification problems of which the accuracy of each prediction needs to be estimated.DMEL searches through the possible rule space using an evolutionary approach that has the following characteristics:1)the evolutionary process begins with the generation of an initial set of simple,one-condition rules;2)interestingness measure is used for identifying interesting rules;3)fitness of a chromosome is defined in terms of the probability that the attribute values of a record can be correctly determined using the rules it encodes;and 4)the likelihood of predictions made is estimated so that subscribers can be ranked according to their likelihood to churn. In economics,Coase in [29]explains the sizing and formation of organizations from the framework of transaction costs.This concept was introduced to the GA-based classifiers by 5

5 have an internal message list. In addition, XCS uses a modification of Q-learning instead of the “bucket brigade” [15]. Most importantly, in XCS, classifier fitness is based on the accuracy of the classifier’s payoff prediction instead of the prediction itself. XCS represents a major development in learning classifier systems research, and has proved effective in many domains [28]. GEP [10] is a new approach for discovering classification rules by using genetic programming with linear representation. The antecedent of discovered rules may involve many different combinations of attributes. To guide the search process, [10] suggested a fitness function considering both the rule consistency gain and completeness. A multiclass classification problem was formulated as a combination of multiple two-class problems by using the one against all learning method. Compact rule sets were subsequently evolved using a two-phase pruning method based on the minimum description length principle. DMEL [11] handles classification problems of which the accuracy of each prediction needs to be estimated. DMEL searches through the possible rule space using an evolutionary approach that has the following characteristics: 1) the evolutionary process begins with the generation of an initial set of simple, one-condition rules; 2) interestingness measure is used for identifying interesting rules; 3) fitness of a chromosome is defined in terms of the probability that the attribute values of a record can be correctly determined using the rules it encodes; and 4) the likelihood of predictions made is estimated so that subscribers can be ranked according to their likelihood to churn. In economics, Coase in [29] explains the sizing and formation of organizations from the framework of transaction costs. This concept was introduced to the GA-based classifiers by

Wilcox in [30],which puts emphasis on inventing an autonomous mechanism using transaction costs for forming the appropriately sized organizations within a classifier. There are many other approaches that also obtain good performances,such as EVOPROL [31],SIA [32],ESIA [33],EENCL [34],EPNet [35],etc.EAs are promising approaches for data mining,and have been applied to many problems other than the classification task.For example,Abutridy et al.in [12]presented a novel evolutionary model for knowledge discovery from texts,and Cano et al.in [13]carried out an empirical study of the performances of four representative EA models for data reduction in knowledge discovery in databases. B.Proposed approach Inspired by the idea of organizations [30],we propose a new evolutionary algorithm for classification,organizational coevolutionary algorithm for classification (OCEC).But the emphasis of OCEC is different from that of [30].Since in the real world situation, organizations usually compete or cooperate with others so that they can gain more resources, OCEC does not put emphasis on forming the appropriately sized organizations,but on simulating the interacting process among organizations. OCEC adopts the coevolutionary model of multiple populations,focusing on extracting rules from examples.It causes the evolution of sets of examples,and at the end of the evolutionary process,extracts rules from these sets.These sets of examples form organizations.Three evolutionary operators and a selection mechanism are devised to simulate the interaction among organizations.Additionally,because OCEC is inspired from the coevolutionary model,and considers the examples with identical class names as one 6

6 Wilcox in [30], which puts emphasis on inventing an autonomous mechanism using transaction costs for forming the appropriately sized organizations within a classifier. There are many other approaches that also obtain good performances, such as EVOPROL [31], SIA [32], ESIA [33], EENCL [34], EPNet [35], etc. EAs are promising approaches for data mining, and have been applied to many problems other than the classification task. For example, Abutridy et al. in [12] presented a novel evolutionary model for knowledge discovery from texts, and Cano et al. in [13] carried out an empirical study of the performances of four representative EA models for data reduction in knowledge discovery in databases. B. Proposed approach Inspired by the idea of organizations [30], we propose a new evolutionary algorithm for classification, organizational coevolutionary algorithm for classification (OCEC). But the emphasis of OCEC is different from that of [30]. Since in the real world situation, organizations usually compete or cooperate with others so that they can gain more resources, OCEC does not put emphasis on forming the appropriately sized organizations, but on simulating the interacting process among organizations. OCEC adopts the coevolutionary model of multiple populations, focusing on extracting rules from examples. It causes the evolution of sets of examples, and at the end of the evolutionary process, extracts rules from these sets. These sets of examples form organizations. Three evolutionary operators and a selection mechanism are devised to simulate the interaction among organizations. Additionally, because OCEC is inspired from the coevolutionary model, and considers the examples with identical class names as one

population,it can handle multi-class learning in a natural way so that multiple classes can be learned simultaneously. The main process of the existing EA-based and stochastic search classification algorithms [36],is first generating rules randomly,and then improving the quality of the rules by using the training examples.So these algorithms adopt an up-bottom search mechanism,and may generate meaningless rules during the evolutionary process.But OCEC adopts a completely different search mechanism.For classification,if the obtained description is represented as rules,then each rule covers some examples,and the examples covered by the same rule have some similarities in attribute values.Based on this,OCEC first clusters the examples with similar attribute values so as to form organizations,and then guides the evolutionary process by using the differing significance of attributes.At the end of the evolutionary process,rules are extracted from organizations.Such a process can avoid generating meaningless rules. Therefore,OCEC adopts a bottom-up search mechanism. C.Organization ofpaper In the remainder of this paper,OCEC is described in Section II.Section III evaluates the effectiveness of OCEC by multiplexer problems.Section IV compares OCEC with the available algorithms on benchmarks,and applies OCEC to a practical case,the radar target recognition problem.The scalability of OCEC is studied in Section V.Finally,conclusions and some ideas for the future work are presented in the last section. >

7 population, it can handle multi-class learning in a natural way so that multiple classes can be learned simultaneously. The main process of the existing EA-based and stochastic search classification algorithms [36], is first generating rules randomly, and then improving the quality of the rules by using the training examples. So these algorithms adopt an up-bottom search mechanism, and may generate meaningless rules during the evolutionary process. But OCEC adopts a completely different search mechanism. For classification, if the obtained description is represented as rules, then each rule covers some examples, and the examples covered by the same rule have some similarities in attribute values. Based on this, OCEC first clusters the examples with similar attribute values so as to form organizations, and then guides the evolutionary process by using the differing significance of attributes. At the end of the evolutionary process, rules are extracted from organizations. Such a process can avoid generating meaningless rules. Therefore, OCEC adopts a bottom-up search mechanism. C. Organization of paper In the remainder of this paper, OCEC is described in Section II. Section III evaluates the effectiveness of OCEC by multiplexer problems. Section IV compares OCEC with the available algorithms on benchmarks, and applies OCEC to a practical case, the radar target recognition problem. The scalability of OCEC is studied in Section V. Finally, conclusions and some ideas for the future work are presented in the last section

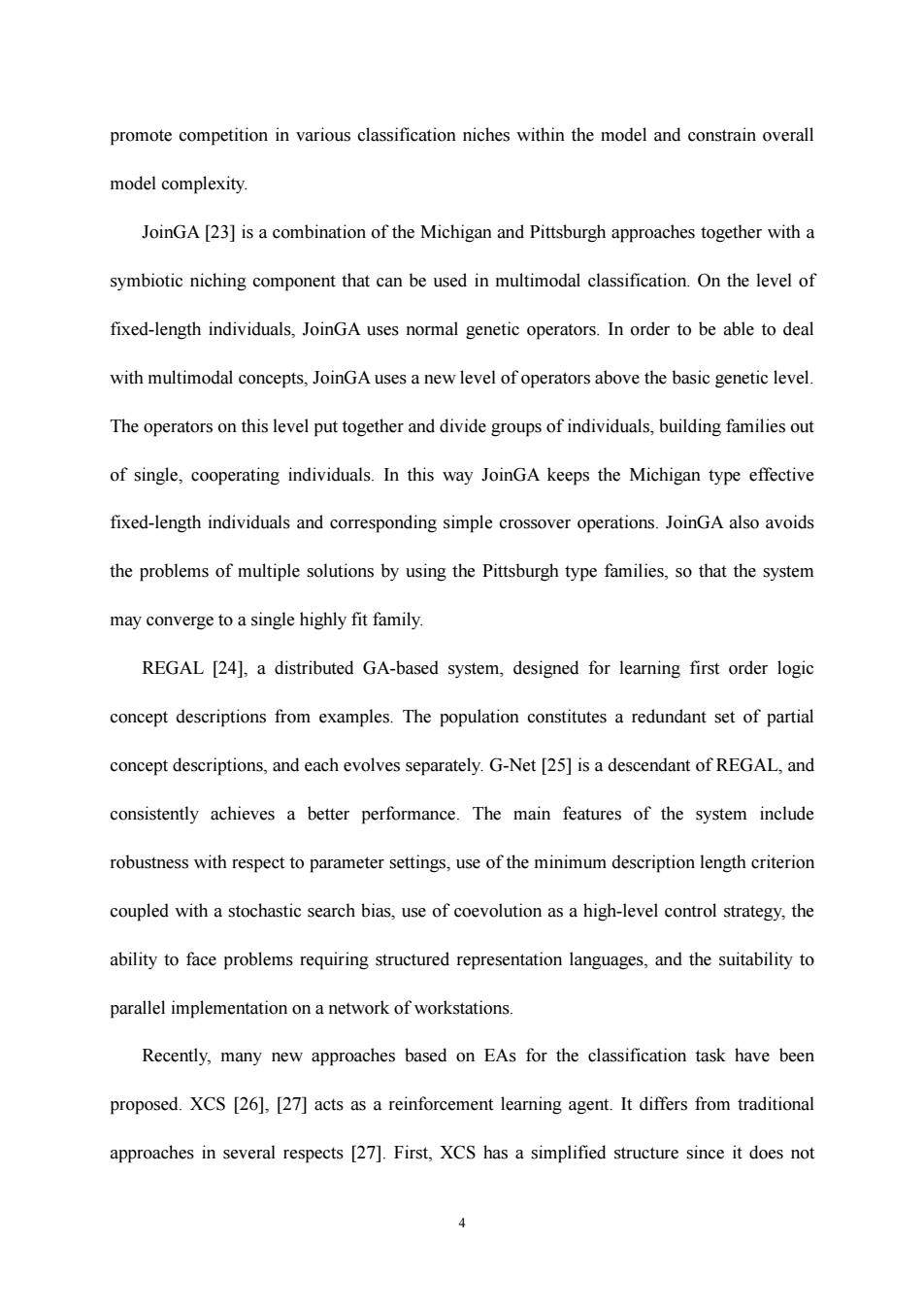

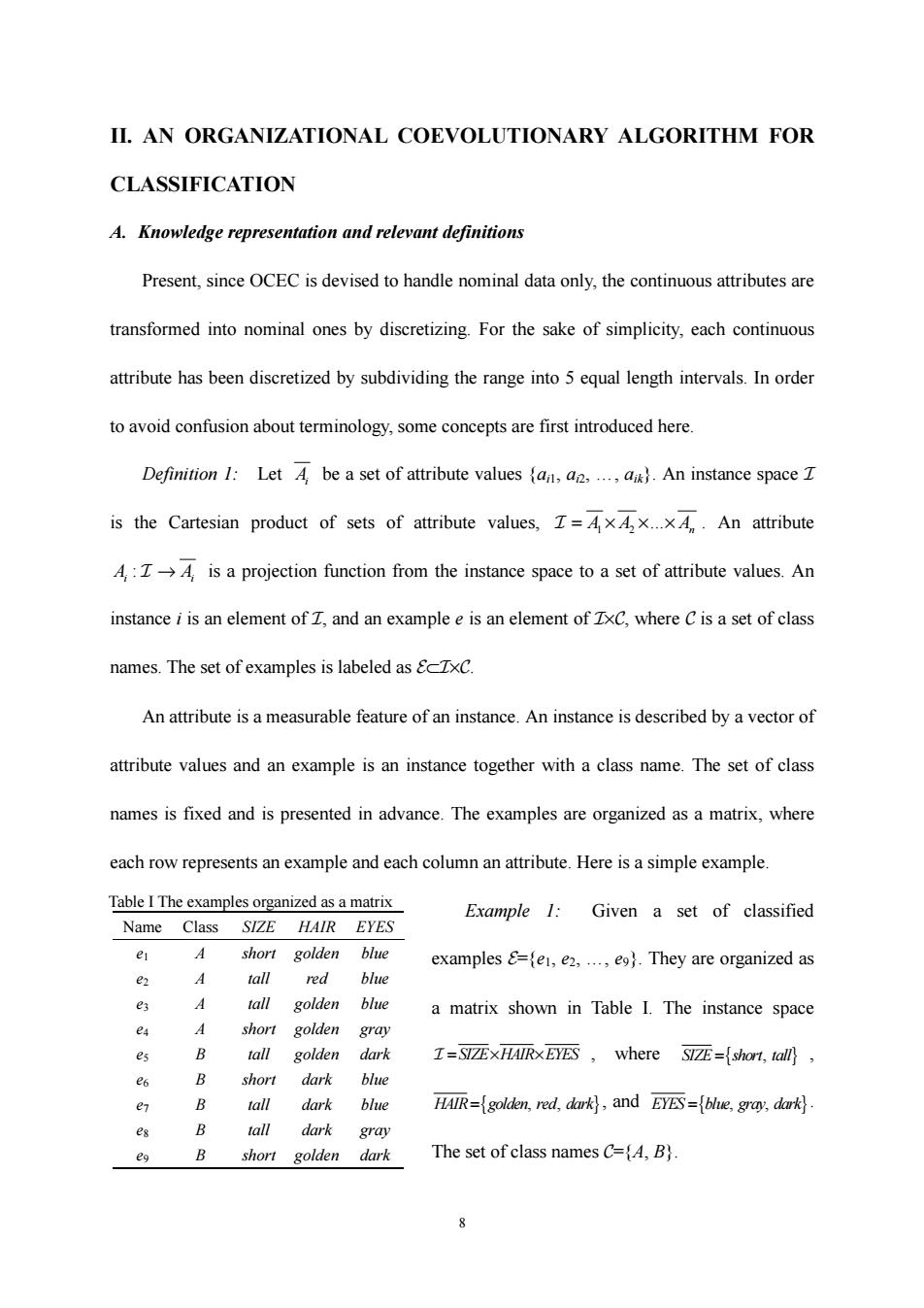

II.AN ORGANIZATIONAL COEVOLUTIONARY ALGORITHM FOR CLASSIFICATION A.Knowledge representation and relevant definitions Present,since OCEC is devised to handle nominal data only,the continuous attributes are transformed into nominal ones by discretizing.For the sake of simplicity,each continuous attribute has been discretized by subdividing the range into 5 equal length intervals.In order to avoid confusion about terminology,some concepts are first introduced here. Definition I:Let 4 be a set of attribute values (an,a,...,a).An instance space I is the Cartesian product of sets of attribute values,Z=Ax4x...xA..An attribute 4:Z->4,is a projection function from the instance space to a set of attribute values.An instance i is an element of Z,and an example e is an element of ZxC,where C is a set of class names.The set of examples is labeled as EcZxC. An attribute is a measurable feature of an instance.An instance is described by a vector of attribute values and an example is an instance together with a class name.The set of class names is fixed and is presented in advance.The examples are organized as a matrix,where each row represents an example and each column an attribute.Here is a simple example. Table I The examples organized as a matrix Example 1:Given a set of classified Name Class SIZE HAIR EYES 9 1 short golden blue examples =e1,e2,...,e9).They are organized as e2 A tall red blue e3 y tall golden blue a matrix shown in Table I.The instance space g short golden gray es B tall golden dark I=SIZEXHAIRXEYES where SIZE={short,tall 及 short dark blue S B tall dark blue HAIR=golden,red,dark,and EYES=blue,gray,dark tall dark gray B short golden dark The set of class names C=(A,B). 8

8 II. AN ORGANIZATIONAL COEVOLUTIONARY ALGORITHM FOR CLASSIFICATION A. Knowledge representation and relevant definitions Present, since OCEC is devised to handle nominal data only, the continuous attributes are transformed into nominal ones by discretizing. For the sake of simplicity, each continuous attribute has been discretized by subdividing the range into 5 equal length intervals. In order to avoid confusion about terminology, some concepts are first introduced here. Definition 1: Let Ai be a set of attribute values {ai1, ai2, …, aik}. An instance space I is the Cartesian product of sets of attribute values, 1 2 ... I = × ×× AA An . An attribute : A A i i I → is a projection function from the instance space to a set of attribute values. An instance i is an element of I, and an example e is an element of I×C, where C is a set of class names. The set of examples is labeled as E⊂I×C. An attribute is a measurable feature of an instance. An instance is described by a vector of attribute values and an example is an instance together with a class name. The set of class names is fixed and is presented in advance. The examples are organized as a matrix, where each row represents an example and each column an attribute. Here is a simple example. Example 1: Given a set of classified examples E={e1, e2, …, e9}. They are organized as a matrix shown in Table I. The instance space I =× × SIZE HAIR EYES , where SIZE short tall ={ , } , HAIR golden red dark ={ , , } , and EYES blue gray dark ={ , , } . The set of class names C={A, B}. Table I The examples organized as a matrix Name Class SIZE HAIR EYES e1 A short golden blue e2 A tall red blue e3 A tall golden blue e4 A short golden gray e5 B tall golden dark e6 B short dark blue e7 B tall dark blue e8 B tall dark gray e9 B short golden dark

Definition 2:An Organization,org,is a set of examples with identical class names, and the intersection of different organizations is empty,that is, orgcEc,ceC,where &c denotes the set of examples whose class names are c; Vorg1,o1g2CE。,org1≠org2→0rg1∩0rg2=☑: The examples in an organization are called Members. Because each attribute has different significance in determining the class name of an instance,the attributes are classified into different types for an organization according to the information of the members,and thus is convenient for rule extraction at the end of the evolutionary process. Definition 3:If all members of org have the same value for attribute A,then A is a Fixed-value Attribute.If 4'is a fixed-value attribute and satisfies the conditions required for rule extraction,then 4'is a Useful Attribute.The fixed-value attribute set of org is labeled as Fand the useful attribute set is labeled as Because rules extracted from some organizations are meaningless,organizations are also classified into three types. Normal organization:It is the organizations with more than one members and non-empty useful attribute set. Trivial organization:It is the organizations with only one member.All attributes of such organizations are useful ones; Abnormal organization:It is the organizations with empty useful attribute set. The sets of the three types of organizations are labeled as ORGN,ORGT,and ORG, respectively.To satisfy the requirement of evolutionary operations,each organization need 9

9 Definition 2: An Organization, org, is a set of examples with identical class names, and the intersection of different organizations is empty, that is, org⊆Ec, c∈C, where Ec denotes the set of examples whose class names are c; ∀org1, org2⊆Ec, org1 ≠ org2 ⇒ org1 ∩ org2 = ∅; The examples in an organization are called Members. Because each attribute has different significance in determining the class name of an instance, the attributes are classified into different types for an organization according to the information of the members, and thus is convenient for rule extraction at the end of the evolutionary process. Definition 3: If all members of org have the same value for attribute A, then A is a Fixed-value Attribute. If A′ is a fixed-value attribute and satisfies the conditions required for rule extraction, then A′ is a Useful Attribute. The fixed-value attribute set of org is labeled as Forg, and the useful attribute set is labeled as Uorg. Because rules extracted from some organizations are meaningless, organizations are also classified into three types. Normal organization: It is the organizations with more than one members and non-empty useful attribute set. Trivial organization: It is the organizations with only one member. All attributes of such organizations are useful ones; Abnormal organization: It is the organizations with empty useful attribute set. The sets of the three types of organizations are labeled as ORGN, ORGT, and ORGA, respectively. To satisfy the requirement of evolutionary operations, each organization need

record some information.Therefore,an organization is represented as the following structure, Organization Record Member List:Record the name of each member in this organization; Attribute Type:Record the type of each attribute in this organization,that is,fixed-value attribute,useful attribute, or others; Organization Type:Record the type of this organization,that is,trivial organization,abnormal organization,or normal organization; Member class:Record the class name of all members; Fitness:Record the fitness of this organization; End. B.Fitness function for organizations After analyzing the relation between attributes and examples,we think that there are two factors to be considered in devising the fitness function for organizations, (1)The number of members:The more members an organization has,the better the quality of the rule extracted from it.Therefore,the fitness of an organization should increase with the number of members. (2)The number of useful attributes:Useful attributes will be used to generate rules at the end of the evolutionary process.The more useful attributes are,the more conditions the rules have.In fact,the more conditions a rule has,the fewer examples the rule covers.But over-generalization will result if the conditions are too few.Therefore,the fitness of an 10

10 record some information. Therefore, an organization is represented as the following structure, Organization = Record Member_List: Record the name of each member in this organization; Attribute_Type: Record the type of each attribute in this organization, that is, fixed-value attribute, useful attribute, or others; Organization_Type: Record the type of this organization, that is, trivial organization, abnormal organization, or normal organization; Member_Class: Record the class name of all members; Fitness: Record the fitness of this organization; End. B. Fitness function for organizations After analyzing the relation between attributes and examples, we think that there are two factors to be considered in devising the fitness function for organizations, (1) The number of members: The more members an organization has, the better the quality of the rule extracted from it. Therefore, the fitness of an organization should increase with the number of members. (2) The number of useful attributes: Useful attributes will be used to generate rules at the end of the evolutionary process. The more useful attributes are, the more conditions the rules have. In fact, the more conditions a rule has, the fewer examples the rule covers. But over-generalization will result if the conditions are too few. Therefore, the fitness of an