Lecture 4 Association Rules of Data Reasoning Dr.李晓瑜Xiaoyu Li Email:xiaoyuuestc@uestc.edu.cn http://blog.sciencenet.cn/u/uestc2014xiaoyu 2019-Spring SunData Group http://www.sundatagroup.org School of Information and Software Engineering,UESTC 1966 Copyright2019 by Xiaoyu Li

Dr.李晓瑜 Xiaoyu Li Email:xiaoyuuestc@uestc.edu.cn http://blog.sciencenet.cn/u/uestc2014xiaoyu 2019-Spring Lecture 4 Association Rules of Data Reasoning SunData Group http://www.sundatagroup.org/ School of Information and Software Engineering, UESTC Copyright © 2019 by Xiaoyu Li. 1

Topic 。Apriori Algorithm Improve of Apriori Algorithm 。FP-growth Algorithm Multilevel Association Rules Quantitative association Rules Multidimensional Association Rules 3 Copyright 2019 by Xiaoyu Li

Apriori Algorithm Improve of Apriori Algorithm FP-growth Algorithm Multilevel Association Rules Quantitative Association Rules Multidimensional Association Rules Copyright © 2019 by Xiaoyu Li. 3 Topic

Apriori Algorithm DATA 4 Copyright 2019 by Xiaoyu Li

Copyright © 2019 by Xiaoyu Li. 4 Apriori Algorithm

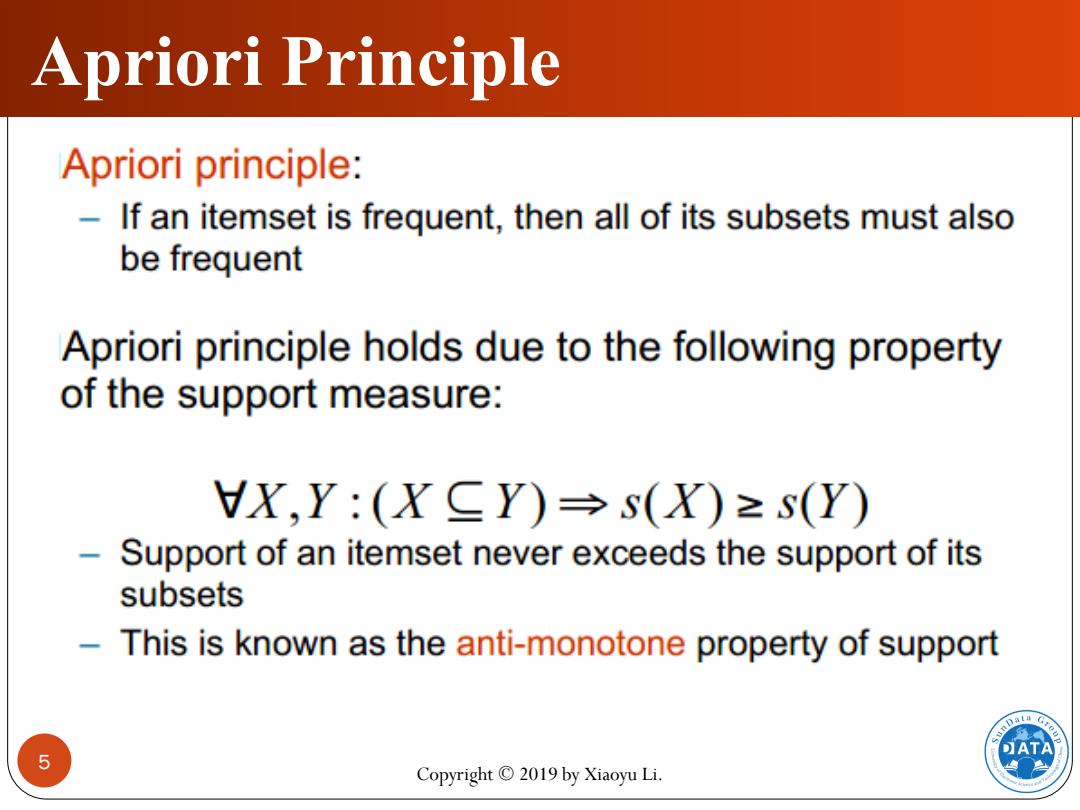

Apriori Principle Apriori principle: If an itemset is frequent,then all of its subsets must also be frequent Apriori principle holds due to the following property of the support measure: VX,Y:(XCY)→s(X)≥s(Y) Support of an itemset never exceeds the support of its subsets This is known as the anti-monotone property of support ATA 5 Copyright 2019 by Xiaoyu Li

5 Copyright © 2019 by Xiaoyu Li. Apriori Principle

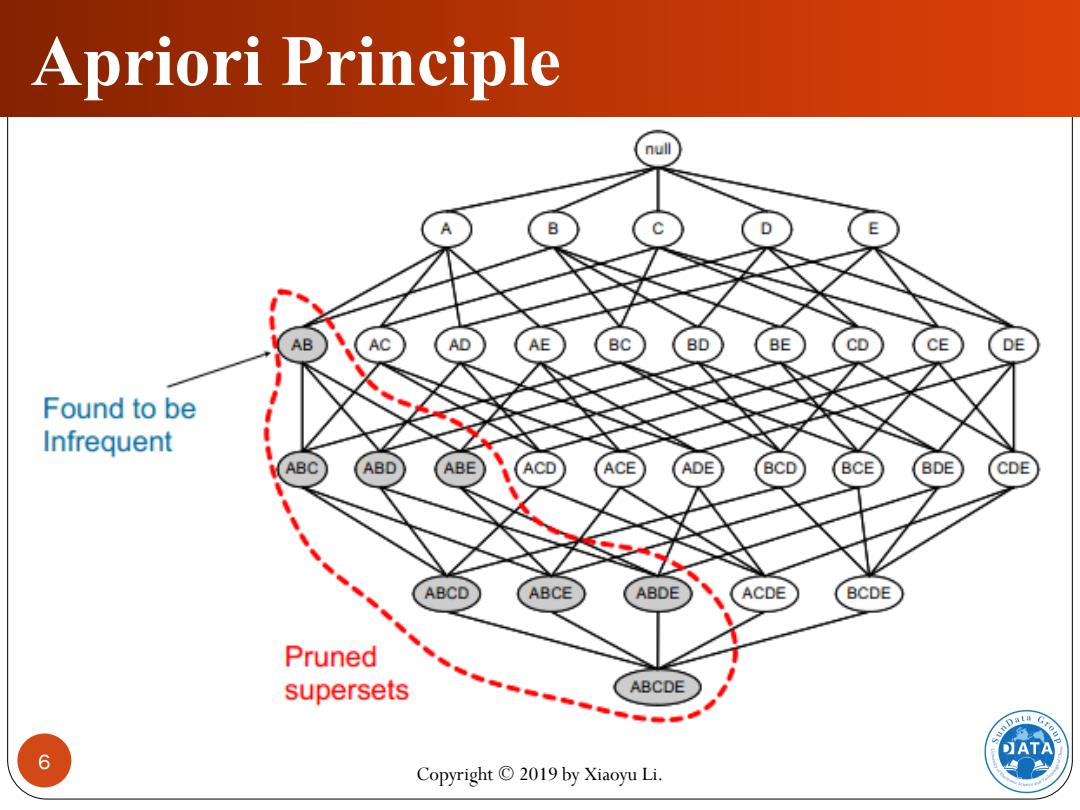

Apriori Principle null AB AD AE BC BD BE CD CE DE Found to be Infrequent (ABC ABD ABE ACD ACE ADE BCD BCE BDE CDE ABCD ABCE ABDE ACDE BCDE Pruned supersets ABCDE ATA 6 Copyright 2019 by Xiaoyu Li

6 Copyright © 2019 by Xiaoyu Li. Apriori Principle

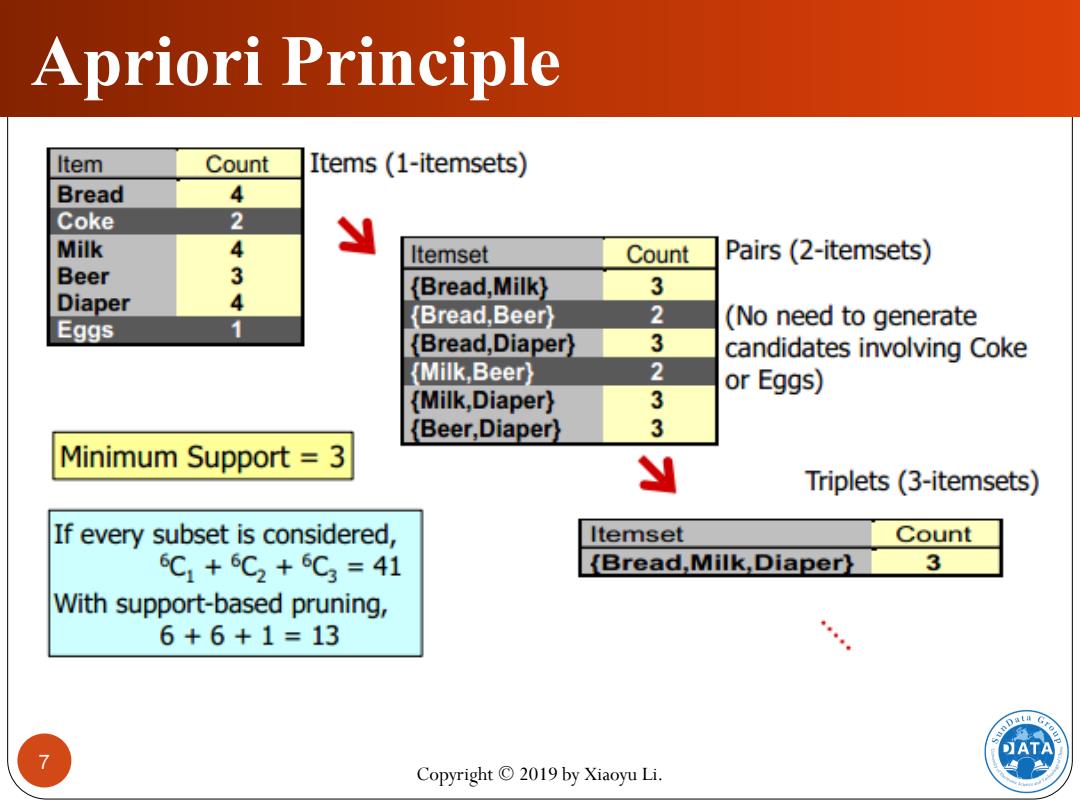

Apriori Principle Item Count Items(1-itemsets) Bread 4 Coke 2 Milk 4 Itemset Count Pairs(2-itemsets) Beer 3 (Bread,Milk) 3 Diaper 4 Bread,Beer) 2 Eggs 1 (No need to generate (Bread,Diaper) 3 candidates involving Coke (Milk,Beer) 2 or Eggs) (Milk,Diaper) 3 (Beer,Diaper) 3 Minimum Support 3 Triplets(3-itemsets) If every subset is considered, Itemset Count 6C1+6C2+6C3=41 (Bread,Milk,Diaper) 3 With support-based pruning, 6+6+1=13 DATA Copyright 2019 by Xiaoyu Li

7 Copyright © 2019 by Xiaoyu Li. Apriori Principle

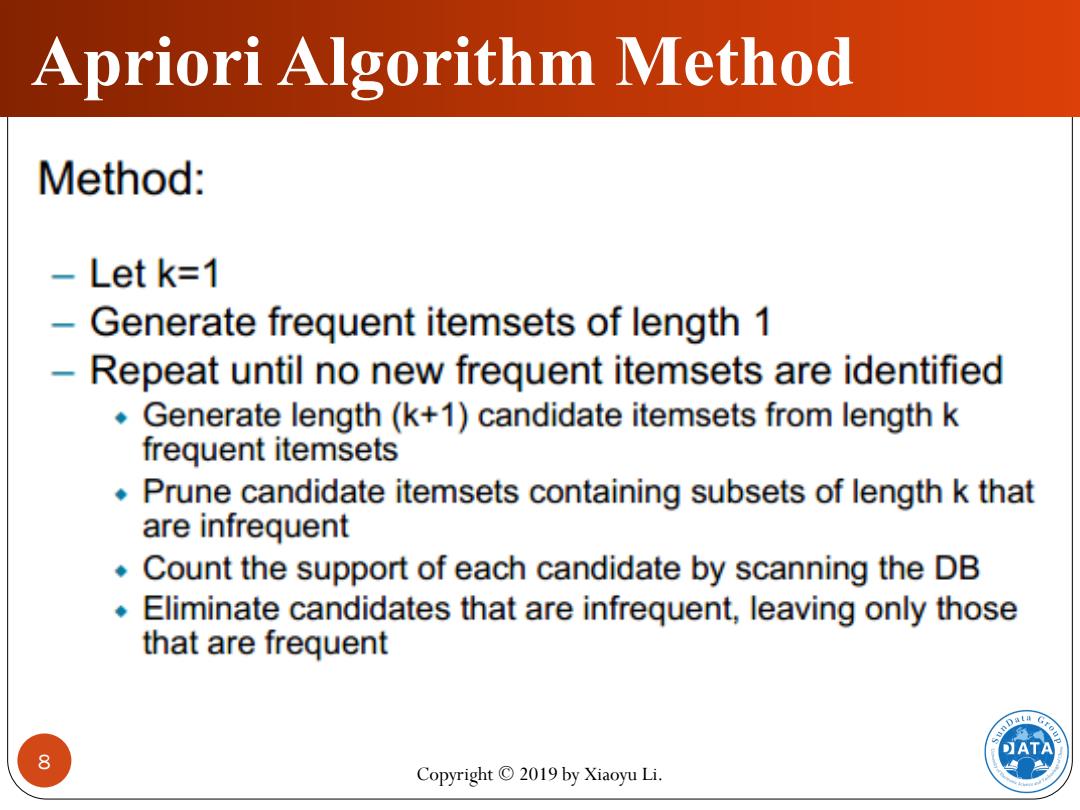

Apriori Algorithm Method Method: Let k=1 Generate frequent itemsets of length 1 Repeat until no new frequent itemsets are identified Generate length(k+1)candidate itemsets from length k frequent itemsets Prune candidate itemsets containing subsets of length k that are infrequent Count the support of each candidate by scanning the DB Eliminate candidates that are infrequent,leaving only those that are frequent ATA 8 Copyright 2019 by Xiaoyu Li

8 Copyright © 2019 by Xiaoyu Li. Apriori Algorithm Method

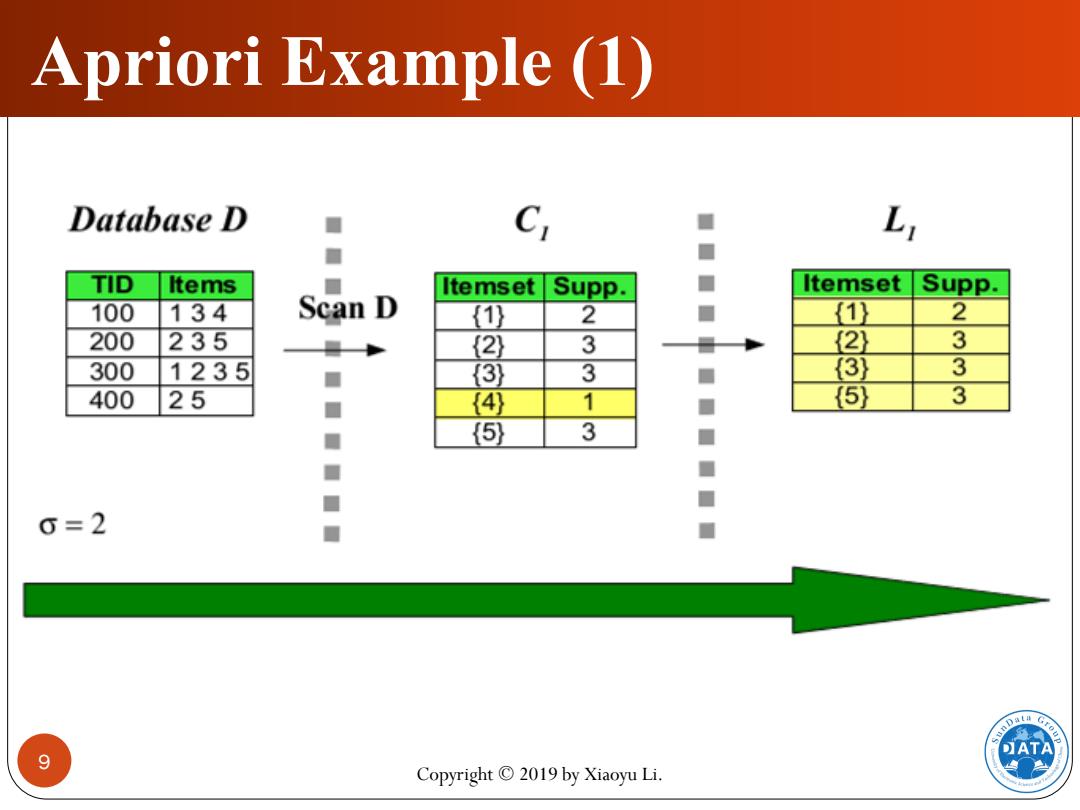

Apriori Example (1) Database D TID Items ■ Itemset Supp. Itemset Supp. 100 134 Scan D 1仍 2 1 2 200 235 {2) 3 3 300 1235 3) 3 3 3 400 25 ■ {4④ 1 5) 3 {5) 3 6=2 DATA 9 Copyright 2019 by Xiaoyu Li

9 Copyright © 2019 by Xiaoyu Li. Apriori Example (1)

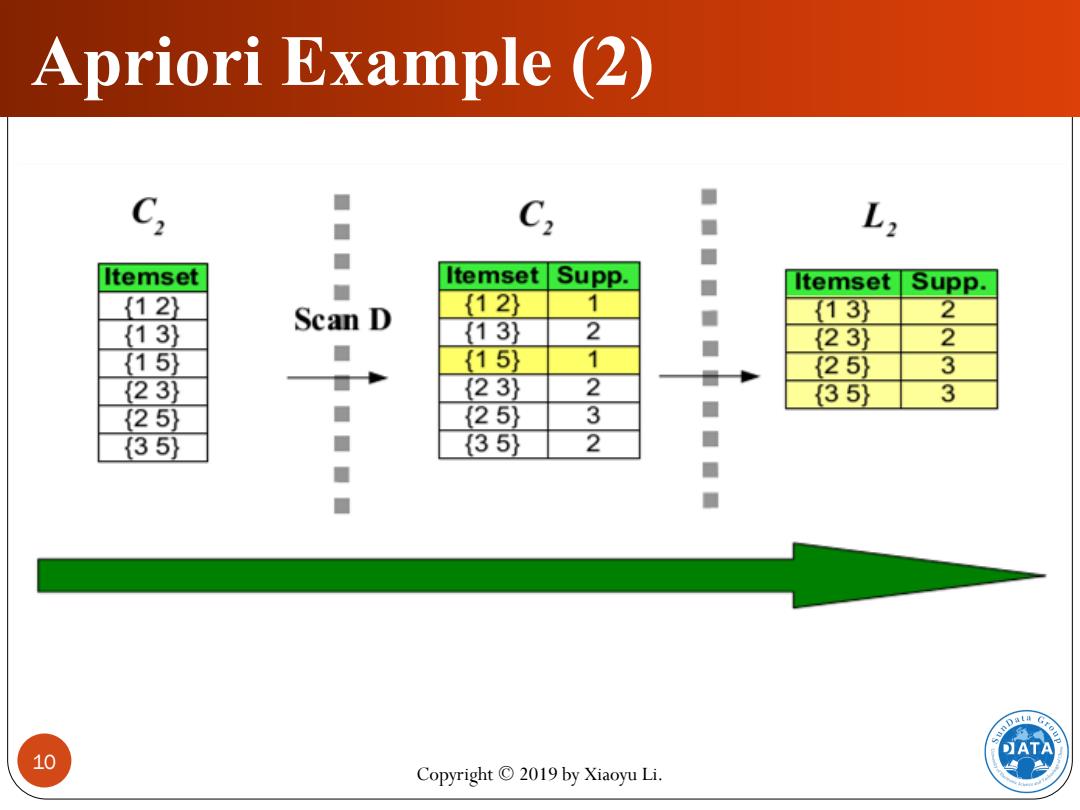

Apriori Example (2) ■ C2 ■ ■ Itemset Itemset Supp. Itemset Supp. 123 {12 1 13) 2 {13) Scan D 13) 2 23) 2 {15 {15 1 {25} 3 {23) 23) 2 35) 3 {25 {25) 3 35 35} 2 ■ ATA 10 Copyright 2019 by Xiaoyu Li

10 Copyright © 2019 by Xiaoyu Li. Apriori Example (2)

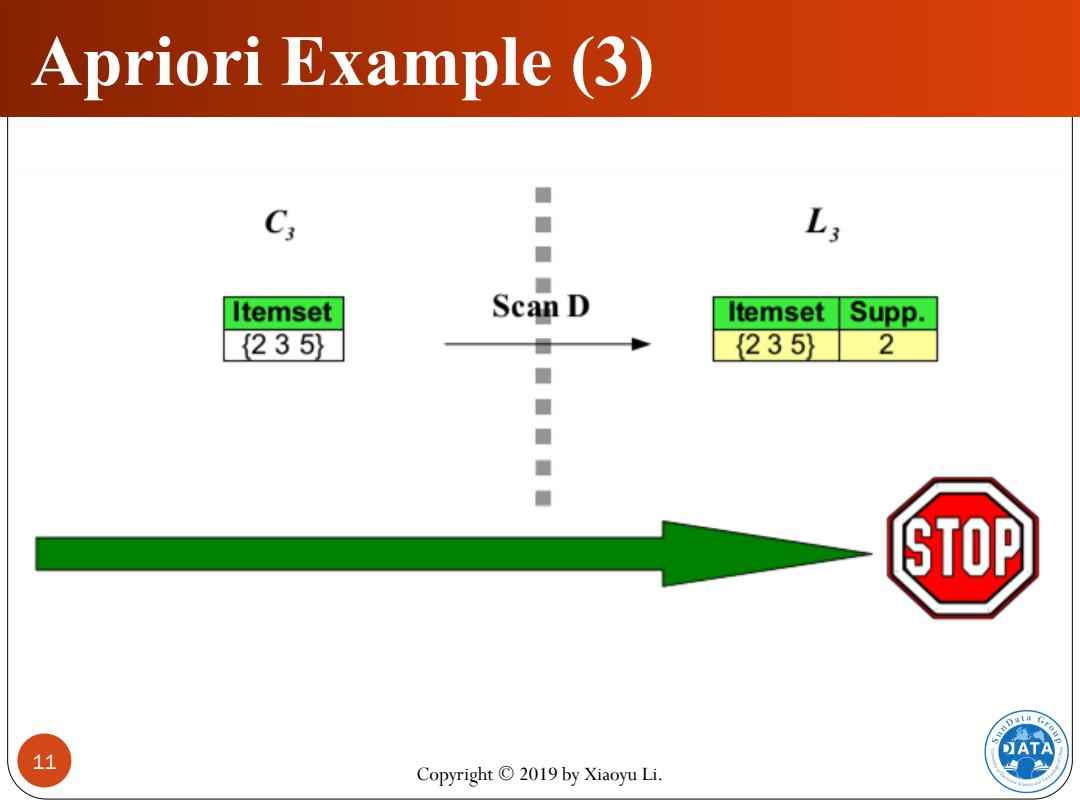

Apriori Example (3) L Itemset Scan D Itemset Supp. 235} 235 2 STOP DATA 11 Copyright 2019 by Xiaoyu Li

11 Copyright © 2019 by Xiaoyu Li. Apriori Example (3)