Lecture 4 Association Rules of Data Reasoning Dr.李晓瑜Xiaoyu Li Email:xiaoyuuestc@uestc.edu.cn http://blog.sciencenet.cn/u/uestc2014xiaoyu 2019-Spring SunData Group http://www.sundatagroup.org School of Information and Software Engineering,UESTC 1966 Copyright2019 by Xiaoyu Li

Dr.李晓瑜 Xiaoyu Li Email:xiaoyuuestc@uestc.edu.cn http://blog.sciencenet.cn/u/uestc2014xiaoyu 2019-Spring Lecture 4 Association Rules of Data Reasoning SunData Group http://www.sundatagroup.org/ School of Information and Software Engineering, UESTC Copyright © 2019 by Xiaoyu Li. 1

FP-growth Algorithm 2 DATA Copyright 2019 by Xiaoyu Li

Copyright © 2019 by Xiaoyu Li. 2 FP-growth Algorithm

FP-growth Algorithm Use a compressed representation of the database using an FP-tree Once an FP-tree has been constructed,it uses a recursive divide-and-conquer approach to mine the frequent itemsets DATA 3 Copyright 2019 by Xiaoyu Li

3 Copyright © 2019 by Xiaoyu Li. FP-growth Algorithm

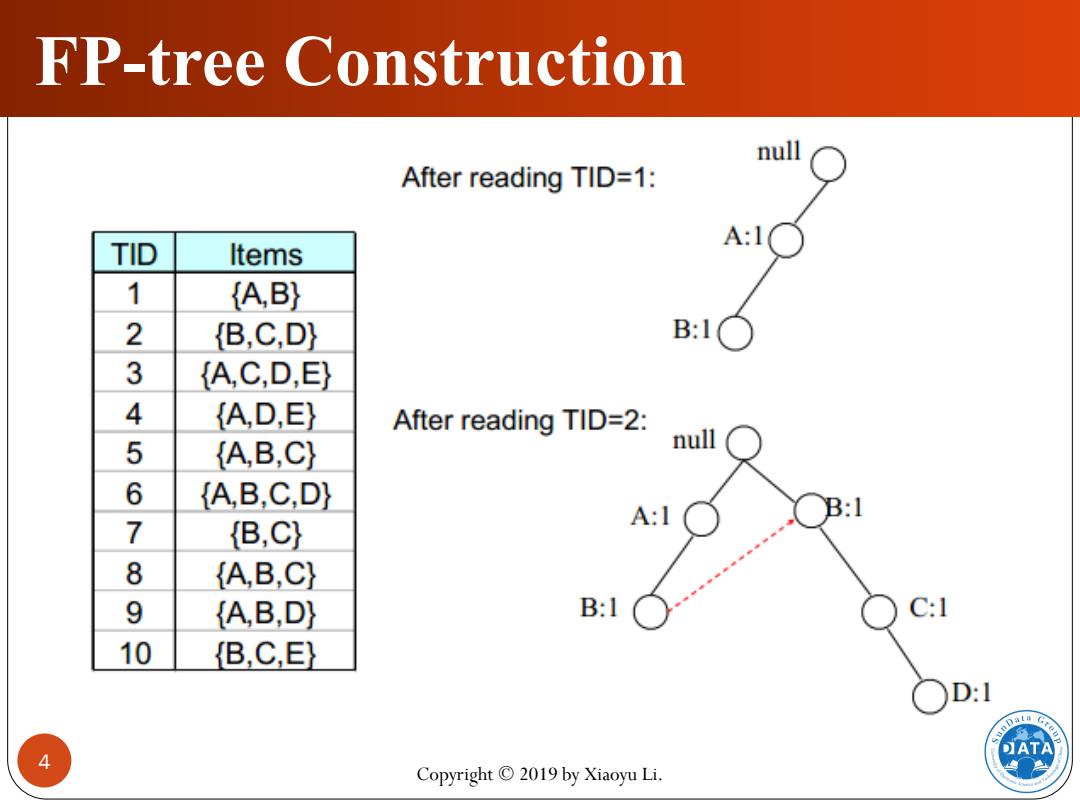

FP-tree Construction null After reading TID=1: A:1 TID Items 1 (A,B) 2 (B,C,D) B:1○ 3 (A,C,D,E) 4 (A,D,E) After reading TID=2: 5 (A,B,C} null 6 (A,B,C,D) A:I B:l 7 {B,C} 8 (A,B,C) 9 (A,B,D) B:1 C:1 10 B.C,E) D:1 4 Copyright 2019 by Xiaoyu Li

4 Copyright © 2019 by Xiaoyu Li. FP-tree Construction

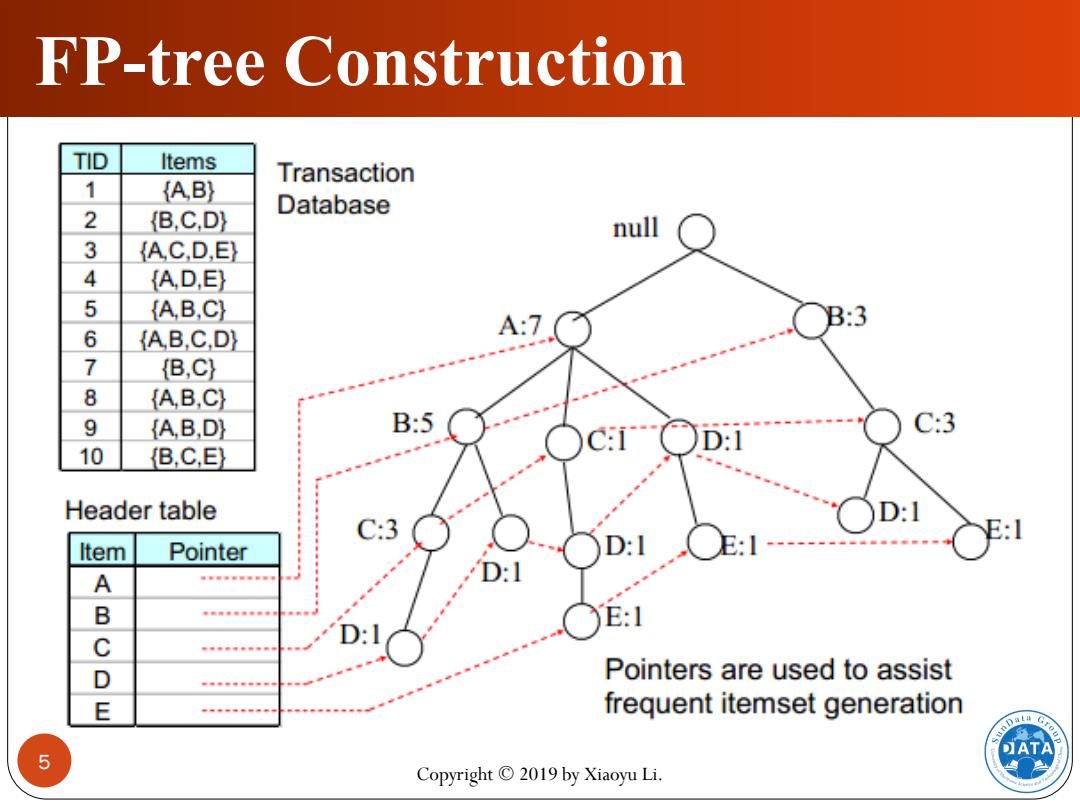

FP-tree Construction TID Items Transaction 1 (A,B) Database 2 (B.C,D) null 3 (A,C,D,E) 4 (A,D,E) 5 (A,B,C) 6 A:7 B:3 (A,B,C,D) 7 (B,C) 8 (A,B,C) 9 (A,B,D) B:5 C:I D:1 C:3 10 (B.C,E) Header table D:1 :3 E:1 Item Pointer D: A 海年际带新际带标海司 B 带新带带带带带带带带 E:1 C D: 带带标带参带新 D Pointers are used to assist E frequent itemset generation ATA 5 Copyright 2019 by Xiaoyu Li

5 Copyright © 2019 by Xiaoyu Li. FP-tree Construction

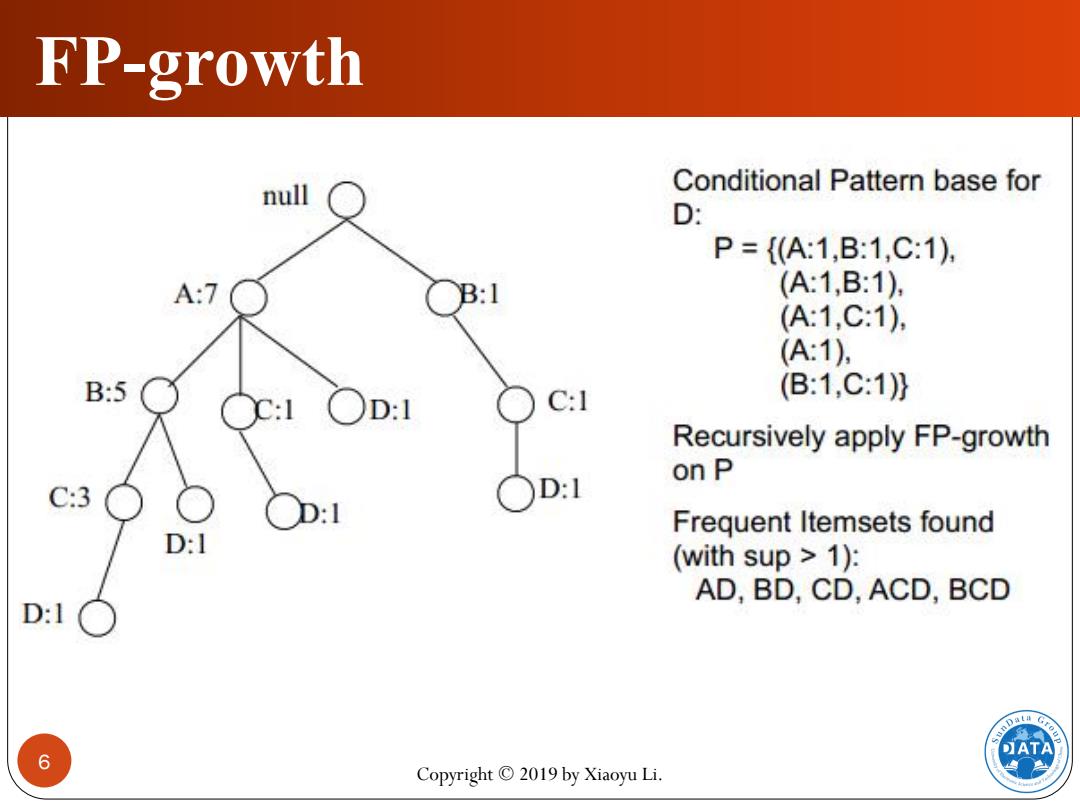

FP-growth null Conditional Pattern base for D: P={A:1,B:1,C:1) A:7 B:1 (A1,B:1) (A:1,C:1) (A:1), B:5 C:I (B1,C:1)} D:1 Recursively apply FP-growth C:3 ○Dl on P D:1 Frequent Itemsets found D:I (with sup>1): AD,BD,CD,ACD,BCD D:1 ATA 6 Copyright 2019 by Xiaoyu Li

6 Copyright © 2019 by Xiaoyu Li. FP-growth

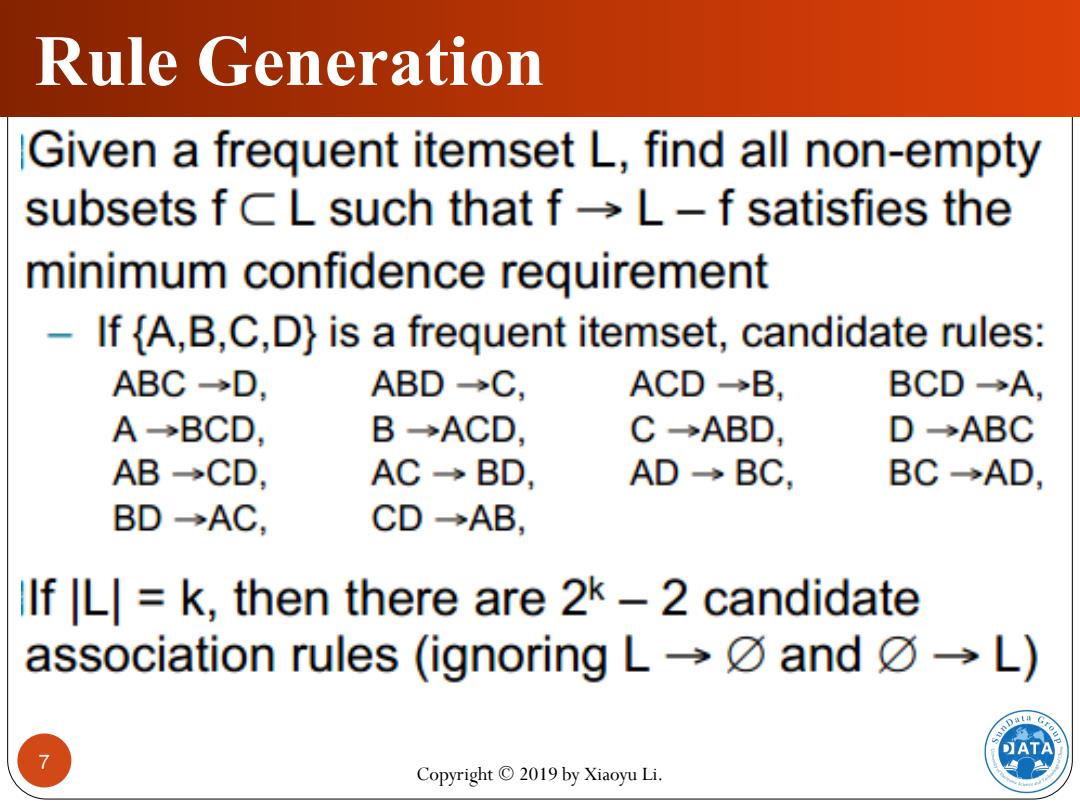

Rule Generation Given a frequent itemset L,find all non-empty subsets f CL such that f-L-f satisfies the minimum confidence requirement If [A,B,C,D}is a frequent itemset,candidate rules: ABC→D, ABD→C, ACD→B, BCD→A, A→BCD, B→ACD, C→ABD, D→ABC AB→CD AC→BD, AD→BC BC→AD, BD→AC CD→AB, llf L =k,then there are 2k-2 candidate association rules(ignoring L→☑and☑→L) ATA Copyright 2019 by Xiaoyu Li

7 Copyright © 2019 by Xiaoyu Li. Rule Generation

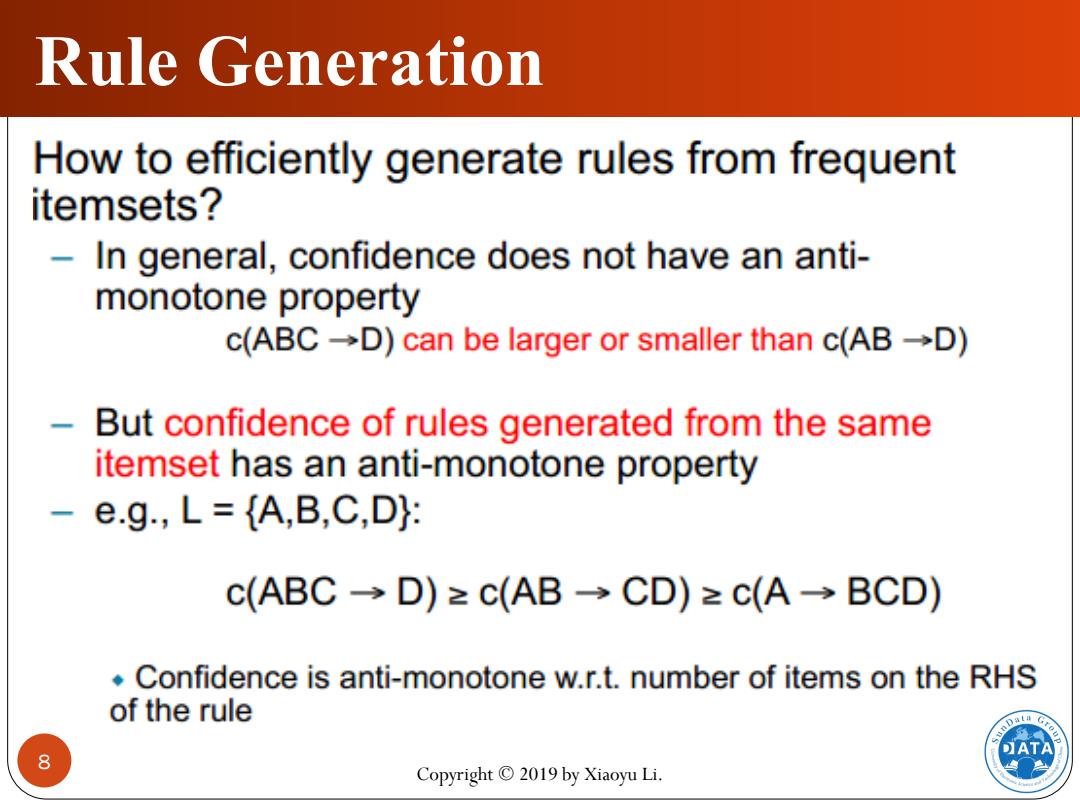

Rule generation How to efficiently generate rules from frequent itemsets? In general,confidence does not have an anti- monotone property c(ABC→D)can be larger or smaller than c(AB→D) But confidence of rules generated from the same itemset has an anti-monotone property -e.g.,L=(A,B,C,D): c(ABC→D)2c(AB→CD)≥c(A→BCD) Confidence is anti-monotone w.r.t.number of items on the RHS of the rule ATA 8 Copyright 2019 by Xiaoyu Li

8 Copyright © 2019 by Xiaoyu Li. Rule Generation

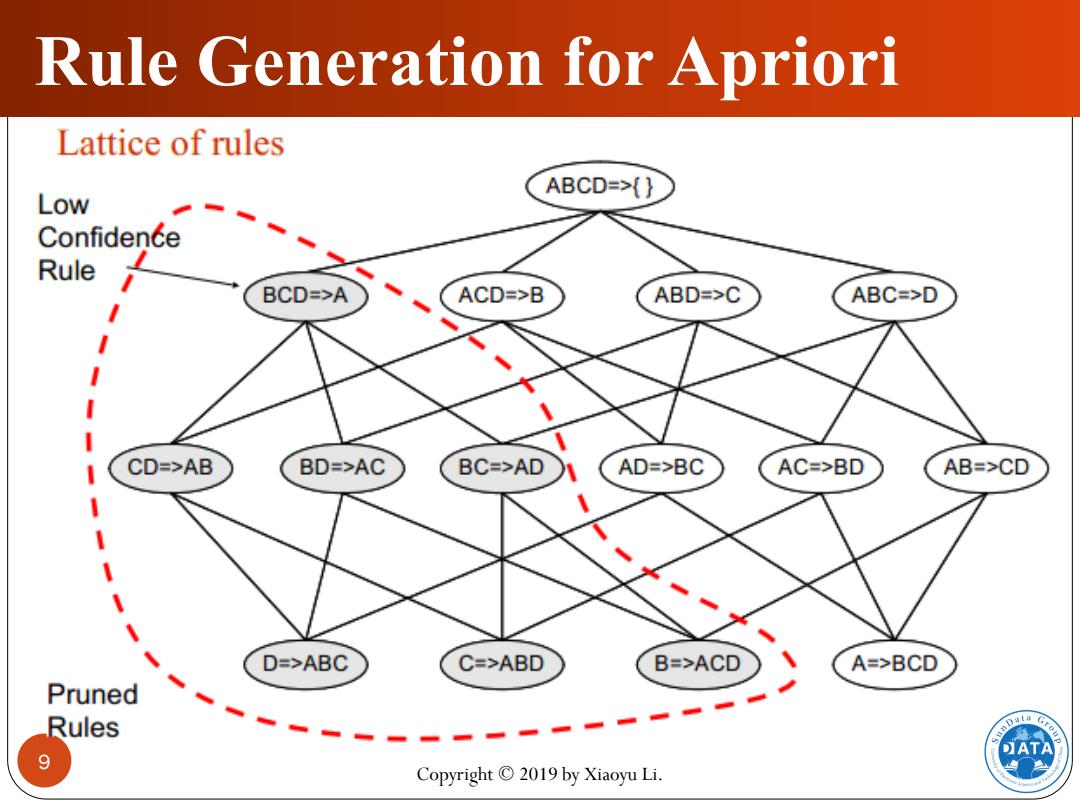

Rule Generation for Apriori Lattice of rules ABCD=>(} Low Confidence Rule BCD=>A ACD=>B ABD=>C ABC=>D CD=>AB BD=>AC BC=>AD AD=>BC AC=>BD AB=>CD D=>ABC C=>ABD B=>ACD A=>BCD Pruned Rules ATA 9 Copyright 2019 by Xiaoyu Li

9 Copyright © 2019 by Xiaoyu Li. Rule Generation for Apriori

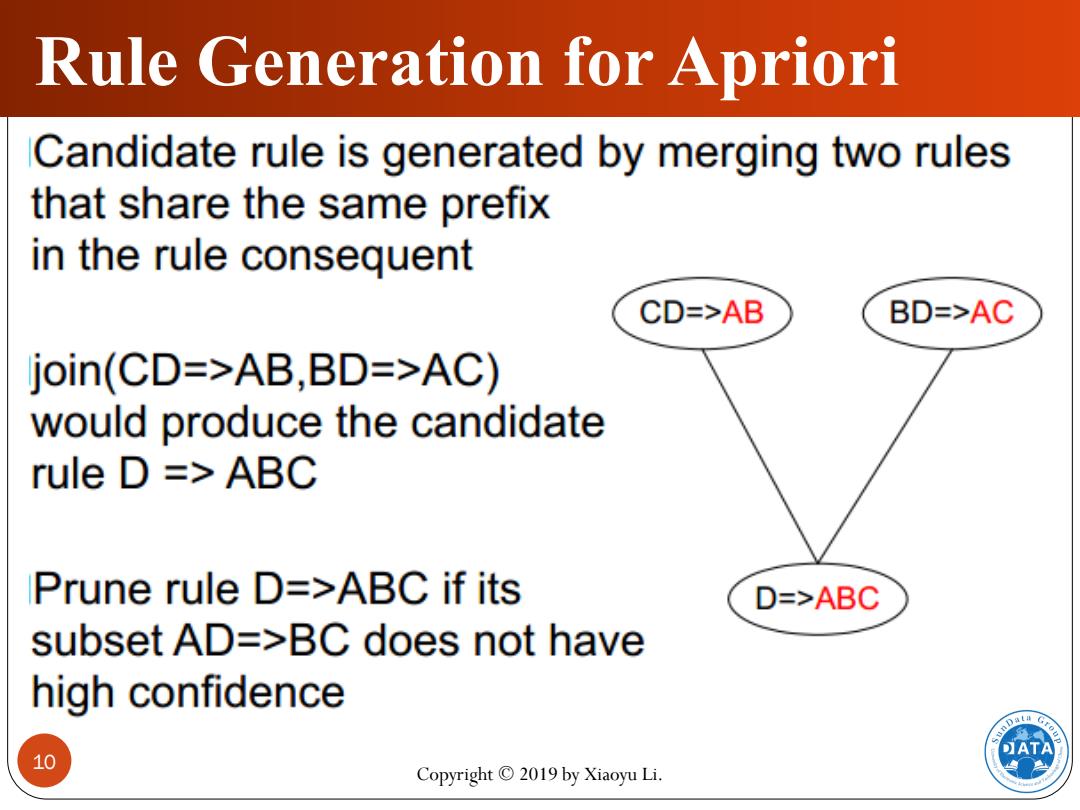

Rule Generation for Apriori Candidate rule is generated by merging two rules that share the same prefix in the rule consequent CD=>AB BD=>AC join(CD=>AB,BD=>AC) would produce the candidate rule D =ABC Prune rule D=>ABC if its D=>ABC subset AD=>BC does not have high confidence DATA 10 Copyright 2019 by Xiaoyu Li

10 Copyright © 2019 by Xiaoyu Li. Rule Generation for Apriori