Lecture 7.Decision Tree Complexity and Fourier analysis 1.Decision Tree Complexity Recall that in the first lecture,we introduced decision tree as a model to compute a function.Basically, we make a sequence of queries of the form "xi=?"and finally output the answer f(x).The (deterministic)decision tree complexity,or query complexity,ofa functionf,is the minimum number of queries a decision tree needs to make in order to compute f.It's interesting that some functions have sublinear query complexity,namely one can always get to know f(x)long before learningx itself.We've seen a couple of such examples.Let's see one more. Example:Sink problem.We are given a directed graph,and we wish to know whether the graph has a"sink"vertex,which has out-degree n-1 and in-degree 0.(Namely,everyone else points to it,but it doesn't point to anyone.)What we can ask is for any ordered pair(i j),whether there is an edge from i to j.What's the minimum number of queries we need? We can also consider randomized decision trees,which can make random choices during the computation.Two types ofrequirement for correctness are used: Monte Carlo:On any input,the algorithm finishes in at most g queries and is correct with probability 1-e. Las Vegas:On any input,the algorithm is correct with probability 1,and the expected the number of queries is at most g. The randomized query complexity is thus the minimum number g of queries needed.Denote by Re(f)and R(f)the randomized query complexity offin the Monte Carlo and Las Vegas models, respectively.Similar as in the communication complexity case,for any distribution u on input set {0,1)",we can define the u-distributional query complexity De(f)as the minimum number of queries needed for any deterministic query algorithm which has error probability,averaged over x u,at most e.Also define D4(f)as the minimum expected (over x-u)number of queries by any deterministic query algorithm that computes fcorrectly on all inputs.By Yao's minmax principle,the following holds. Theorem 1.1.Re(f)=maxuDe (f),R(f)=maxuD4(f)

Lecture 7. Decision Tree Complexity and Fourier analysis 1. Decision Tree Complexity Recall that in the first lecture, we introduced decision tree as a model to compute a function. Basically, we make a sequence of queries of the form "𝑥𝑖 =? " and finally output the answer 𝑓(𝑥). The (deterministic) decision tree complexity, or query complexity, of a function f, is the minimum number of queries a decision tree needs to make in order to compute f. It’s interesting that some functions have sublinear query complexity, namely one can always get to know 𝑓(𝑥) long before learning x itself. We’ve seen a couple of such examples. Let’s see one more. Example: Sink problem. We are given a directed graph, and we wish to know whether the graph has a “sink” vertex, which has out-degree n-1 and in-degree 0. (Namely, everyone else points to it, but it doesn’t point to anyone.) What we can ask is for any ordered pair (i,j), whether there is an edge from i to j. What’s the minimum number of queries we need? We can also consider randomized decision trees, which can make random choices during the computation. Two types of requirement for correctness are used: ⚫ Monte Carlo: On any input, the algorithm finishes in at most q queries and is correct with probability 1 − ϵ. ⚫ Las Vegas: On any input, the algorithm is correct with probability 1, and the expected the number of queries is at most q. The randomized query complexity is thus the minimum number q of queries needed. Denote by 𝑅𝜖(𝑓) and 𝑅(𝑓) the randomized query complexity of f in the Monte Carlo and Las Vegas models, respectively. Similar as in the communication complexity case, for any distribution 𝜇 on input set {0,1} 𝑛, we can define the 𝜇-distributional query complexity 𝐷𝜖 𝜇 (𝑓) as the minimum number of queries needed for any deterministic query algorithm which has error probability, averaged over 𝑥 ← 𝜇, at most 𝜖. Also define 𝐷𝜇(𝑓) as the minimum expected (over 𝑥 ← 𝜇) number of queries by any deterministic query algorithm that computes f correctly on all inputs. By Yao’s minmax principle, the following holds. Theorem 1.1. 𝑅𝜖 (𝑓) = max𝜇𝐷𝜖 𝜇 (𝑓), 𝑅(𝑓) = max𝜇𝐷𝜇(𝑓)

While some functions enjoy small decision tree complexity,many don't.As always in complexity theories,we hope to understand what functions have small decision tree complexity. An important class of functions are those with symmetries.For example,consider ● AND(x)=x1A...Axm ●0R(x)=x1V.Vxn ●Thk(x)=1ifx1+…+xn≥k,and Thy(x)=0 otherwise. These functions are"very symmetric".Actually they depend only on the number of 1's in {x1,..,n--it's not important which variables are I's. Some other functions are"less symmetric".For example,consider all graph properties---functions on raphsthtonabftheveti.Thasthenutothe functionhas variables,indicating whether each pair (i,)is an edge in the graph.The function depends only on the graph structure,not the name of vertices.Formally,fis invariant to vertex permutations.If we view the input G as an n x n matrix AG(which is symmetric with all diagonal entries being0),and simultaneously perform a permutation to rows and to columns,then f(x)remains unchanged. Examples include the functions that we are familiar,such as ● Hamiltonian Cycle:Does the graph have a Hamiltonian cycle? ● k-Clique:Does the graph have a clique ofsize k? ● Connectivity:Is the graph connected? ● Bipartiteness:Is the graph a bipartite graph? ● Matching:Does the graph have a perfect matching? ● Much attention has been drawn on the effect of symmetry on the decision tree complexity.Aanderaa nettallappropertieshavescmlexitynmenastoak all variables.Functions fon N variables with D(f)=N are called evasive.It was soon found by Rosenberg that this is not correct.Consider the Sink problem at the beginning of this lecture.(Well, technically speaking,Sink is a directed graph property.But there is also an analog called Scorpion, which is indeed a graph property,and the decision tree complexity is 0(n).)So Rosenberg added one more condition:monotonicity.This leads to the most famous conjecture in decision tree complexity: Aanderaa-Rosenberg Conjecture:All monotone graph properties are evasive

While some functions enjoy small decision tree complexity, many don’t. As always in complexity theories, we hope to understand what functions have small decision tree complexity. An important class of functions are those with symmetries. For example, consider ⚫ AND(𝑥) = 𝑥1 ∧ …∧ 𝑥𝑛. ⚫ OR(𝑥) = 𝑥1 ∨… ∨ 𝑥𝑛. ⚫ Th𝑘 (𝑥) = 1 if 𝑥1 + ⋯ + 𝑥𝑛 ≥ 𝑘, and Th𝑘 (𝑥) = 0 otherwise. These functions are “very symmetric”. Actually they depend only on the number of 1’s in {𝑥1,… ,𝑥𝑛}---it’s not important which variables are 1’s. Some other functions are “less symmetric”. For example, consider all graph properties---functions on graphs that don’t depend on the labeling of the vertices. That is, the input to the function has ( 𝑛 2 ) variables, indicating whether each pair (𝑖, 𝑗) is an edge in the graph. The function depends only on the graph structure, not the name of vertices. Formally, f is invariant to vertex permutations. If we view the input G as an 𝑛 × 𝑛 matrix AG (which is symmetric with all diagonal entries being 0), and simultaneously perform a permutation to rows and to columns, then 𝑓(𝑥) remains unchanged. Examples include the functions that we are familiar, such as ⚫ Hamiltonian Cycle: Does the graph have a Hamiltonian cycle? ⚫ k-Clique: Does the graph have a clique of size k? ⚫ Connectivity: Is the graph connected? ⚫ Bipartiteness: Is the graph a bipartite graph? ⚫ Matching: Does the graph have a perfect matching? ⚫ … Much attention has been drawn on the effect of symmetry on the decision tree complexity. Aanderaa once conjectured that all graph properties have decision tree complexity ( 𝑛 2 ), namely one has to ask all variables. Functions f on N variables with 𝐷(𝑓) = 𝑁 are called evasive. It was soon found by Rosenberg that this is not correct. Consider the Sink problem at the beginning of this lecture. (Well, technically speaking, Sink is a directed graph property. But there is also an analog called Scorpion, which is indeed a graph property, and the decision tree complexity is 𝑂(𝑛).) So Rosenberg added one more condition: monotonicity. This leads to the most famous conjecture in decision tree complexity: Aanderaa-Rosenberg Conjecture: All monotone graph properties are evasive

While this conjecture was not fully solved,it was known that such functions need (N)queries.It can also be proved that D(f)=N if the input size N is a prime power [RV76].See [LY94]for a survey ofevasiveness. However,things become much more complicated when it comes to randomized query complexity. Karp modified the conjecture to the following. Aanderaa-Karp-Rosenberg Conjecture:All monotone graph properties fof N variables have R(f)=2(N) This conjecture was still wide open despite many attacks by first-tier researchers.It's considered to be one of the few most notorious conjectures in theoretical computer science.The best lower bound that we have is R(f)=(N2/3).(Notation:Tilde hides a log factor.)There are two proofs of this fact. One is by graph packing,initialized by Yao [Yao87]and improved by King [Kin88],Hajnal [Haj91], Chakrabarti -Khot [CK01].The other approach is by Fourier analysis.Both are very beautiful,but the second one is simpler,which we'll talk about. First we'll need some basic knowledge of Fourier analysis on Boolean functions. 2.Fourier analysis There are two common ways to represent Boolean values:{0,1}or {+1,-1).To emphasize the difference,we will use x and ffor (0,1}and y andg for (+1,-1).For each sE{0,1)7,define xs by xs(x)=(-1)sx (or equivalently xs(y)=nliesyi.)The following fact is easy to verify. Fact 2.1.{xs:s E{0,1)m}is an orthonormal basis of the space (h:{0,1)nR}underthe inner product (h,h')=Ex[h(x)h'()]. By the above fact,any real function h on {0,1]n can be expanded in this basis.We can write it as h=Es h(s)xs,where the coefficients h(s)are called the Fourier coefficients.The transform from h to h is the Fourier transform.A basic property is about the transform is that it keeps the 2-norm. Fact 2.2.(Parseval's formula)Ex[h(x)2]=Esh(s)2. Thus for Boolean functions g of range {+1,-1),we have sg(s)2=1.So {g(s)2}can be viewed as a probability distribution over {0,1)n

While this conjecture was not fully solved, it was known that such functions need 𝛺(𝑁) queries. It can also be proved that 𝐷(𝑓) = 𝑁 if the input size N is a prime power [RV76]. See [LY94] for a survey of evasiveness. However, things become much more complicated when it comes to randomized query complexity. Karp modified the conjecture to the following. Aanderaa-Karp-Rosenberg Conjecture: All monotone graph properties f of N variables have 𝑅(𝑓) = 𝛺(𝑁). This conjecture was still wide open despite many attacks by first-tier researchers. It’s considered to be one of the few most notorious conjectures in theoretical computer science. The best lower bound that we have is 𝑅(𝑓) = Ω̃(𝑁2/3 ). (Notation: Tilde hides a log factor.) There are two proofs of this fact. One is by graph packing, initialized by Yao [Yao87] and improved by King [Kin88], Hajnal [Haj91], Chakrabarti -Khot [CK01]. The other approach is by Fourier analysis.Both are very beautiful, but the second one is simpler, which we’ll talk about. First we’ll need some basic knowledge of Fourier analysis on Boolean functions. 2. Fourier analysis There are two common ways to represent Boolean values: {0,1} or {+1, −1}. To emphasize the difference, we will use x and f for {0,1} and y and g for {+1,−1}. For each 𝑠 ∈ {0,1} 𝑛, define 𝜒𝑠 by 𝜒𝑠 (𝑥) = (−1) 𝑠⋅𝑥 (or equivalently 𝜒𝑠 (𝑦) = ∏𝑖∈𝑆𝑦𝑖 .) The following fact is easy to verify. Fact 2.1. {𝜒𝑠 :𝑠 ∈ {0,1} 𝑛} is an orthonormal basis of the space {ℎ:{0,1} 𝑛 → 𝑅} under the inner product 〈h, h ′〉 = Ex [ℎ(𝑥)ℎ ′(𝑥)]. By the above fact, any real function h on {0,1} 𝑛 can be expanded in this basis. We can write it as ℎ = ∑𝑠 ℎ̂(𝑠)𝜒𝑠 , where the coefficients ℎ̂(𝑠) are called the Fourier coefficients. The transform from h to ℎ̂ is the Fourier transform. A basic property is about the transform is that it keeps the ℓ2-norm. Fact 2.2. (Parseval’s formula) Ex [ℎ(𝑥) 2] = ∑ ℎ̂(𝑠) 2 s . Thus for Boolean functions 𝑔 of range {+1,−1}, we have ∑ 𝑔̂(𝑠) 2 s = 1. So {𝑔̂(𝑠) 2 } can be viewed as a probability distribution over {0,1} 𝑛

An important concept is that of the influence of variables.Suppose we pick input x randomly by letting each xi=1 with probability p.Denote by up this probability distribution ofx.For a Boolean function h,define Infi(hp)=Pr [h(x)#h(xi)], x←p where xis the string obtained fromx by flipping the i-th variable.Intuitively,the influence of variable i is the probability that it matters for the function,when other input variables are drawn randomly.When p =1/2,we omit it and just write Infi(h). Let ei be the n-bit string where all bits except the i-th one are 0.We usually denote g(i)=g(e). Fact 2.3.If a Boolean function g is monotone,then Infi(g)=g(i). Proof Note that for monotone g, gy-io1)≠g(y-io(-1))台g(y-io1)=1,9(y-io(-1))=-1 Therfore, =Eulg(y)vi] =(Ev-g(-i01i)]-Ev-g(-:o(-1):)) Pry_ig(y-io1i)g(y-i(-1)i)] inf i(g) We finally need a fact about symmetry of influences. Fact.For graph properties,all the influences Inf(f)are the same. Proof.For any variables e and e',there is a permutation n over vertices to map e to e'.(More precisely,e =(i,j)and e'=(i',j),there is a permutation n over vertices to map i to i',and j to .)Then Infh,p)=,Prh(x)≠h(x]=Pr.[h(π(x)≠h(π(x) (h is invariant toπ) =Pr[h(π(x)≠h(π(x))](flipping xi&then actingπactingπ&then flipping x) x←p =P,h≠hx]=nfp).a

An important concept is that of the influence of variables. Suppose we pick input x randomly by letting each 𝑥𝑖 = 1 with probability p. Denote by 𝜇𝑝 this probability distribution of x. For a Boolean function h, define Inf𝑖 (ℎ,𝑝) = Pr 𝑥←𝜇𝑝 [ℎ(𝑥) ≠ ℎ(𝑥 𝑖 )], where 𝑥 𝑖 is the string obtained from x by flipping the i-th variable.Intuitively, the influence of variable i is the probability that it matters for the function, when other input variables are drawn randomly. When 𝑝 = 1/2, we omit it and just write Infi(ℎ). Let 𝑒𝑖 be the n-bit string where all bits except the i-th one are 0. We usually denote 𝑔̂(𝑖) = 𝑔̂(𝑒𝑖 ). Fact 2.3. If a Boolean function 𝑔 is monotone, then Infi (𝑔) = 𝑔̂(𝑖). We finally need a fact about symmetry of influences. Fact. For graph properties, all the influences Inf(𝑖,𝑗) (𝑓) are the same. Proof. For any variables e and e’, there is a permutation 𝜋 over vertices to map e to e’. (More precisely, 𝑒 = (𝑖,𝑗) and 𝑒 ′ = (𝑖′,𝑗′), there is a permutation 𝜋 over vertices to map 𝑖 to 𝑖’, and 𝑗 to 𝑗’.) Then Infi (ℎ,𝑝) = Pr 𝑥←𝜇𝑝 [ℎ(𝑥) ≠ ℎ(𝑥 𝑖)] = Pr 𝑥←𝜇𝑝 [ℎ(𝜋(𝑥)) ≠ ℎ(𝜋(𝑥 𝑖 ))] (h is invariant to 𝜋) = Pr 𝑥←𝜇𝑝 [ℎ(𝜋(𝑥)) ≠ ℎ(𝜋(𝑥) 𝑗 )] (flipping 𝑥𝑖 & then acting 𝜋 ↔ acting 𝜋 & then flipping 𝑥𝑗 ) = Pr 𝑥←𝜇𝑝 [ℎ(𝑥) ≠ ℎ(𝑥 𝑗 )] = Infj(ℎ,𝑝). □

3.Aanderaa-Karp-Rosenberg Conjecture Now we can prove the theorem. Theorem3.1.R(f)=R(N2/3)for all monotone graph properties f. The proof below is so short that it fits in one page.(You'd appreciate the proof more ifyou've ever tried aleng the appreaehesef read the original proofs by graph packing...)The lower bound is obtained by combining two inequalities: Var[f] R() pq·maxi Inf:(f,p) (1) and R(f)≥pq·lnff,p)2 (2) 3.1Eq.(1) The original proof was given in [OSSS05].Here we present a simplified proof given in [JZ11]. Take a best deterministic algorithm A with k queries and up-distributional error at most 6,and we'll construct anotheralgorithm A'with k-1 queries and up-distributional error at most 6+pq. max Infip).Then repeat thisk timesand we'll get an algorithm making no query and has error 6+kpqmax Infi(f,p).But an algorithm without any query can do nothing but guessing the most likely f(x);the error is e(Var[f]),and we then obtained the bound. A'is very simple.Suppose A's first query is xi,then A'is the same as A except that A'doesn't make this query.Instead,A'drawsxip as a guess of xi.With probability p2+q2=1-2pq,x= xigreat:A'guessed correctly.With probability 2pq,xxi bad.But how bad is it?The newly introduced error probability is at most Infi(f,p)by definition of influence!Thus we are done.(The above algorithm is randomized.An averaging argument gives a deterministic one.) 3.2Eq2) For simplicity,we prove the special case of p =1/2;the general case is similar.By Yao's minimax principle,it's enough to argue that for any fixed deterministic query algorithm,the expected number of queries is at least |Inf(g)=ig(i)(equality because of Fact 2.).Note that any decision tree partitions {+1,-1)n into monochromatic subcubes {C).A key (and very simple)observation is that the following two random processes give the same distribution of (y,C):1)Draw y E{+1,-1)n uniformly at random,and thenlet C be the cube containingy.2)Draw C with probability 2dim(c)-n

3. Aanderaa-Karp-Rosenberg Conjecture Now we can prove the theorem. Theorem 3.1. 𝑅(𝑓) = 𝛺(𝑁2/3 ) for all monotone graph properties f. The proof below is so short that it fits in one page. (You’d appreciate the proof more if you’ve ever tried along the approaches of read the original proofs by graph packing…) The lower bound is obtained by combining two inequalities: 𝑅(𝑓) ≥ Var[𝑓] 𝑝𝑞 ⋅ max𝑖 Inf𝑖 (𝑓, 𝑝) (1) and 𝑅(𝑓) ≥ 𝑝𝑞 ⋅ Inf(𝑓,𝑝) 2 (2) 3.1 Eq.(1) The original proof was given in [OSSS05]. Here we present a simplified proof given in [JZ11]. Take a best deterministic algorithm A with k queries and 𝜇𝑝-distributional error at most 𝛿, and we’ll construct another algorithm A’ with 𝑘 − 1 queries and 𝜇𝑝-distributional error at most 𝛿 + 𝑝𝑞 ⋅ max i Infi(𝑓,𝑝). Then repeat this k times and we’ll get an algorithm making no query and has error 𝛿 + 𝑘𝑝𝑞 ⋅ max i Infi(𝑓,𝑝). But an algorithm without any query can do nothing but guessing the most likely 𝑓(𝑥); the error is Θ(Var[𝑓]), and we then obtained the bound. A’ is very simple. Suppose A’s first query is 𝑥𝑖 , then A’ is the same as A except that A’ doesn’t make this query. Instead, A’ draws 𝑥𝑖 ′ ← 𝜇𝑝 as a guess of 𝑥𝑖 . With probability 𝑝 2 + 𝑞 2 = 1 − 2𝑝𝑞, 𝑥𝑖 ′ = 𝑥𝑖 , great: A’ guessed correctly. With probability 2𝑝𝑞, 𝑥𝑖 ′ ≠ 𝑥𝑖 , bad. But how bad is it? The newly introduced error probability is at most Infi(𝑓,𝑝) by definition of influence! Thus we are done. (The above algorithm is randomized. An averaging argument gives a deterministic one.) □ 3.2 Eq (2) For simplicity, we prove the special case of 𝑝 = 1/2; the general case is similar. By Yao's minimax principle, it's enough to argue that for any fixed deterministic query algorithm, the expected number of queries is at least |Inf(𝑔)| = | ∑𝑖𝑔̂(𝑖) | (equality because of Fact 2.). Note that any decision tree partitions {+1,−1} 𝑛 into monochromatic subcubes {C}. A key (and very simple) observation is that the following two random processes give the same distribution of (𝑦,𝐶): 1) Draw 𝑦 ∈ {+1,−1} 𝑛 uniformly at random, and then let C be the cube containing y. 2) Draw C with probability 2 𝑑𝑖𝑚(𝐶)−𝑛

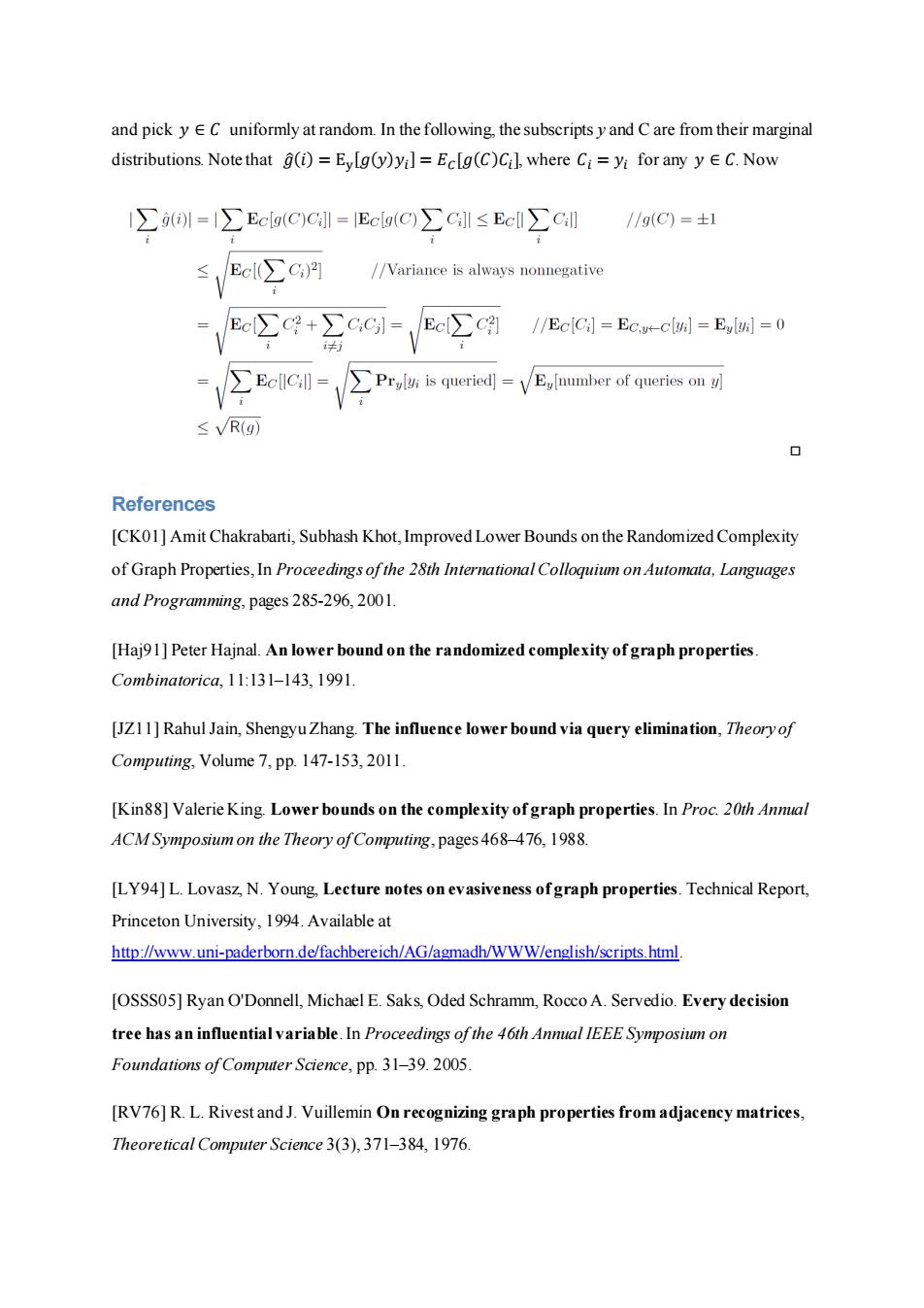

and pick y EC uniformly at random.In the following,the subscripts y and C are from their marginal distributions.Note that g(i)=Eylg(y)yi]=Ec[g(C)Cil,where Ci=yi for any y EC.Now ∑()=I∑Ecg(C)Cl=Eel(C)∑Cl≤Ecl∑C /g(C)=±1 ≤,Ecl∑cA //Variance is always nonnegative Ec∑C?+∑C,Cl= /Ec∑cH //Ec[Ci]EC.u+-clui]Eui]=0 ≠ ∑Ec[lC:l] Prylyi is queried]=VEnumber of queries on y) ≤VR(g References [CK01]Amit Chakrabarti,Subhash Khot,Improved Lower Bounds on the Randomized Complexity of Graph Properties,In Proceedings ofthe 28th International Colloquium on Automata,Languages and Programming,pages 285-296,2001. [Haj91]Peter Hajnal.An lower bound on the randomized complexity of graph properties Combinatorica,11:131-143,1991 [JZ11]Rahul Jain,Shengyu Zhang.The influence lower bound via query elimination,Theory of Computing,Volume 7,pp.147-153,2011. [Kin88]Valerie King.Lower bounds on the complexity of graph properties.In Proc.20th Annal ACM Symposium on the Theory ofComputing,pages 468-476,1988. [LY94]L.Lovasz,N.Young,Lecture notes on evasiveness of graph properties.Technical Report, Princeton University,1994.Available at http://www.uni-paderborn.de/fachbereich/AG/agmadh/WWW/english/scripts.html. [OSSS05]Ryan O'Donnell,Michael E.Saks,Oded Schramm,Rocco A.Servedio.Every decision tree has an influential variable.In Proceedings of the 46th Annual IEEE Symposium on Foundations ofComputer Science,pp.31-39.2005. [RV76]R.L.Rivest and J.Vuillemin On recognizing graph properties from adjacency matrices, Theoretical Computer Science 3(3),371-384,1976

and pick 𝑦 ∈ 𝐶 uniformly at random. In the following, the subscripts y and C are from their marginal distributions. Note that 𝑔̂(𝑖) = Ey [𝑔(𝑦)𝑦𝑖 ] = 𝐸𝐶[𝑔(𝐶)𝐶𝑖 ], where 𝐶𝑖 = 𝑦𝑖 for any 𝑦 ∈ 𝐶. Now □ References [CK01] Amit Chakrabarti, Subhash Khot, Improved Lower Bounds on the Randomized Complexity of Graph Properties, In Proceedings of the 28th International Colloquium on Automata, Languages and Programming, pages 285-296, 2001. [Haj91] Peter Hajnal. An lower bound on the randomized complexity of graph properties. Combinatorica, 11:131–143, 1991. [JZ11] Rahul Jain, Shengyu Zhang. The influence lower bound via query elimination, Theory of Computing, Volume 7, pp. 147-153, 2011. [Kin88] Valerie King. Lower bounds on the complexity of graph properties. In Proc. 20th Annual ACM Symposium on the Theory of Computing, pages 468–476, 1988. [LY94] L. Lovasz, N. Young, Lecture notes on evasiveness of graph properties. Technical Report, Princeton University, 1994. Available at http://www.uni-paderborn.de/fachbereich/AG/agmadh/WWW/english/scripts.html. [OSSS05] Ryan O'Donnell, Michael E. Saks, Oded Schramm, Rocco A. Servedio. Every decision tree has an influential variable. In Proceedings of the 46th Annual IEEE Symposium on Foundations of Computer Science, pp. 31–39. 2005. [RV76] R. L. Rivest and J. Vuillemin On recognizing graph properties from adjacency matrices, Theoretical Computer Science 3(3), 371–384, 1976

[Yao87]Andrew C.Yao.Lower bounds to randomized algorithms for graph properties.In Proc. 28th Annual IEEE Symposium on Foundations ofComputer Science,pages 393-400,1987

[Yao87] Andrew C. Yao. Lower bounds to randomized algorithms for graph properties. In Proc. 28th Annual IEEE Symposium on Foundations of Computer Science, pages 393–400, 1987