LECTURE 3-MEMORY AND DATA LOCALITY


University of Electronic Science and Technology of China

▪ Memory access efficiency
▪ Tiled Matrix Multiplication
▪ Tiled Matrix Multiplication Kernel
▪ Handling Boundary Conditions in Tiling
▪ Tiled Kernel for Arbitrary Matrix Dimensions

OBJECTIVE
▪ To learn to effectively use the CUDA memory types in a parallel program
  ▪ Importance of memory access efficiency
  ▪ Registers, shared memory, global memory
  ▪ Scope and lifetime

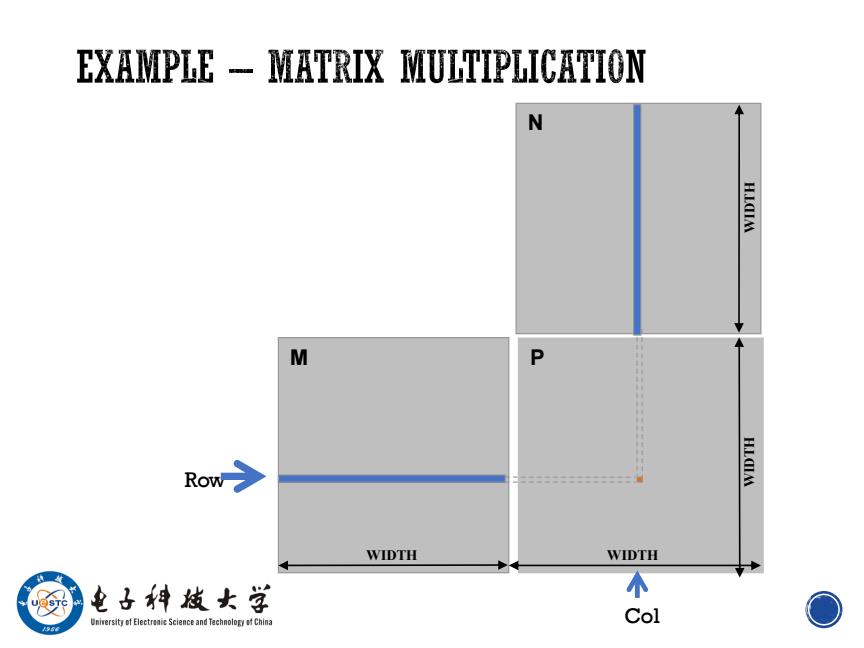

EXAMPLE: MATRIX MULTIPLICATION
[Figure: P = M × N for square WIDTH × WIDTH matrices. The element of P at (Row, Col) is computed from row Row of M and column Col of N.]
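The figure encodes the definition that the basic kernel implements: each element of P is the dot product of one row of M with one column of N. The equation below writes this out using the row-major layout the kernel assumes, where element (r, c) is stored at index r·Width + c.

$$
P[\mathit{Row}\cdot\mathit{Width}+\mathit{Col}] \;=\; \sum_{k=0}^{\mathit{Width}-1} M[\mathit{Row}\cdot\mathit{Width}+k]\,\times\,N[k\cdot\mathit{Width}+\mathit{Col}]
$$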

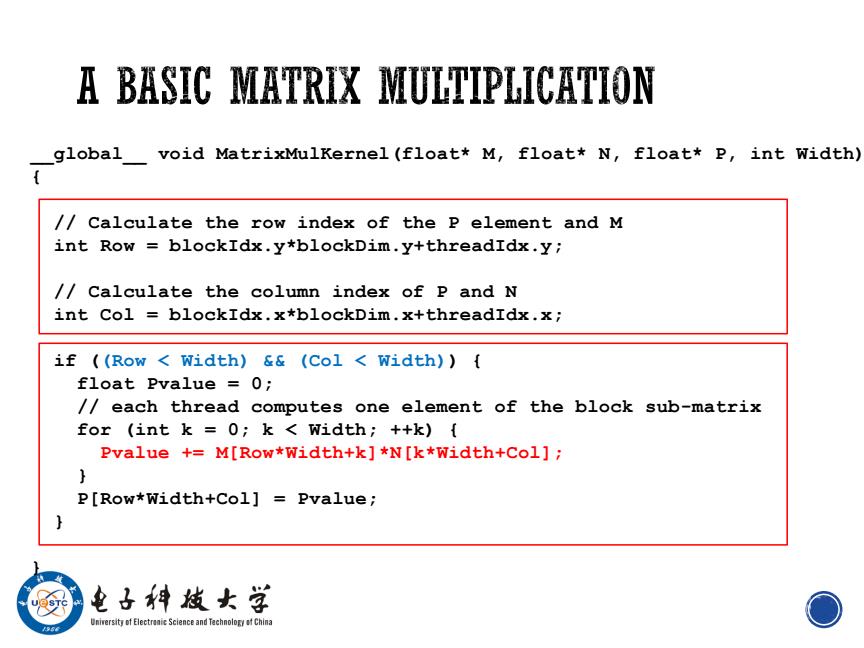

A BASIC MATRIX MULTIPLICATION

```cuda
__global__ void MatrixMulKernel(float* M, float* N, float* P, int Width) {
    // Calculate the row index of the P element and M
    int Row = blockIdx.y*blockDim.y+threadIdx.y;
    // Calculate the column index of P and N
    int Col = blockIdx.x*blockDim.x+threadIdx.x;

    if ((Row < Width) && (Col < Width)) {
        float Pvalue = 0;
        // each thread computes one element of the block sub-matrix
        for (int k = 0; k < Width; ++k) {
            Pvalue += M[Row*Width+k]*N[k*Width+Col];
        }
        P[Row*Width+Col] = Pvalue;
    }
}
```
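The following is a minimal host-side sketch for running the basic kernel. It assumes a CUDA-capable GPU and `nvcc`. Several details are illustrative assumptions and do not come from the lecture: the helper names `matMulOnDevice` and `checkMatMul`, the square `blockWidth × blockWidth` thread block (at most 32 × 32 = 1,024 threads), and the test data. The kernel is repeated so the file compiles on its own. The grid size is rounded up, so a `Width` that is not a multiple of `blockWidth` exercises the `(Row < Width) && (Col < Width)` check.

```cuda
#include <cuda_runtime.h>
#include <math.h>
#include <stdlib.h>

// Kernel from the slide, repeated so this file is self-contained.
__global__ void MatrixMulKernel(float* M, float* N, float* P, int Width) {
    int Row = blockIdx.y * blockDim.y + threadIdx.y;
    int Col = blockIdx.x * blockDim.x + threadIdx.x;
    if ((Row < Width) && (Col < Width)) {
        float Pvalue = 0;
        for (int k = 0; k < Width; ++k) {
            Pvalue += M[Row * Width + k] * N[k * Width + Col];
        }
        P[Row * Width + Col] = Pvalue;
    }
}

// Hypothetical host wrapper: copies M and N to global memory, launches one
// thread per element of P, copies P back. Returns the first CUDA error seen.
cudaError_t matMulOnDevice(const float* M, const float* N, float* P,
                           int Width, int blockWidth) {
    size_t bytes = (size_t)Width * Width * sizeof(float);
    float *d_M = NULL, *d_N = NULL, *d_P = NULL;
    cudaError_t err = cudaMalloc((void**)&d_M, bytes);
    if (err == cudaSuccess) err = cudaMalloc((void**)&d_N, bytes);
    if (err == cudaSuccess) err = cudaMalloc((void**)&d_P, bytes);
    if (err == cudaSuccess) err = cudaMemcpy(d_M, M, bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemcpy(d_N, N, bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) {
        // Round the grid up so partial edge blocks cover the last rows/columns.
        dim3 dimBlock(blockWidth, blockWidth);
        dim3 dimGrid((Width + blockWidth - 1) / blockWidth,
                     (Width + blockWidth - 1) / blockWidth);
        MatrixMulKernel<<<dimGrid, dimBlock>>>(d_M, d_N, d_P, Width);
        err = cudaGetLastError();
    }
    // cudaMemcpy waits for the kernel and reports errors from its execution.
    if (err == cudaSuccess) err = cudaMemcpy(P, d_P, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_M);  // cudaFree on a null pointer is a no-op
    cudaFree(d_N);
    cudaFree(d_P);
    return err;
}

// Hypothetical self-check: compares every element of P with a sequential
// CPU loop over the same formula.
bool checkMatMul(int Width, int blockWidth) {
    size_t count = (size_t)Width * Width;
    float* M = (float*)malloc(count * sizeof(float));
    float* N = (float*)malloc(count * sizeof(float));
    float* P = (float*)malloc(count * sizeof(float));
    bool ok = (M != NULL) && (N != NULL) && (P != NULL);
    for (size_t i = 0; ok && i < count; ++i) {
        M[i] = (float)(i % 7) - 3.0f;  // small values keep sums exact for moderate Width
        N[i] = (float)(i % 5) * 0.5f;
    }
    if (ok) ok = (matMulOnDevice(M, N, P, Width, blockWidth) == cudaSuccess);
    for (int r = 0; ok && r < Width; ++r) {
        for (int c = 0; ok && c < Width; ++c) {
            float ref = 0.0f;
            for (int k = 0; k < Width; ++k) ref += M[r * Width + k] * N[k * Width + c];
            ok = fabsf(ref - P[r * Width + c]) <= 1e-3f * (1.0f + fabsf(ref));
        }
    }
    free(M);
    free(N);
    free(P);
    return ok;
}
```

For example, `checkMatMul(37, 16)` launches a 3 × 3 grid of 16 × 16 blocks. The threads with Row or Col of 37 or more do nothing.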

HOW ABOUT PERFORMANCE ON A GPU?
▪ CGMA ratio (Compute to Global Memory Access ratio): the number of floating-point calculations performed for each access to the global memory within a region of a CUDA kernel.
▪ The bigger, the better.

What is the CGMA of the basic matrix multiplication kernel?
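Counting the operations in the inner loop of `MatrixMulKernel` answers the question. The count below treats every load of M and N as a global memory access, and it assumes `Pvalue` is kept in a register.

$$
\text{per iteration } k:\quad
\underbrace{M[\mathit{Row}\cdot\mathit{Width}+k],\; N[k\cdot\mathit{Width}+\mathit{Col}]}_{2\ \text{global loads}}
\qquad
\underbrace{\times,\; +}_{2\ \text{FLOPs}}
$$

$$
\text{CGMA}_{\text{thread}} \;=\; \frac{2\,\mathit{Width}\ \text{FLOPs}}{2\,\mathit{Width}\ \text{loads} + 1\ \text{store to } P} \;\approx\; 1
$$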

CGMA OF BASIC MATRIX MULTIPLICATION
▪ All threads access global memory for their input matrix elements
  ▪ One memory access (4 bytes) per floating-point operation: each loop iteration loads one element of M and one of N, and performs one multiply and one add
  ▪ CGMA ratio = 1
  ▪ 4 B/s of memory bandwidth needed per FLOPS
▪ Assume a GPU with
  ▪ Peak floating-point rate of 1,500 GFLOPS and 200 GB/s DRAM bandwidth
  ▪ 4 × 1,500 = 6,000 GB/s required to achieve the peak FLOPS rating
  ▪ The 200 GB/s memory bandwidth limits execution to 50 GFLOPS (200 / 4)
▪ This limits the execution rate to 3.3% (50/1,500) of the peak floating-point execution rate of the device!
▪ Need to drastically cut down memory accesses to get close to 1,500 GFLOPS
  ▪ To achieve the peak 1,500 GFLOPS, we need CGMA = 30 (1,500 / 50)
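The bullet arithmetic generalizes to a simple bound. The memory system delivers at most bandwidth / 4 B single-precision values per second, and each value fetched supports CGMA floating-point operations. The sketch below is plain host code, which is valid in a `.cu` file. The function names are hypothetical. Taking the minimum with the compute peak is an added assumption: it treats the kernel as limited by whichever resource saturates first.

```cuda
// Hypothetical helpers for the bandwidth-bound estimate (host code only).

// GFLOPS the memory system can feed: (GB/s / bytes per access) gives
// billions of accesses per second; each access supports `cgma` FLOPs.
double memoryBoundGflops(double bandwidthGBs, double cgma, double bytesPerAccess) {
    return bandwidthGBs / bytesPerAccess * cgma;
}

// Attainable rate: the smaller of the compute peak and the memory bound.
double attainableGflops(double peakGflops, double bandwidthGBs,
                        double cgma, double bytesPerAccess) {
    double memBound = memoryBoundGflops(bandwidthGBs, cgma, bytesPerAccess);
    return memBound < peakGflops ? memBound : peakGflops;
}

// CGMA at which the memory bound reaches the compute peak.
double requiredCgma(double peakGflops, double bandwidthGBs, double bytesPerAccess) {
    return peakGflops / (bandwidthGBs / bytesPerAccess);
}
```

With the slide's numbers, `attainableGflops(1500.0, 200.0, 1.0, 4.0)` evaluates to 50 and `requiredCgma(1500.0, 200.0, 4.0)` evaluates to 30.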

QUESTION
▪ How to improve memory access efficiency?
  ▪ Increase the calculation performed per memory access
  ▪ Improve the utilization of data once it has been loaded
  ▪ Utilize the memory hierarchy
    ▪ Global memory
    ▪ Shared memory
    ▪ Register file

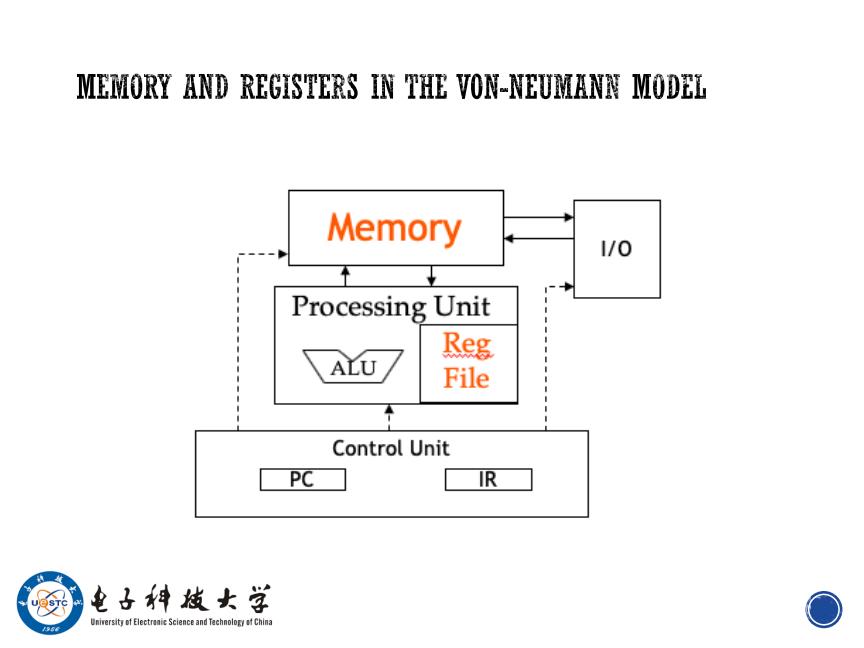

MEMORY AND REGISTERS IN THE VON NEUMANN MODEL
[Figure: Memory and I/O connected to a Processing Unit (ALU, Reg File) and a Control Unit (PC, IR)]

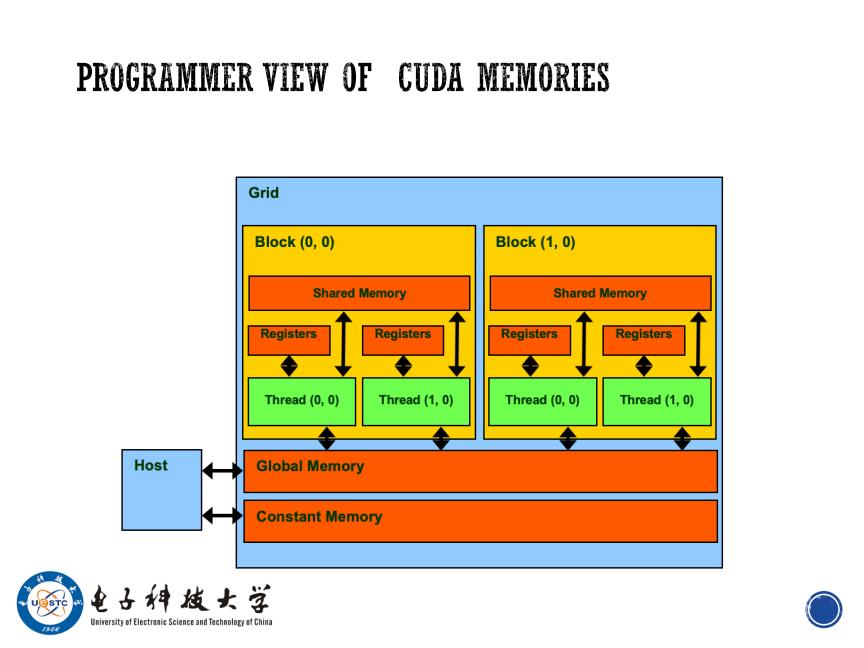

PROGRAMMER VIEW OF CUDA MEMORIES
[Figure: a Grid containing Block (0,0) and Block (1,0). Each block has its own Shared Memory, and each thread (Thread (0,0), Thread (1,0)) has its own Registers. All blocks and the Host access Global Memory and Constant Memory.]
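Here is a minimal sketch of how CUDA C declarations map onto the memory spaces in the diagram. The kernel `memorySpacesDemo`, the host helper `runMemorySpacesDemo`, the 256-thread block and the neighbour-sum computation are all hypothetical illustrations. The mapping works as follows:
▪ Automatic scalar variables such as `i` normally live in registers, although the compiler may spill them.
▪ A `__shared__` array gets one copy per block.
▪ A `__constant__` variable is written by the host and read by every thread.
▪ Buffers from `cudaMalloc` live in global memory.

```cuda
#include <cuda_runtime.h>

// Constant memory: one copy for the application's lifetime, written by the
// host, read-only inside kernels.
__constant__ float scaleFactor;

// Hypothetical kernel: out[i] = scaleFactor * (in[i] + the value loaded by
// the next thread of the same block).
__global__ void memorySpacesDemo(const float* in, float* out, int n) {
    // Shared memory: one copy per block, visible to all threads of that
    // block, exists only during the kernel. Assumes blockDim.x <= 256.
    __shared__ float tile[256];

    // Automatic scalar: normally a register, private to this thread.
    int i = blockIdx.x * blockDim.x + threadIdx.x;

    // Global memory (in/out come from cudaMalloc) -> shared memory.
    tile[threadIdx.x] = (i < n) ? in[i] : 0.0f;
    __syncthreads();  // all threads of the block have written tile[]

    // Read a value that a different thread of the same block wrote.
    float neighbour = tile[(threadIdx.x + 1) % blockDim.x];
    if (i < n) {
        out[i] = scaleFactor * (tile[threadIdx.x] + neighbour);  // -> global memory
    }
}

// Hypothetical host helper; returns the first CUDA error encountered.
cudaError_t runMemorySpacesDemo(const float* h_in, float* h_out, int n, float scale) {
    const int threads = 256;  // must not exceed the size of tile[]
    size_t bytes = (size_t)n * sizeof(float);
    float *d_in = NULL, *d_out = NULL;

    // The host writes constant memory through the symbol, not a pointer.
    cudaError_t err = cudaMemcpyToSymbol(scaleFactor, &scale, sizeof(float));
    if (err == cudaSuccess) err = cudaMalloc((void**)&d_in, bytes);
    if (err == cudaSuccess) err = cudaMalloc((void**)&d_out, bytes);
    if (err == cudaSuccess) err = cudaMemcpy(d_in, h_in, bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) {
        memorySpacesDemo<<<(n + threads - 1) / threads, threads>>>(d_in, d_out, n);
        err = cudaGetLastError();
    }
    if (err == cudaSuccess) err = cudaMemcpy(h_out, d_out, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_in);  // freeing a null pointer is a no-op
    cudaFree(d_out);
    return err;
}
```

Running it on 300 inputs launches two blocks. Each thread's neighbour read stays inside its own block's shared array. That is why the last thread of block 0 wraps around to thread 0 instead of reading block 1's data: shared memory is not visible across blocks.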