Solving Problems by Searching 吉建民 USTC jianminOustc.edu.cn 2022年3月6日 4口◆4⊙t1三1=,¥9QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Solving Problems by Searching 吉建民 USTC jianmin@ustc.edu.cn 2022 年 3 月 6 日

Used Materials Disclaimer:本课件采用了S.Russell and P.Norvig's Artificial Intelligence-A modern approach slides,徐林莉老师课件和其他网 络课程课件,也采用了GitHub中开源代码,以及部分网络博客 内容 口卡4三4色进分QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Used Materials Disclaimer: 本课件采用了 S. Russell and P. Norvig’s Artificial Intelligence –A modern approach slides, 徐林莉老师课件和其他网 络课程课件,也采用了 GitHub 中开源代码,以及部分网络博客 内容

回顾 Agents interact with environments through actuators and sensors The performance measure evaluates the environment sequence A perfectly rational agent maximizes expected performance PEAS descriptions define task environments Environments are categorized along several dimensions: observable?deterministic?episodic?static?discrete? single-agent? Several basic agent architectures exist: reflex,reflex with state,goal-based,utility-based 4口◆4⊙t1三1=,¥9QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 回顾 ▶ Agents interact with environments through actuators and sensors ▶ The performance measure evaluates the environment sequence ▶ A perfectly rational agent maximizes expected performance ▶ PEAS descriptions define task environments ▶ Environments are categorized along several dimensions: ▶ observable? deterministic? episodic? static? discrete? single-agent? ▶ Several basic agent architectures exist: ▶ reflex, reflex with state, goal-based, utility-based

Table of Contents Problem-solving Agents Searching for Solutions Uninformed Search Strategies 口卡4三,4色,进分QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Table of Contents Problem-solving Agents Searching for Solutions Uninformed Search Strategies

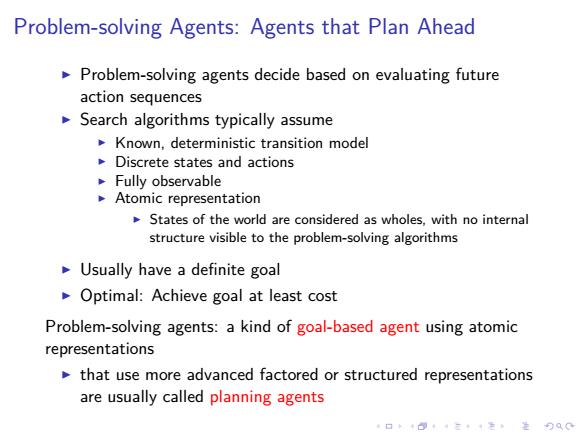

Problem-solving Agents:Agents that Plan Ahead Problem-solving agents decide based on evaluating future action sequences Search algorithms typically assume Known,deterministic transition model Discrete states and actions Fully observable Atomic representation States of the world are considered as wholes,with no internal structure visible to the problem-solving algorithms Usually have a definite goal Optimal:Achieve goal at least cost Problem-solving agents:a kind of goal-based agent using atomic representations that use more advanced factored or structured representations are usually called planning agents 日◆4日4三+1=,¥9QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Problem-solving Agents: Agents that Plan Ahead ▶ Problem-solving agents decide based on evaluating future action sequences ▶ Search algorithms typically assume ▶ Known, deterministic transition model ▶ Discrete states and actions ▶ Fully observable ▶ Atomic representation ▶ States of the world are considered as wholes, with no internal structure visible to the problem-solving algorithms ▶ Usually have a definite goal ▶ Optimal: Achieve goal at least cost Problem-solving agents: a kind of goal-based agent using atomic representations ▶ that use more advanced factored or structured representations are usually called planning agents

从例子开始:Romania ▣Oradea Neamt 75 白Zerind 151 Arad 140 92 Sibiu 99 Fagaras 118 4 80 ▣Vaslui Timisoara Rimnicu Vilcea 142 110 口Lugoj 97 Pitesti 211 70 ▣ 98 Mehadia 146 101 85 ▣Hirsova Orziceni 75 138 86 Bucharest Dobreta自 120 OCraiova 90 Eforie Giurgiu 如何找到一条路径,从Arad前往Bucharest? 口卡+四t4二老正)QG

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 从例子开始: Romania 如何找到一条路径,从 Arad 前往 Bucharest?

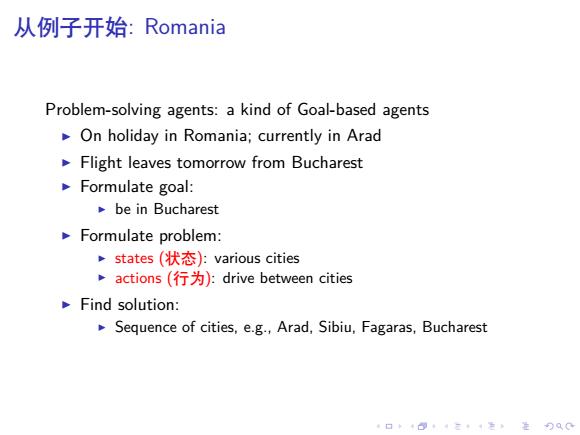

从例子开始:Romania Problem-solving agents:a kind of Goal-based agents On holiday in Romania;currently in Arad Flight leaves tomorrow from Bucharest Formulate goal: be in Bucharest Formulate problem: ,states(状态):various cities ,actions(行为:drive between cities Find solution: Sequence of cities,e.g.,Arad,Sibiu,Fagaras,Bucharest 4口◆464三1=,¥9QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 从例子开始: Romania Problem-solving agents: a kind of Goal-based agents ▶ On holiday in Romania; currently in Arad ▶ Flight leaves tomorrow from Bucharest ▶ Formulate goal: ▶ be in Bucharest ▶ Formulate problem: ▶ states (状态): various cities ▶ actions (行为): drive between cities ▶ Find solution: ▶ Sequence of cities, e.g., Arad, Sibiu, Fagaras, Bucharest

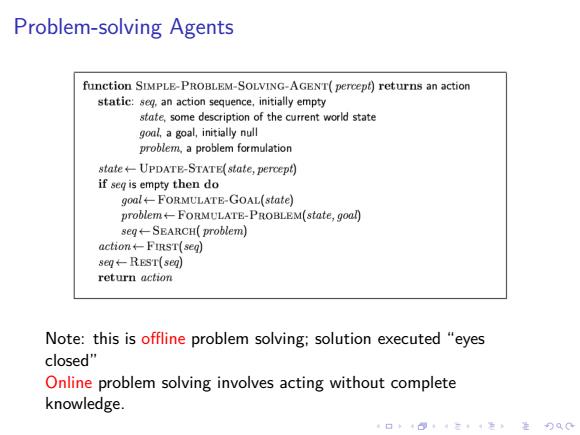

Problem-solving Agents function SIMPLE-PROBLEM-SOLVING-AGENT(percept)returns an action static:seg,an action sequence,initially empty state,some description of the current world state goal a goal,initially null problem,a problem formulation state+UPDATE-STATE(state,percept) if seq is empty then do goal+FORMULATE-GOAL(state) problem+FORMULATE-PROBLEM(state,goal) seg←SEARCH(problem) action←FIRST(se] sem←REsT(seq return action Note:this is offline problem solving:solution executed "eyes closed" Online problem solving involves acting without complete knowledge. 口卡回·三4色,是分Q0

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Problem-solving Agents Note: this is offline problem solving; solution executed “eyes closed” Online problem solving involves acting without complete knowledge

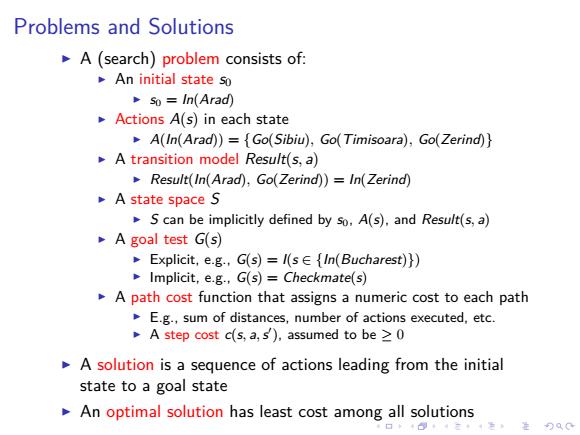

Problems and Solutions A (search)problem consists of: An initial state so so In(Arad) Actions A(s)in each state A(In(Arad))={Go(Sibiu),Go(Timisoara),Go(Zerind)} A transition model Result(s,a) Result(In(Arad),Go(Zerind))=In(Zerind) A state space S S can be implicitly defined by so.A(s).and Result(s,a) A goal test G(s) ·Explicit,eg,G(s)=I(s∈{In(Bucharest)}) Implicit,e.g.,G(s)=Checkmate(s) A path cost function that assigns a numeric cost to each path E.g.,sum of distances,number of actions executed,etc. A step cost c(s,a,s),assumed to be >0 A solution is a sequence of actions leading from the initial state to a goal state An optimal solution has least cost among all solutions

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Problems and Solutions ▶ A (search) problem consists of: ▶ An initial state s0 ▶ s0 = In(Arad) ▶ Actions A(s) in each state ▶ A(In(Arad)) = {Go(Sibiu), Go(Timisoara), Go(Zerind)} ▶ A transition model Result(s, a) ▶ Result(In(Arad), Go(Zerind)) = In(Zerind) ▶ A state space S ▶ S can be implicitly defined by s0, A(s), and Result(s, a) ▶ A goal test G(s) ▶ Explicit, e.g., G(s) = I(s ∈ {In(Bucharest)}) ▶ Implicit, e.g., G(s) = Checkmate(s) ▶ A path cost function that assigns a numeric cost to each path ▶ E.g., sum of distances, number of actions executed, etc. ▶ A step cost c(s, a,s ′ ), assumed to be ≥ 0 ▶ A solution is a sequence of actions leading from the initial state to a goal state ▶ An optimal solution has least cost among all solutions

Selecting a State Space Real world is absurdly complex ,state space must be abstracted(抽象化)for problem solving (Abstract)state set of real states (Abstract)action complex combination of real actions e.g.,Go(Zerind)EA(Arad)represents a complex set of possible routes,detours,rest stops,etc. For guaranteed realizability,any real state In(Arad)must get to some real state In(Zerind) (Abstract)solution set of real paths that are solutions in the real world Each abstract action should be "easier"than the original problem 口卡4三4色进分QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Selecting a State Space ▶ Real world is absurdly complex ▶ state space must be abstracted (抽象化) for problem solving ▶ (Abstract) state = set of real states ▶ (Abstract) action = complex combination of real actions ▶ e.g., Go(Zerind) ∈ A(Arad) represents a complex set of possible routes, detours, rest stops, etc. ▶ For guaranteed realizability, any real state In(Arad) must get to some real state In(Zerind) ▶ (Abstract) solution = set of real paths that are solutions in the real world ▶ Each abstract action should be “easier” than the original problem