Outline
• How to collect data from the Web?
– Build a Crawler
– High Performance Web Crawler
– Distributed Crawling/Incremental Crawling
– State-of-the-art technology

CCF-ADL at Zhengzhou University, June 25-27, 2010

Build a Crawler!


[Screenshot: the Peking University home page, http://www.pku.edu.cn/, in Mozilla Firefox]

Which of these are links?

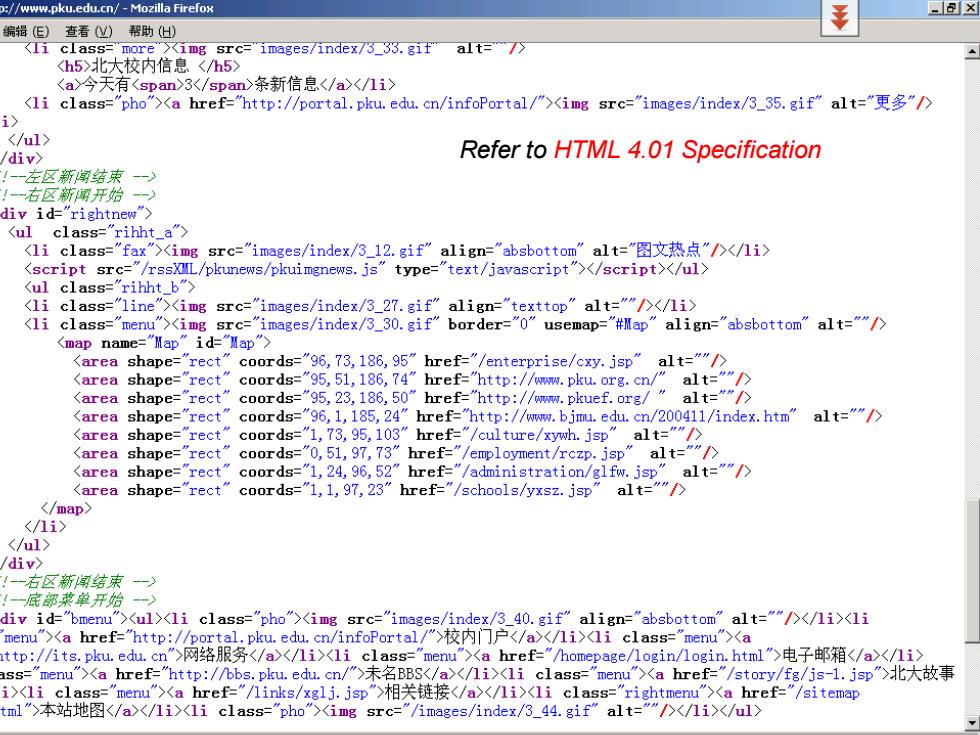

[Screenshot: HTML source of http://www.pku.edu.cn/ in Mozilla Firefox. The links appear as href attributes of a and area elements, e.g. href="http://portal.pku.edu.cn/infoPortal/" and href="/enterprise/cxy.jsp"]

Refer to HTML 4.01 Specification
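To make the "which are links?" question concrete, here is a minimal sketch of link extraction using Python's standard `html.parser`. It assumes that only the `href` attributes of `a` and `area` elements count as links (the two element types visible in the page source). The names `LinkExtractor`, `extract_links`, and `SAMPLE` are hypothetical, and the sample markup is a shortened, tidied fragment, not the real page.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from the href attributes of <a> and <area> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        # Assumption: only <a> and <area> carry hyperlinks we care about.
        if tag in ("a", "area"):
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links such as "/enterprise/cxy.jsp".
                    self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return the list of absolute link targets found in html."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


# Shortened, hand-tidied fragment for illustration only.
SAMPLE = """
<div id="rightnew">
  <a href="http://portal.pku.edu.cn/infoPortal/"><img src="images/index/3_35.gif" alt="" /></a>
  <area shape="rect" coords="96,73,186,95" href="/enterprise/cxy.jsp" alt="" />
</div>
"""

if __name__ == "__main__":
    for link in extract_links(SAMPLE, "http://www.pku.edu.cn/"):
        print(link)
```

The `img src` attribute is deliberately ignored here. A real crawler would also have to decide how to treat frames, scripts, and other URL-bearing attributes listed in the HTML 4.01 Specification.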

GET Method in HTTP
Refer to RFC 2616 and "HTTP Made Really Easy".
Figure 2.4: Example of the use of the GET method in an HTTP 1.1 session

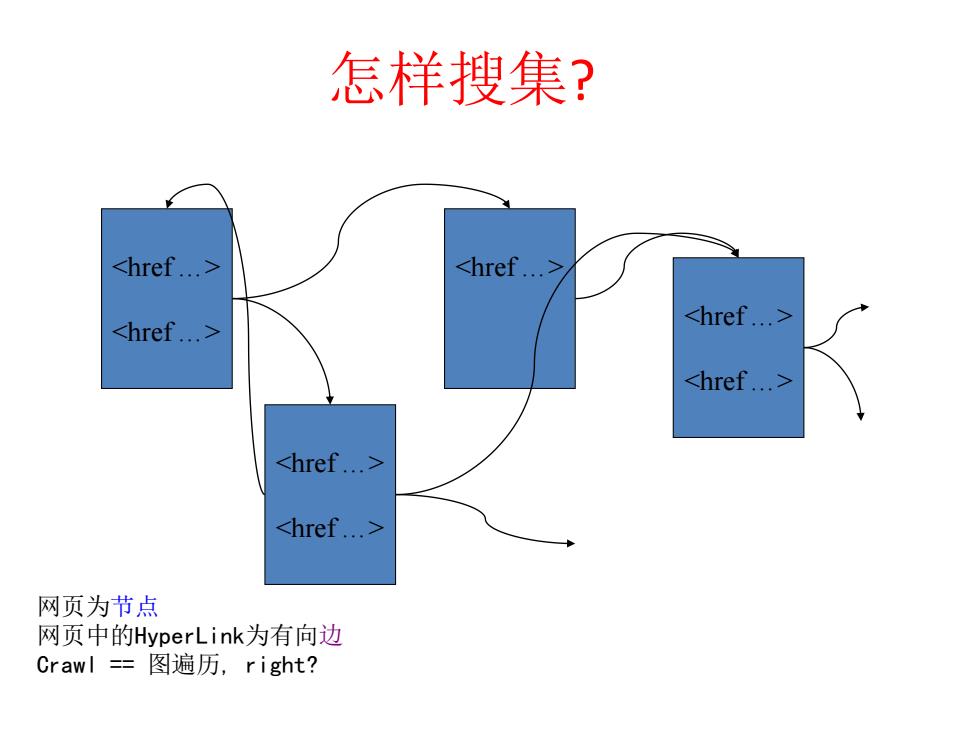
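As a rough illustration of what a GET exchange looks like on the wire, the sketch below builds an HTTP/1.1 request by hand and parses the status line of a response. The function names (`build_get_request`, `parse_status_line`, `fetch`) are hypothetical, and `Connection: close` is an assumption that keeps the read loop simple. A production crawler would normally use an HTTP library rather than raw sockets.

```python
import socket


def build_get_request(host, path="/"):
    """Return the bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",          # the Host header is mandatory in HTTP/1.1
        "Connection: close",      # assumption: ask the server to close when done
        "",                       # blank line terminates the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_status_line(response):
    """Split the first response line into (version, status code, reason phrase)."""
    status_line = response.split(b"\r\n", 1)[0].decode("iso-8859-1")
    parts = status_line.split(" ", 2)
    reason = parts[2] if len(parts) > 2 else ""
    return parts[0], int(parts[1]), reason


def fetch(host, path="/", port=80, timeout=10.0):
    """Send the request over TCP and return the raw response bytes."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:          # server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


if __name__ == "__main__":
    print(build_get_request("www.pku.edu.cn").decode("ascii"))
```

Calling `fetch("www.pku.edu.cn")` would need network access, so only the request builder runs in the example.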

How do we crawl?
• Web pages are the nodes
• Hyperlinks in the pages are the directed edges
• Crawl == graph traversal, right?

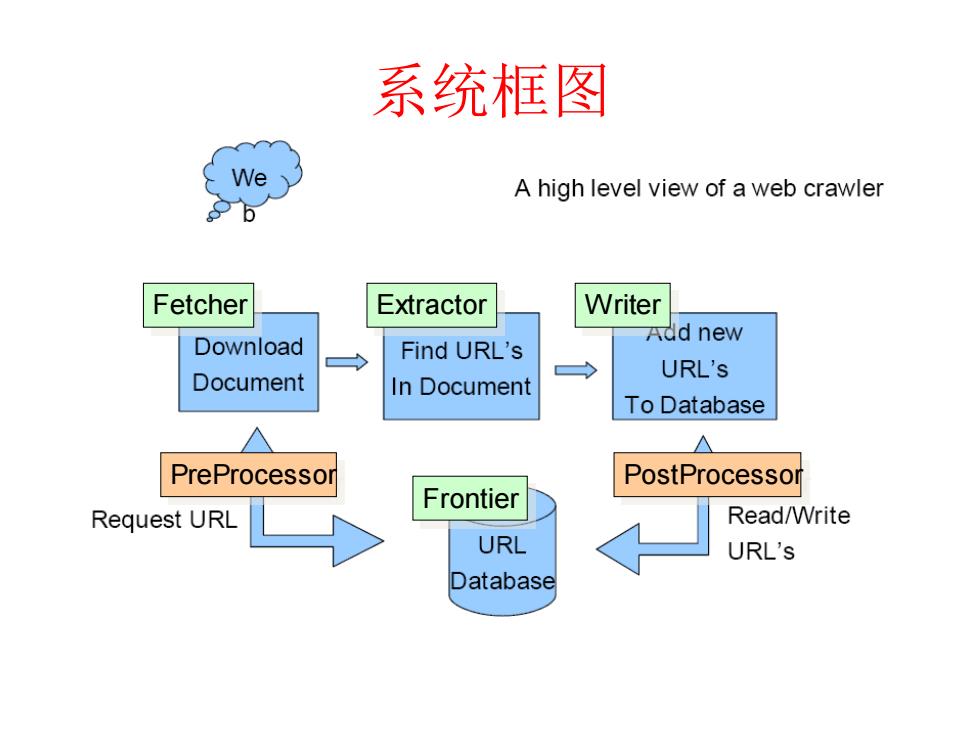

System block diagram: a high-level view of a web crawler
• Frontier: requests URLs; reads/writes the URL database
• Fetcher: downloads the document
• Extractor: finds URLs in the document
• Writer: adds new URLs to the database
• PreProcessor
• PostProcessor

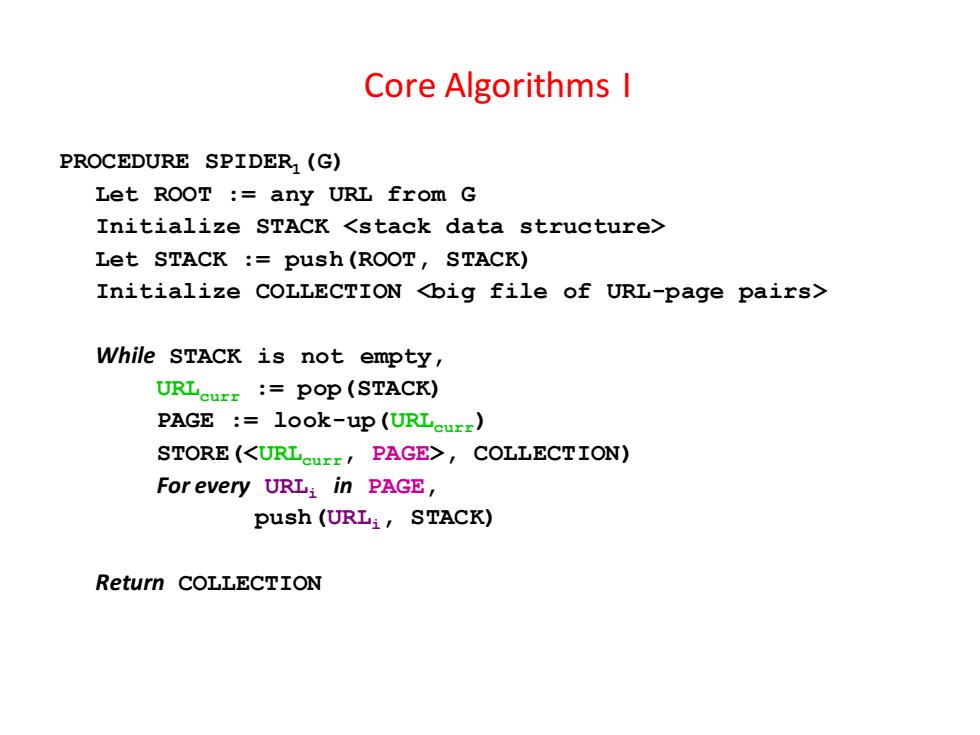
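One possible reading of the block diagram, sketched as code. Everything here is an assumption made for illustration: the in-memory `FAKE_WEB` dictionary stands in for real HTTP downloads, the class and function names are hypothetical, and the PreProcessor and PostProcessor stages are left out because the diagram does not say what they do.

```python
from collections import deque

# Hypothetical stand-in for the Web: URL -> list of out-links on that page.
FAKE_WEB = {
    "http://a.example/": ["http://b.example/", "http://c.example/"],
    "http://b.example/": ["http://a.example/"],
    "http://c.example/": [],
}


class Frontier:
    """URL database: URLs waiting to be fetched plus every URL seen so far."""

    def __init__(self, seeds):
        self.queue = deque()
        self.seen = set()
        for url in seeds:
            self.add(url)

    def add(self, url):
        # Write a URL to the database only if it is new.
        if url not in self.seen:
            self.seen.add(url)
            self.queue.append(url)

    def next_url(self):
        # Hand out the next URL to request, or None when nothing is left.
        return self.queue.popleft() if self.queue else None


def fetcher(url):
    """Download the document (here: look it up in FAKE_WEB)."""
    return FAKE_WEB.get(url, [])


def extractor(document):
    """Find URLs in the document (here the document already is a link list)."""
    return list(document)


def writer(frontier, urls):
    """Add newly found URLs to the URL database."""
    for url in urls:
        frontier.add(url)


def crawl(seeds):
    """Run Frontier -> Fetcher -> Extractor -> Writer until no URLs remain."""
    frontier = Frontier(seeds)
    fetched = []
    while (url := frontier.next_url()) is not None:
        document = fetcher(url)
        writer(frontier, extractor(document))
        fetched.append(url)
    return fetched


if __name__ == "__main__":
    print(crawl(["http://a.example/"]))
```

Keeping the stages as separate functions mirrors the diagram: each one could later be swapped for a real downloader, HTML parser, or persistent store without changing the loop.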

Core Algorithms I
PROCEDURE SPIDER1(G)
    Let ROOT := any URL from G
    Initialize STACK
    Let STACK := push(ROOT, STACK)
    Initialize COLLECTION
    While STACK is not empty,
        URLcurr := pop(STACK)
        PAGE := look-up(URLcurr)
        STORE(<URLcurr, PAGE>, COLLECTION)
        For every URLi in PAGE,
            push(URLi, STACK)
    Return COLLECTION

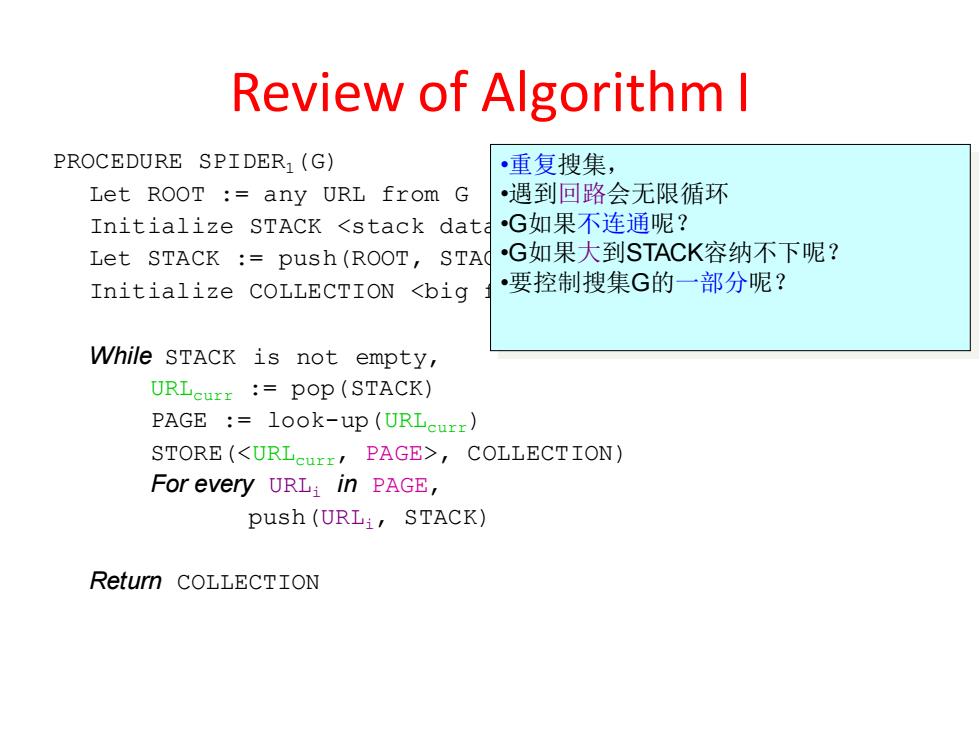
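A direct, deliberately naive transcription of SPIDER1 into Python may help when reasoning about its behaviour. The graph is assumed to be a dictionary mapping each URL to its out-links, so `look-up` becomes a dictionary access. The `max_steps` parameter is not part of the pseudocode: it is a safety cap added here so the sketch can be run on graphs that contain cycles.

```python
def spider1(graph, root, max_steps=None):
    """Stack-based traversal as in SPIDER1; graph maps URL -> list of out-links.

    max_steps is an added safety cap (not in the pseudocode): without it the
    loop does not end on a graph that contains a cycle.
    """
    stack = [root]                      # Let STACK := push(ROOT, STACK)
    collection = []                     # Initialize COLLECTION
    steps = 0
    while stack:                        # While STACK is not empty
        if max_steps is not None and steps >= max_steps:
            break
        url = stack.pop()               # URLcurr := pop(STACK)
        page = graph.get(url, [])       # PAGE := look-up(URLcurr)
        collection.append((url, page))  # STORE(<URLcurr, PAGE>, COLLECTION)
        for link in page:               # For every URLi in PAGE
            stack.append(link)          #     push(URLi, STACK)
        steps += 1
    return collection


if __name__ == "__main__":
    tree = {"A": ["B", "C"], "B": [], "C": []}
    print([url for url, _ in spider1(tree, "A")])

    cyclic = {"A": ["B"], "B": ["A"]}
    print([url for url, _ in spider1(cyclic, "A", max_steps=6)])
```

On the tree the traversal terminates on its own. On the two-node cycle it keeps alternating between the same pages until the cap stops it.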

Review of Algorithm I
Problems with PROCEDURE SPIDER1(G):
• Pages are collected repeatedly
• A cycle in G causes an infinite loop
• What if G is not connected?
• What if G is too large for STACK to hold?
• What if we want to crawl only part of G?

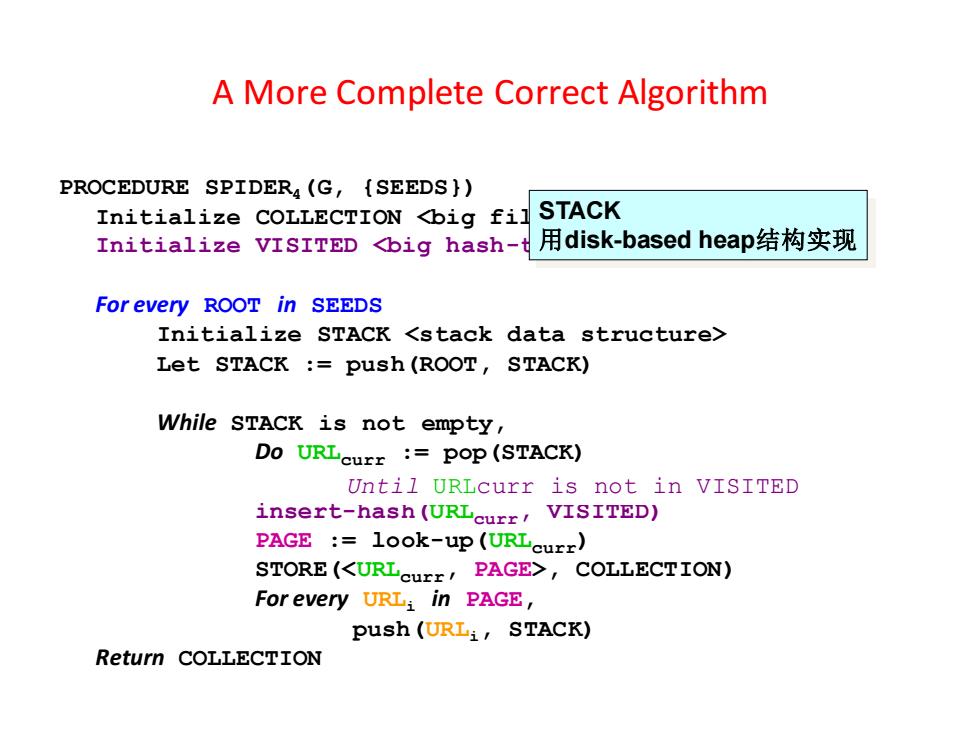

A More Complete Correct Algorithm
PROCEDURE SPIDER4(G, {SEEDS})
    Initialize COLLECTION
    Initialize VISITED
    For every ROOT in SEEDS
        Initialize STACK
        Let STACK := push(ROOT, STACK)
        While STACK is not empty,
            Do URLcurr := pop(STACK)
            Until URLcurr is not in VISITED
            insert-hash(URLcurr, VISITED)
            PAGE := look-up(URLcurr)
            STORE(<URLcurr, PAGE>, COLLECTION)
            For every URLi in PAGE,
                push(URLi, STACK)
    Return COLLECTION
• STACK is implemented as a disk-based heap
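The following is a minimal in-memory sketch of SPIDER4, under several assumptions: the graph is a dictionary of URL to out-links, VISITED is a Python `set` (playing the role of the hash table), and STACK is an ordinary list instead of the disk-based heap mentioned on the slide. The Do/Until loop is written as a `continue`, which also covers the case where the stack runs empty while already-visited URLs are being skipped.

```python
def spider4(graph, seeds):
    """Traverse graph from every seed, fetching each URL at most once.

    graph maps URL -> list of out-links; returns {URL: out-links} in the
    order the pages were first collected.
    """
    collection = {}                      # Initialize COLLECTION
    visited = set()                      # Initialize VISITED (hash set)
    for root in seeds:                   # For every ROOT in SEEDS
        stack = [root]                   # Initialize STACK; push(ROOT, STACK)
        while stack:                     # While STACK is not empty
            url = stack.pop()            # URLcurr := pop(STACK)
            if url in visited:           # Until URLcurr is not in VISITED
                continue
            visited.add(url)             # insert-hash(URLcurr, VISITED)
            page = graph.get(url, [])    # PAGE := look-up(URLcurr)
            collection[url] = page       # STORE(<URLcurr, PAGE>, COLLECTION)
            for link in page:            # For every URLi in PAGE
                stack.append(link)       #     push(URLi, STACK)
    return collection


if __name__ == "__main__":
    # "A" and "B" form a cycle; "C" is only reachable as a seed.
    graph = {"A": ["B"], "B": ["A"], "C": ["A"]}
    print(list(spider4(graph, ["A", "C"])))
```

The example graph exercises two of the earlier problems: the cycle between A and B no longer loops forever, and the second seed reaches C, which the first seed cannot. The concerns about a STACK too large for memory and about crawling only part of G are not addressed by this sketch.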