Digital Image Processing Chapter 8: Image Compression-1


Outline
1 Fundamentals
2 Some basic compression methods
• Huffman coding
• Arithmetic coding
• LZW coding
• Block transform coding
• Predictive coding
• …


Image Compression
Reducing the amount of data required to represent a digital image while preserving as much information as possible.


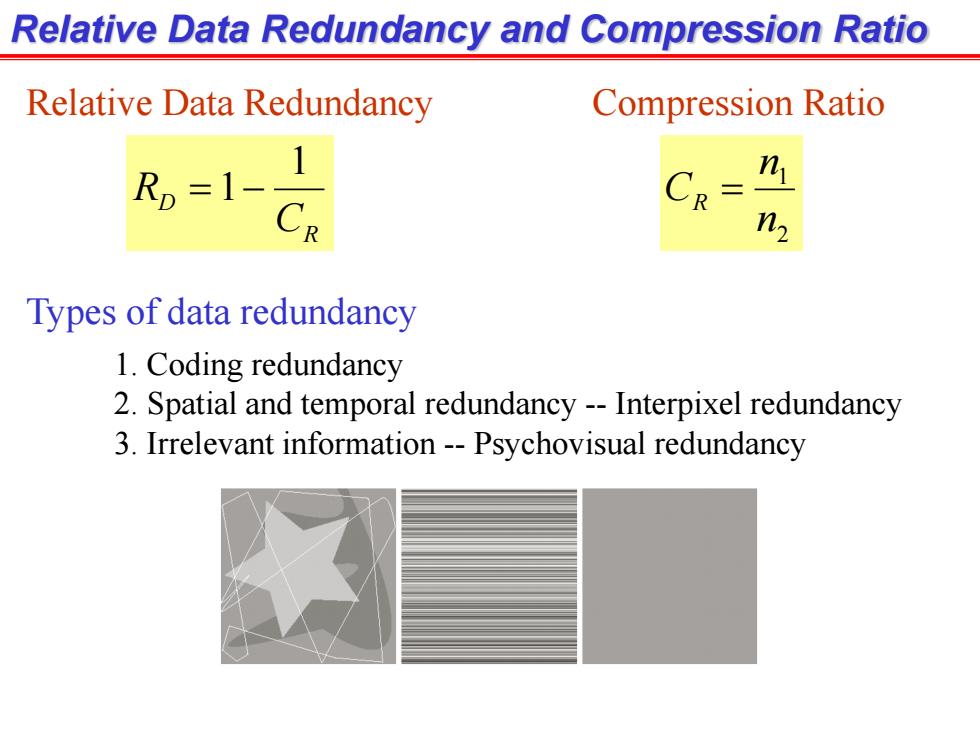

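Relative Data Redundancy and Compression Ratio

Compression ratio: $C_R = \dfrac{n_1}{n_2}$

Relative data redundancy: $R_D = 1 - \dfrac{1}{C_R}$

Here $n_1$ is the amount of data in the original representation and $n_2$ is the amount of data in the compressed representation of the same information.

Types of data redundancy
1. Coding redundancy
2. Spatial and temporal redundancy (interpixel redundancy)
3. Irrelevant information (psychovisual redundancy)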
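The two quantities on this slide can be checked with a few lines of Python. This is a minimal sketch that uses the definitions $C_R = n_1/n_2$ and $R_D = 1 - 1/C_R$. The function names and the example sizes are assumptions chosen for illustration and do not come from the slides.

```python
def compression_ratio(n1: int, n2: int) -> float:
    """C_R = n1 / n2, with n1 the original size and n2 the compressed size."""
    if n1 <= 0 or n2 <= 0:
        raise ValueError("sizes must be positive")
    return n1 / n2


def relative_redundancy(n1: int, n2: int) -> float:
    """R_D = 1 - 1 / C_R."""
    return 1.0 - 1.0 / compression_ratio(n1, n2)


# Hypothetical sizes: a 256 x 256 8-bit image (65,536 bytes)
# compressed to 16,384 bytes.
c_r = compression_ratio(65536, 16384)
r_d = relative_redundancy(65536, 16384)
print(f"C_R = {c_r:.2f}, R_D = {r_d:.2f}")  # C_R = 4.00, R_D = 0.75
```

With these sizes, $R_D = 0.75$ means that 75% of the data in the original representation is redundant.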


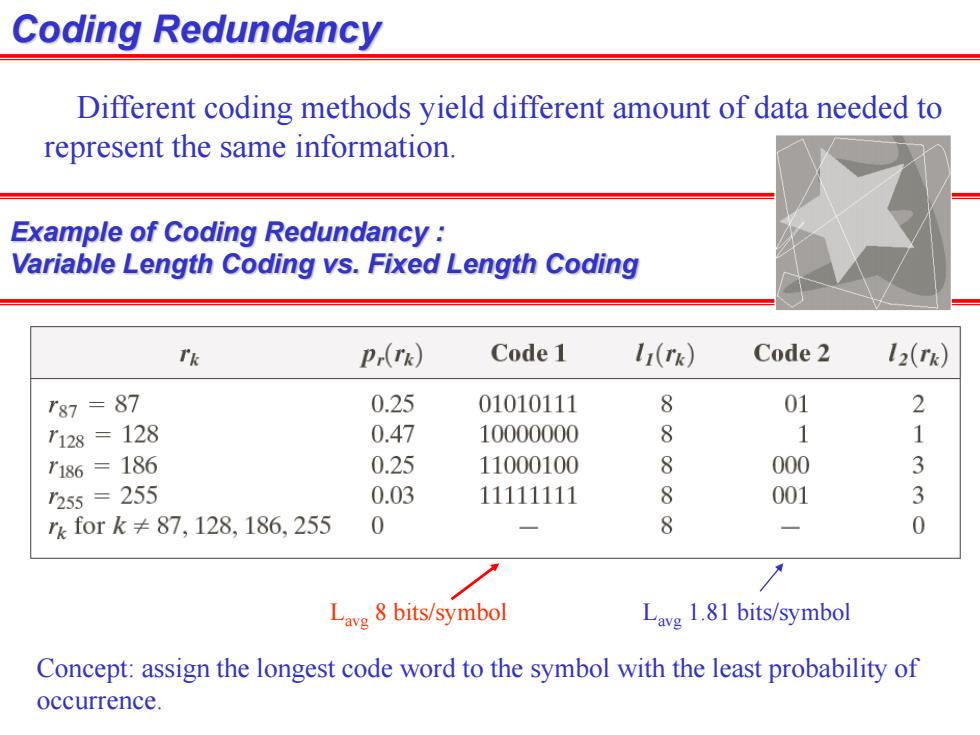

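Coding Redundancy
Different coding methods yield different amounts of data needed to represent the same information.

Example of coding redundancy: variable-length coding vs. fixed-length coding

| $r_k$ | $p_r(r_k)$ | Code 1 | $l_1(r_k)$ | Code 2 | $l_2(r_k)$ |
|---|---|---|---|---|---|
| $r_{87} = 87$ | 0.25 | 01010111 | 8 | 01 | 2 |
| $r_{128} = 128$ | 0.47 | 10000000 | 8 | 1 | 1 |
| $r_{186} = 186$ | 0.25 | 11000100 | 8 | 000 | 3 |
| $r_{255} = 255$ | 0.03 | 11111111 | 8 | 001 | 3 |
| $r_k$ for $k \neq 87, 128, 186, 255$ | 0 | - | 8 | - | 0 |

Code 1 (fixed length): $L_{avg} = 8$ bits/symbol
Code 2 (variable length): $L_{avg} = 1.81$ bits/symbol

Concept: assign shorter code words to more probable symbols, so that the longest code word goes to the symbol with the least probability of occurrence.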
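The two average lengths quoted on this slide can be reproduced from the table, using $L_{avg} = \sum_k l(r_k)\, p_r(r_k)$. The sketch below assumes that formula. The dictionary layout and the function names are illustrative choices, not part of the slides.

```python
# intensity -> (probability, Code 1 word, Code 2 word), for the four
# intensities that actually occur in the example image.
table = {
    87: (0.25, "01010111", "01"),
    128: (0.47, "10000000", "1"),
    186: (0.25, "11000100", "000"),
    255: (0.03, "11111111", "001"),
}


def average_length(code_index: int) -> float:
    """Average code word length in bits/symbol (0 = Code 1, 1 = Code 2)."""
    return sum(p * len(words[code_index]) for p, *words in table.values())


def is_prefix_free(words) -> bool:
    """True if no code word is a prefix of another (needed for unique decoding)."""
    return not any(a != b and b.startswith(a) for a in words for b in words)


l_fixed = average_length(0)
l_variable = average_length(1)
print(f"Code 1: {l_fixed:.2f} bits/symbol")     # Code 1: 8.00 bits/symbol
print(f"Code 2: {l_variable:.2f} bits/symbol")  # Code 2: 1.81 bits/symbol
print(f"C_R = {l_fixed / l_variable:.2f}")      # C_R = 4.42
print(is_prefix_free([w[2] for w in table.values()]))  # True
```

Switching from Code 1 to Code 2 gives a compression ratio of about 4.42, which comes from removing coding redundancy alone.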


Spatial and Temporal Redundancy
Interpixel redundancy: parts of an image are highly correlated. In other words, we can predict a given pixel from its neighbors.

[Figure: histogram of the example image, with axes $n_k$ and $p_r(r_k)$ versus intensity (0 to 250)]
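One way to see interpixel redundancy is to replace each pixel by its difference from the previous pixel in the same row. The sketch below uses a synthetic ramp as the image row. Both the ramp and the previous-pixel predictor are assumptions chosen for illustration; the slides do not specify a predictor.

```python
def to_differences(row):
    """Keep the first pixel, then store each pixel minus its left neighbor."""
    return [row[0]] + [b - a for a, b in zip(row, row[1:])]


def from_differences(diffs):
    """Invert to_differences by accumulating the differences."""
    row = [diffs[0]]
    for d in diffs[1:]:
        row.append(row[-1] + d)
    return row


# Hypothetical smooth row: a 64-pixel intensity ramp.
row = list(range(100, 164))
diffs = to_differences(row)

print(len(set(row)))                   # 64 distinct intensity values
print(len(set(diffs)))                 # 2 distinct values: 100 and 1
print(from_differences(diffs) == row)  # True, the mapping is reversible
```

The row itself uses 64 different values, while the difference representation uses only two, so it is much cheaper to code and loses nothing.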


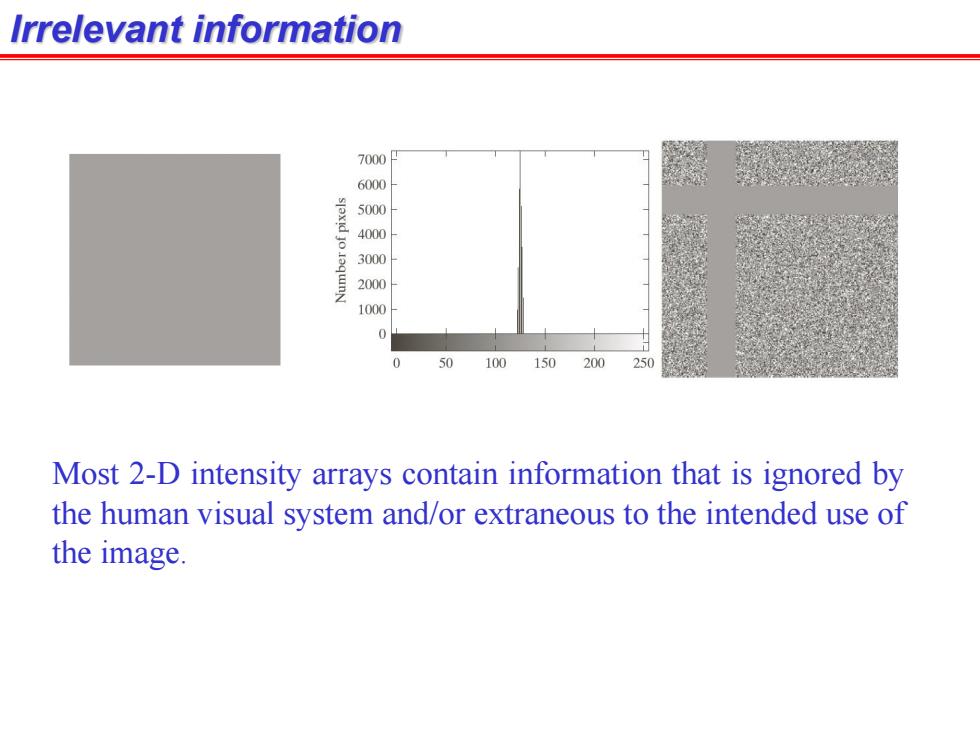

Irrelevant Information
Most 2-D intensity arrays contain information that is ignored by the human visual system and/or is extraneous to the intended use of the image.

[Figure: histogram of the example image, intensity axis 0 to 250]


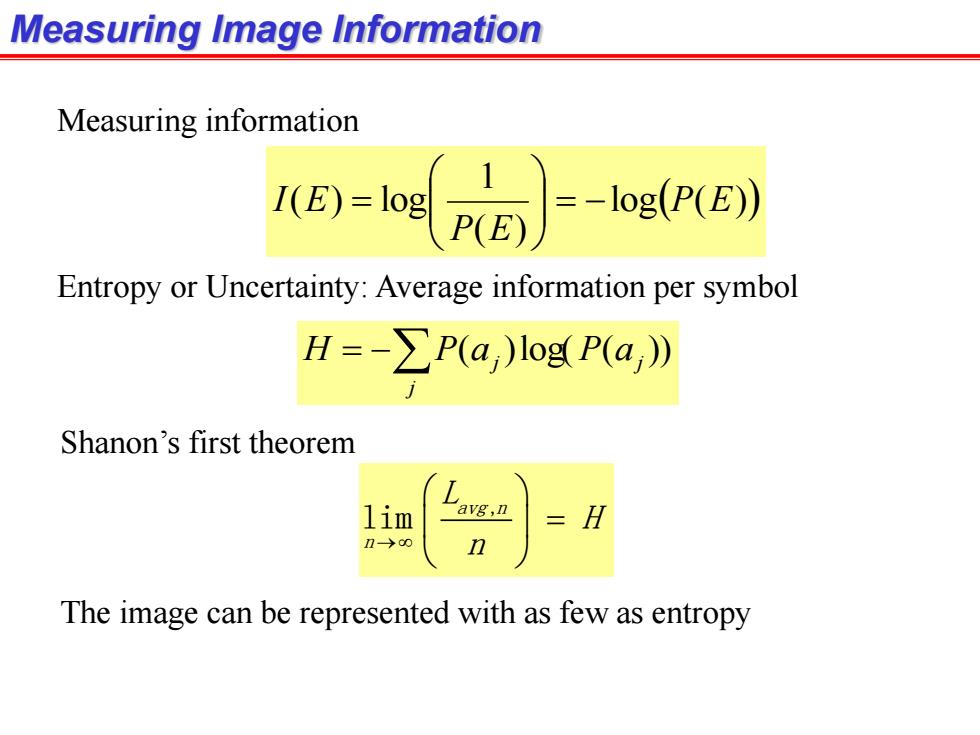

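Measuring Image Information

Measuring information: an event $E$ that occurs with probability $P(E)$ carries

$$I(E) = \log\frac{1}{P(E)} = -\log P(E)$$

units of information.

Entropy or uncertainty: the average information per symbol

$$H = -\sum_{j} P(a_j)\,\log P(a_j)$$

Shannon's first theorem:

$$\lim_{n\to\infty}\left[\frac{L_{avg,n}}{n}\right] = H$$

where $L_{avg,n}$ is the average code length needed to represent blocks of $n$ symbols. The image can therefore be represented with as few as $H$ bits per symbol (for base-2 logarithms).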
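As a worked example, the sketch below evaluates $I(E)$ and $H$ in bits (base-2 logarithms), using the four intensity probabilities from the coding-redundancy example (0.25, 0.47, 0.25, 0.03). The function names are illustrative.

```python
import math


def self_information(p: float) -> float:
    """I(E) = -log2 P(E), in bits."""
    return -math.log2(p)


def entropy(probabilities) -> float:
    """H = -sum_j P(a_j) log2 P(a_j); zero-probability symbols contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


probs = [0.25, 0.47, 0.25, 0.03]
print(f"I(E) for P(E) = 0.25: {self_information(0.25):.2f} bits")  # 2.00 bits
print(f"I(E) for P(E) = 0.03: {self_information(0.03):.2f} bits")  # 5.06 bits
print(f"H = {entropy(probs):.2f} bits/symbol")                     # 1.66 bits/symbol
```

The entropy of about 1.66 bits/symbol is a lower bound. The variable-length code in the earlier example reaches 1.81 bits/symbol, which is close to the bound but does not meet it.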


Fidelity Criteria: how good is the compression algorithm?
- Objective fidelity criteria: RMSE, PSNR
- Subjective fidelity criteria: human rating

| Value | Rating | Description |
|---|---|---|
| 1 | Excellent | An image of extremely high quality, as good as you could desire. |
| 2 | Fine | An image of high quality, providing enjoyable viewing. Interference is not objectionable. |
| 3 | Passable | An image of acceptable quality. Interference is not objectionable. |
| 4 | Marginal | An image of poor quality; you wish you could improve it. Interference is somewhat objectionable. |
| 5 | Inferior | A very poor image, but you could watch it. Objectionable interference is definitely present. |
| 6 | Unusable | An image so bad that you could not watch it. |
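The slide names RMSE and PSNR without giving formulas. The sketch below assumes the usual definitions: RMSE is the square root of the mean squared difference between the original $f(x,y)$ and the reconstruction $\hat{f}(x,y)$, and PSNR is $20\log_{10}(\text{peak}/\text{RMSE})$ in dB. The peak value of 255 (8-bit images), the list-of-rows image layout, and the sample pixel values are all assumptions.

```python
import math


def rmse(f, f_hat) -> float:
    """Root-mean-square error between two equally sized images (lists of rows)."""
    rows, cols = len(f), len(f[0])
    squared = sum(
        (f_hat[x][y] - f[x][y]) ** 2 for x in range(rows) for y in range(cols)
    )
    return math.sqrt(squared / (rows * cols))


def psnr(f, f_hat, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for a perfect reconstruction."""
    e = rmse(f, f_hat)
    return math.inf if e == 0 else 20 * math.log10(peak / e)


# Hypothetical 2 x 2 image and a slightly distorted reconstruction.
f = [[52, 55], [61, 59]]
f_hat = [[54, 55], [61, 57]]
print(f"RMSE = {rmse(f, f_hat):.3f}")     # RMSE = 1.414
print(f"PSNR = {psnr(f, f_hat):.2f} dB")  # PSNR = 45.12 dB
```

A lower RMSE or a higher PSNR indicates a reconstruction closer to the original. Neither measure necessarily agrees with the subjective ratings in the table above.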


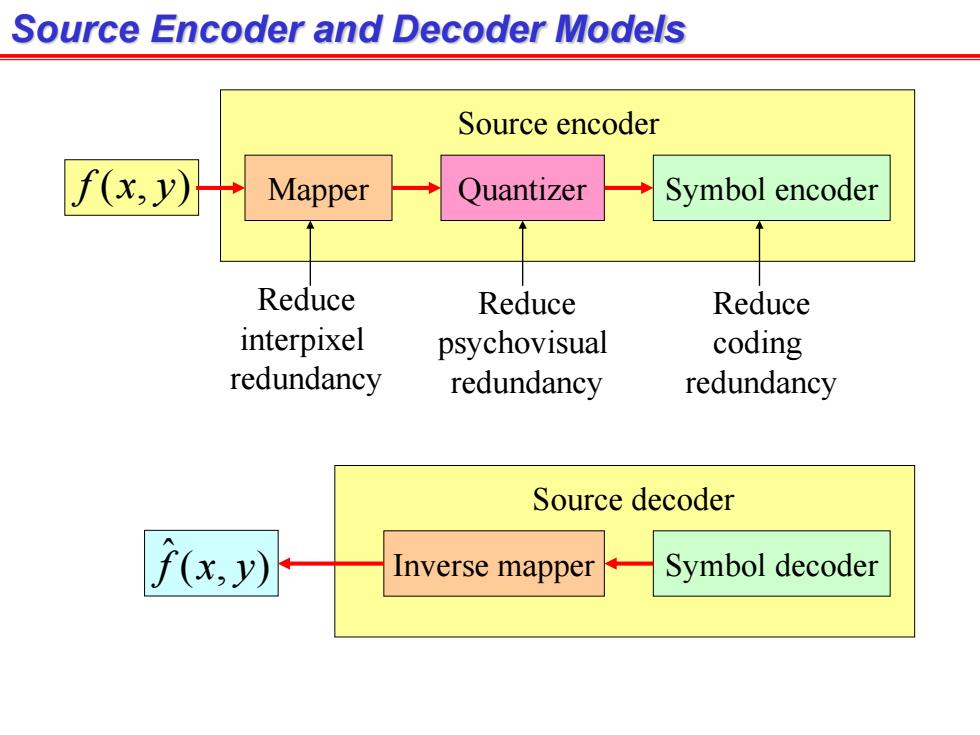

Source Encoder and Decoder Models

Source encoder: $f(x,y)$ → Mapper → Quantizer → Symbol encoder
- Mapper: reduces interpixel redundancy
- Quantizer: reduces psychovisual redundancy
- Symbol encoder: reduces coding redundancy

Source decoder: Symbol decoder → Inverse mapper → $\hat{f}(x,y)$
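The three-stage model can be sketched as a chain of small functions. Everything concrete here is an assumption chosen for illustration, not taken from the slides: the mapper takes previous-pixel differences, the quantizer rounds to multiples of a step size, and a simple run-length coder stands in for the symbol encoder (Huffman or arithmetic coding would normally take that place). The decoder has no inverse quantizer, because quantization is not reversible.

```python
from itertools import groupby


def mapper(row):
    """Reduce interpixel redundancy: first pixel, then neighbor differences."""
    return [row[0]] + [b - a for a, b in zip(row, row[1:])]


def inverse_mapper(diffs):
    row = [diffs[0]]
    for d in diffs[1:]:
        row.append(row[-1] + d)
    return row


def quantizer(symbols, step):
    """Reduce psychovisual redundancy: round each value to a multiple of step."""
    return [step * round(s / step) for s in symbols]


def symbol_encoder(symbols):
    """Stand-in symbol coder: (value, run length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(symbols)]


def symbol_decoder(pairs):
    return [value for value, count in pairs for _ in range(count)]


def source_encoder(row, step=1):
    return symbol_encoder(quantizer(mapper(row), step))


def source_decoder(encoded):
    return inverse_mapper(symbol_decoder(encoded))


row = [100, 101, 102, 103, 103, 103, 110, 111]
print(source_decoder(source_encoder(row, step=1)) == row)  # True (lossless)
print(source_encoder(row, step=4))   # [(100, 1), (0, 5), (8, 1), (0, 1)]
print(source_decoder(source_encoder(row, step=4)))
# [100, 100, 100, 100, 100, 100, 108, 108]
```

With `step=1` the quantizer changes nothing and the chain is lossless. With `step=4` the output is shorter but the reconstruction drifts from the original, because quantization errors in the differences accumulate along the row in this open-loop arrangement.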
