Artificial Neural Networks: Introduction



09/07/2023 Artificial Neural Networks - I

Table of Contents
• Introduction to ANNs (I)
  – Taxonomy
  – Features
  – Learning
  – Applications
• Supervised ANNs (II)
  – Examples
  – Applications
  – Further topics
• Unsupervised ANNs (III)
  – Examples
  – Applications
  – Further topics


Contents - I
• Introduction to ANNs
  – Processing elements (neurons)
  – Architecture
• Functional Taxonomy of ANNs
• Structural Taxonomy of ANNs
• Features
• Learning Paradigms
• Applications

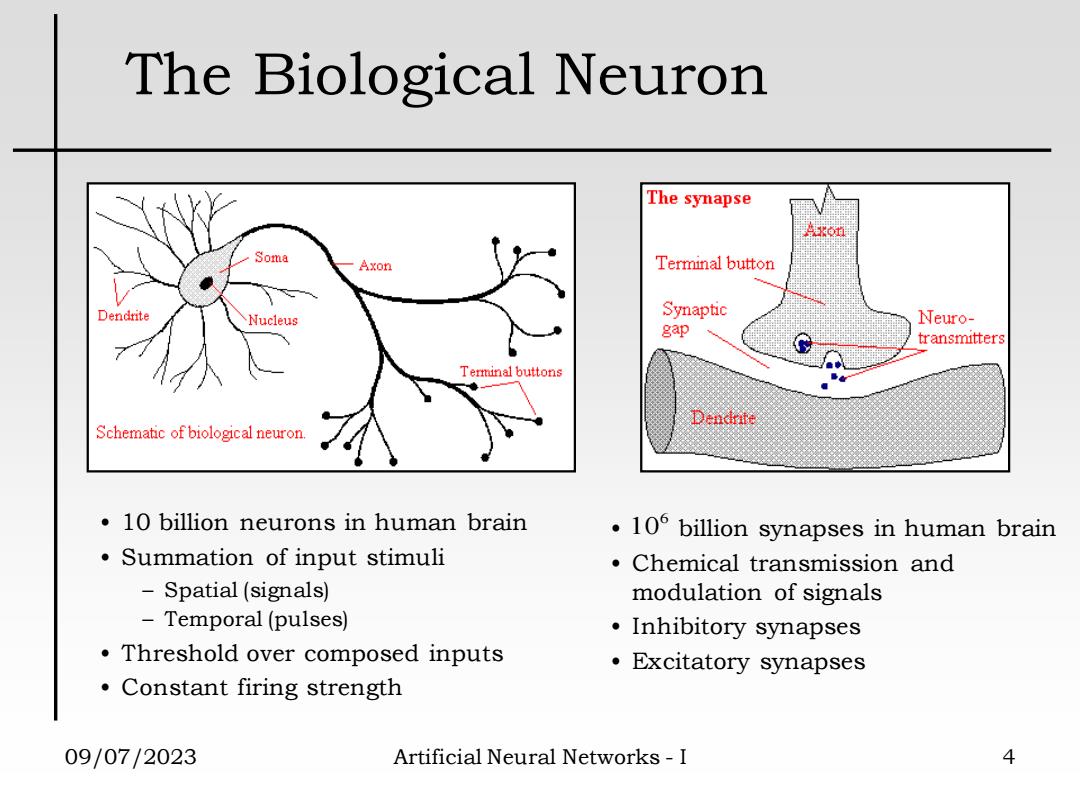

Figure: Schematic of biological neuron (labels: soma, nucleus, dendrites, axon, terminal buttons, and the synapse with its synaptic gap and neurotransmitters).

The Biological Neuron
• 10 billion neurons in the human brain
• Summation of input stimuli
  – Spatial (signals)
  – Temporal (pulses)
• Threshold over composed inputs
• Constant firing strength
• 10^6 billion synapses in the human brain
• Chemical transmission and modulation of signals
• Inhibitory synapses
• Excitatory synapses
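The summation, threshold, and constant-strength properties listed above can be illustrated with a toy model in a few lines of Python. This is a sketch under stated assumptions. The threshold, the output strength, and the exponential decay used for temporal summation (`DECAY_TAU`) are hypothetical values in arbitrary units, not physiological measurements.

```python
import math

THRESHOLD = 1.0        # firing threshold (hypothetical, arbitrary units)
SPIKE_STRENGTH = 1.0   # constant output amplitude whenever the neuron fires
DECAY_TAU = 5.0        # decay time constant for temporal summation (hypothetical, in time steps)


def response(composed_input: float) -> float:
    """All-or-none output: the same strength for any input at or above threshold."""
    return SPIKE_STRENGTH if composed_input >= THRESHOLD else 0.0


def spatial_sum(simultaneous_inputs: list[float]) -> float:
    """Spatial summation: add stimuli arriving at the same moment on different synapses."""
    return sum(simultaneous_inputs)


def temporal_sum(pulse_times: list[float], amplitude: float, now: float) -> float:
    """Temporal summation: earlier pulses on one synapse still contribute, decaying exponentially."""
    return sum(amplitude * math.exp(-(now - t) / DECAY_TAU) for t in pulse_times if t <= now)


print(response(spatial_sum([0.6])))                  # one sub-threshold input: 0.0
print(response(spatial_sum([0.6, 0.6])))             # two inputs together cross threshold: 1.0
print(response(temporal_sum([0, 1], 0.6, now=1)))    # two pulses close in time: 1.0
print(response(temporal_sum([0, 20], 0.6, now=20)))  # pulses far apart in time: 0.0
print(response(5.0))                                 # a much stronger input still gives 1.0
```

The last line shows the "constant firing strength" property. Once the threshold is crossed, a larger input does not produce a larger output.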

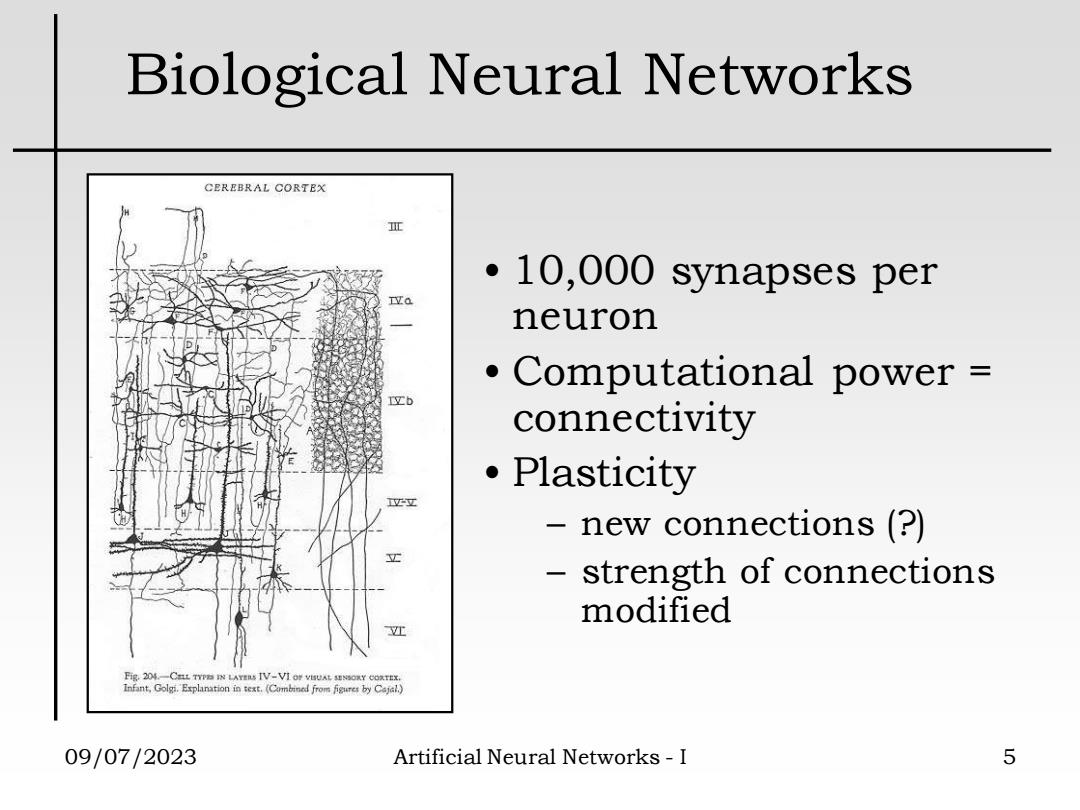

Figure: Cerebral cortex. Fig. 204, cell types in layers IV-VI of visual sensory cortex (infant, Golgi stain; explanation in text; combined from figures by Cajal).

Biological Neural Networks
• 10,000 synapses per neuron
• Computational power arises from connectivity
• Plasticity
  – new connections (?)
  – strength of connections modified
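The slide does not say how connection strengths are modified. As an illustration only, the sketch below uses Hebb's rule, a classic model of this kind of plasticity that is swapped in here and is not stated on the slide. A weight grows in proportion to the product of presynaptic and postsynaptic activity. The function name and the learning rate are hypothetical.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1):
    """One Hebbian step (illustrative rule): w_j <- w_j + learning_rate * pre_j * post.

    weights: current connection strengths onto one neuron
    pre: presynaptic activities x_j, one per weight
    post: postsynaptic activity y of the receiving neuron
    learning_rate: step size (hypothetical value)
    """
    if len(weights) != len(pre):
        raise ValueError("need one presynaptic activity per weight")
    return [w + learning_rate * x * post for w, x in zip(weights, pre)]


# Repeated co-activation of input 0 with the output strengthens only that connection.
w = [0.5, 0.5]
for _ in range(3):
    w = hebbian_update(w, pre=[1.0, 0.0], post=1.0)
print(w)  # the weight of input 0 grows to about 0.8; input 1 stays at 0.5
```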

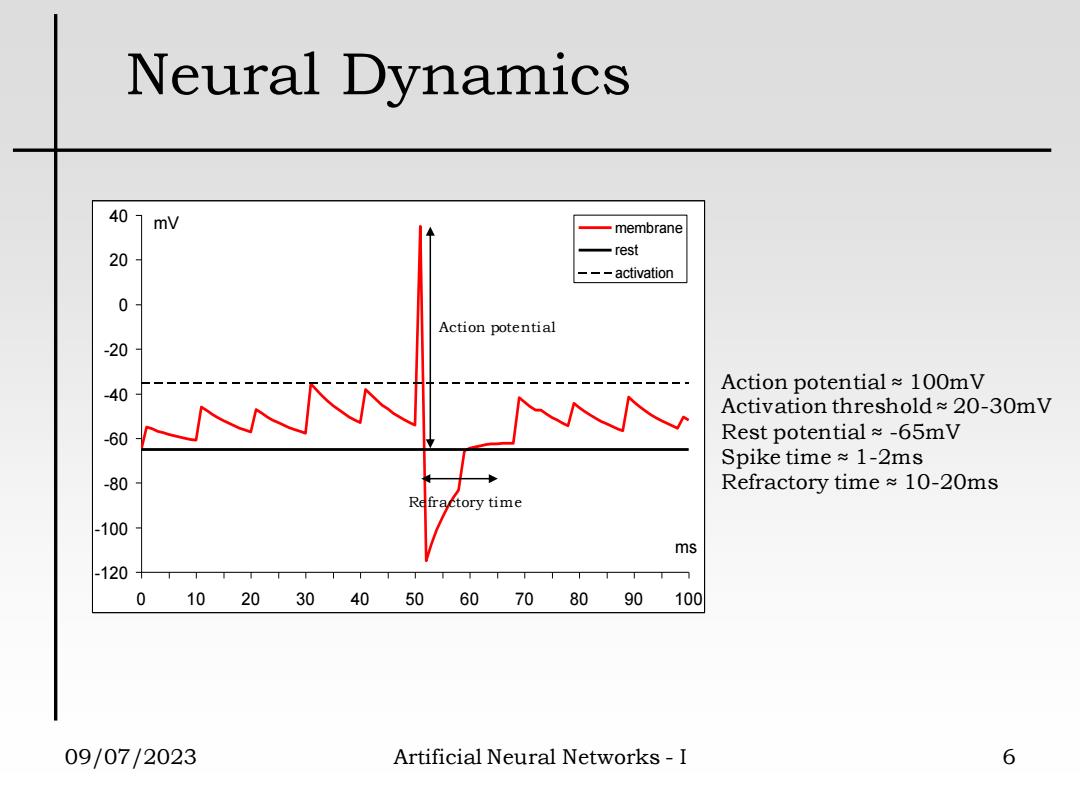


Neural Dynamics
Figure: membrane potential (mV) versus time (ms), marking the resting level, activation, the action potential, and the refractory time.
• Action potential ≈ 100 mV
• Activation threshold ≈ 20-30 mV
• Rest potential ≈ -65 mV
• Spike time ≈ 1-2 ms
• Refractory time ≈ 10-20 ms
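The numbers on this slide can be plugged into a leaky integrate-and-fire model. That is a standard simplification swapped in here for illustration; the slide does not name a model. The sketch makes several assumptions:
• the 20-30 mV activation threshold is measured above the resting potential;
• each quoted range is represented by a single value (midpoints for the threshold and refractory time, the lower end for spike duration);
• a membrane time constant (`TAU_M`) and an input drive expressed in mV are introduced, neither of which is on the slide.

Real action potentials also have a waveform and an after-hyperpolarisation. This sketch replaces both with a flat peak followed by a clamp at rest.

```python
V_REST = -65.0                 # mV, resting potential (slide: about -65 mV)
THRESHOLD_ABOVE_REST = 25.0    # mV, midpoint of the slide's 20-30 mV, assumed relative to rest
SPIKE_AMPLITUDE = 100.0        # mV, action-potential height (slide: about 100 mV)
SPIKE_MS = 1.0                 # ms, spike duration (lower end of the slide's 1-2 ms)
REFRACTORY_MS = 15.0           # ms, midpoint of the slide's 10-20 ms refractory time
TAU_M = 10.0                   # ms, membrane time constant (assumed, not on the slide)
DT = 0.1                       # ms, forward-Euler integration step


def simulate(drive_mv: float, duration_ms: float = 100.0):
    """Leaky integrate-and-fire neuron with a constant input drive (R*I, expressed in mV).

    Returns (times, voltages, spike_times), with times in ms and voltages in mV.
    """
    threshold = V_REST + THRESHOLD_ABOVE_REST
    v = V_REST
    blocked_until = -1.0           # end of the current spike plus refractory period
    times, voltages, spike_times = [], [], []
    for k in range(int(round(duration_ms / DT))):
        t = k * DT
        if t < blocked_until:
            # Inside a spike: hold the peak. Afterwards: clamp at rest until refractoriness ends.
            in_spike = t < spike_times[-1] + SPIKE_MS
            v = V_REST + SPIKE_AMPLITUDE if in_spike else V_REST
        else:
            # Leaky integration: dV/dt = (-(V - V_rest) + drive) / tau_m
            v += DT * (-(v - V_REST) + drive_mv) / TAU_M
            if v >= threshold:
                spike_times.append(t)
                v = V_REST + SPIKE_AMPLITUDE
                blocked_until = t + SPIKE_MS + REFRACTORY_MS
        times.append(t)
        voltages.append(v)
    return times, voltages, spike_times


_, _, weak = simulate(drive_mv=20.0)    # settles near -45 mV, below threshold: no spikes
_, _, strong = simulate(drive_mv=40.0)  # would settle near -25 mV, so it fires repeatedly
print(len(weak), [round(s, 1) for s in strong])
```

The refractory time bounds the firing rate. No two spikes can be closer than `SPIKE_MS + REFRACTORY_MS`, however strong the drive.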


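Complexity of Neural Networks
• The complexity and diversity of neural networks stem not only from the large number of neurons and synapses, their intricate combinations, and their extensive interconnections, but also from the complex mechanisms of synaptic transmission. The synaptic transmission mechanisms discovered and characterized so far include postsynaptic excitation, postsynaptic inhibition, presynaptic inhibition, presynaptic excitation, and "remote" inhibition, among others. Within synaptic transmission, the release of neurotransmitters is the central step that carries out the transmission function, and different neurotransmitters act with different properties and characteristics.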


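Research on Neural Networks
• Nervous-system activity manifests at the level of the whole organism, whether it is sensation, movement, or a higher brain function such as learning, memory, or emotion. Analyzing the neural basis and mechanisms of these manifestations therefore inevitably spans many levels, and research at these different levels is mutually informing and mutually reinforcing. Work at the lower levels (cellular and molecular) provides the analytical foundation for higher-level observations. Higher-level observations, in turn, help guide the direction of lower-level work and reveal its functional significance. The field includes both discipline-specific studies (physical, chemical, physiological, and psychological) and integrative studies.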

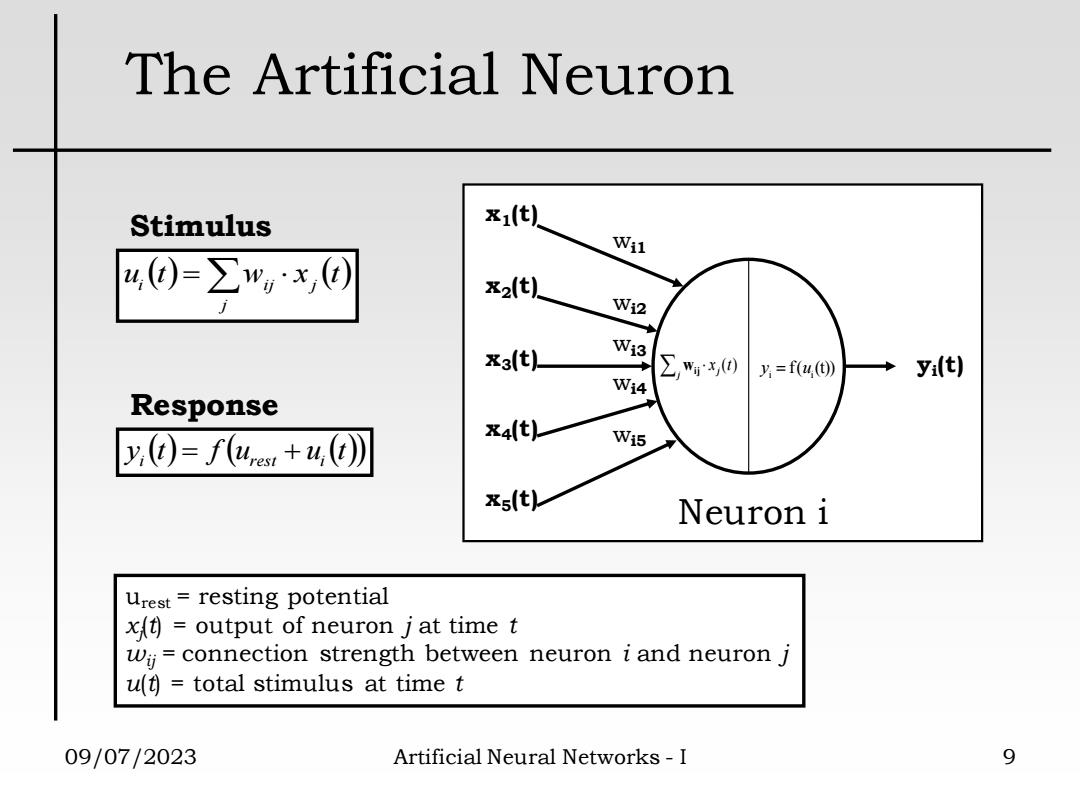


The Artificial Neuron
Figure: neuron i receives the stimuli x1(t), ..., x5(t) through the weights wi1, ..., wi5 and emits the response yi(t).

$$u_i(t) = \sum_j w_{ij}\, x_j(t)$$

$$y_i(t) = f\big(u_{\text{rest}} + u_i(t)\big)$$

• u_rest = resting potential
• x_j(t) = output of neuron j at time t
• w_ij = connection strength between neuron i and neuron j
• u_i(t) = total stimulus at time t
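The neuron model on this slide translates directly into code. This is a minimal sketch. It assumes the inputs at one time step arrive as a plain list, and it uses the logistic sigmoid as the default activation `f`. The slide leaves `f` unspecified, so any other function can be passed in. The input and weight values are made up for illustration.

```python
import math
from typing import Callable, Sequence


def sigmoid(u: float) -> float:
    """Logistic activation, used here as an assumed default for f."""
    return 1.0 / (1.0 + math.exp(-u))


def total_stimulus(weights: Sequence[float], inputs: Sequence[float]) -> float:
    """u_i(t) = sum_j w_ij * x_j(t): weighted sum of the outputs of the neurons feeding neuron i."""
    if len(weights) != len(inputs):
        raise ValueError("need exactly one weight w_ij per input x_j(t)")
    return sum(w * x for w, x in zip(weights, inputs))


def neuron_output(weights: Sequence[float], inputs: Sequence[float],
                  u_rest: float = 0.0, f: Callable[[float], float] = sigmoid) -> float:
    """y_i(t) = f(u_rest + u_i(t))."""
    return f(u_rest + total_stimulus(weights, inputs))


# Five inputs, as in the slide's figure (values are hypothetical).
w_i = [0.5, -1.0, 0.25, 0.0, 2.0]
x_t = [1.0, 1.0, 4.0, 3.0, 0.0]
print(total_stimulus(w_i, x_t))              # 0.5
print(neuron_output(w_i, x_t, u_rest=-0.5))  # sigmoid(0.0) = 0.5
print(neuron_output(w_i, x_t, u_rest=-0.5, f=lambda u: 1.0 if u >= 0 else 0.0))  # step f: 1.0
```

Passing `f` as a parameter keeps the weighted summation separate from the choice of activation. The same neuron can then act as a threshold unit or as a graded unit.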


Artificial Neural Models
• McCulloch-Pitts-type neurons (static)
  – Digital neurons: activation-state interpretation (a snapshot of the system each time a unit fires)
  – Analog neurons: firing-rate interpretation (the activation of a unit equals its firing rate)
  – The activation of neurons encodes information
• Spiking neurons (dynamic)
  – Firing-pattern interpretation (spike trains of units)
  – The timing of spike trains encodes information (time to first spike, phase of signal, correlation and synchronicity)
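The three readings of neuron activity listed above can be contrasted in a short sketch. The McCulloch-Pitts unit below is the standard binary threshold neuron. The other two are illustrative assumptions, not definitions from the slide: a sigmoid rate curve with a hypothetical 100 Hz ceiling, and time-to-first-spike computed from a leaky integrator.

```python
import math


def mcculloch_pitts(inputs, weights, threshold):
    """Digital neuron: outputs 1 if the weighted input sum reaches the threshold, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) >= threshold else 0


def analog_rate(inputs, weights, max_rate_hz=100.0):
    """Analog neuron: activation read as a firing rate (sigmoid scaled to a hypothetical ceiling)."""
    u = sum(w * x for w, x in zip(weights, inputs))
    return max_rate_hz / (1.0 + math.exp(-u))


def time_to_first_spike(stimulus, threshold=1.0, tau_ms=10.0):
    """Spiking read-out: latency (ms) until a leaky integrator v(t) = s * (1 - exp(-t / tau))
    driven by a constant stimulus s reaches threshold; weak stimuli never fire (math.inf)."""
    if stimulus <= threshold:
        return math.inf
    return tau_ms * math.log(stimulus / (stimulus - threshold))


# Digital: an AND gate from weights (1, 1) and threshold 2.
for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, mcculloch_pitts([a, b], [1, 1], threshold=2))

# Analog: zero net input gives half the assumed maximum rate, 50.0 Hz.
print(analog_rate([0.0], [1.0]))

# Spiking: a stronger stimulus fires earlier (about 6.93 ms vs. about 2.88 ms).
print(time_to_first_spike(2.0), time_to_first_spike(4.0))
```

In the first two models the information is in the activation value. In the third, the value is carried by when the spike occurs, which matches the timing interpretation on the slide.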