Chapter 6 Architecture and Equilibria

Student: Zheng Wei (郑巍). Advisor: Liu Sanyang (刘三阳).

2006.11.10

6.1 Neural Network as Stochastic Gradient System

Classify neural network models by their synaptic connection topologies and by how learning modifies those topologies.

Synaptic connection topologies:

1. Feedforward: no closed synaptic loops.
2. Feedback: closed synaptic loops or feedback pathways.

How learning modifies the connection topologies:

1. Supervised learning: uses class-membership information of the training samples.
2. Unsupervised learning: uses unlabelled training samples.
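
The feedforward/feedback distinction above can be sketched as a cycle check on the directed graph of synaptic connections. This is only an illustration: the dictionary representation and the names `has_closed_loop` and `topology` are assumptions made here, not part of the slides.

```python
def has_closed_loop(connections):
    """Return True if the directed synaptic graph contains a closed loop.

    `connections` (a hypothetical representation) maps each neuron label
    to the list of neuron labels it sends signals to.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on current path, finished
    nodes = set(connections)
    for targets in connections.values():
        nodes.update(targets)
    color = {node: WHITE for node in nodes}

    def visit(node):
        color[node] = GRAY
        for nxt in connections.get(node, ()):
            if color[nxt] == GRAY:
                # Reached a neuron already on the current path: closed loop.
                return True
            if color[nxt] == WHITE and visit(nxt):
                return True
        color[node] = BLACK
        return False

    return any(color[node] == WHITE and visit(node) for node in nodes)


def topology(connections):
    """Label a network 'feedback' if it has a closed loop, else 'feedforward'."""
    return "feedback" if has_closed_loop(connections) else "feedforward"


# Two toy networks (illustrative only).
layered = {"x1": ["h1"], "x2": ["h1"], "h1": ["y1"]}
recurrent = {"a": ["b"], "b": ["a"]}
print(topology(layered))
print(topology(recurrent))
```

A depth-first search is used because a closed synaptic loop is exactly a directed cycle; any other cycle-detection method would serve equally well.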

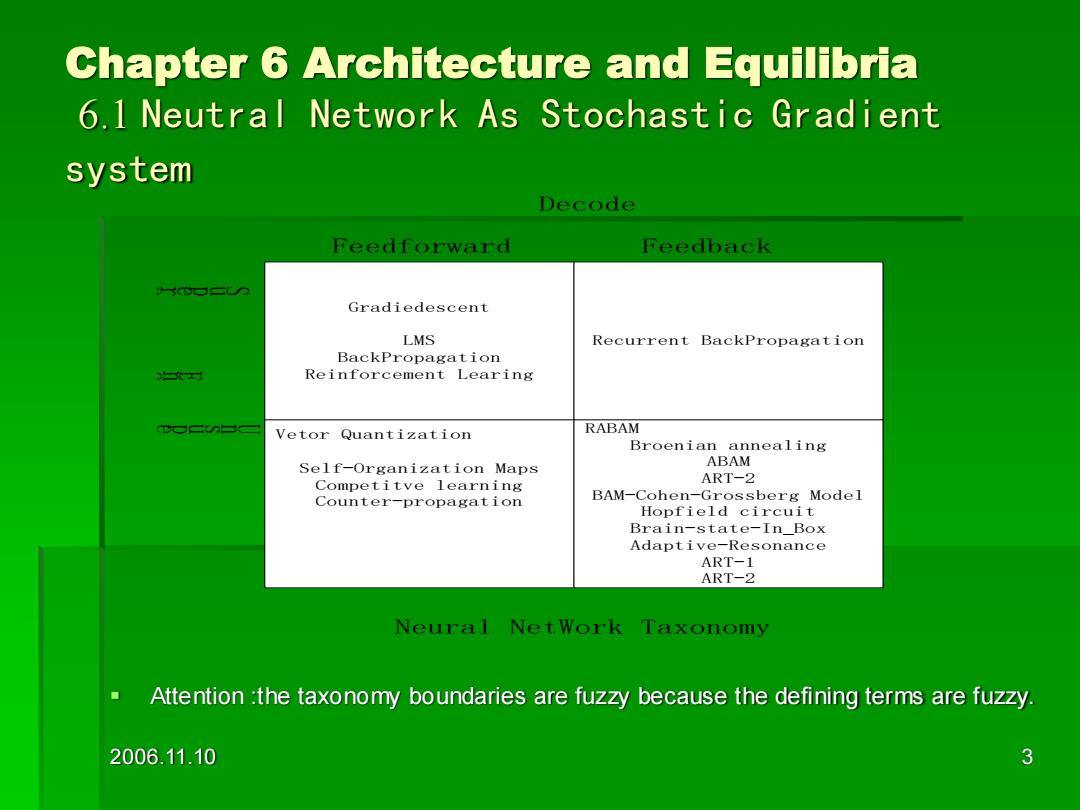

Neural network taxonomy (encoding by rows, decoding by columns):

| Encoding | Feedforward decoding | Feedback decoding |
| --- | --- | --- |
| Supervised | Gradient descent, LMS, backpropagation, reinforcement learning | Recurrent backpropagation |
| Unsupervised | Vector quantization, self-organizing maps, competitive learning, counter-propagation | RABAM, Brownian annealing, ABAM, ART-2, BAM, Cohen-Grossberg model, Hopfield circuit, Brain-State-in-a-Box, adaptive resonance (ART-1, ART-2) |

Note: the taxonomy boundaries are fuzzy because the defining terms are fuzzy.

Three stochastic gradient systems represent the three main categories:

1. Backpropagation (BP)
2. Adaptive vector quantization (AVQ)
3. Random adaptive bidirectional associative memory (RABAM)

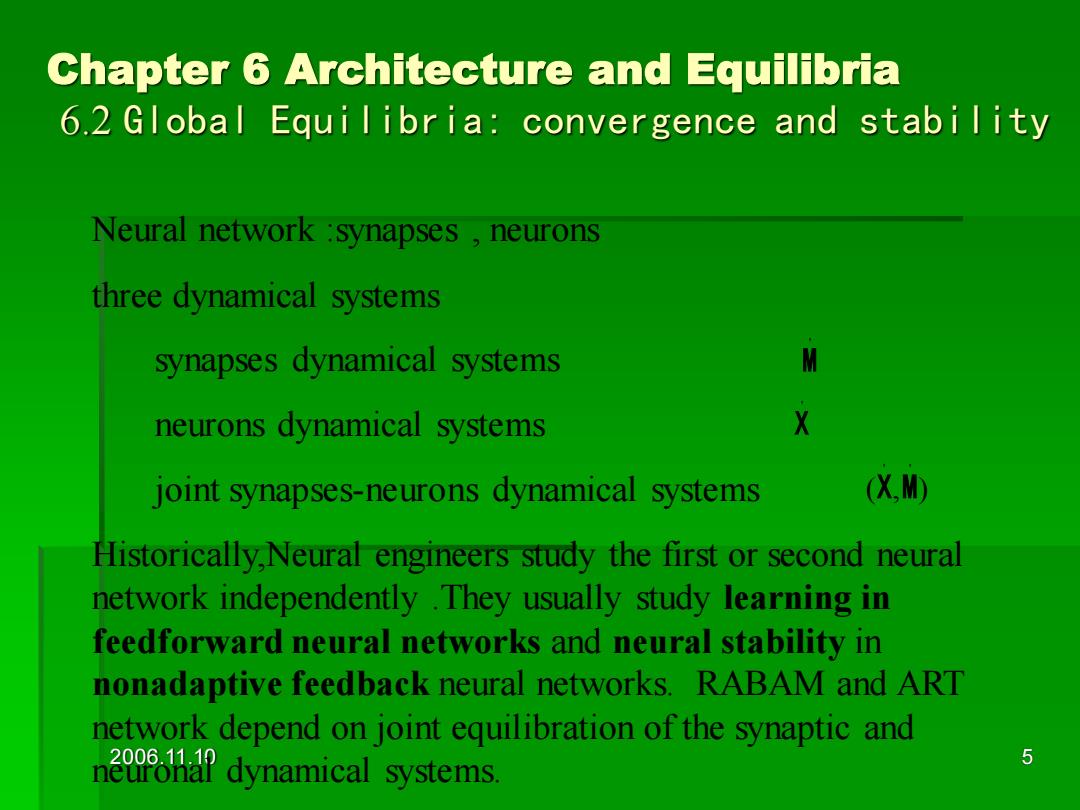

6.2 Global Equilibria: Convergence and Stability

A neural network consists of synapses and neurons, which define three dynamical systems:

1. The synaptic dynamical system $\dot{M}$
2. The neuronal dynamical system $\dot{X}$
3. The joint synaptic-neuronal dynamical system $(\dot{X}, \dot{M})$

Historically, neural engineers have studied the first or second dynamical system independently. They usually study learning in feedforward neural networks and neural stability in nonadaptive feedback neural networks. RABAM and ART networks depend on joint equilibration of the synaptic and neuronal dynamical systems.

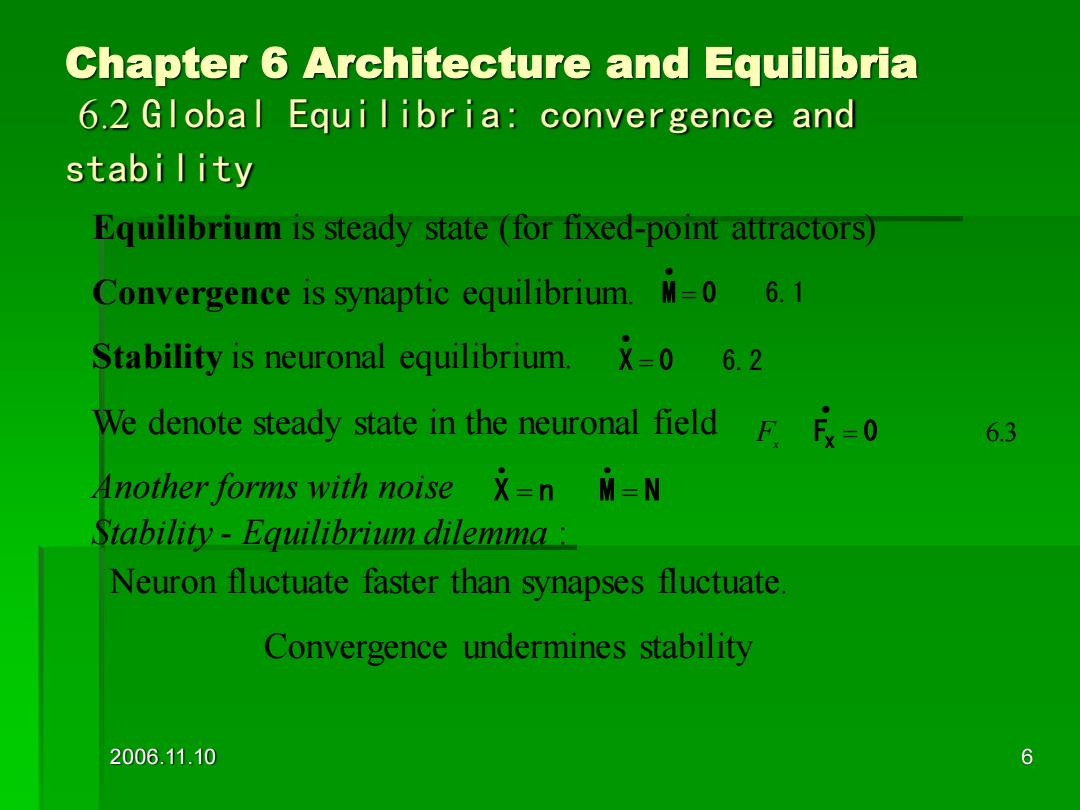

Equilibrium is steady state (for fixed-point attractors).

Convergence is synaptic equilibrium:

$$\dot{M} = 0 \qquad (6\text{-}1)$$

Stability is neuronal equilibrium:

$$\dot{X} = 0 \qquad (6\text{-}2)$$

We denote steady state in the neuronal field $F_X$ by

$$\dot{F}_X = 0 \qquad (6\text{-}3)$$

Alternative forms with noise:

$$\dot{X} = n, \qquad \dot{M} = N$$

Stability-equilibrium dilemma: neurons fluctuate faster than synapses fluctuate. Convergence undermines stability.

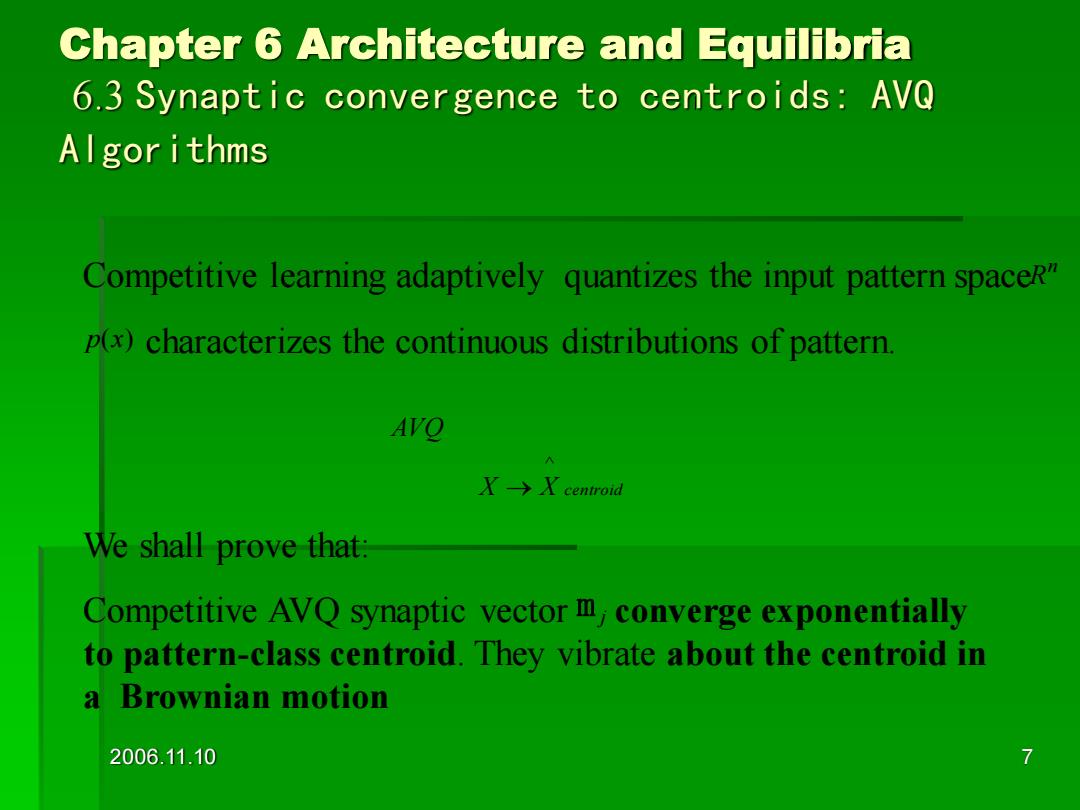

6.3 Synaptic Convergence to Centroids: AVQ Algorithms

Competitive learning adaptively quantizes the input pattern space $R^n$. The density $p(x)$ characterizes the continuous distribution of patterns in $R^n$.

$$X \xrightarrow{\text{AVQ}} \hat{X} \quad \text{(centroid)}$$

We shall prove that competitive AVQ synaptic vectors $m_j$ converge exponentially to pattern-class centroids. They vibrate about the centroids in a Brownian motion.

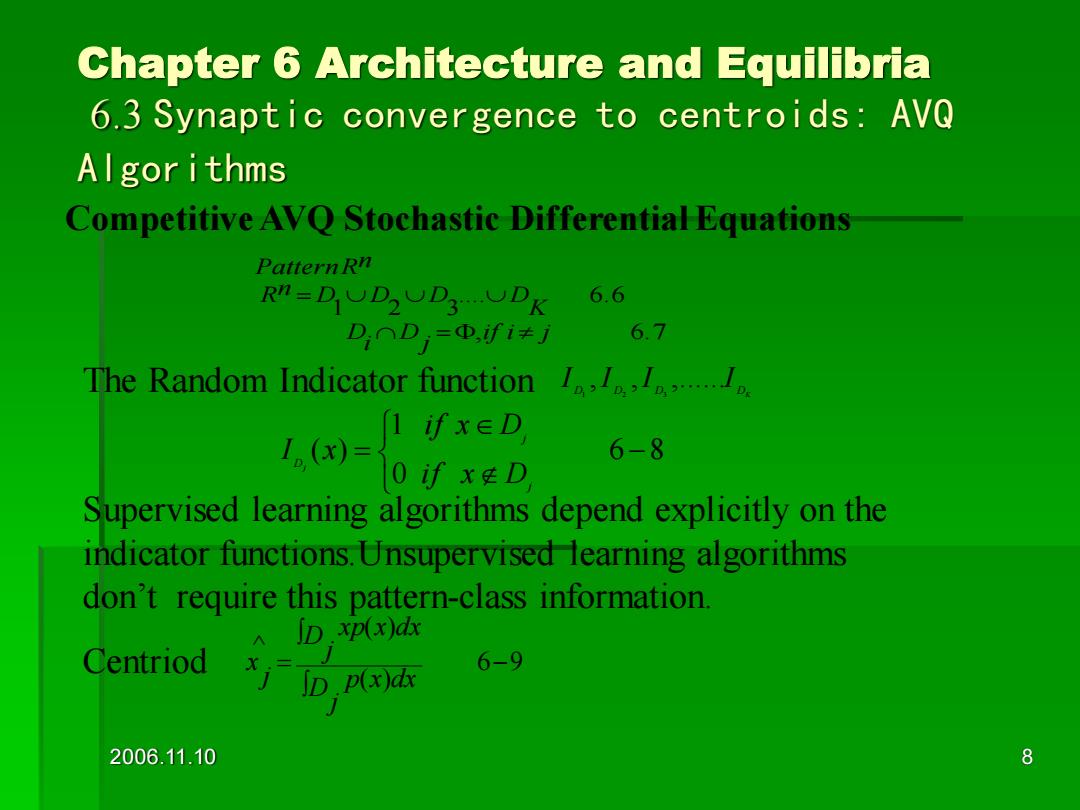

Competitive AVQ Stochastic Differential Equations

The pattern space $R^n$ is partitioned into $K$ pattern classes:

$$R^n = D_1 \cup D_2 \cup D_3 \cup \dots \cup D_K \qquad (6\text{-}6)$$

$$D_i \cap D_j = \emptyset \quad \text{if } i \neq j \qquad (6\text{-}7)$$

The random indicator functions $I_{D_1}, I_{D_2}, I_{D_3}, \dots, I_{D_K}$:

$$I_{D_j}(x) = \begin{cases} 1 & \text{if } x \in D_j \\ 0 & \text{if } x \notin D_j \end{cases} \qquad (6\text{-}8)$$

Supervised learning algorithms depend explicitly on the indicator functions. Unsupervised learning algorithms don't require this pattern-class information.

Centroid of pattern class $D_j$:

$$\hat{x}_j = \frac{\int_{D_j} x\, p(x)\, dx}{\int_{D_j} p(x)\, dx} \qquad (6\text{-}9)$$
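
As a rough numerical illustration of the indicator functions (6-8) and the centroid (6-9), the sketch below estimates centroids from random samples. Everything concrete in it is an assumption chosen for the example: a one-dimensional pattern space, a uniform density on [0, 1), two interval-shaped classes, and the helper names `indicator` and `centroid_estimate`.

```python
import random


def indicator(x, region):
    """I_D(x): 1 if x lies in the half-open interval D = [low, high), else 0."""
    low, high = region
    return 1 if low <= x < high else 0


def centroid_estimate(samples, region):
    """Sample version of (6-9): sum of I_D(x) * x divided by sum of I_D(x)."""
    mass = sum(indicator(x, region) for x in samples)
    if mass == 0:
        raise ValueError("no samples fell in this pattern class")
    weighted = sum(indicator(x, region) * x for x in samples)
    return weighted / mass


# Assumed setup: p(x) uniform on [0, 1), split into two disjoint classes.
rng = random.Random(0)
samples = [rng.random() for _ in range(20000)]
classes = [(0.0, 0.5), (0.5, 1.0)]
estimates = [centroid_estimate(samples, d) for d in classes]
print(estimates)
```

For this assumed uniform density the exact centroids are the interval midpoints, 0.25 and 0.75, so the printed estimates should lie close to those values.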

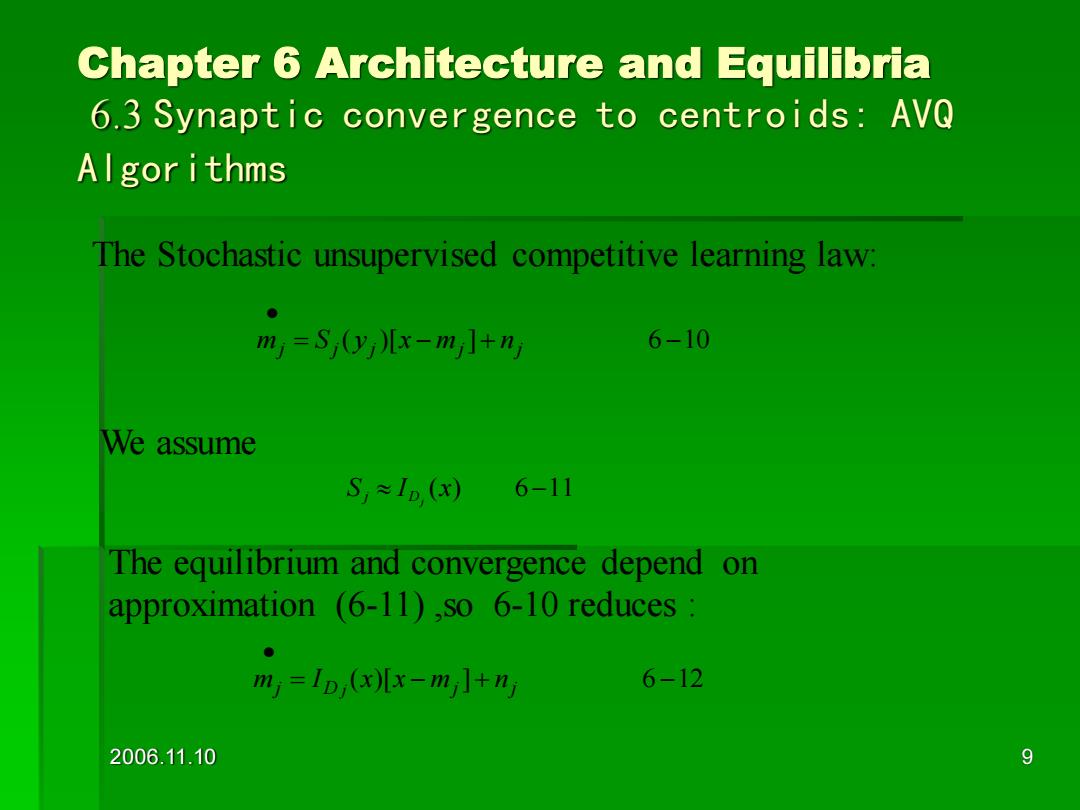

The stochastic unsupervised competitive learning law:

$$\dot{m}_j = S_j(y_j)\,[x - m_j] + n_j \qquad (6\text{-}10)$$

We assume

$$S_j \approx I_{D_j}(x) \qquad (6\text{-}11)$$

The equilibrium and convergence depend on approximation (6-11), so (6-10) reduces to

$$\dot{m}_j = I_{D_j}(x)\,[x - m_j] + n_j \qquad (6\text{-}12)$$
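
As a sketch of why (6-12) points toward centroids, the steps below take the expectation of (6-12) at equilibrium. Two assumptions are added here for illustration and are not stated on the slide: the noise $n_j$ has zero mean, and $m_j$ is treated as constant at equilibrium. The argument also needs $\int_{D_j} p(x)\,dx > 0$.

```latex
\begin{aligned}
0 = E[\dot{m}_j]
  &= \int_{R^n} I_{D_j}(x)\,(x - m_j)\,p(x)\,dx + E[n_j] \\
  &= \int_{D_j} x\,p(x)\,dx \;-\; m_j \int_{D_j} p(x)\,dx \\
\Longrightarrow\quad
m_j &= \frac{\int_{D_j} x\,p(x)\,dx}{\int_{D_j} p(x)\,dx} = \hat{x}_j
\end{aligned}
```

Under these assumptions the equilibrium synaptic vector coincides with the centroid defined in (6-9).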

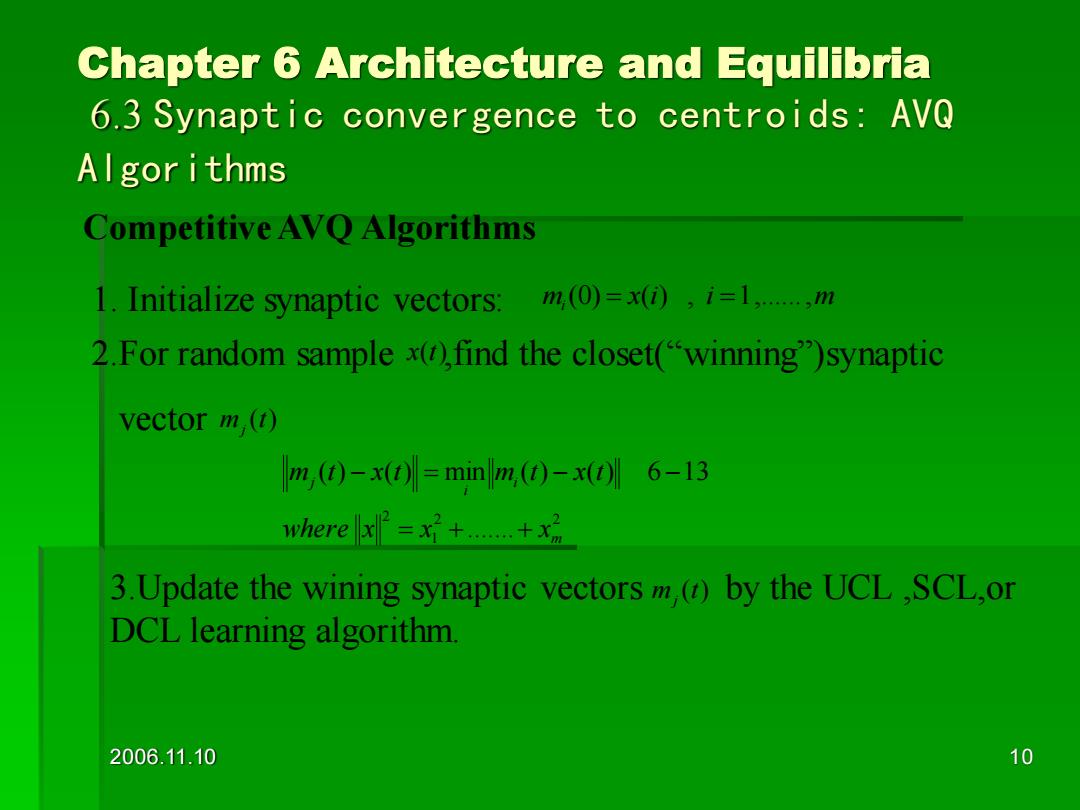

Competitive AVQ Algorithms

1. Initialize the synaptic vectors: $m_i(0) = x(i)$, $i = 1, \dots, m$.
2. For a random sample $x(t)$, find the closest ("winning") synaptic vector $m_j(t)$:

   $$\|m_j(t) - x(t)\| = \min_i \|m_i(t) - x(t)\| \qquad (6\text{-}13)$$

   where $\|x\|^2 = x_1^2 + \dots + x_n^2$.
3. Update the winning synaptic vector $m_j(t)$ by the UCL, SCL, or DCL learning algorithm.
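
The three steps above can be sketched in discrete time as follows. The slide does not give the update rule, so this sketch assumes one for step 3: the unsupervised (UCL) choice $m_j(t+1) = m_j(t) + c_t\,[x(t) - m_j(t)]$ for the winner only, with a linearly decaying rate $c_t$. The function name `avq_ucl`, the rate schedule, and the two-cluster test data are likewise illustrative assumptions.

```python
import random


def squared_distance(u, v):
    """Squared Euclidean norm ||u - v||^2; its minimizer is the same as in (6-13)."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def avq_ucl(samples, m, initial_rate=0.1):
    """Competitive AVQ with an assumed discrete UCL update for the winner."""
    # Step 1: initialize m_i(0) = x(i) from the first m samples.
    synaptic = [list(samples[i]) for i in range(m)]
    total = len(samples)
    for t, x in enumerate(samples):
        # Step 2: find the winning (closest) synaptic vector m_j(t).
        j = min(range(m), key=lambda i: squared_distance(synaptic[i], x))
        # Step 3: move only the winner toward x(t); the rate decays to zero
        # (assumed schedule c_t = initial_rate * (1 - t / total)).
        rate = initial_rate * (1.0 - t / total)
        synaptic[j] = [mj + rate * (xk - mj) for mj, xk in zip(synaptic[j], x)]
    return synaptic


# Assumed test data: samples alternate between two Gaussian clusters in R^2.
rng = random.Random(1)
centers = [(0.0, 0.0), (5.0, 5.0)]
samples = [
    tuple(rng.gauss(c, 0.5) for c in centers[t % 2])
    for t in range(4000)
]
vectors = avq_ucl(samples, m=2)
print(vectors)
```

With well-separated clusters like these, each synaptic vector should settle near one cluster mean, which is the centroid behaviour this section describes. The SCL and DCL variants would change only step 3.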